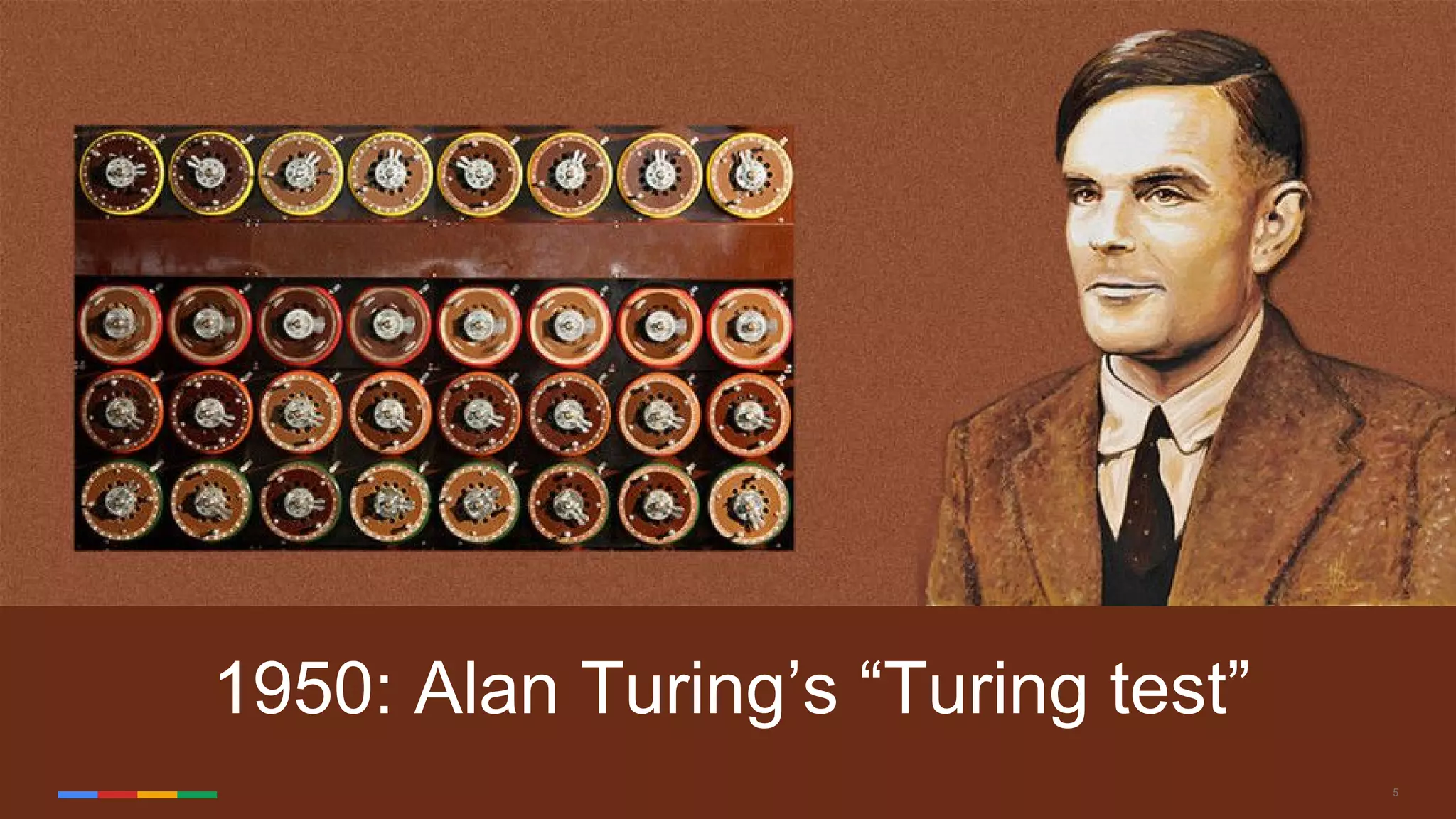

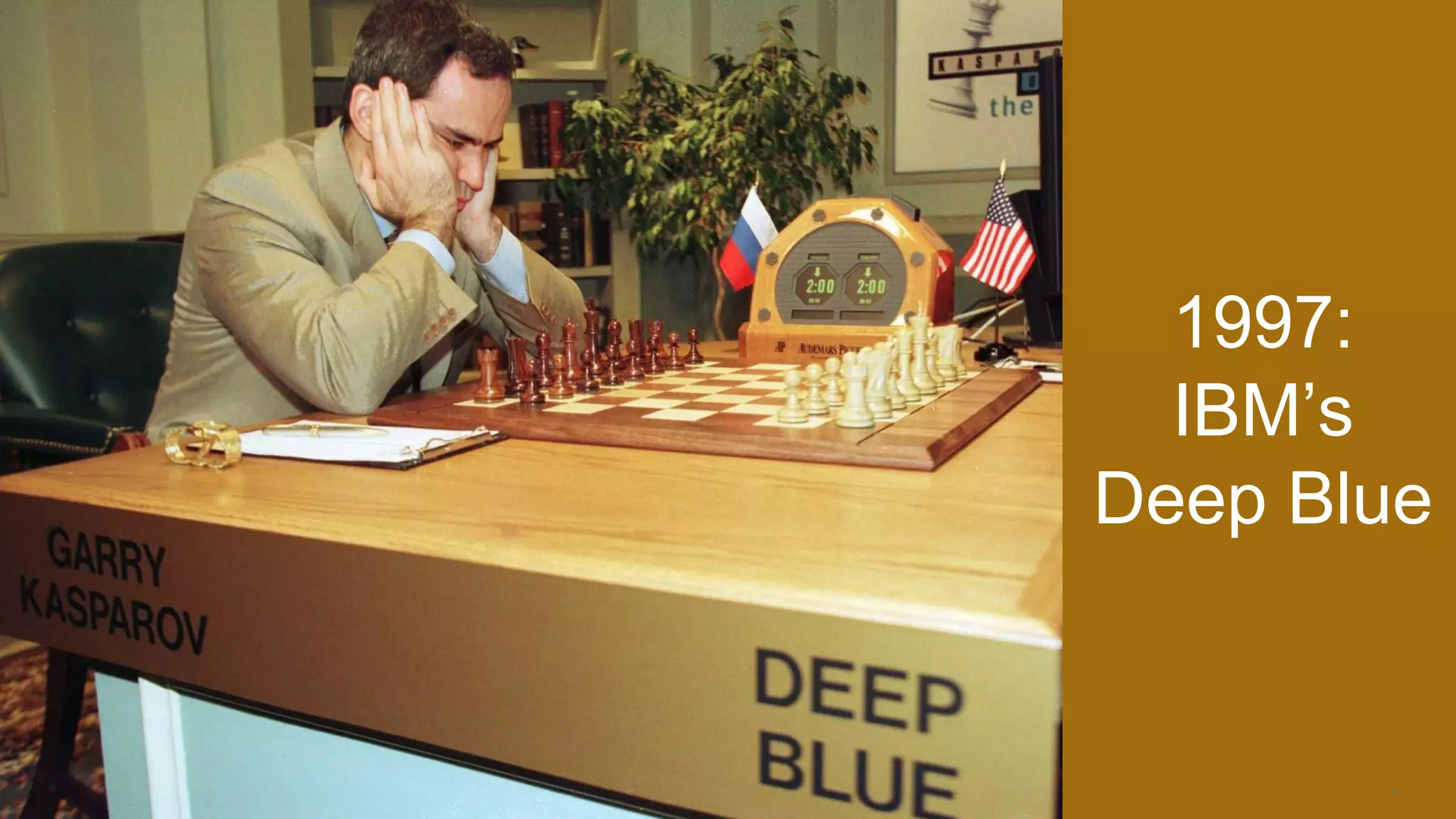

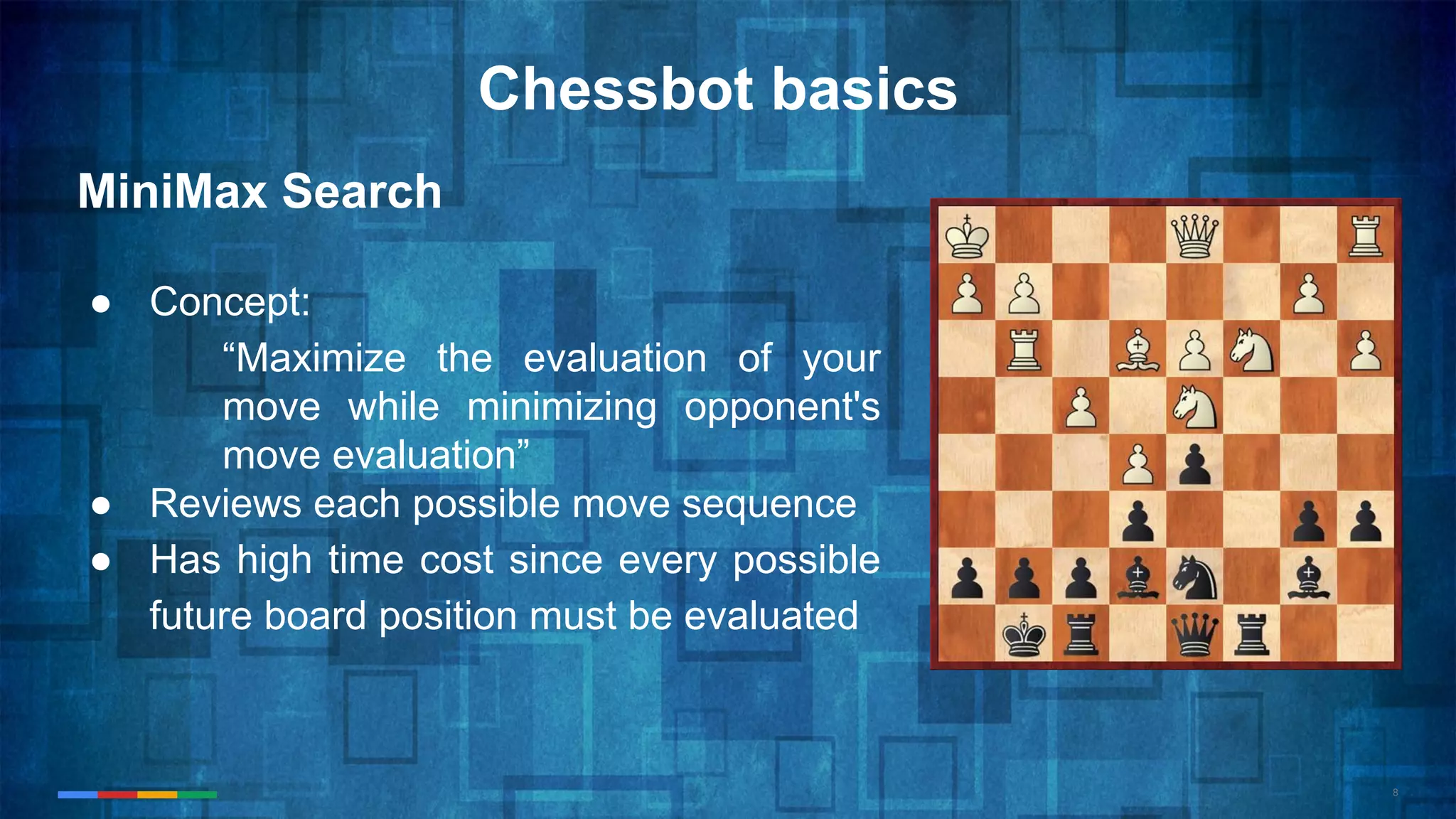

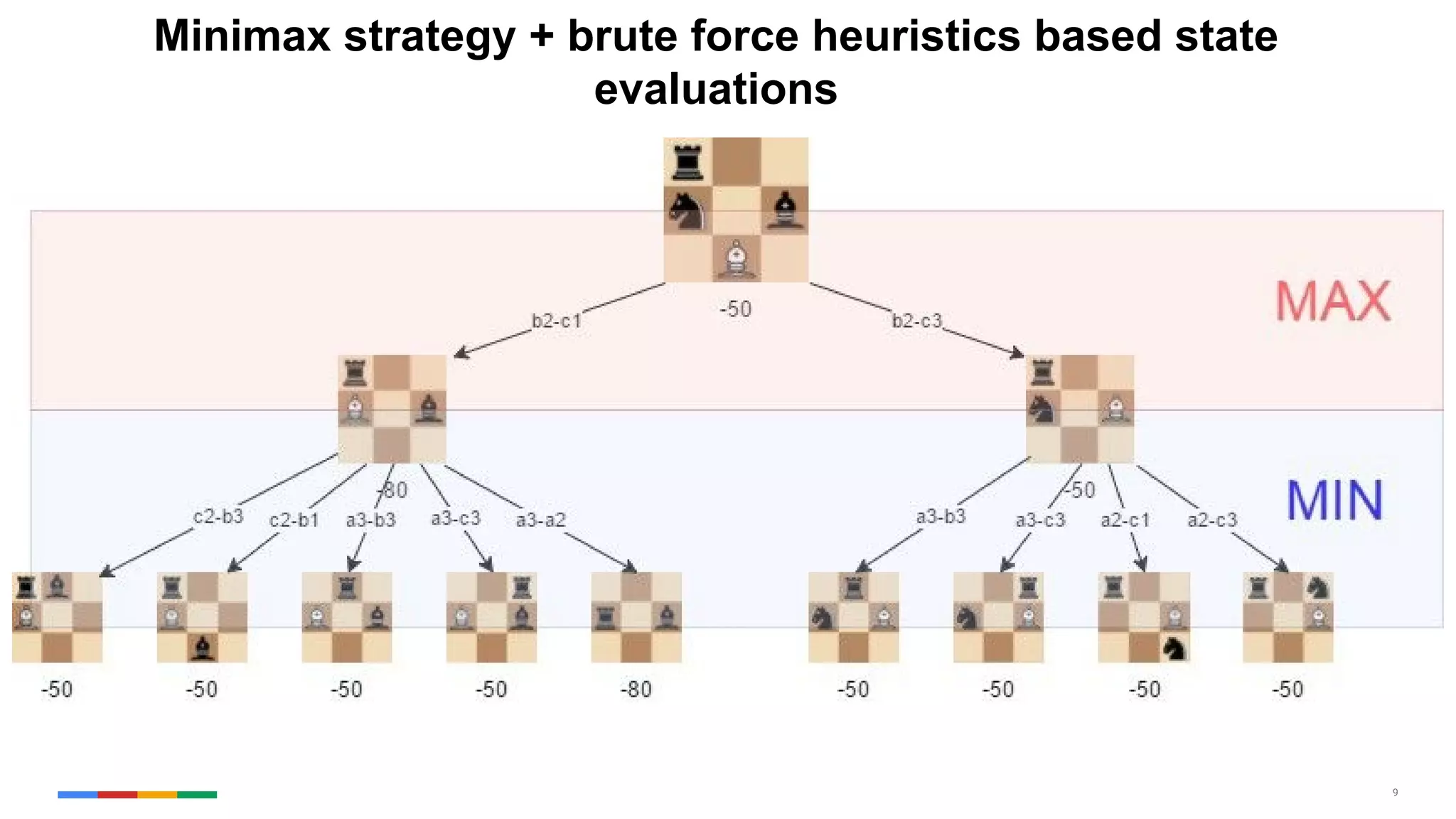

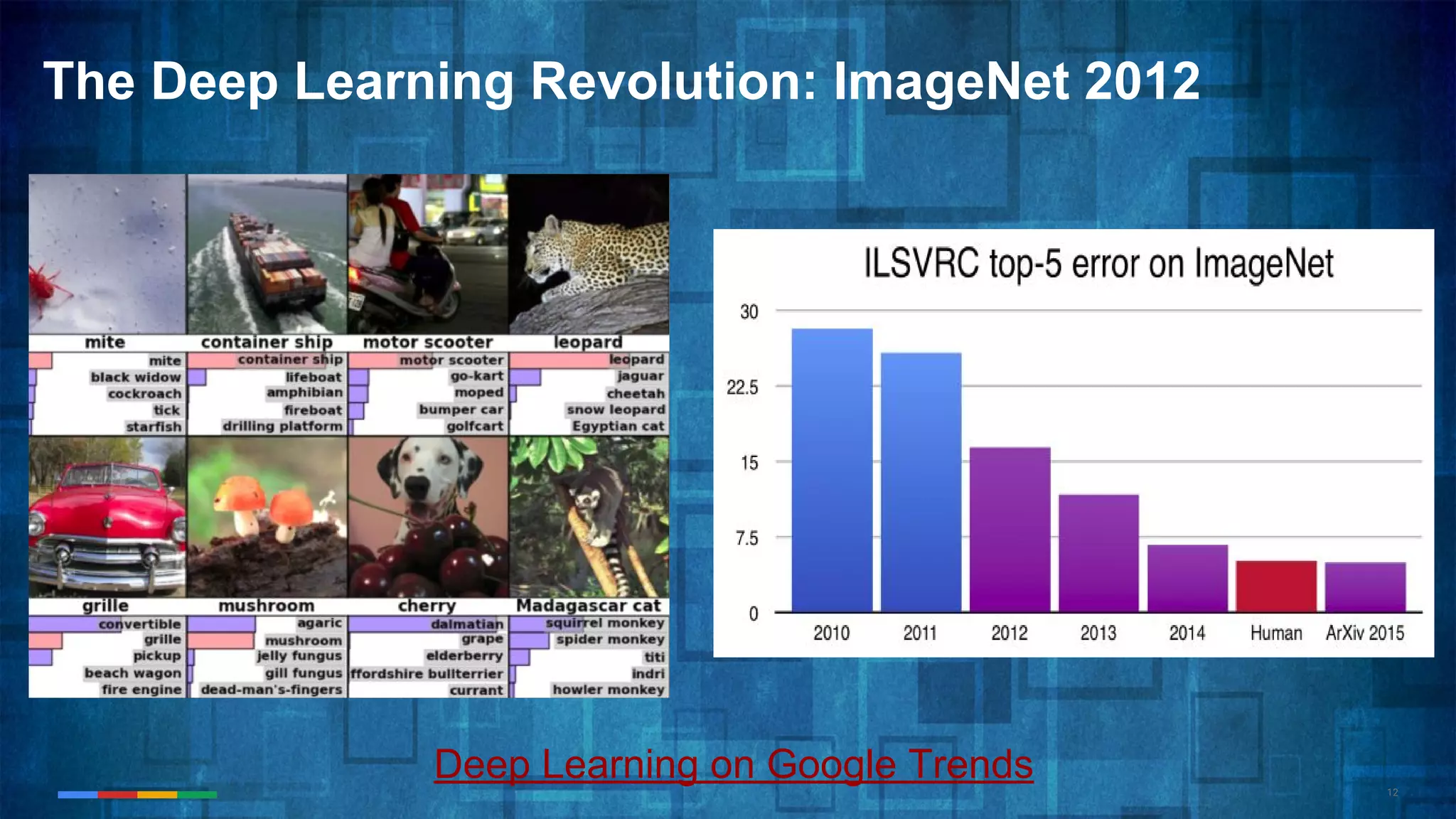

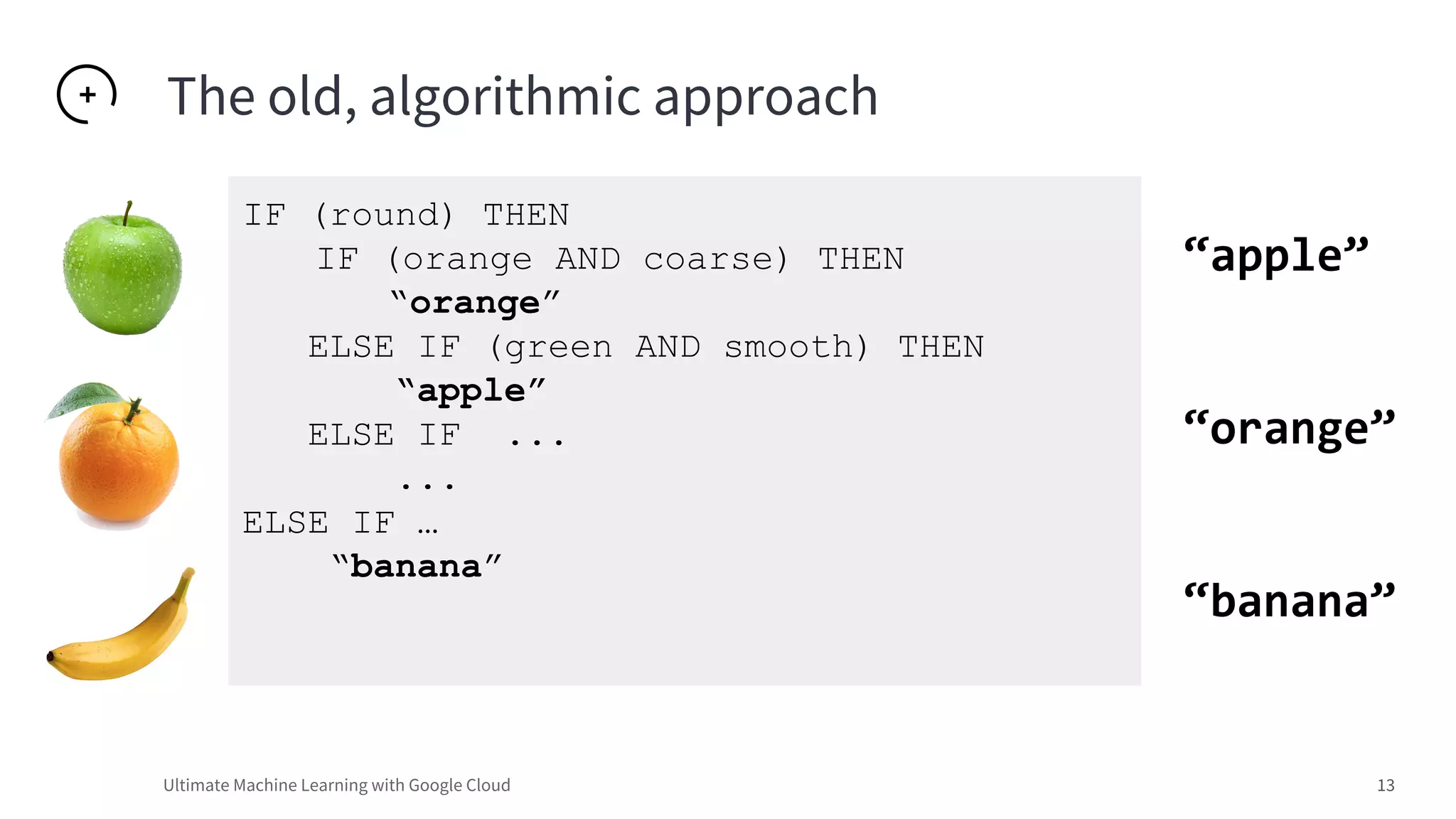

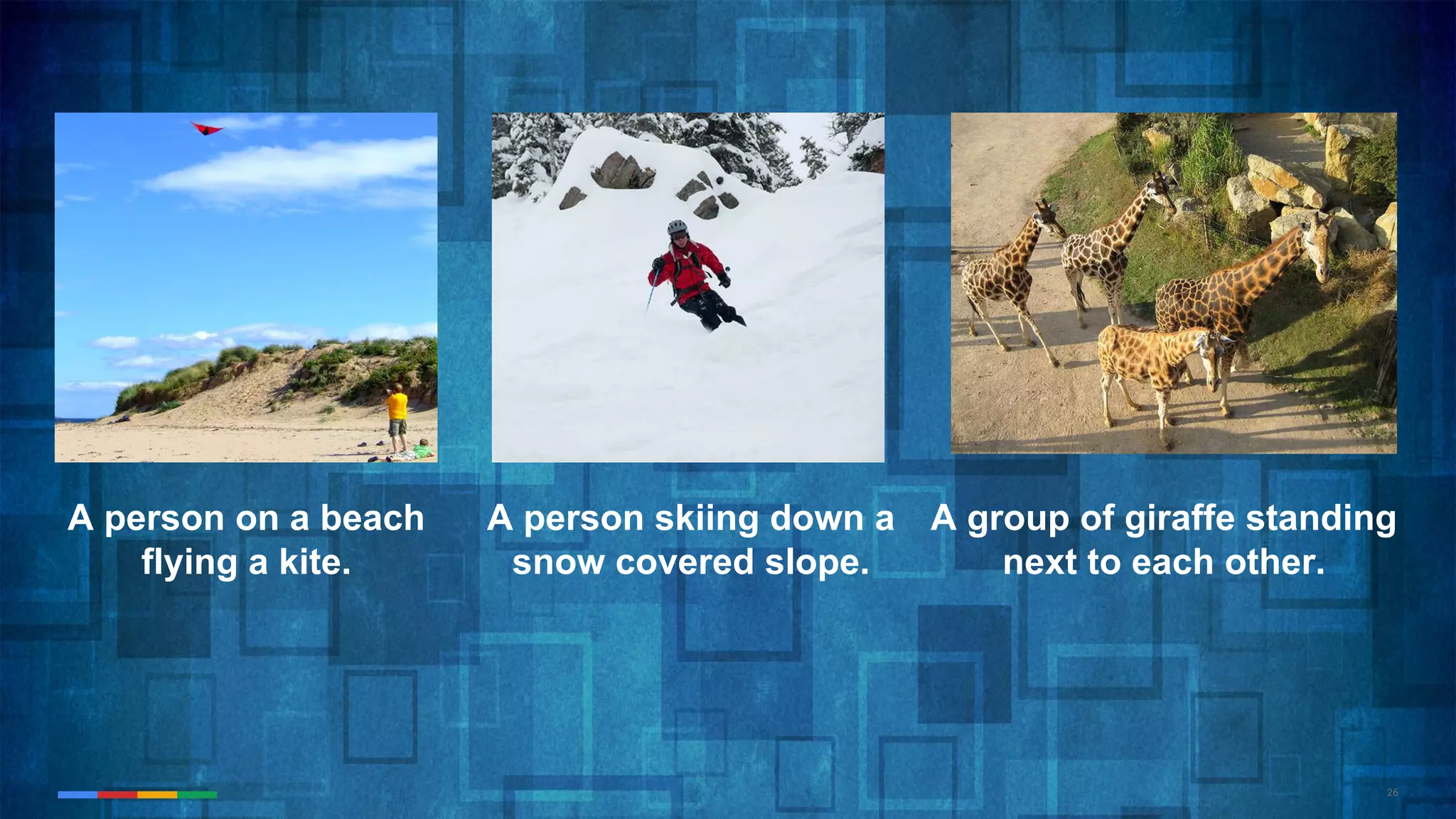

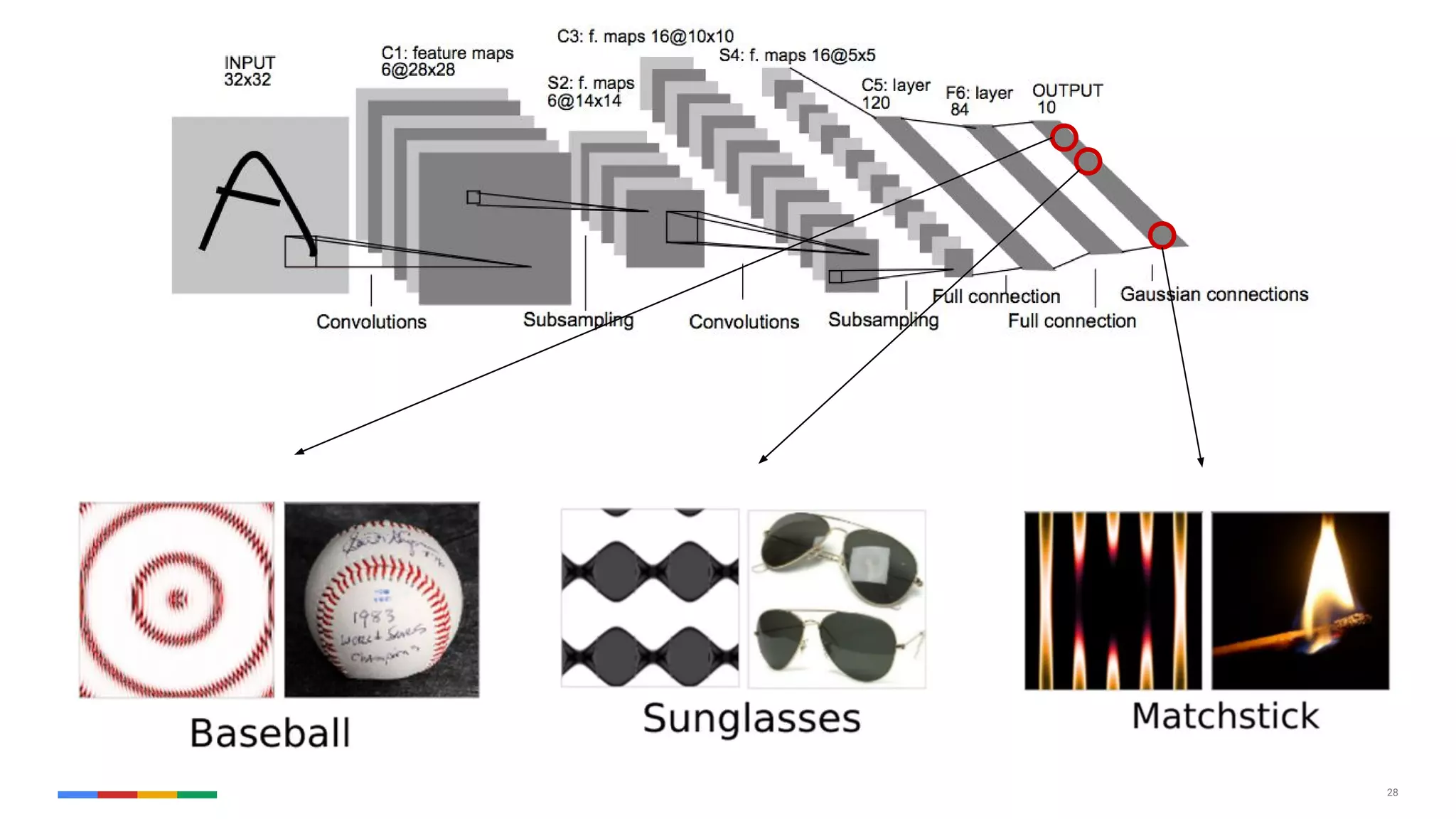

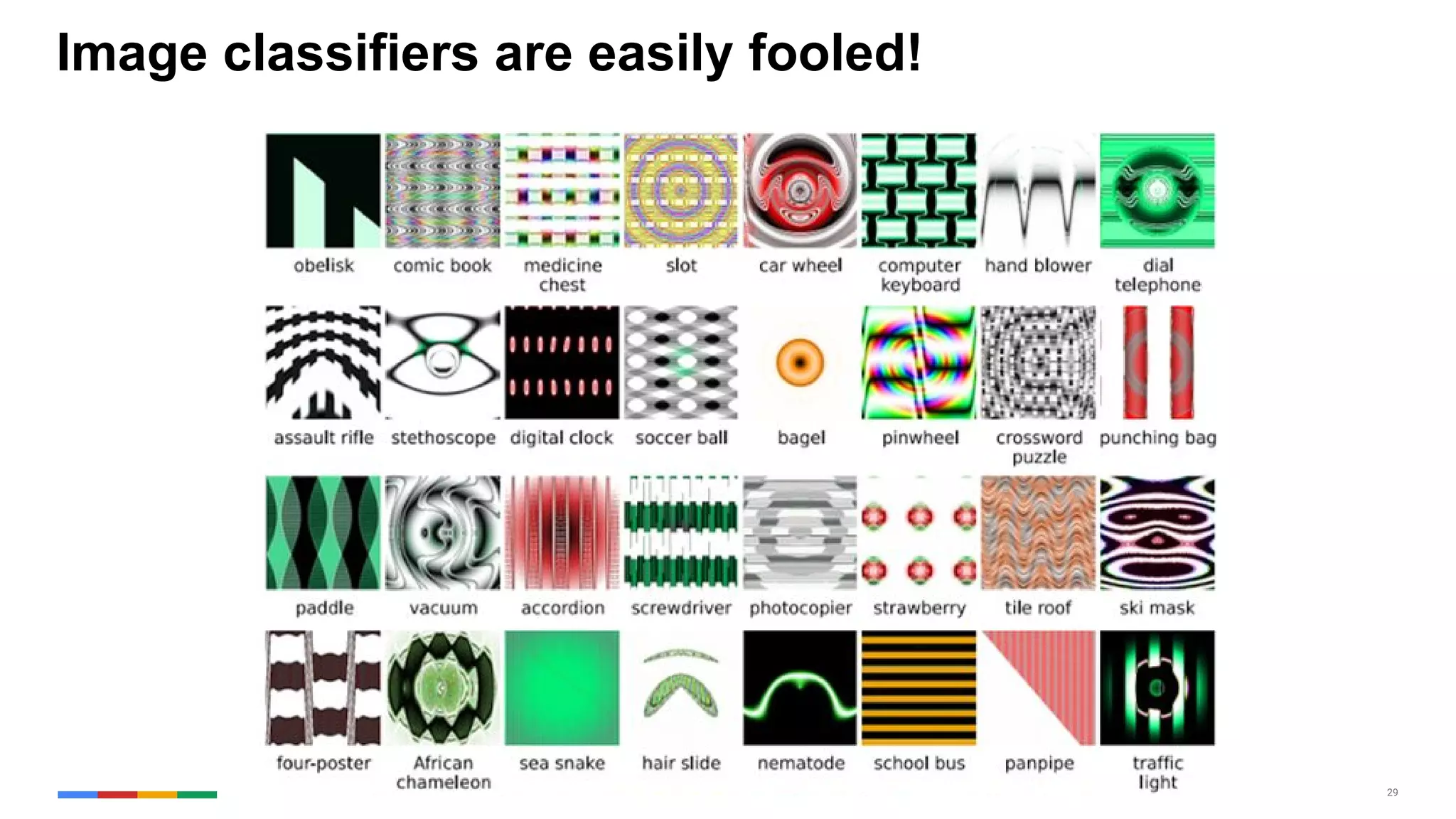

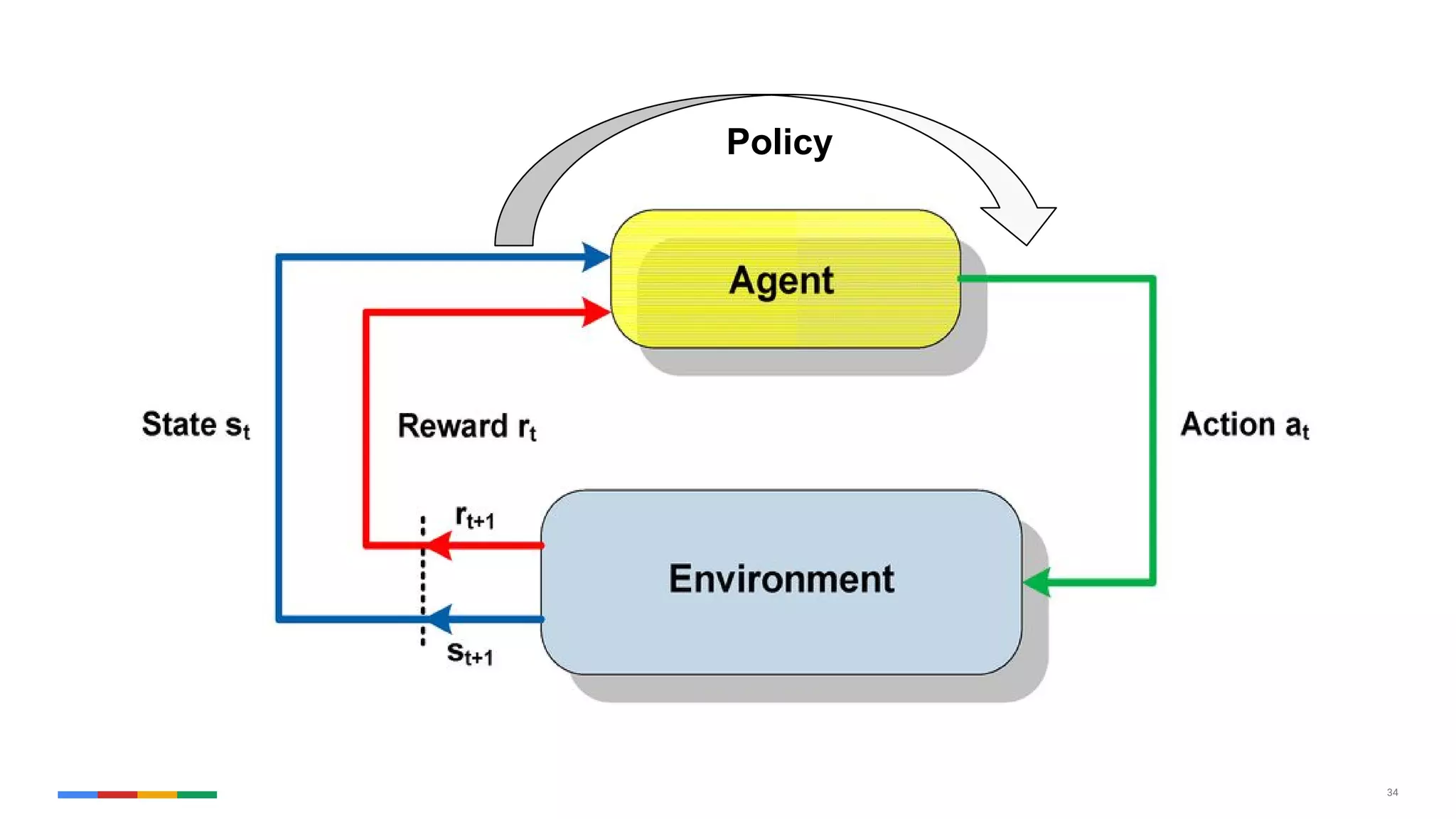

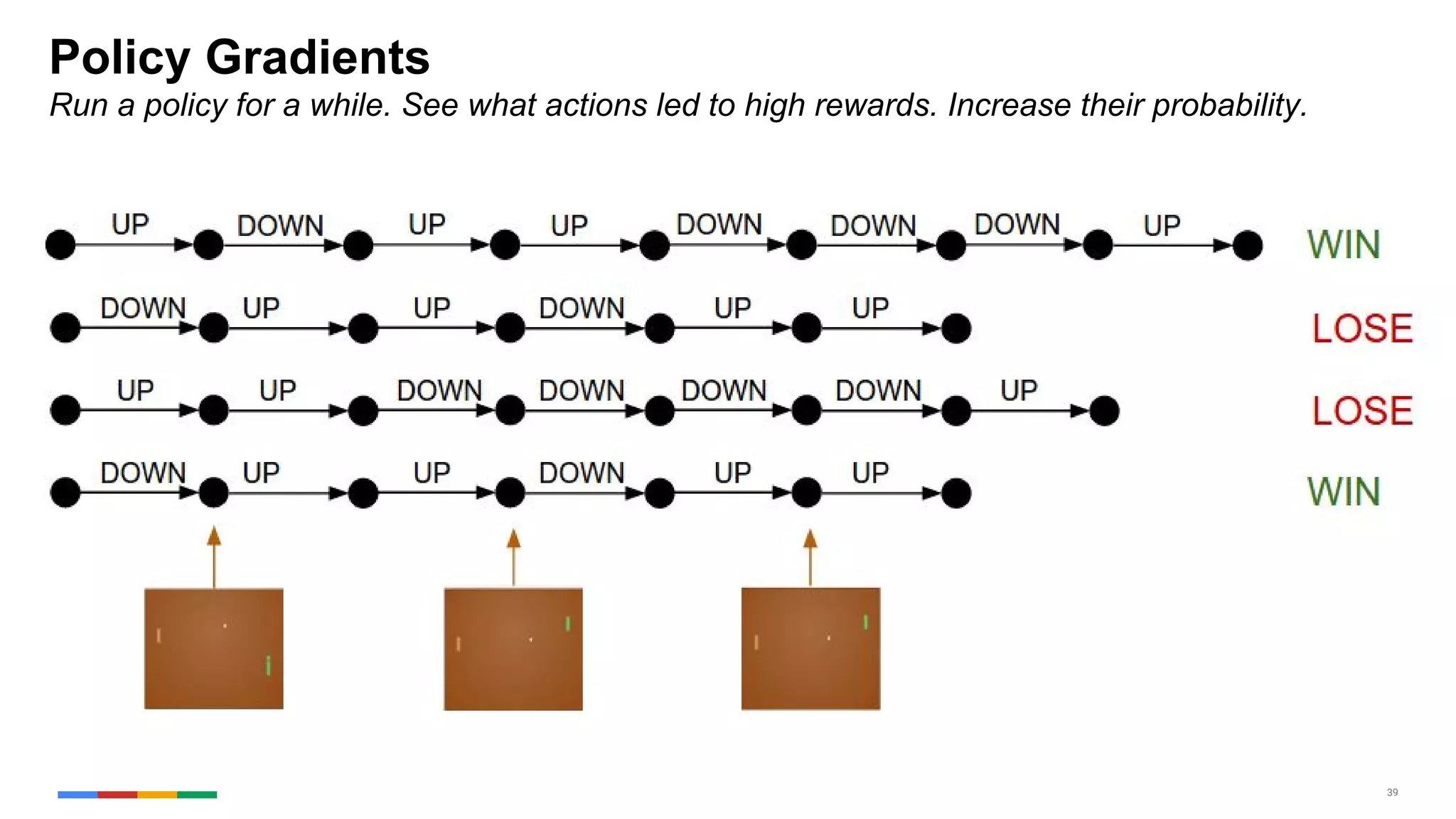

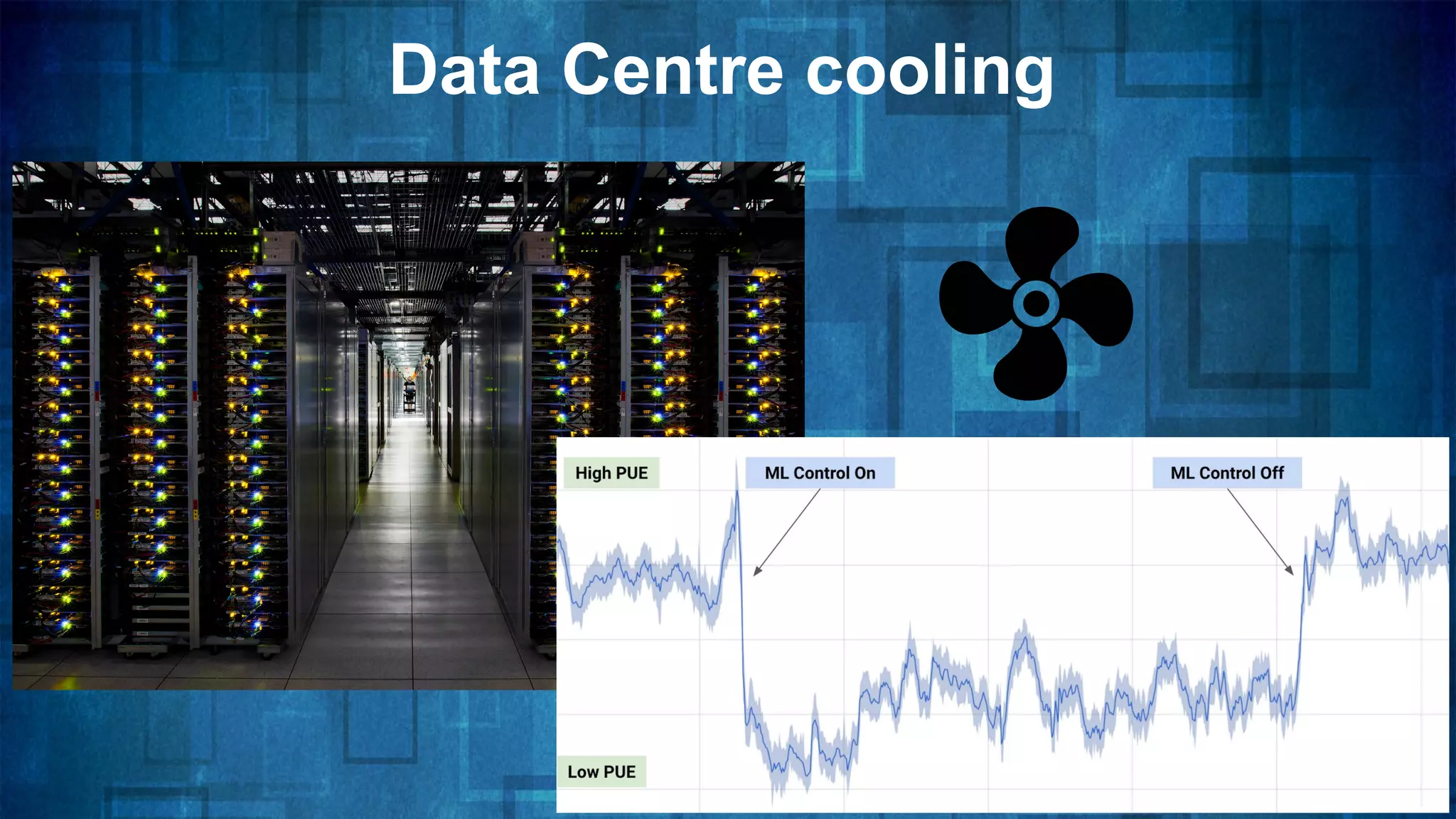

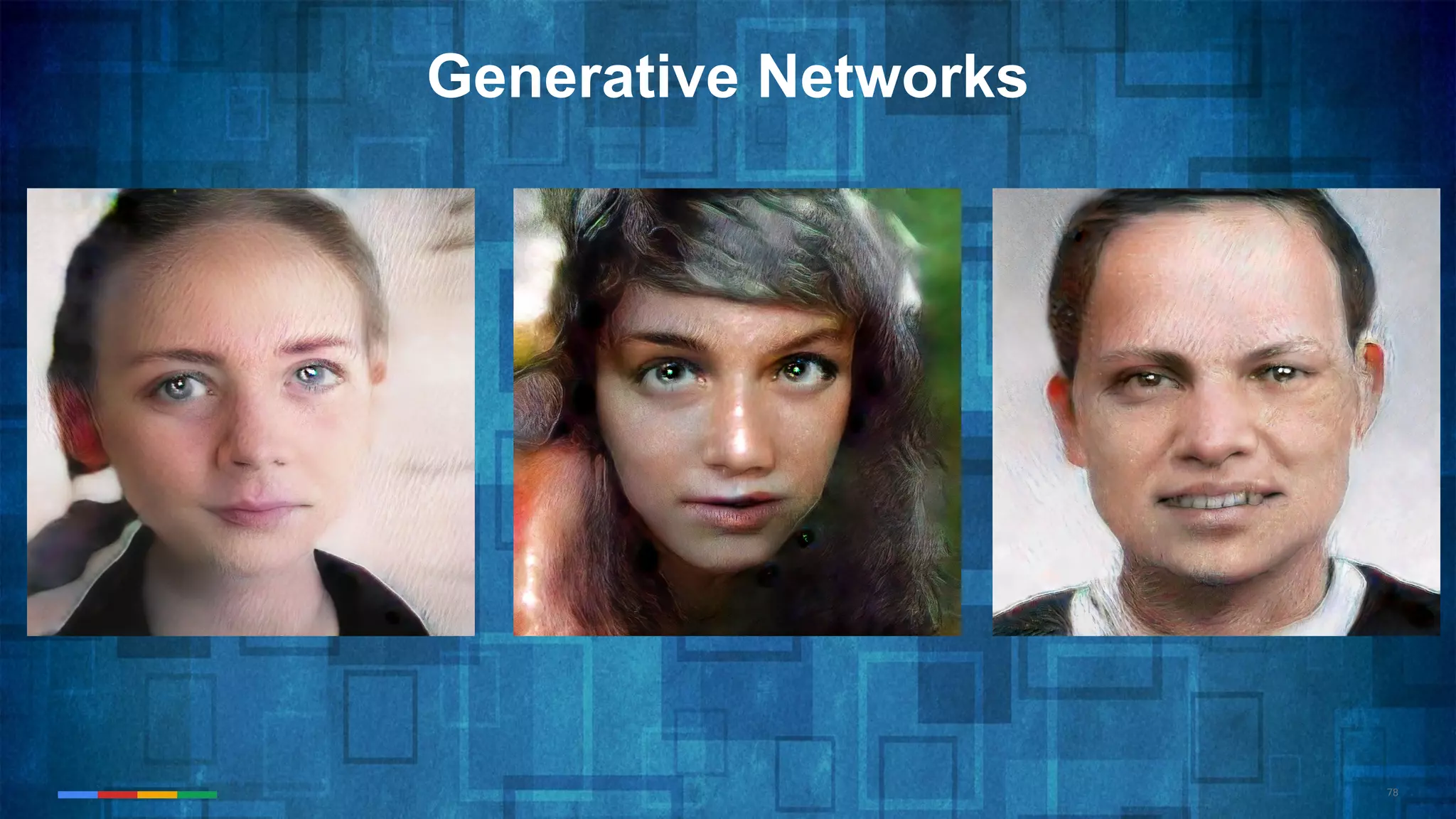

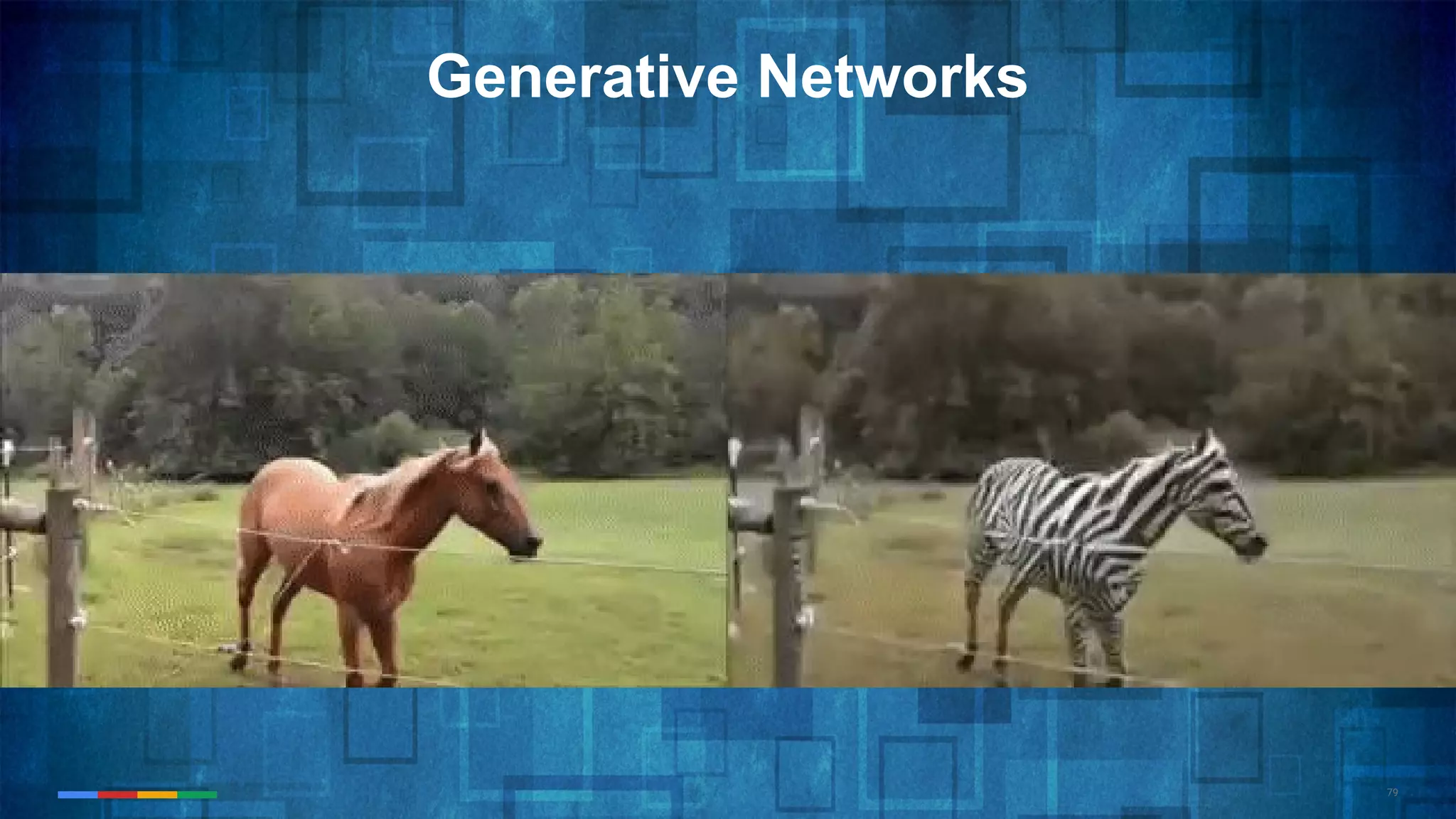

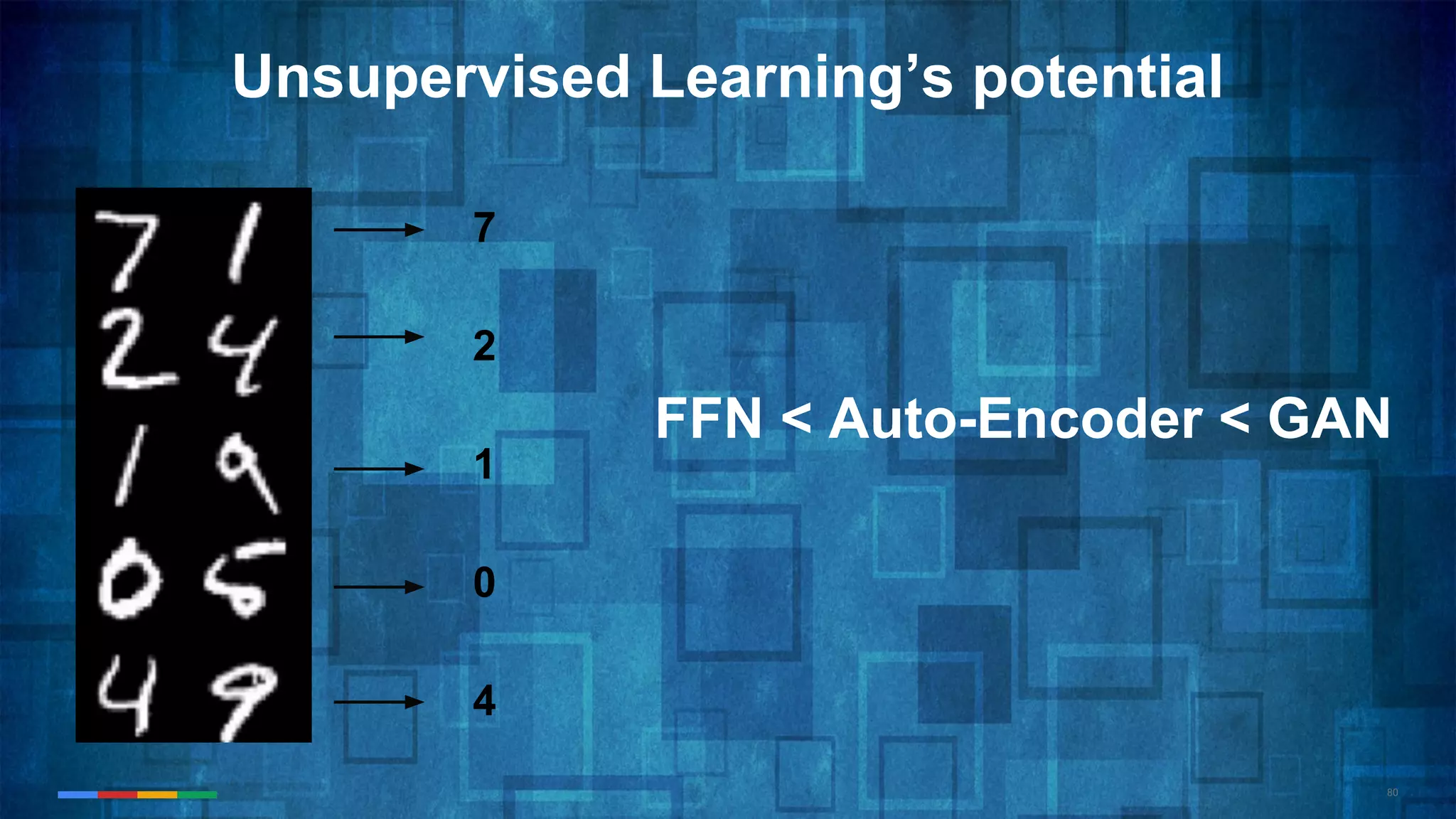

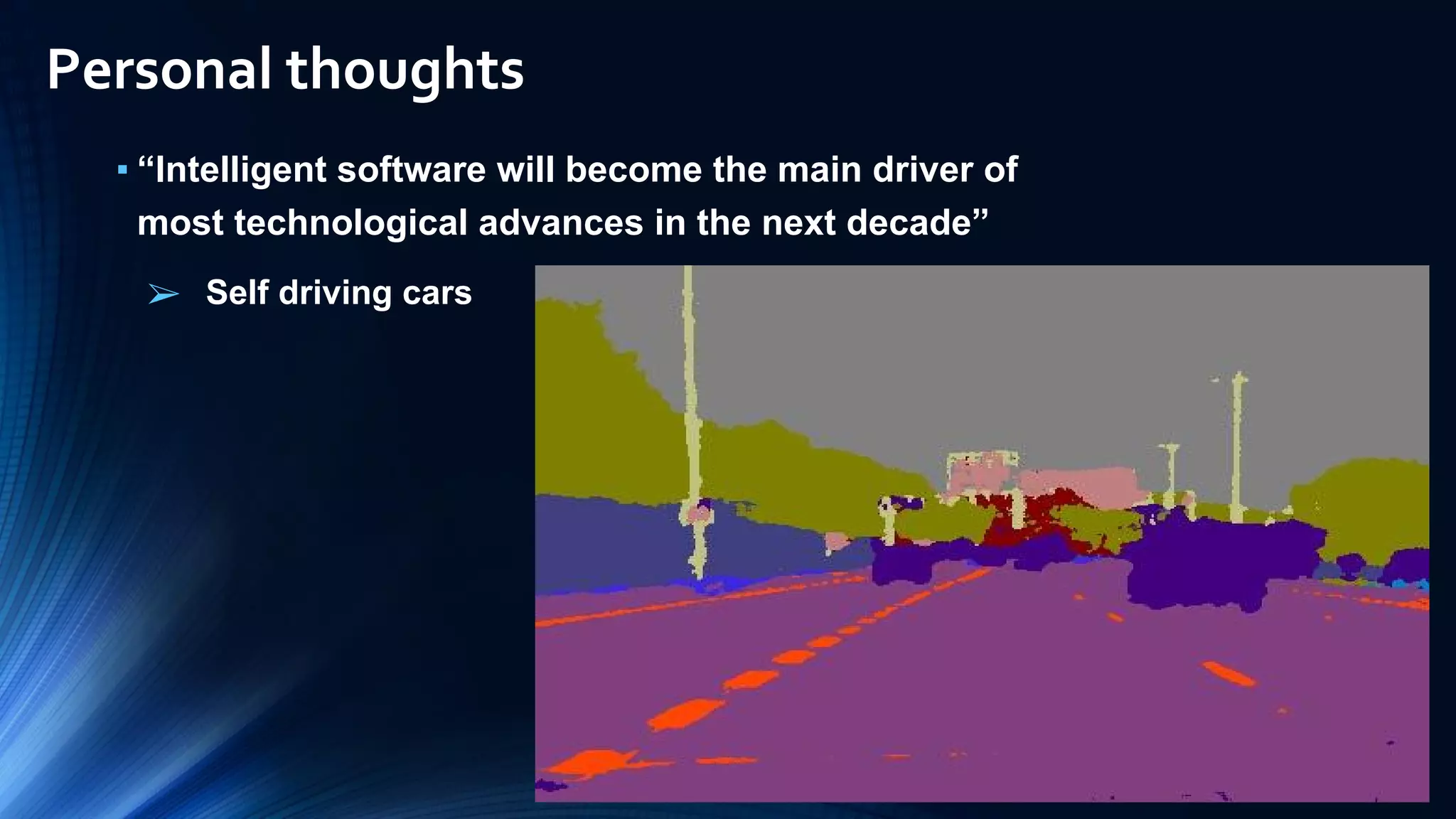

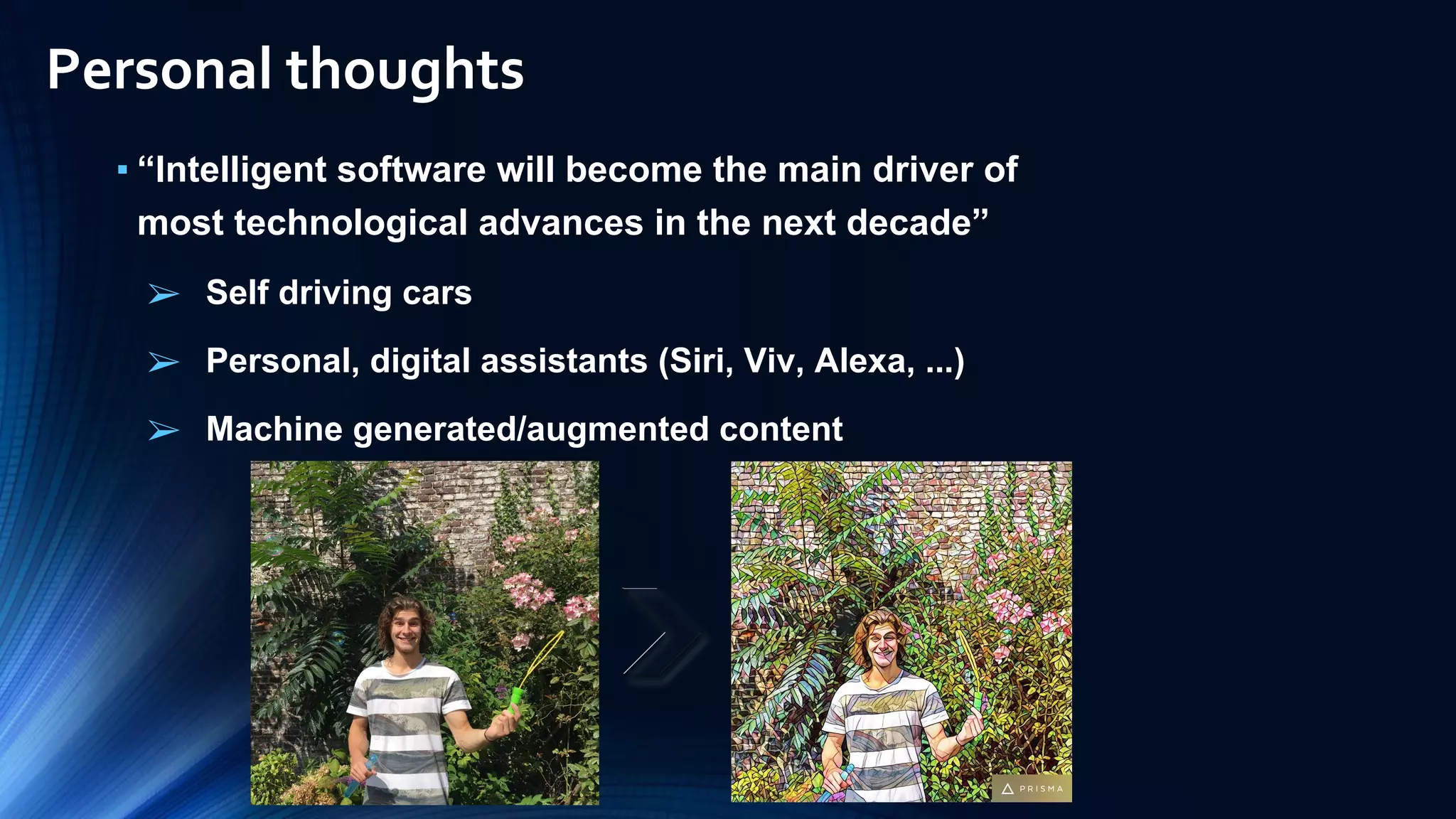

The document provides an overview of the history and evolution of artificial intelligence (AI) and reinforcement learning, highlighting key milestones such as Alan Turing's Turing test and IBM's Deep Blue. It discusses the practical applications of machine learning and the limitations of current AI systems, and it explores promising areas of research. The author predicts that intelligent software will drive significant technological advancements in the next decade, affecting areas such as self-driving cars and virtual reality.