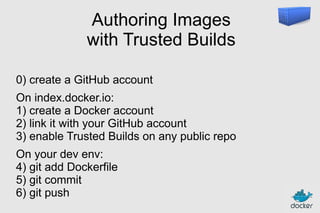

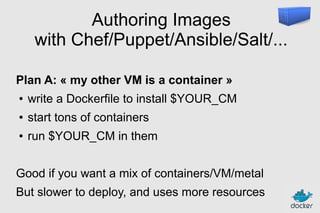

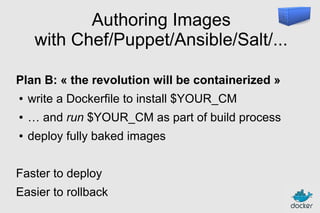

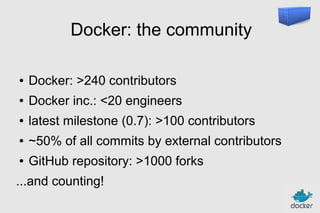

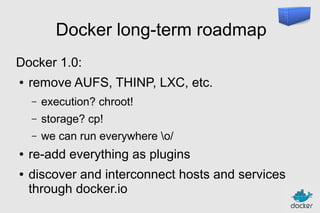

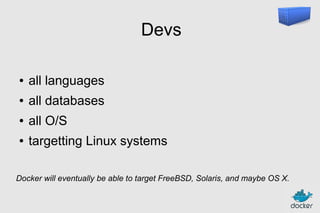

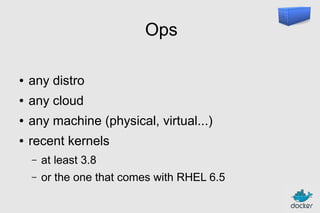

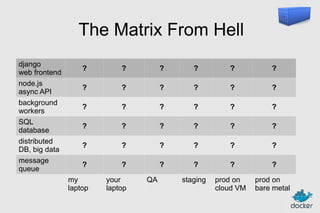

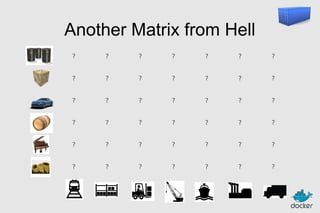

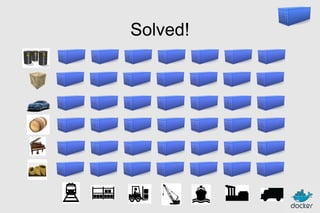

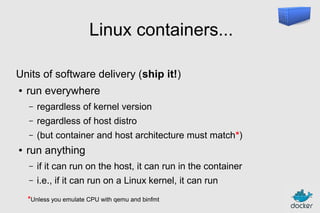

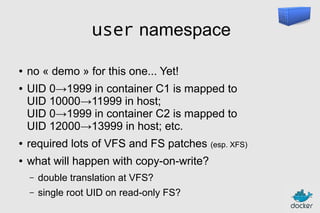

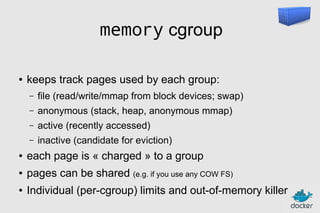

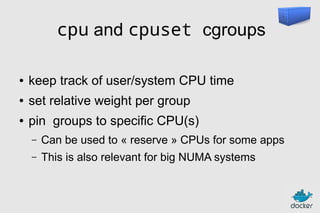

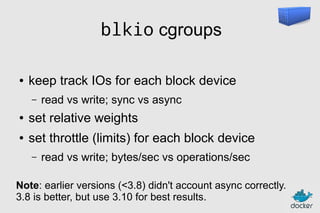

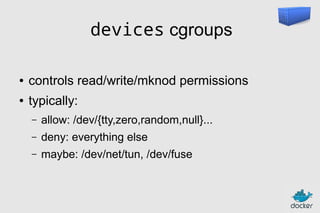

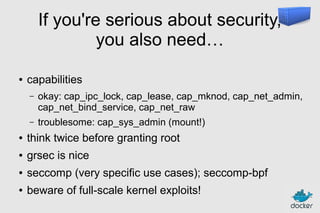

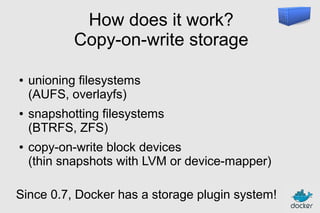

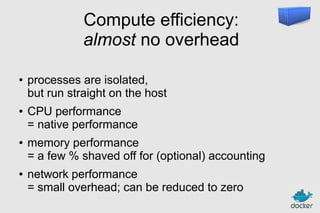

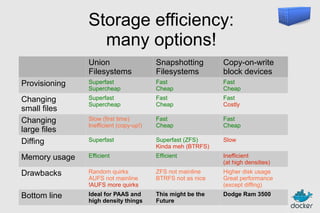

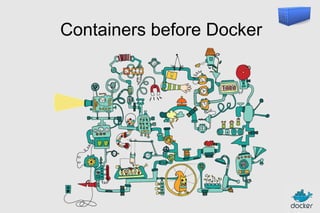

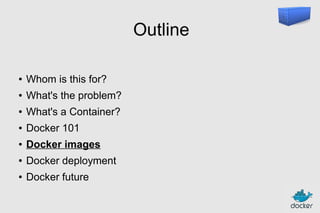

This presentation introduces Docker and container technology and outlines the benefits for developers, operations teams, and executives. It explains key concepts such as containers, Docker images, and deployment techniques, along with the isolation that namespaces and cgroups provide. It also covers Docker's evolution, its advantages over traditional methods, its growing community, and the roadmap for future development.

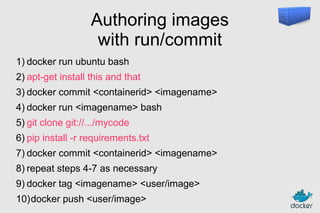

![Authoring images with a Dockerfile](https://image.slidesharecdn.com/2013-12-03-docker101-131203121046-phpapp01/85/Introduction-to-Docker-as-presented-at-December-2013-Global-Hackathon-49-320.jpg)

Authoring images with a Dockerfile

    FROM ubuntu

    # Install the build dependencies (package lists are elided on the slide)
    RUN apt-get -y update
    RUN apt-get install -y g++
    RUN apt-get install -y erlang-dev erlang-manpages erlang-base-hipe ...
    RUN apt-get install -y libmozjs185-dev libicu-dev libtool ...
    RUN apt-get install -y make wget

    # Download and build CouchDB; -O- sends the download to stdout for tar
    RUN wget -O- http://.../apache-couchdb-1.3.1.tar.gz | tar -C /tmp -zxf-
    RUN cd /tmp/apache-couchdb-* && ./configure && make install

    # Make CouchDB listen on port 8101 on all interfaces
    RUN printf "[httpd]\nport = 8101\nbind_address = 0.0.0.0" > /usr/local/etc/couchdb/local.d/docker.ini

    EXPOSE 8101
    CMD ["/usr/local/bin/couchdb"]

Build the image with:

    docker build -t jpetazzo/couchdb .
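The `RUN printf` step in the Dockerfile writes a small CouchDB configuration file. As a minimal sketch of what that step produces, the snippet below runs the same `printf` outside Docker. It assumes the separators in the format string are `\n` newline escapes, and it writes to a throwaway temporary file (the variable `ini` is a stand-in chosen for illustration, not the real path in the image).

```shell
#!/bin/sh
# Sketch only: reproduce the config-writing step without Docker.
# "ini" is a hypothetical temp file standing in for
# /usr/local/etc/couchdb/local.d/docker.ini inside the image.
ini="$(mktemp)"

# Same format string as the Dockerfile step; printf expands each \n
# into a newline, so the file ends up with three lines.
printf "[httpd]\nport = 8101\nbind_address = 0.0.0.0" > "$ini"

# Print the generated file; the extra echo supplies the final newline
# that the format string itself does not end with.
cat "$ini"
echo
```

The port written here is the same one the Dockerfile declares with `EXPOSE 8101`. Once the image is built, a command along the lines of `docker run -d -p 8101:8101 jpetazzo/couchdb` would be one way to publish that port on the host.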