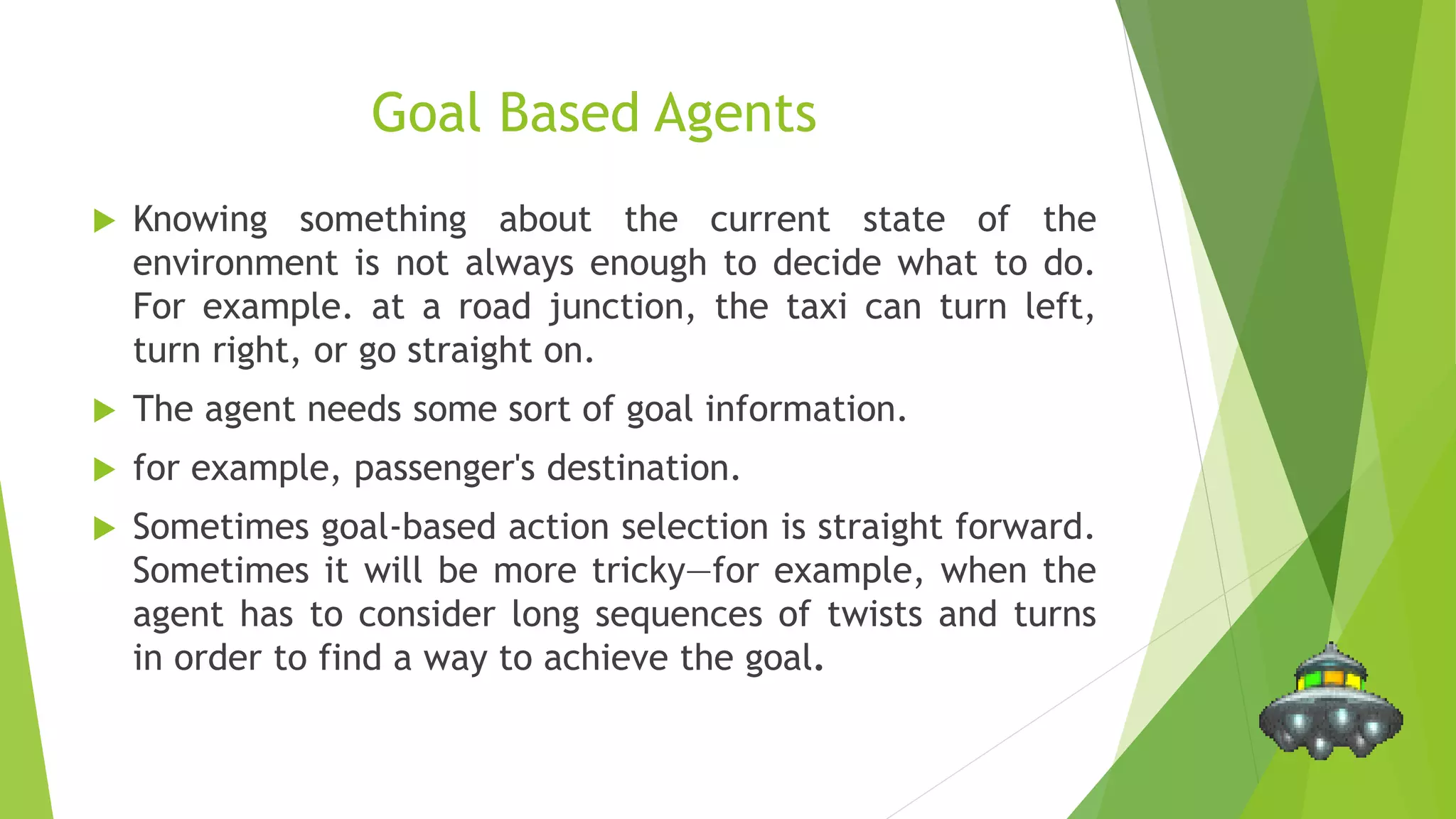

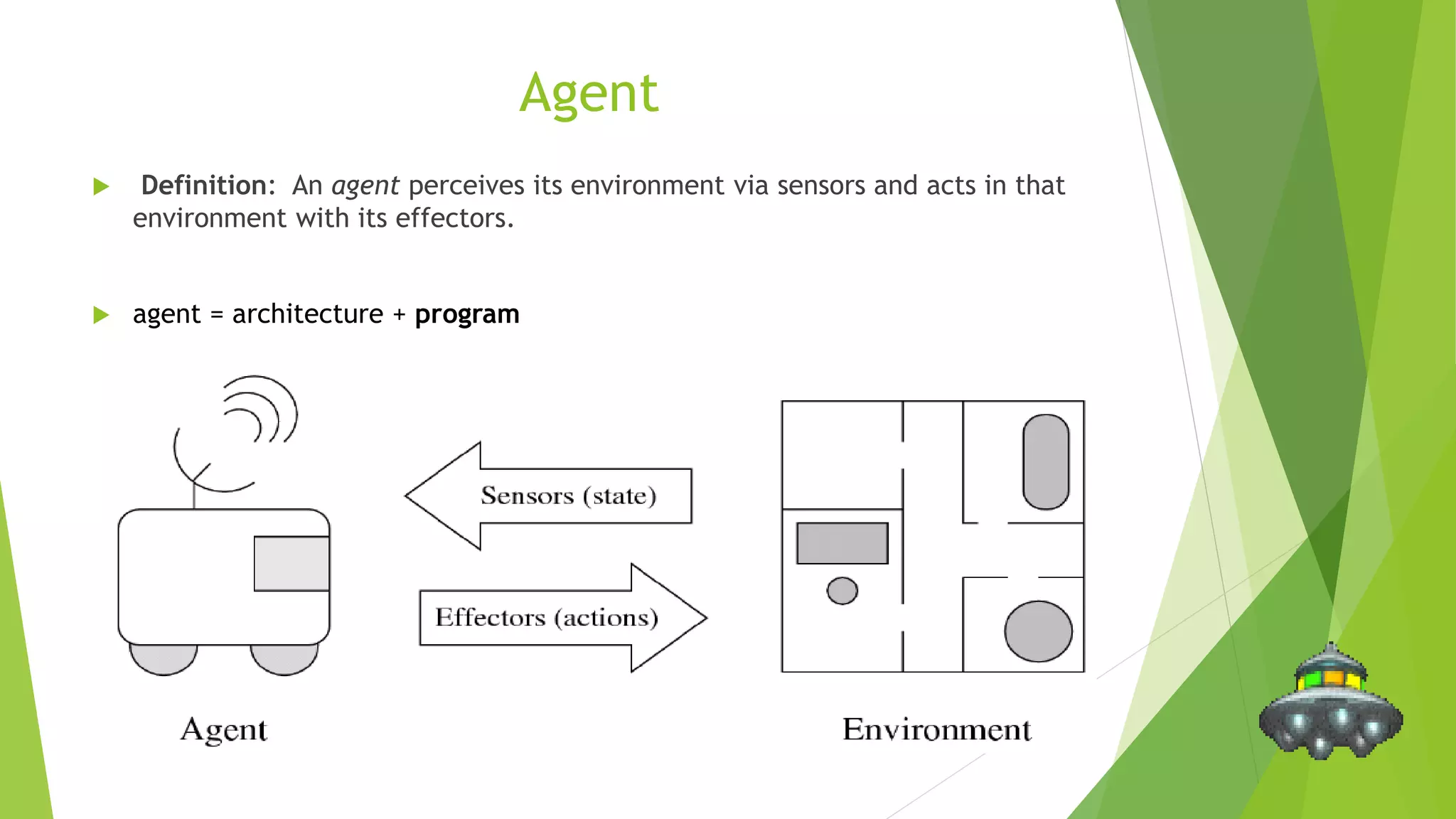

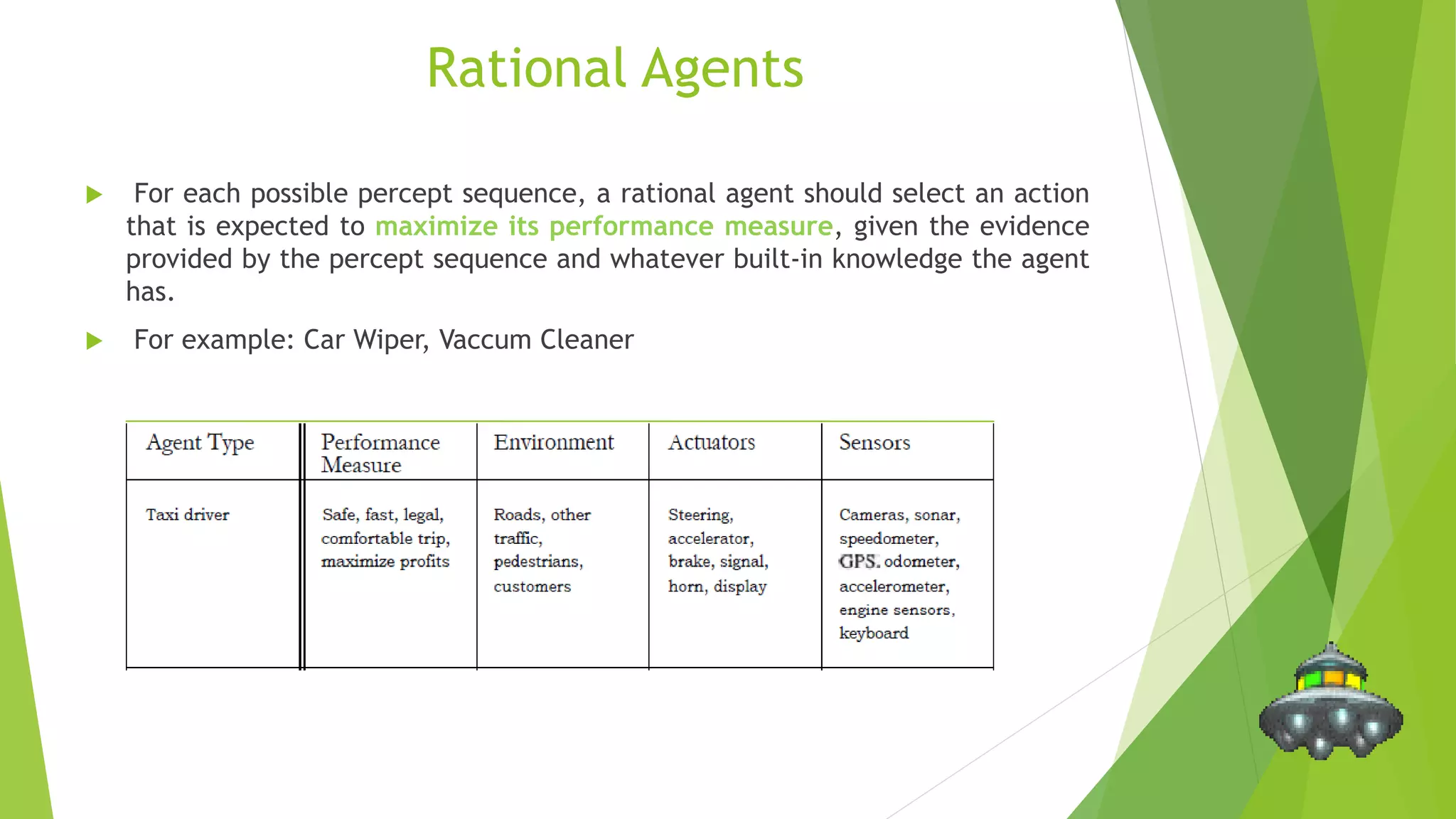

Artificial intelligence (AI) aims to create intelligent machines that can perceive and act like humans. Alan Turing proposed testing machine intelligence with what is now called the Turing Test in 1950. An intelligent agent perceives its environment through sensors and acts on it through effectors. A rational agent is expected to select the action that maximizes its performance measure, given the inputs it has perceived and its built-in knowledge. Types of AI agents include simple reflex agents, which react only to the current input; model-based reflex agents, which keep track of the world state; goal-based agents, which choose actions that lead toward their goals; and utility-based agents, which evaluate how desirable the outcomes of their actions are.
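As a rough illustration of a utility-based agent, the sketch below scores the predicted outcome of each available action and picks the best one. The names `choose_action`, `predict_temperature`, and `comfort`, and the toy thermostat setting, are assumptions made for this example and do not come from the slides.

```python
from typing import Callable, Iterable, TypeVar

State = TypeVar("State")
Action = TypeVar("Action")


def choose_action(
    state: State,
    actions: Iterable[Action],
    predict: Callable[[State, Action], State],
    utility: Callable[[State], float],
) -> Action:
    """Return the action whose predicted outcome has the highest utility."""
    return max(actions, key=lambda action: utility(predict(state, action)))


# Toy setting (assumed): a thermostat agent that prefers a room near 21 degrees.
def predict_temperature(temperature: float, action: str) -> float:
    """Hypothetical world model: each action shifts the temperature by one degree."""
    effect = {"heat": 1.0, "cool": -1.0, "idle": 0.0}
    return temperature + effect[action]


def comfort(temperature: float) -> float:
    """Hypothetical utility: higher is better, peaking at 21 degrees."""
    return -abs(temperature - 21.0)


ACTIONS = ["heat", "cool", "idle"]
best = choose_action(18.0, ACTIONS, predict_temperature, comfort)
print(best)
```

A simple reflex agent would map the current temperature straight to an action with fixed rules; here the agent instead compares predicted outcomes, which is the distinction the paragraph draws.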

![Simple Reflex Agents](https://image.slidesharecdn.com/uniti-1-150703160731-lva1-app6892/75/Introduction-To-Artificial-Intelligence-10-2048.jpg)

Simple Reflex Agents

This is the simplest type of agent. It selects actions on the basis of the current percept only.

It has no memory of earlier percepts.

Example: if car-in-front-is-braking then initiate-braking

    function REFLEX-VACUUM-AGENT([location, status]) returns an action
        if status = Dirty then return Suck
        else if location = A then return Right
        else if location = B then return Left
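The REFLEX-VACUUM-AGENT pseudocode can be written as a short Python function. This is a minimal sketch: the string labels for locations, statuses, and actions follow the slide, while the `run` helper and the two-square world dictionary are assumptions added only to show the agent acting over a few steps.

```python
def reflex_vacuum_agent(percept):
    """Choose an action from the current percept alone (no memory)."""
    location, status = percept
    if status == "Dirty":
        return "Suck"
    if location == "A":
        return "Right"
    if location == "B":
        return "Left"
    raise ValueError(f"unknown location: {location!r}")


def run(world, location, steps):
    """Hypothetical driver: apply the agent's actions to a two-square world."""
    actions = []
    for _ in range(steps):
        action = reflex_vacuum_agent((location, world[location]))
        actions.append(action)
        if action == "Suck":
            world[location] = "Clean"
        elif action == "Right":
            location = "B"
        elif action == "Left":
            location = "A"
    return actions


world = {"A": "Dirty", "B": "Dirty"}
history = run(world, "A", 4)
print(history)
```

Because the agent keeps no state, it continues to shuttle between A and B after both squares are clean; avoiding that requires the kind of internal state a model-based agent maintains.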