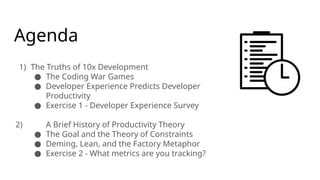

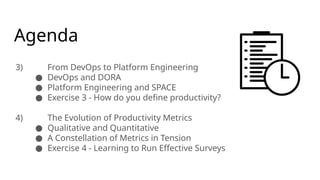

Ready to measure and improve developer productivity in your organization?

Join Justin Reock, Deputy CTO at DX, for an interactive session where you'll learn actionable strategies to measure and increase engineering performance.

Leave this session with a comprehensive understanding of developer productivity and a roadmap for building a high-performing engineering team at your company.