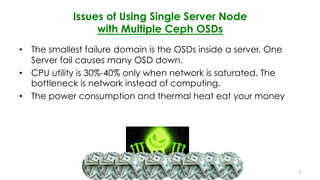

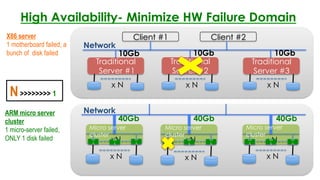

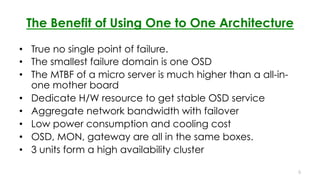

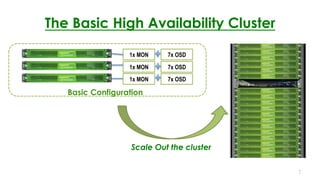

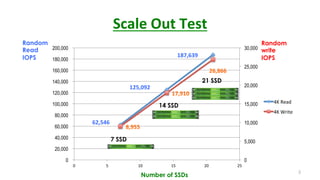

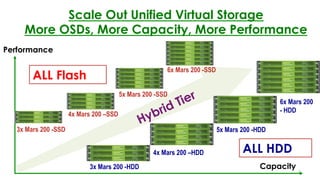

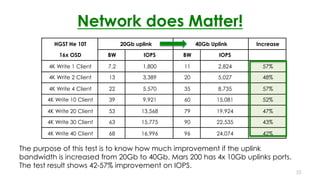

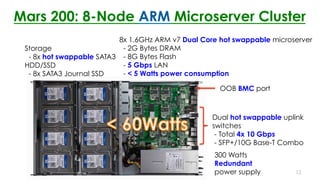

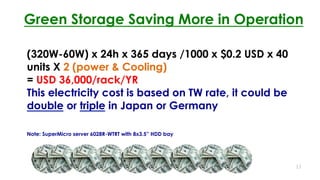

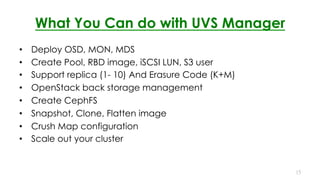

The document discusses Ceph performance on ARM-based microserver clusters. It highlights the advantages of a one-to-one architecture, in which each microserver node runs a single Ceph OSD, for high availability and efficient resource utilization. It details the limitations of running multiple Ceph OSDs on a single server node and emphasizes the system's self-healing capabilities, which the document argues can outperform traditional RAID. It also includes test results showing that IOPS improve as uplink bandwidth increases, and it outlines the overall benefits of a unified virtual storage management system.
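One way to see the availability argument is to compare the failure "blast radius" of the two layouts. The sketch below is a minimal, hypothetical illustration: the `Cluster` class, node counts, and per-OSD capacity are assumptions chosen for the example, not figures from the slides. It counts how many OSDs, and how much raw data at most, are affected when a single node fails. Two clusters with the same total of 24 OSDs are compared: one OSD per node, and twelve OSDs on each of two nodes.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Cluster:
    """Hypothetical cluster layout used only for illustration."""
    nodes: int          # physical server nodes
    osds_per_node: int  # Ceph OSDs hosted on each node
    tb_per_osd: float   # raw capacity per OSD in TB (assumed value)

    @property
    def total_osds(self) -> int:
        return self.nodes * self.osds_per_node

    def node_failure_impact(self) -> tuple[int, float, float]:
        """Effect of losing one node.

        Returns (OSDs lost, upper bound on TB that may need re-replication
        assuming every OSD is full, fraction of all OSDs lost).
        """
        lost = self.osds_per_node
        return lost, lost * self.tb_per_osd, lost / self.total_osds


if __name__ == "__main__":
    layouts = {
        "one-to-one (24 nodes x 1 OSD)": Cluster(nodes=24, osds_per_node=1, tb_per_osd=4.0),
        "dense (2 nodes x 12 OSDs)": Cluster(nodes=2, osds_per_node=12, tb_per_osd=4.0),
    }
    for name, cluster in layouts.items():
        lost, tb, frac = cluster.node_failure_impact()
        print(f"{name}: {lost} OSD(s) down, up to {tb:.0f} TB to recover, {frac:.1%} of OSDs")
```

Under these assumed numbers, a node failure in the one-to-one layout removes a single OSD, while the dense layout loses half of its OSDs at once. This simplified model ignores replication factor, CRUSH placement, and network limits, all of which also shape real recovery behavior.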