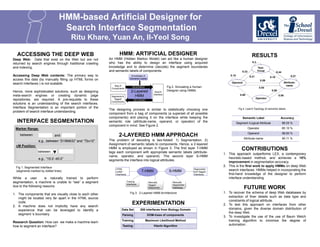

This document describes a Hidden Markov Model (HMM) approach to segmenting deep web search interfaces. The HMM acts as an artificial designer: using knowledge acquired from training interfaces, it determines segment boundaries and assigns labels to interface components. The model has two layers. The first layer assigns semantic labels to components, and the second layer groups the components into segments. The approach outperforms previous heuristic methods, improving segmentation accuracy by 10%. Future work includes extracting more schema details, testing on other domains, and exploring alternative training algorithms.
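
To make the two-layer idea concrete, the following is a minimal sketch and not the authors' implementation. It assumes that both layers are decoded with the standard Viterbi algorithm and that the second layer observes the labels produced by the first. The component types (`text`, `select`, `textbox`), the label set (`attr_name`, `operator`, `operand`), the boundary tags (`B` for "begins a segment", `I` for "inside a segment"), and every probability are hypothetical values chosen for illustration; in practice the probabilities would be learned from training interfaces.

```python
import math


def viterbi(obs, states, start_p, trans_p, emit_p):
    """Return the most likely hidden-state sequence for obs (log-space Viterbi)."""
    def log(p):
        return math.log(p) if p > 0 else float("-inf")

    # best[t][s]: log-probability of the best path that ends in state s at step t
    best = [{s: log(start_p[s]) + log(emit_p[s][obs[0]]) for s in states}]
    back = [{}]
    for t in range(1, len(obs)):
        best.append({})
        back.append({})
        for s in states:
            prev, score = max(
                ((r, best[t - 1][r] + log(trans_p[r][s])) for r in states),
                key=lambda pair: pair[1],
            )
            best[t][s] = score + log(emit_p[s][obs[t]])
            back[t][s] = prev
    # Trace back from the best final state.
    path = [max(states, key=lambda s: best[-1][s])]
    for t in range(len(obs) - 1, 0, -1):
        path.append(back[t][path[-1]])
    return path[::-1]


def split_segments(items, tags):
    """Group items into segments, opening a new segment at every 'B' tag."""
    segments = []
    for item, tag in zip(items, tags):
        if tag == "B" or not segments:
            segments.append([])
        segments[-1].append(item)
    return segments


# Layer 1 (hypothetical parameters): component type -> semantic label.
L1_STATES = ["attr_name", "operator", "operand"]
L1_START = {"attr_name": 0.8, "operator": 0.1, "operand": 0.1}
L1_TRANS = {
    "attr_name": {"attr_name": 0.1, "operator": 0.3, "operand": 0.6},
    "operator": {"attr_name": 0.05, "operator": 0.05, "operand": 0.9},
    "operand": {"attr_name": 0.7, "operator": 0.1, "operand": 0.2},
}
L1_EMIT = {
    "attr_name": {"text": 0.9, "select": 0.05, "textbox": 0.05},
    "operator": {"text": 0.1, "select": 0.8, "textbox": 0.1},
    "operand": {"text": 0.05, "select": 0.25, "textbox": 0.7},
}

# Layer 2 (hypothetical parameters): semantic label -> boundary tag.
L2_STATES = ["B", "I"]
L2_START = {"B": 0.95, "I": 0.05}
L2_TRANS = {"B": {"B": 0.2, "I": 0.8}, "I": {"B": 0.5, "I": 0.5}}
L2_EMIT = {
    "B": {"attr_name": 0.8, "operator": 0.1, "operand": 0.1},
    "I": {"attr_name": 0.1, "operator": 0.4, "operand": 0.5},
}

# A toy interface, read left to right and top to bottom.
components = ["text", "select", "textbox", "text", "textbox"]

labels = viterbi(components, L1_STATES, L1_START, L1_TRANS, L1_EMIT)
tags = viterbi(labels, L2_STATES, L2_START, L2_TRANS, L2_EMIT)
segments = split_segments(list(zip(components, labels)), tags)

for segment in segments:
    print(segment)
```

With these toy parameters the five components are split into two segments, each beginning at a component labeled `attr_name`. Feeding the first layer's output into the second keeps the two decisions separate, so either layer can be retrained or replaced without touching the other. Whether the original system couples its layers this way is an assumption of this sketch.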