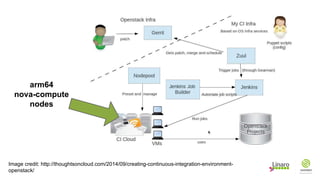

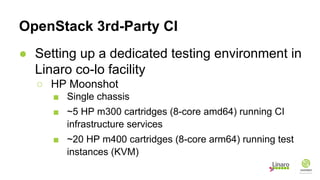

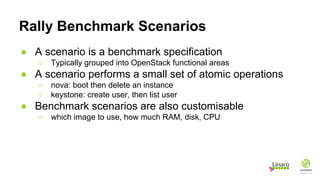

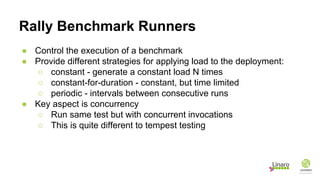

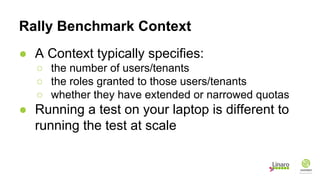

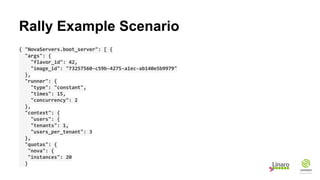

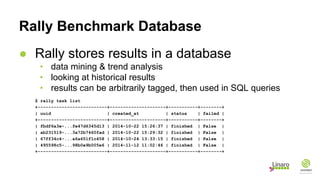

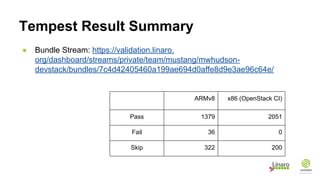

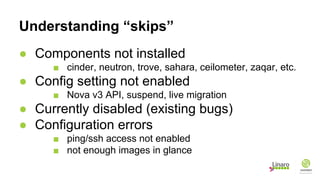

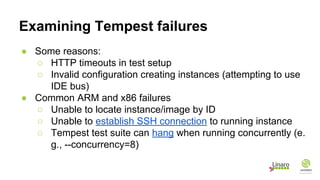

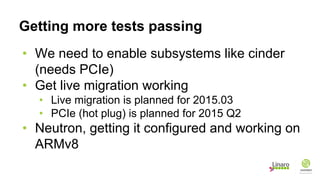

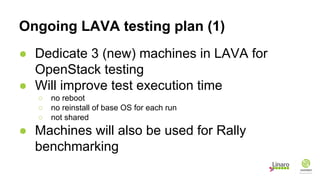

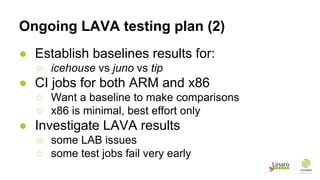

This document outlines updates on OpenStack testing and performance benchmarking, covering third-party continuous integration (CI), the Rally framework, and Tempest results. The goals are to establish ARM as a recognized platform for OpenStack through dedicated testing systems and to use Rally for performance measurement and benchmarking. Key points include the setup of testing environments, plans to support additional projects, ongoing issues, and strategies for improving test results.