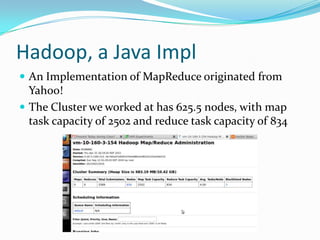

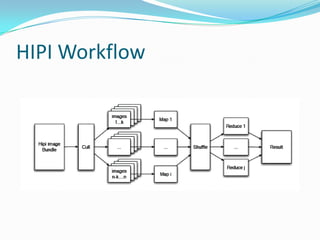

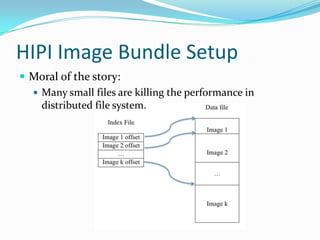

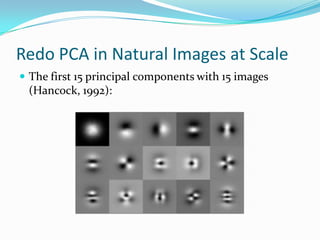

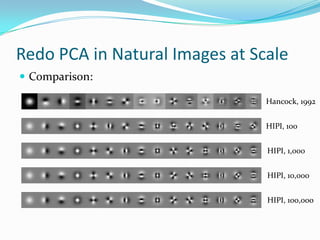

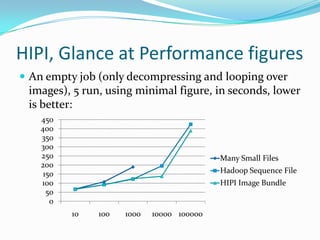

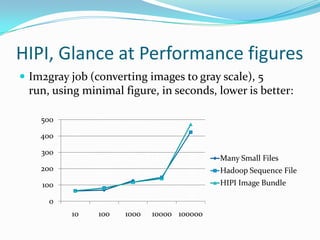

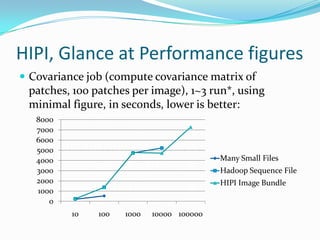

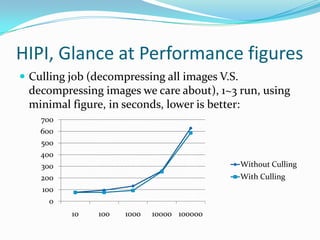

This document discusses HIPI (Hadoop Image Processing Interface), a computer vision framework for processing large image datasets in a distributed manner. It introduces HIPI's MapReduce-based workflow and explains how image processing tasks are implemented at scale. Performance tests show that HIPI can reproduce a principal component analysis on image datasets orders of magnitude larger than those used in previous work. Optimizations such as culling improve performance by decompressing only the images a job actually needs. Overall, HIPI offers performance on par with or better than alternatives, along with significant improvements for processing large collections of images.
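To make the culling idea concrete, here is a minimal single-process sketch in plain Python. It is not HIPI's API and does not use Hadoop: `ImageRecord`, `decode`, `cull`, `map_image`, `reduce_sums`, and `mean_intensity` are all hypothetical names chosen for illustration, and the "compressed payload" is just a byte string. The sketch assumes that each image carries cheap header metadata (width and height) that can be inspected without decompressing the pixels, so the cull test runs first and only the surviving images reach the expensive decode step inside the map function.

```python
from dataclasses import dataclass
from functools import reduce
from typing import Iterable, List, Tuple


@dataclass
class ImageRecord:
    """Hypothetical record: cheap header fields plus a still-encoded payload."""
    width: int
    height: int
    payload: bytes


# Records every decode so the effect of culling can be observed.
DECODE_LOG: List[Tuple[int, int]] = []


def decode(record: ImageRecord) -> List[float]:
    """Stand-in for an expensive decompression step (assumption: one byte per pixel)."""
    DECODE_LOG.append((record.width, record.height))
    return [b / 255.0 for b in record.payload]


def cull(record: ImageRecord, min_pixels: int) -> bool:
    """Return True if the image should be skipped, using header data only."""
    return record.width * record.height < min_pixels


def map_image(record: ImageRecord) -> Tuple[float, int]:
    """Map step: decode one image and emit (sum of intensities, pixel count)."""
    pixels = decode(record)
    return (sum(pixels), len(pixels))


def reduce_sums(a: Tuple[float, int], b: Tuple[float, int]) -> Tuple[float, int]:
    """Reduce step: combine partial sums and counts."""
    return (a[0] + b[0], a[1] + b[1])


def mean_intensity(records: Iterable[ImageRecord], min_pixels: int) -> float:
    """Cull first, then map and reduce over the images that remain."""
    kept = (r for r in records if not cull(r, min_pixels))
    total, count = reduce(reduce_sums, map(map_image, kept), (0.0, 0))
    return total / count if count else 0.0


if __name__ == "__main__":
    dataset = [
        ImageRecord(2, 2, bytes([255, 255, 255, 255])),
        ImageRecord(1, 1, bytes([0])),          # too small: culled, never decoded
        ImageRecord(2, 1, bytes([0, 255])),
    ]
    print(mean_intensity(dataset, min_pixels=2))
    print(len(DECODE_LOG), "images decoded out of", len(dataset))
```

In a real distributed job the map calls would run in parallel across nodes and the reduce would merge their partial results; the point of the sketch is only the ordering, with the header-based filter placed ahead of decompression.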