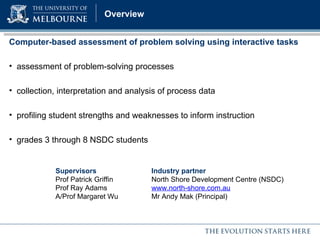

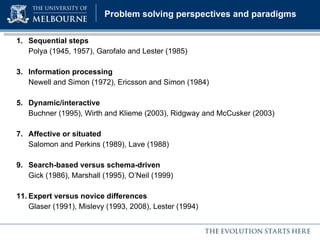

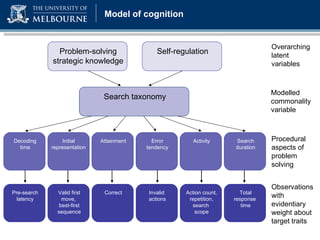

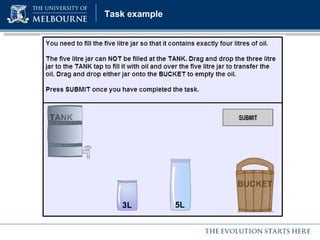

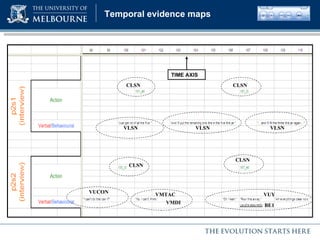

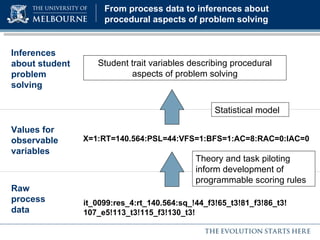

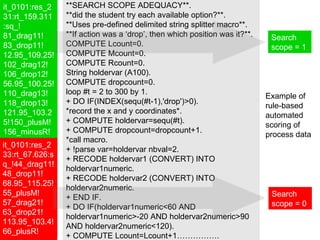

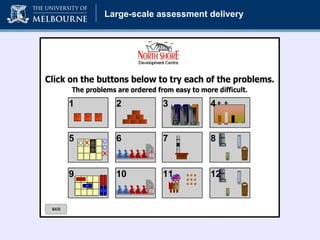

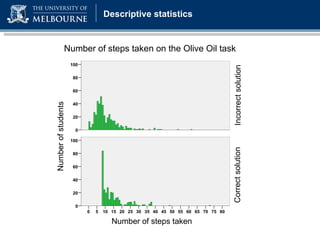

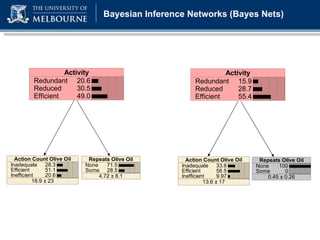

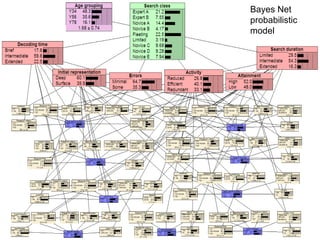

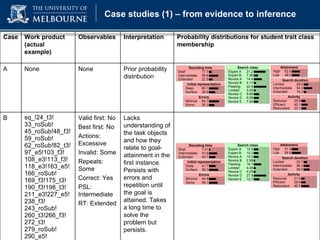

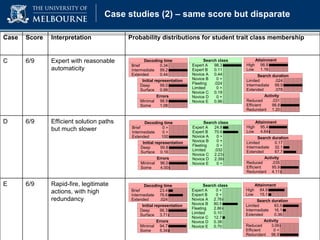

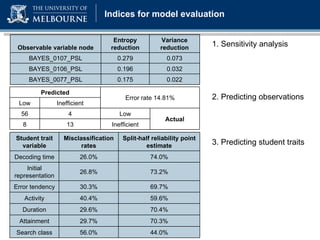

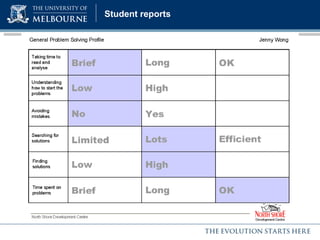

This presentation on computer-based assessment of problem solving at the University of Heidelberg focuses on developing interactive tasks that evaluate the problem-solving skills of students in grades 3 to 8. It stresses the need to understand the cognitive processes involved in problem solving, and it uses statistical modeling and process data to inform instructional methods. The research aims to improve educational practice by identifying student strengths and weaknesses through these assessment methods.