The document discusses several key factors for optimizing HBase performance including:

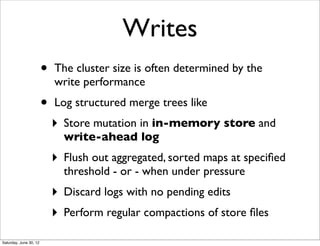

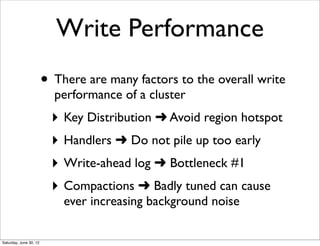

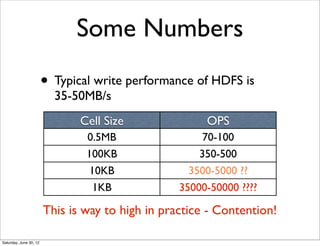

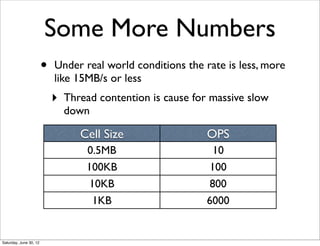

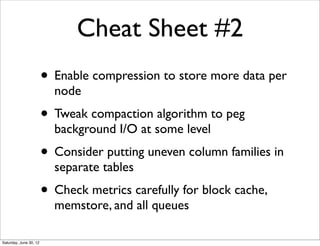

1. Reads and writes compete for disk, network, and thread resources so they can cause bottlenecks.

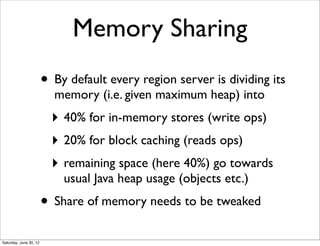

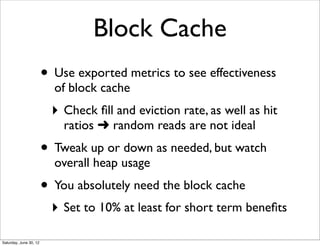

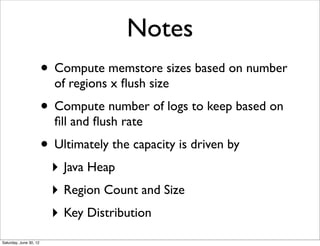

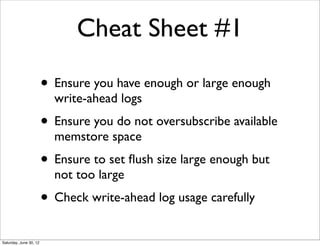

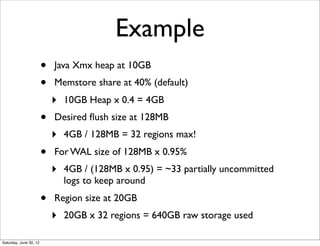

2. Memory allocation needs to balance space for memstores, block caching, and Java heap usage.

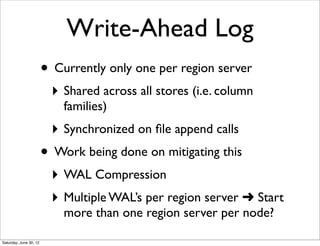

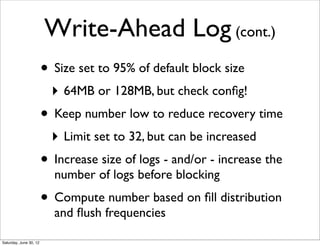

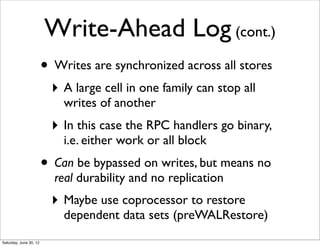

3. The write-ahead log can be a major bottleneck and increasing its size or number of logs can improve write performance.

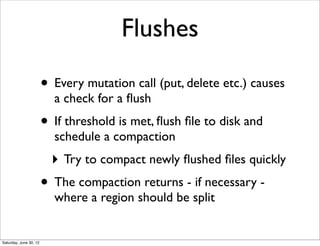

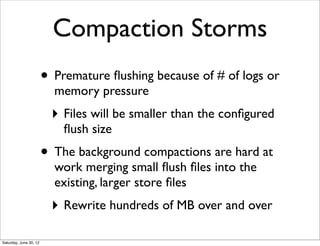

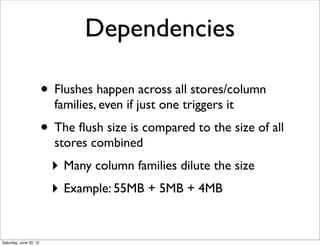

4. Flushes and compactions need to be tuned to avoid premature flushes causing "compaction storms".