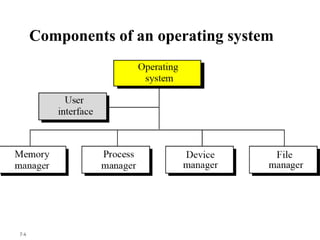

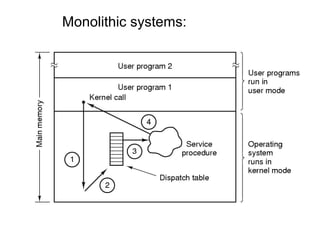

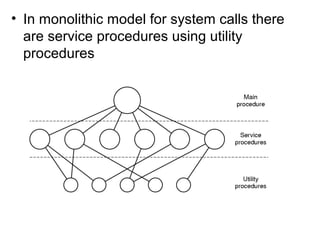

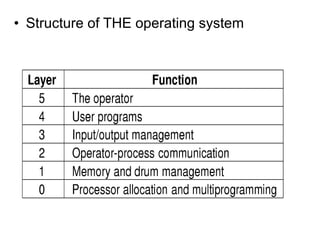

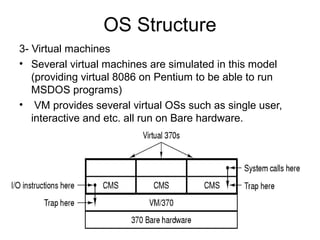

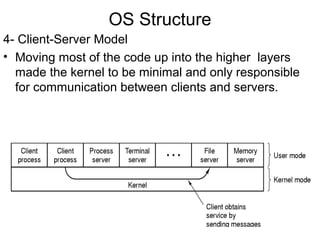

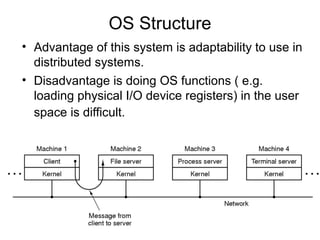

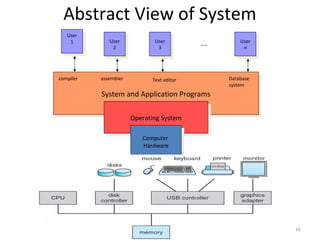

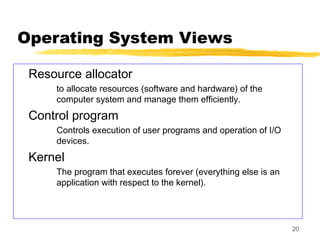

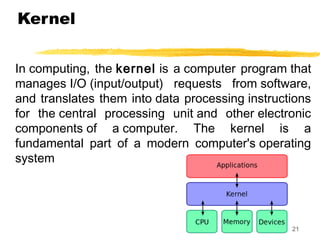

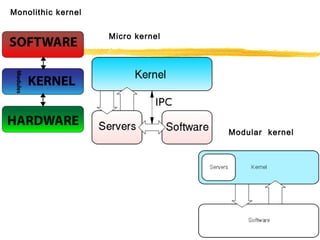

This document discusses operating system structures and components. It describes four main OS designs: monolithic systems, layered systems, virtual machines, and client-server models. For each design, it provides details on how the system is organized and which components are responsible for which tasks. It also discusses some advantages and disadvantages of the different approaches. The document concludes by explaining how client-server models address issues with distributing OS functions to user space by having some critical servers run in the kernel while still communicating with user processes.