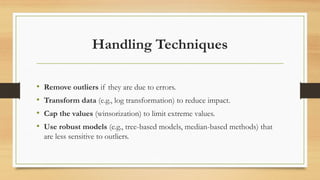

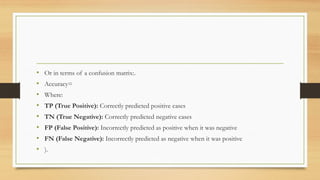

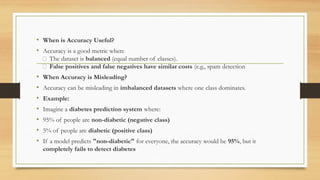

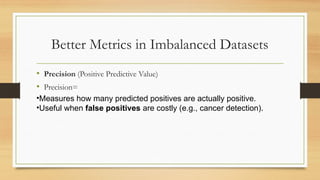

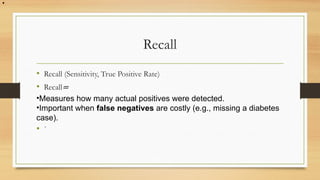

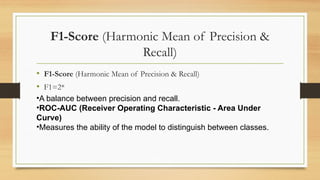

This document discusses outliers in machine learning. It categorizes them as global, contextual, or collective outliers and covers their causes and their effects on statistical analysis and model performance. It also outlines methods for detecting and handling outliers, and it emphasizes the role of evaluation metrics such as accuracy, precision, recall, F1-score, and ROC-AUC in assessing model performance, especially on imbalanced datasets, where accuracy alone can be misleading. Choosing suitable metrics is therefore central to effective model training and evaluation.
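As a rough illustration of how outlier detection and the metrics above fit together, the sketch below flags global outliers with the common 1.5 × IQR rule and then scores the result. The IQR rule is one widely used detection method and is not necessarily the one the document has in mind. The sample values, the `is_outlier` labels, and the helper names `iqr_outlier_flags` and `classification_metrics` are all hypothetical, made up for this example. ROC-AUC is left out because it needs continuous scores rather than yes/no flags.

```python
from statistics import quantiles


def iqr_outlier_flags(values, k=1.5):
    """Return True for each value outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _median, q3 = quantiles(values, n=4)  # the three quartile cut points
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v < low or v > high for v in values]


def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, recall, and F1 for boolean labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t and p)
    fp = sum(1 for t, p in pairs if not t and p)
    fn = sum(1 for t, p in pairs if t and not p)
    tn = sum(1 for t, p in pairs if not t and not p)
    # Report 0.0 when a ratio is undefined (no predicted or no actual positives).
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Hypothetical data: 18 ordinary readings plus two injected global outliers.
values = [10, 11, 12, 11, 10, 12, 11, 13, 12, 11,
          10, 12, 11, 12, 13, 11, 12, 10, 95, -40]
is_outlier = [False] * 18 + [True, True]  # assumed ground-truth labels

flags = iqr_outlier_flags(values)
detector_metrics = classification_metrics(is_outlier, flags)

# Baseline that never flags anything, to show the effect of class imbalance.
baseline_metrics = classification_metrics(is_outlier, [False] * len(values))

print("IQR detector:", detector_metrics)
print("Never-flag baseline:", baseline_metrics)
```

On this toy data the never-flag baseline reaches 0.9 accuracy while its recall and F1 are both 0, which is the imbalanced-data pitfall the summary alludes to: with only 2 outliers in 20 points, accuracy rewards ignoring the rare class, whereas precision, recall, and F1 do not.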