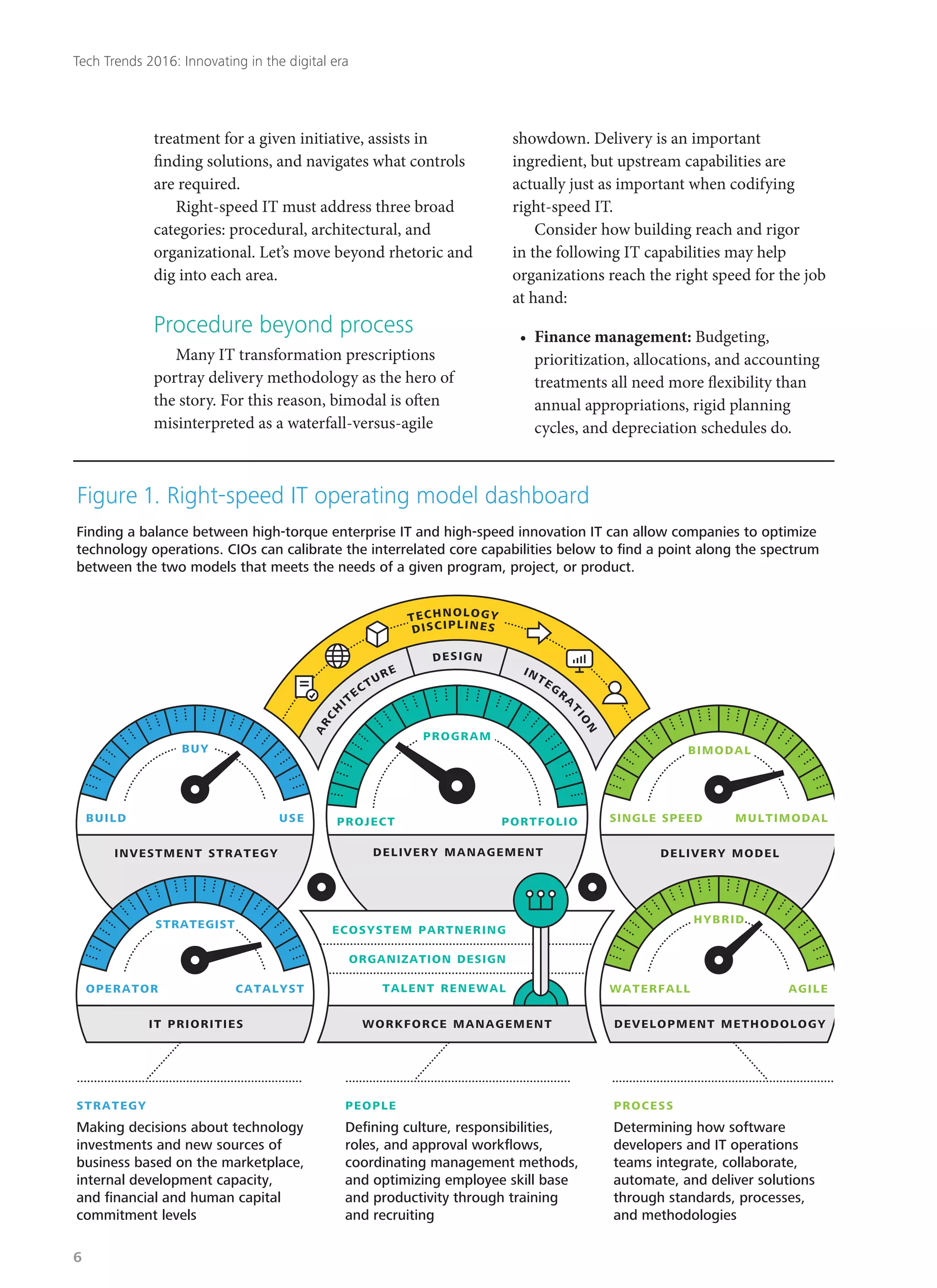

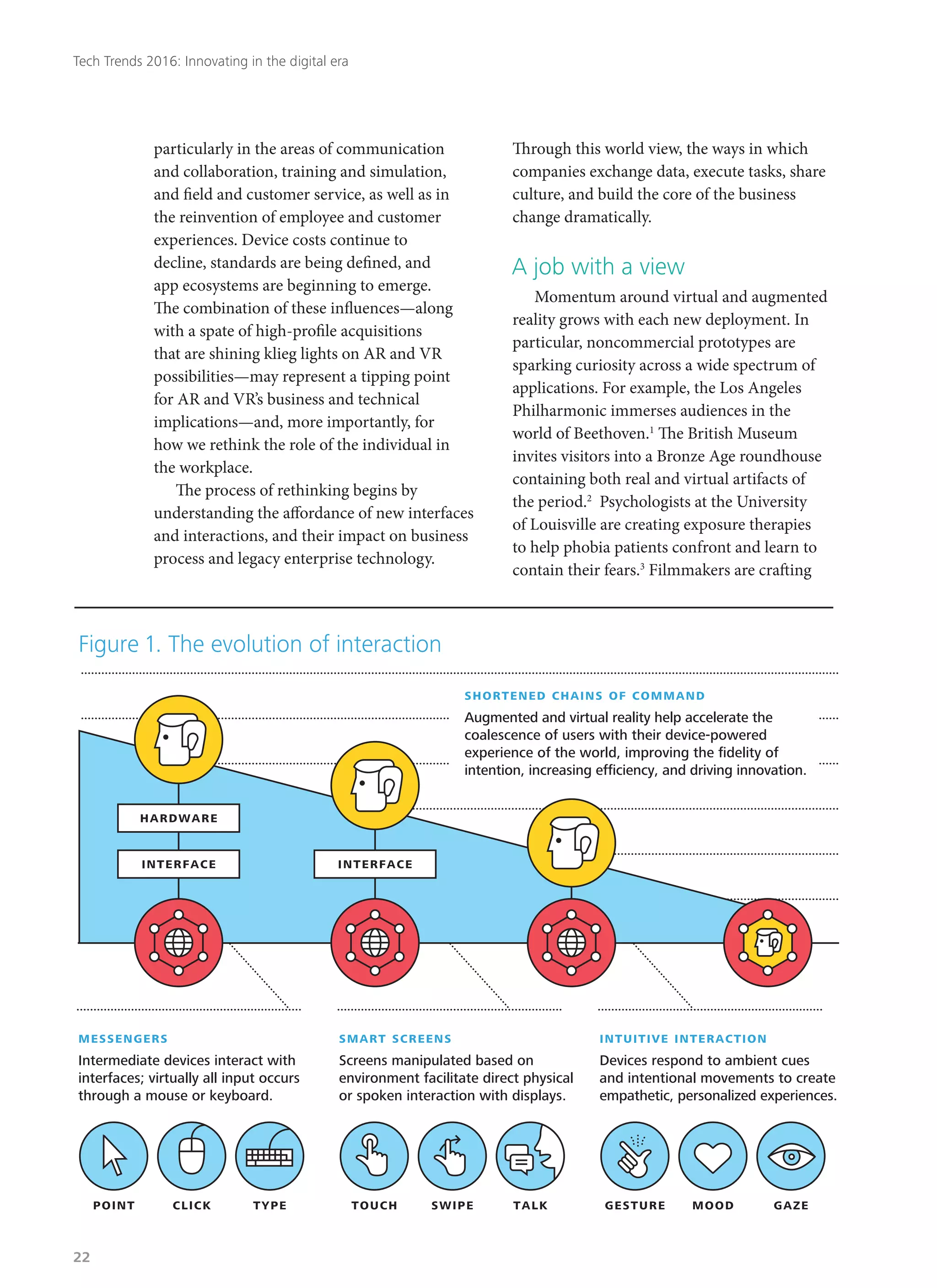

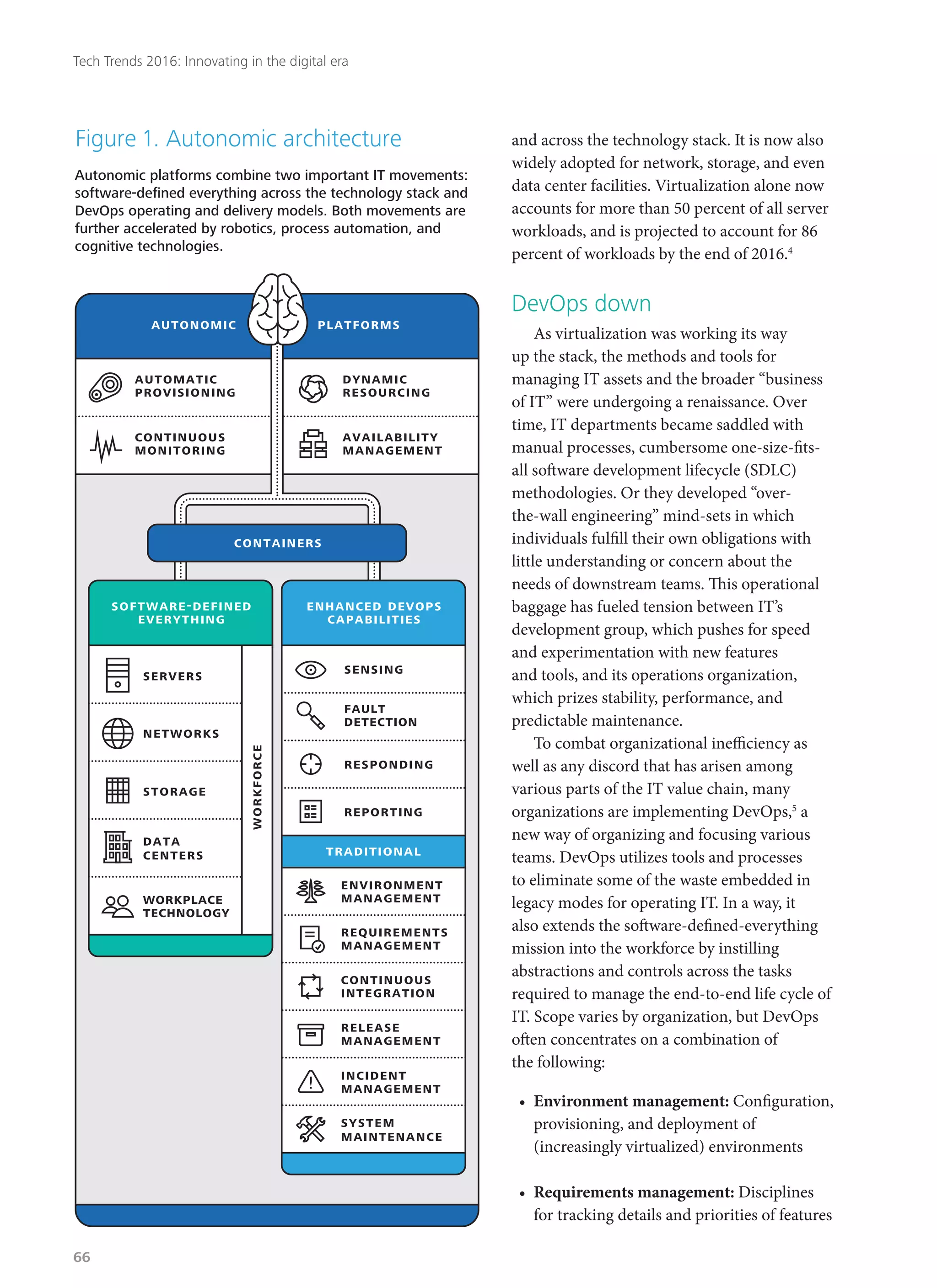

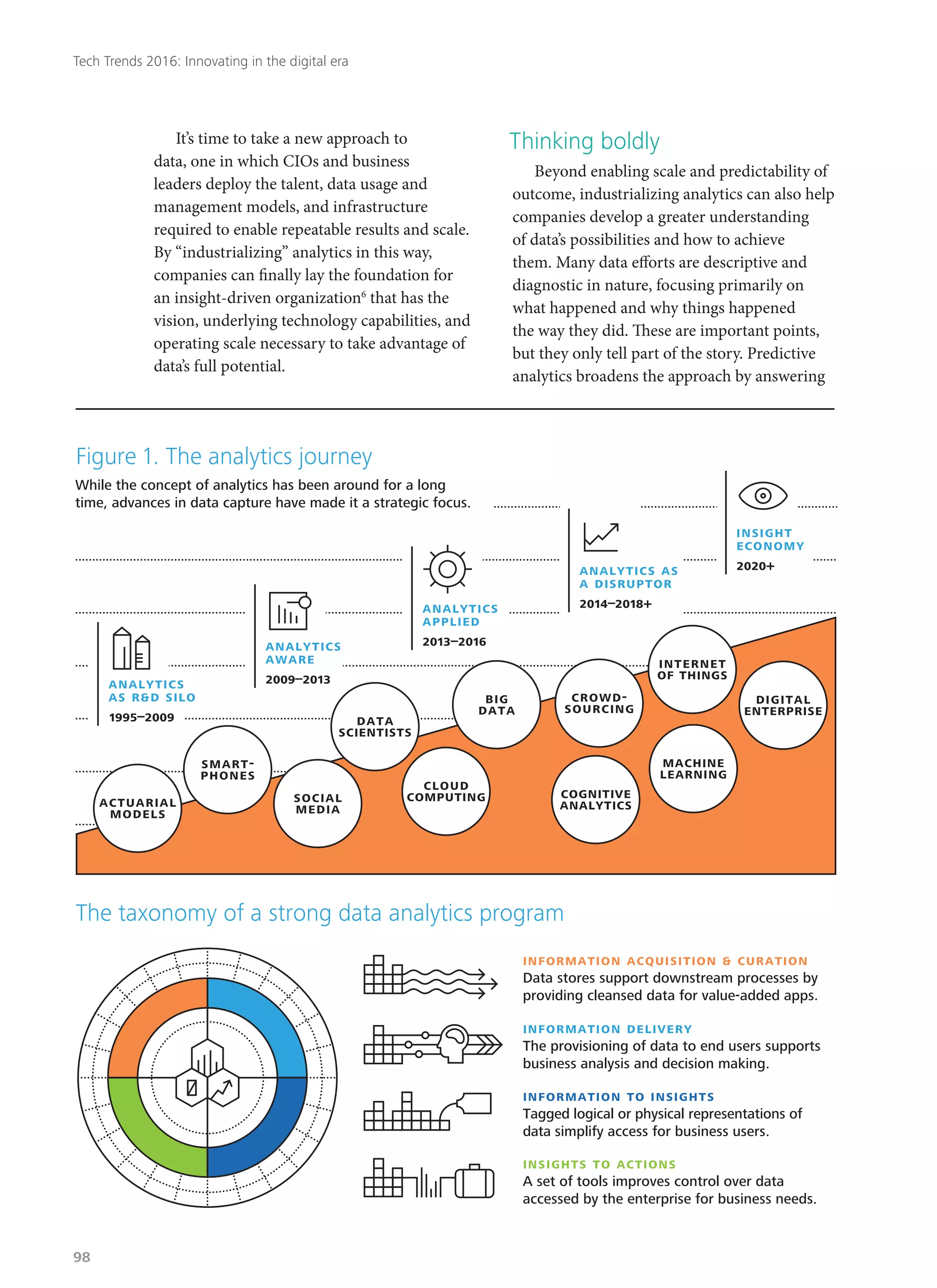

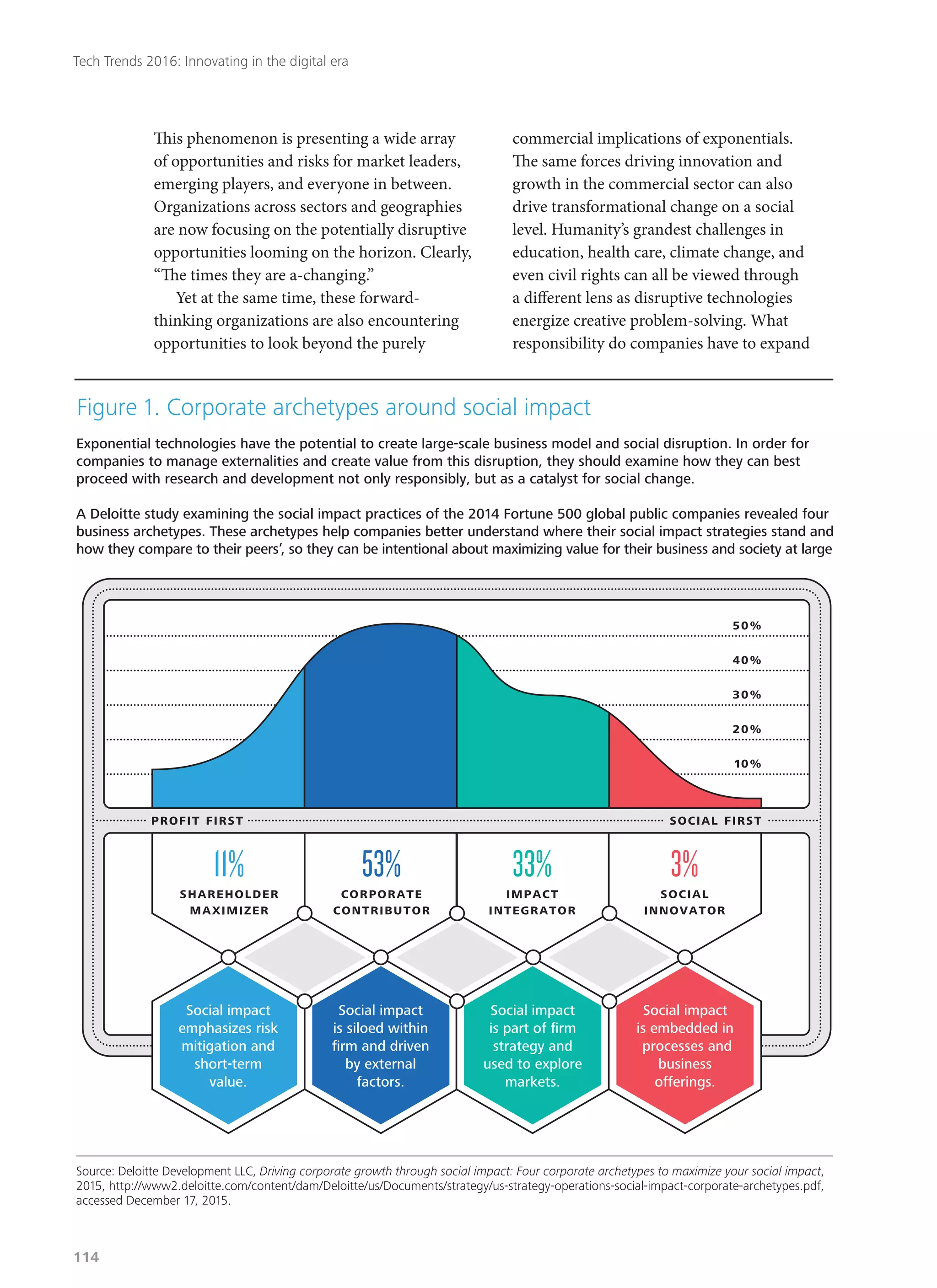

Deloitte's 2016 technology trends report examines how businesses must adapt to rapid digital innovation, focusing on eight key trends likely to disrupt industries in the next two years. It urges organizations to adopt a 'right-speed IT' approach that balances stability with agility in technology management while addressing emerging cyber risks. As customer expectations evolve, companies are encouraged to innovate and rethink their operations to remain competitive.