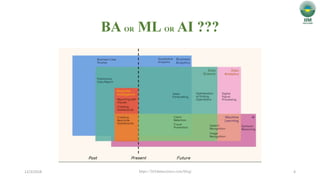

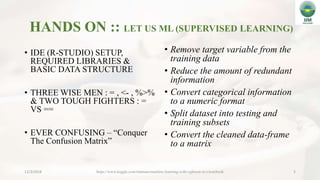

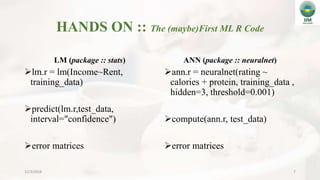

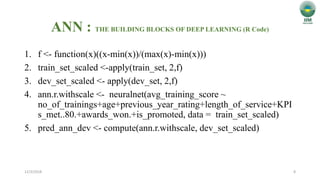

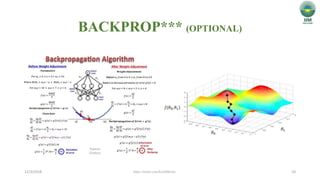

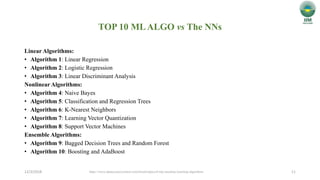

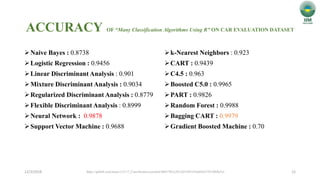

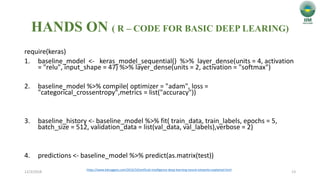

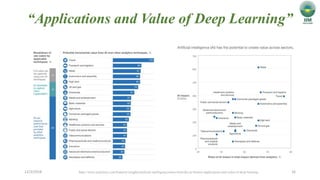

This document introduces deep learning in a series of sections: the ontology of AI; deep learning in action, illustrated with examples; the differences between BA, ML, and AI; hands-on supervised learning examples in RStudio; an overview of neural networks and backpropagation; a comparison of machine learning algorithms; and applications of deep learning, with examples from areas such as computer vision. It also profiles some of the leading figures in deep learning and artificial intelligence research.