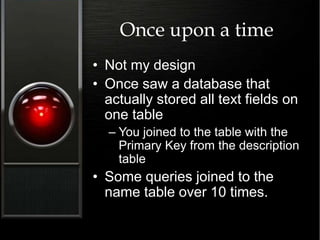

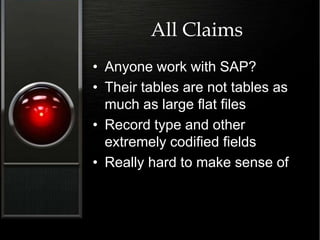

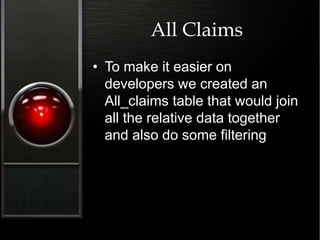

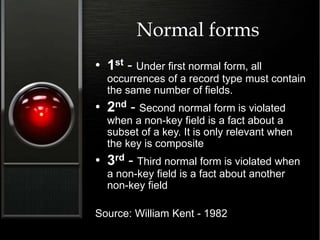

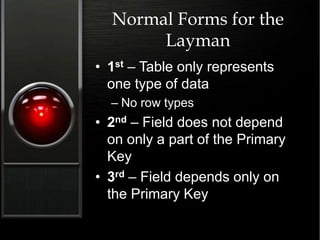

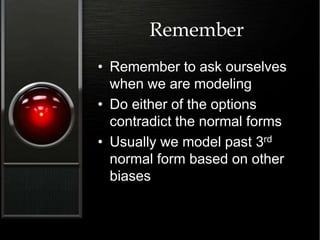

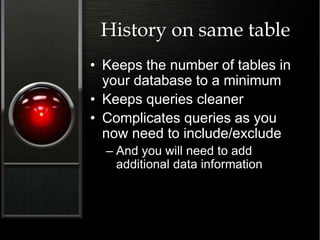

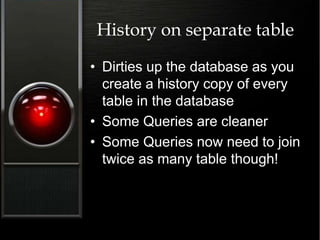

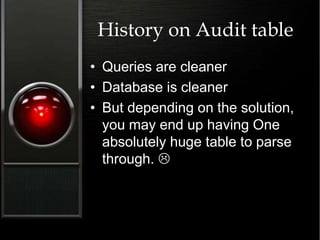

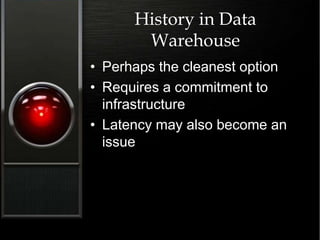

Drawing on experience as a data modeler, Terry Bunio gives examples of common data modeling mistakes. The key mistakes discussed are:

- anthropomorphizing data models, that is, modeling real-world entities instead of what the application needs;
- overengineering models with unnecessary flexibility;
- choosing poor primary keys, such as GUIDs;
- overusing surrogate keys;
- using composite primary keys;
- handling deleted records and null values incorrectly;
- building complex historical data models instead of deferring history to a data warehouse.

The document advocates simpler, application-focused data models that avoid unnecessary complexity.
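The summary does not say why the talk considers GUIDs a poor primary key. One commonly cited concern, which is an assumption here and not taken from the talk, has two parts:

- Random GUIDs are larger than integer keys.
- They arrive in no particular order, so each insert lands at an arbitrary position in the primary-key index instead of at the end.

The minimal sketch below illustrates that ordering difference using Python's standard `sqlite3` and `uuid` modules. The table names `orders_int` and `orders_guid` and the helper functions are hypothetical.

```python
import sqlite3
import uuid


def build_tables(n: int) -> sqlite3.Connection:
    """Create two hypothetical tables that differ only in primary-key strategy."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders_int (order_id INTEGER PRIMARY KEY, note TEXT)")
    conn.execute("CREATE TABLE orders_guid (order_id TEXT PRIMARY KEY, note TEXT)")
    for i in range(n):
        # INTEGER PRIMARY KEY aliases SQLite's rowid, so the database assigns
        # the next value itself when order_id is omitted.
        conn.execute("INSERT INTO orders_int (note) VALUES (?)", (f"order {i}",))
        # A random version-4 UUID stored as 36-character text.
        conn.execute(
            "INSERT INTO orders_guid (order_id, note) VALUES (?, ?)",
            (str(uuid.uuid4()), f"order {i}"),
        )
    conn.commit()
    return conn


def keys_in_insert_order(conn: sqlite3.Connection, table: str) -> list:
    """Return primary keys ordered by rowid, i.e. insertion order (no deletes occur)."""
    # The table name comes from this script, never from user input.
    rows = conn.execute(f"SELECT order_id FROM {table} ORDER BY rowid")
    return [row[0] for row in rows]


conn = build_tables(100)
int_keys = keys_in_insert_order(conn, "orders_int")
guid_keys = keys_in_insert_order(conn, "orders_guid")

# Integer keys arrive already sorted, so new rows append at the end of the index.
print("integer keys sorted in insert order:", int_keys == sorted(int_keys))
# Random UUIDs almost never arrive sorted, so new rows land throughout the index.
print("GUID keys sorted in insert order:", guid_keys == sorted(guid_keys))
print("GUID key length as text:", len(guid_keys[0]))
```

This sketch only shows ordering and size. Whether that matters in practice depends on the database engine and workload, and the talk may rest its argument on other grounds.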