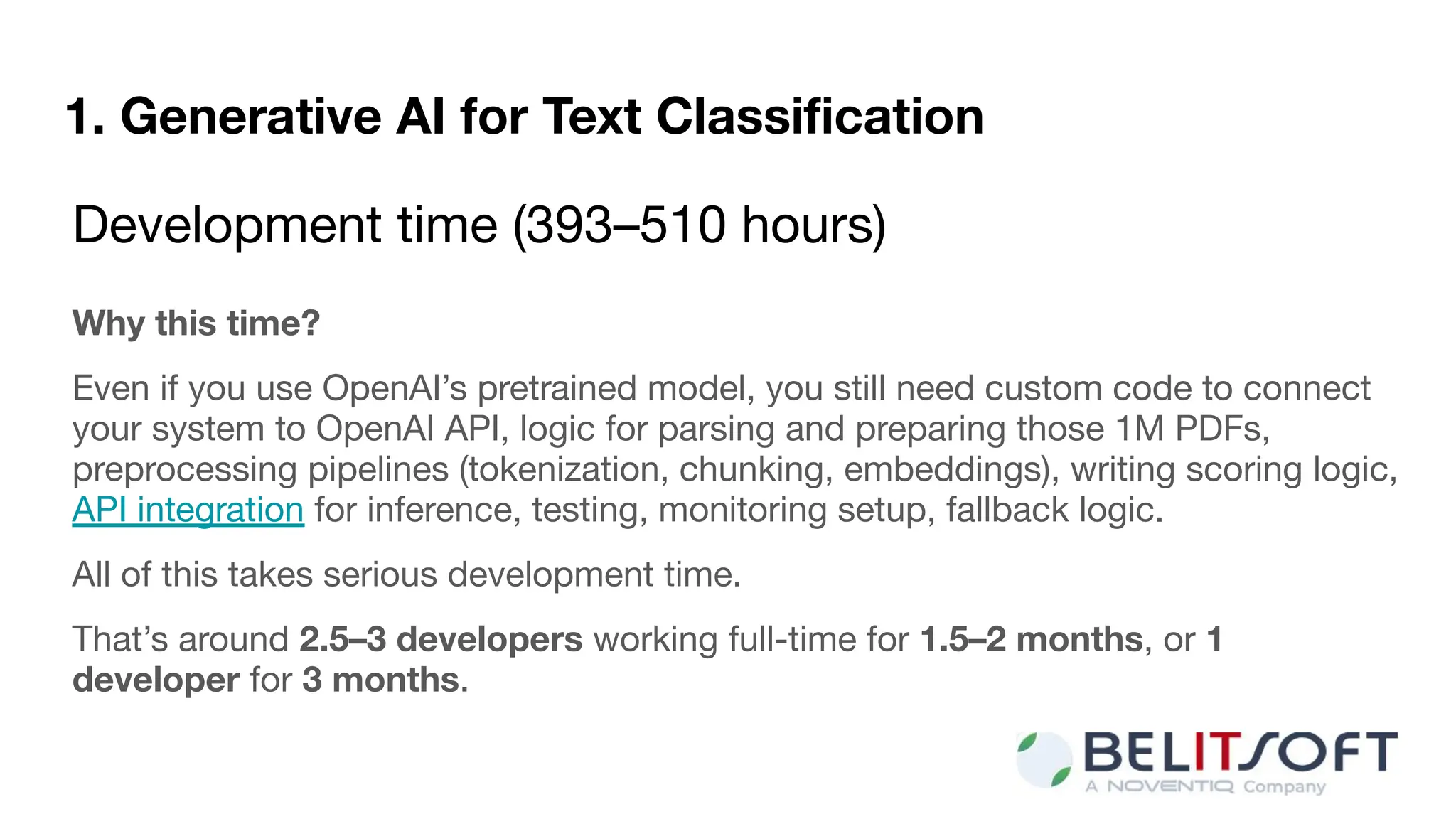

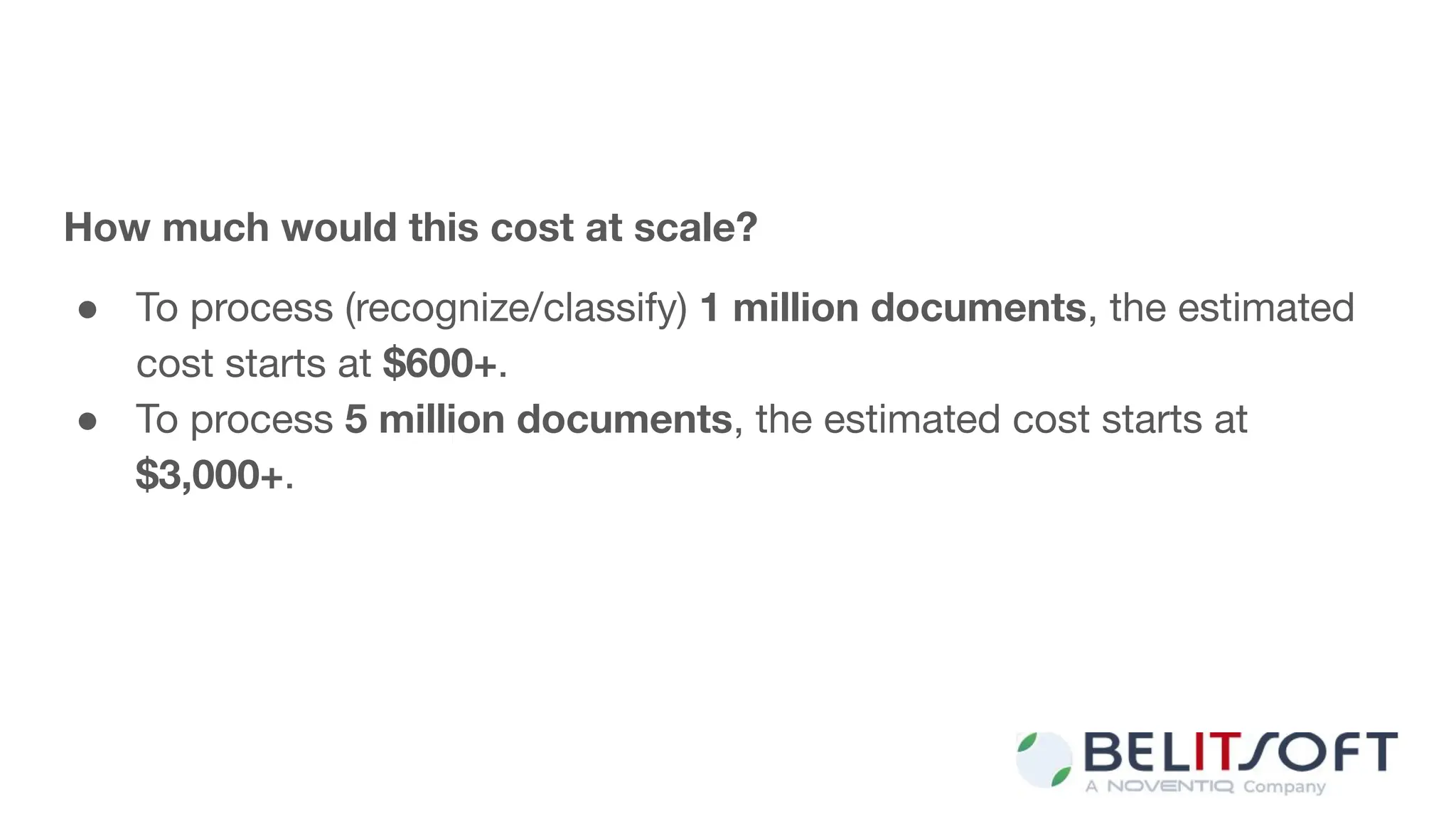

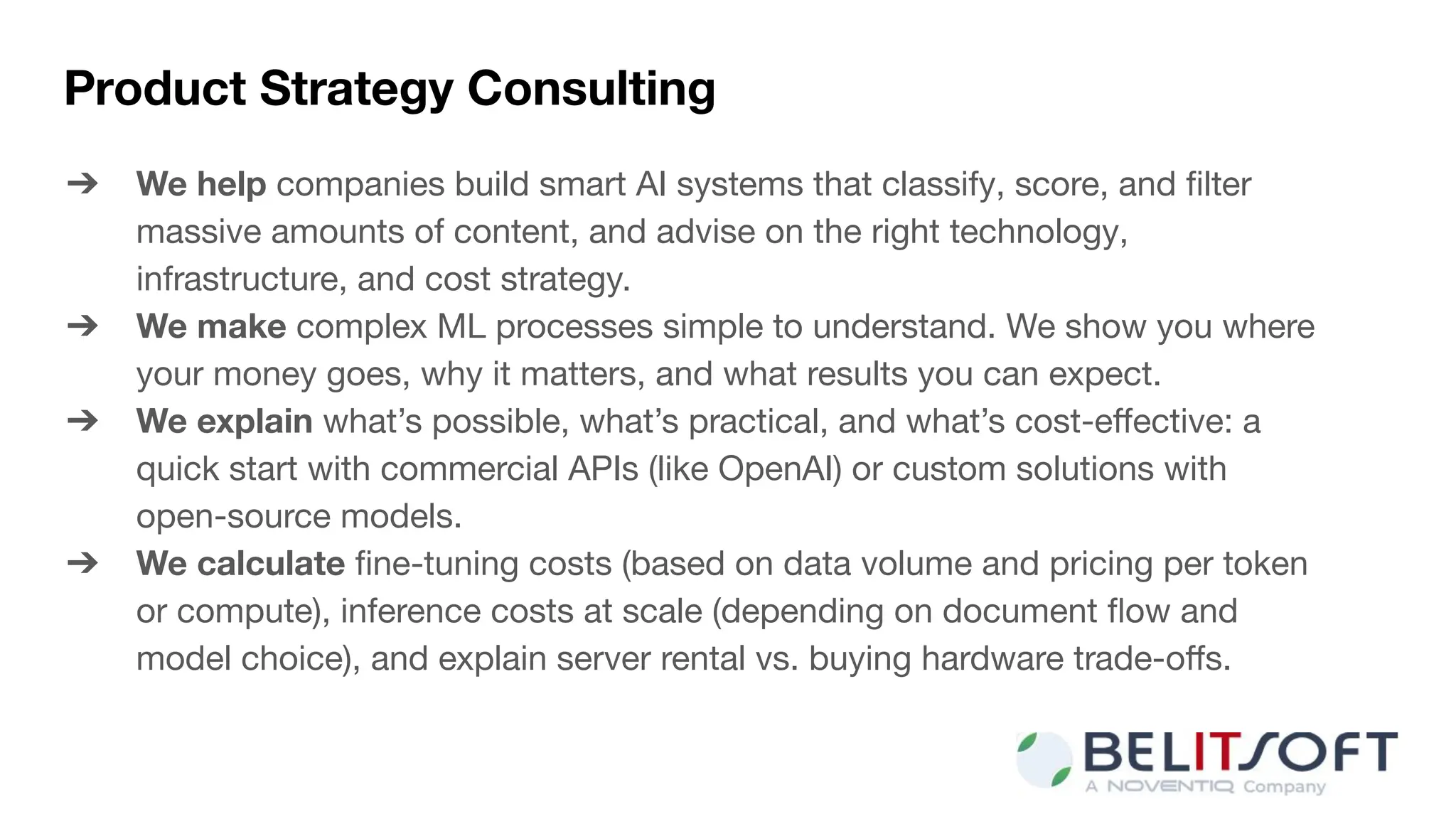

Our clients often ask us how much it costs to build AI document classification software that automatically analyzes, scores, selects, and prepares large volumes of content for future business use.

Read more at https://belitsoft.com/how-much-does-it-cost-to-develop-ai-classification-system