This document provides a brief overview of concurrency concepts in Python, focusing on threading. It defines processes and threads, explaining that threads run within a process and can share resources, while processes are independent and communicate via interprocess communication. It discusses why to use concurrency for performance, responsiveness, and non-blocking behavior. It also covers the Global Interpreter Lock in Python and its implications for threading, and provides examples of creating and running threads.

![In [1]: import random

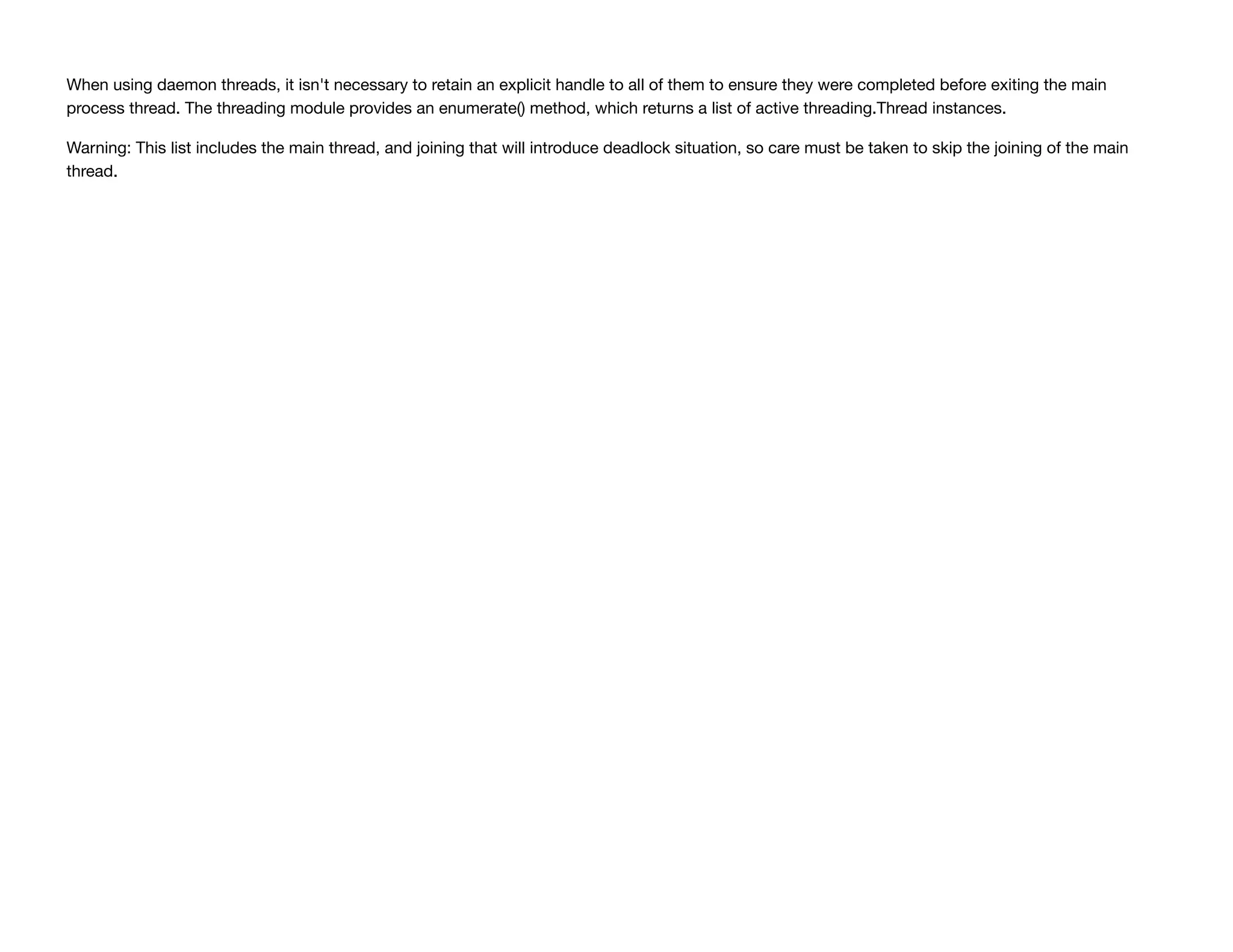

import threading

import logging

import time

reload(logging) # Needed because Jupyter mucks with the logging module.

reload(threading) # Ditto. Jupyter also mucks with the threading module.

logging.basicConfig(level=logging.INFO, format='%(asctime)s (%(threadName)-10s) %(message)s',

datefmt='%M:%S')](https://image.slidesharecdn.com/ef70f8bd-e9f9-412f-b9f9-5a0dd59c4dac-170105061522/75/concurrency-5-2048.jpg)

![In [2]: def _worker(fruit):

'''Perform some "work" by eating a piece of `fruit`.'''

logging.info('Eating %s ...' % repr(fruit)) # Indicates "work" is being performed.

logging.info('*Burp* That was a good %s!' % repr(fruit)) # Indicates the "work" is done.

def serial_example():

'''Executes 5 `_worker` methods serially, not using threads.'''

for fruit in ('apple', 'banana', 'grape', 'cherry', 'strawberry'):

_worker(fruit)

if __name__ == '__main__':

serial_example()

The Basics

"Why did the multithreaded Chicken cross the road?"

to To other side. get the

Concurrent vs Serial

Re-entrant vs Non-reentrant

Thread Safe

threading.Thread

run() vs start()

13:06 (MainThread) Eating 'apple' ...

13:06 (MainThread) *Burp* That was a good 'apple'!

13:06 (MainThread) Eating 'banana' ...

13:06 (MainThread) *Burp* That was a good 'banana'!

13:06 (MainThread) Eating 'grape' ...

13:06 (MainThread) *Burp* That was a good 'grape'!

13:06 (MainThread) Eating 'cherry' ...

13:06 (MainThread) *Burp* That was a good 'cherry'!

13:06 (MainThread) Eating 'strawberry' ...

13:06 (MainThread) *Burp* That was a good 'strawberry'!](https://image.slidesharecdn.com/ef70f8bd-e9f9-412f-b9f9-5a0dd59c4dac-170105061522/75/concurrency-8-2048.jpg)
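
The "run() vs start()" bullet above can be illustrated with a small sketch. It is not from the original slides: the helper names `_report` and `run_vs_start_example` are made up for illustration, and it uses the Python 3 spelling `threading.current_thread()`. The idea is that calling `run()` directly executes the target in the caller's thread, while `start()` spawns a new thread that calls `run()` for you.

```python
import threading


def _report(results):
    # Record the name of the thread that actually executes this function.
    results.append(threading.current_thread().name)


def run_vs_start_example():
    results = []

    # run() is an ordinary method call: the target executes in the caller's thread.
    direct = threading.Thread(target=_report, name='Direct', args=(results,))
    direct.run()

    # start() creates a new thread of control, which then invokes run().
    spawned = threading.Thread(target=_report, name='Spawned', args=(results,))
    spawned.start()
    spawned.join()

    return results


if __name__ == '__main__':
    # The first entry is the caller's thread name, the second is 'Spawned'.
    print(run_vs_start_example())
```

Only the thread launched with `start()` reports its own name; the `Direct` thread object is never actually started.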

![In [3]: def _worker():

'''A basic "worker" method which logs to stdout, indicating that it did some work.'''

logging.info('Doing Work ...')

def basic_example():

'''Executes 5 `_worker` methods on threads.'''

for _ in range(5):

thread_inst = threading.Thread(target=_worker)

thread_inst.start()

if __name__ == '__main__':

basic_example()

13:06 (Thread-1 ) Doing Work ...

13:06 (Thread-2 ) Doing Work ...

13:06 (Thread-3 ) Doing Work ...

13:06 (Thread-4 ) Doing Work ...

13:06 (Thread-5 ) Doing Work ...](https://image.slidesharecdn.com/ef70f8bd-e9f9-412f-b9f9-5a0dd59c4dac-170105061522/75/concurrency-9-2048.jpg)

![In [4]: def _worker(fruit):

'''Perform some "work" by eating a piece of `fruit` yet finishing after a random amount of time.'''

logging.info('Eating %s ...' % repr(fruit)) # Indicates "work" is being performed.

seconds_to_sleep = random.randint(1, 10) / 10.

logging.debug('This will take %s seconds to eat' % seconds_to_sleep)

time.sleep(seconds_to_sleep)

logging.info('*Burp* That was a good %s!' % repr(fruit)) # Indicates the "work" is done.

def asynchronous_example():

'''Executes 5 `_worker` methods on threads.'''

threads = list()

for fruit in ('apple', 'banana', 'grape', 'cherry', 'strawberry'):

thread_inst = threading.Thread(target=_worker, args=(fruit,))

threads.append(thread_inst)

[thread_inst.start() for thread_inst in threads] # pylint: disable-msg=expression-not-assigned

[thread_inst.join() for thread_inst in threads] # Needed for Jupyter

if __name__ == '__main__':

asynchronous_example()

13:06 (Thread-6 ) Eating 'apple' ...

13:06 (Thread-7 ) Eating 'banana' ...

13:06 (Thread-8 ) Eating 'grape' ...

13:06 (Thread-9 ) Eating 'cherry' ...

13:06 (Thread-10 ) Eating 'strawberry' ...

13:06 (Thread-8 ) *Burp* That was a good 'grape'!

13:06 (Thread-6 ) *Burp* That was a good 'apple'!

13:06 (Thread-10 ) *Burp* That was a good 'strawberry'!

13:06 (Thread-9 ) *Burp* That was a good 'cherry'!

13:07 (Thread-7 ) *Burp* That was a good 'banana'!](https://image.slidesharecdn.com/ef70f8bd-e9f9-412f-b9f9-5a0dd59c4dac-170105061522/75/concurrency-10-2048.jpg)

![In [5]: def _worker(seconds_to_sleep=.1):

'''A "worker" method that indicates when work is starting, waits a static amount of time, then indicates when work is done.'''

logging.info('Starting %s' % threading.currentThread().getName())

time.sleep(seconds_to_sleep)

logging.info('Exiting %s' % threading.currentThread().getName())

def daemon_vs_non_daemon_example():

daemon_thread_inst = threading.Thread(target=_worker, name='Daemon Worker', args=(3,))

daemon_thread_inst.setDaemon(True)

non_daemon_thread_inst = threading.Thread(target=_worker, name='Non-daemon Worker')

daemon_thread_inst.start()

non_daemon_thread_inst.start()

# Remove these two lines and you will not see "Exiting Daemon Worker".

daemon_thread_inst.join()

non_daemon_thread_inst.join()

if __name__ == '__main__':

daemon_vs_non_daemon_example()

Advanced Features

currentThread()

enumerate()

13:07 (Daemon Worker) Starting Daemon Worker

13:07 (Non-daemon Worker) Starting Non-daemon Worker

13:07 (Non-daemon Worker) Exiting Non-daemon Worker

13:10 (Daemon Worker) Exiting Daemon Worker](https://image.slidesharecdn.com/ef70f8bd-e9f9-412f-b9f9-5a0dd59c4dac-170105061522/75/concurrency-12-2048.jpg)

![In [18]: def _worker(fruit):

'''Perform some "work" by eating a piece of `fruit` yet finishing after a random amount of time.'''

logging.info('Eating %s ...' % repr(fruit)) # Indicates "work" is being performed.

seconds_to_sleep = random.randint(5, 15)

logging.debug('This will take %s seconds to eat' % seconds_to_sleep)

time.sleep(seconds_to_sleep)

logging.info('*Burp* That was a good %s!' % repr(fruit)) # Indicates the "work" is done.

def enumeration_example():

for fruit in ('apple', 'banana', 'grape', 'cherry', 'strawberry'):

thread_inst = threading.Thread(target=_worker, name='%s' % fruit, args=(fruit,))

thread_inst.setDaemon(True)

thread_inst.start()

main_thread = threading.currentThread()

for thread_inst in threading.enumerate():

logging.debug('Active threads: %s' % repr(', '.join(sorted([thread_inst.name for thread_inst in threading.enumerate()]))))

if thread_inst is main_thread:

continue

logging.info('Joining on %s Worker ...' % repr(thread_inst.getName()))

thread_inst.join(16) # Needed for Jupyter

if __name__ == '__main__':

enumeration_example()

14:19 (apple ) Eating 'apple' ...

14:19 (banana ) Eating 'banana' ...

14:19 (grape ) Eating 'grape' ...

14:19 (cherry ) Eating 'cherry' ...

14:19 (strawberry) Eating 'strawberry' ...

14:19 (MainThread) Joining on 'strawberry' Worker ...

14:25 (grape ) *Burp* That was a good 'grape'!

14:26 (banana ) *Burp* That was a good 'banana'!

14:27 (cherry ) *Burp* That was a good 'cherry'!

14:28 (strawberry) *Burp* That was a good 'strawberry'!

14:31 (apple ) *Burp* That was a good 'apple'!](https://image.slidesharecdn.com/ef70f8bd-e9f9-412f-b9f9-5a0dd59c4dac-170105061522/75/concurrency-14-2048.jpg)

![Subclassing

Can overload the run() method

Useful for extending functionality

In [7]: class _CustomThread(threading.Thread):

def __init__(self, foo, *args, **kwargs):

super(_CustomThread, self).__init__(*args, **kwargs)

self.foo_ = foo

self.args = args

self.kwargs = kwargs

def run(self):

logging.info('Executing Foo %s with args %s and kwargs %s' % (repr(self.foo_),

repr(self.args), repr(self.kwargs)))

def subclassing_example():

for index in range(1, 6):

thread_inst = _CustomThread(foo='Fighter', name='Worker %d' % index)

thread_inst.start()

if __name__ == '__main__':

subclassing_example()

13:21 (Worker 1 ) Executing Foo 'Fighter' with args () and kwargs {'name': 'Worker 1'}

13:21 (Worker 2 ) Executing Foo 'Fighter' with args () and kwargs {'name': 'Worker 2'}

13:21 (Worker 3 ) Executing Foo 'Fighter' with args () and kwargs {'name': 'Worker 3'}

13:21 (Worker 4 ) Executing Foo 'Fighter' with args () and kwargs {'name': 'Worker 4'}

13:21 (Worker 5 ) Executing Foo 'Fighter' with args () and kwargs {'name': 'Worker 5'}](https://image.slidesharecdn.com/ef70f8bd-e9f9-412f-b9f9-5a0dd59c4dac-170105061522/75/concurrency-15-2048.jpg)

![In [19]: def _worker():

'''A very basic "worker" method which logs to stdout, indicating that it did some work.'''

logging.info('Doing Work ...')

def timer_example():

thread_inst_1 = threading.Timer(3, _worker)

thread_inst_1.setName('Worker 1')

thread_inst_2 = threading.Timer(3, _worker)

thread_inst_2.setName('Worker 2')

logging.info('Starting timer threads ...')

thread_inst_1.start()

thread_inst_2.start()

logging.info('Waiting before canceling %s ...' % repr(thread_inst_2.getName()))

time.sleep(2.)

logging.info('Canceling %s ...' % repr(thread_inst_2.getName()))

thread_inst_2.cancel()

logging.info('Done!')

if __name__ == '__main__':

timer_example()

Signaling

threading.Event

One thread signals an event; the others wait for it

Useful applications

14:42 (MainThread) Starting timer threads ...

14:42 (MainThread) Waiting before canceling 'Worker 2' ...

14:44 (MainThread) Canceling 'Worker 2' ...

14:44 (MainThread) Done!

14:45 (Worker 1 ) Doing Work ...](https://image.slidesharecdn.com/ef70f8bd-e9f9-412f-b9f9-5a0dd59c4dac-170105061522/75/concurrency-17-2048.jpg)

![class threading.Event

The internal flag is initially false.

is_set()

isSet()

Return true if and only if the internal flag is true.

set()

Set the internal flag to true. All threads waiting for it to become true are awakened. Threads that call wait() once the flag is true will not block at all.

clear()

Reset the internal flag to false. Subsequently, threads calling wait() will block until set() is called to set the internal flag to true again.

wait([timeout])

Block until the internal flag is true. If the internal flag is true on entry, return immediately. Otherwise, block until another thread calls set() to set the flag to true, or until the optional timeout occurs.

When the timeout argument is present and not None, it should be a floating point number specifying a timeout for the operation in seconds (or fractions thereof).

This method returns the internal flag on exit, so it will always return True except if a timeout is given

and the operation times out.](https://image.slidesharecdn.com/ef70f8bd-e9f9-412f-b9f9-5a0dd59c4dac-170105061522/75/concurrency-18-2048.jpg)
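
The `threading.Event` methods described above can be exercised in a short, deterministic sketch. This is an illustration rather than part of the original deck: `event_example` and the keys of the `results` dictionary are hypothetical names, and it assumes a Python version where `wait()` returns the flag (2.7 or later).

```python
import threading


def event_example():
    event = threading.Event()
    results = {}

    # The internal flag is initially false.
    results['initial'] = event.is_set()

    # With nobody calling set(), a timed wait() gives up and returns False.
    results['timed_out_wait'] = event.wait(0.01)

    def _waiter():
        # Blocks until set() is called (or returns at once if already set).
        results['waiter'] = event.wait()

    waiter = threading.Thread(target=_waiter, name='Waiter')
    waiter.start()
    event.set()      # Wakes every thread blocked in wait().
    waiter.join()

    # Once the flag is true, wait() does not block at all.
    results['after_set'] = event.wait(0)

    # clear() resets the flag so later wait() calls block again.
    event.clear()
    results['after_clear'] = event.is_set()
    return results


if __name__ == '__main__':
    print(event_example())
```

The sketch uses a fresh `Event` on every call, so a flag left set by an earlier run cannot leak into the next one.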

![In [9]: EVENT = threading.Event()

def _blocking_event():

logging.info('Starting')

event_is_set = EVENT.wait()

logging.info('Event is set: %s' % repr(event_is_set))

def _non_blocking_event(timeout):

while not EVENT.isSet():

logging.info('Starting')

event_is_set = EVENT.wait(timeout)

logging.info('Event is set: %s' % repr(event_is_set))

if event_is_set:

logging.info('Processing event ...')

else:

logging.info('Waiting ...')

In [20]: def signaling_example():

blocking_thread_inst = threading.Thread(target=_blocking_event, name='Blocking Event')

non_blocking_thread_inst = threading.Thread(target=_non_blocking_event, name='Non-Blocking Event', args=(2.,))

blocking_thread_inst.start()

non_blocking_thread_inst.start()

logging.info('Waiting until Event.set() is called ...')

time.sleep(5.)

EVENT.set()

logging.info('Event is set')

if __name__ == '__main__':

signaling_example()

14:52 (Blocking Event) Starting

14:52 (MainThread) Waiting until Event.set() is called ...

14:52 (Blocking Event) Event is set: True

14:57 (MainThread) Event is set](https://image.slidesharecdn.com/ef70f8bd-e9f9-412f-b9f9-5a0dd59c4dac-170105061522/75/concurrency-19-2048.jpg)

![Controlling access to shared resources (such as physical hardware, test equipment, memory, Disk I/O, etc.) is important to prevent corruption or

missed data.

In Python, not all data structures are equally thread-safe. So which data structures are thread-safe and which are not? Python’s built-in data structures (lists, dictionaries, etc.) are thread-safe for individual operations as a side effect of having atomic bytecodes for manipulating them (the GIL is not released in the middle of an update). Other data structures implemented in Python, or simpler types like integers and floats, don’t have that protection. To guard against simultaneous access to an object, use a threading.Lock object.

A primitive lock is in one of two states, “locked” or “unlocked”. It is created in the unlocked state. It has two basic methods, acquire() and release().

When the state is unlocked, acquire() changes the state to locked and returns immediately. When the state is locked, acquire() blocks until a call to

release() in another thread changes it to unlocked, then the acquire() call resets it to locked and returns. The release() method should only be called in

the locked state; it changes the state to unlocked and returns immediately. If an attempt is made to release an unlocked lock, a ThreadError (a RuntimeError in Python 3) will be raised.

When more than one thread is blocked in acquire() waiting for the state to turn to unlocked, only one thread proceeds when a release() call resets the

state to unlocked; which one of the waiting threads proceeds is not defined, and may vary across implementations.

All methods are executed atomically.

Lock.acquire([blocking]) Acquire a lock, blocking or non-blocking.

When invoked with the blocking argument set to True (the default), block until the lock is unlocked, then set it to locked and return True.

When invoked with the blocking argument set to False, do not block. If a call with blocking set to True would block, return False immediately;

otherwise, set the lock to locked and return True.

Lock.release() Release a lock.

When the lock is locked, reset it to unlocked, and return. If any other threads are blocked waiting for the lock to become unlocked, allow exactly one

of them to proceed.

When invoked on an unlocked lock, a ThreadError (a RuntimeError in Python 3) is raised.

There is no return value.](https://image.slidesharecdn.com/ef70f8bd-e9f9-412f-b9f9-5a0dd59c4dac-170105061522/75/concurrency-21-2048.jpg)
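
As a rough sketch of the `threading.Lock` behaviour described above, the following shows a lock guarding a plain integer counter, plus the return values of a blocking and a non-blocking `acquire()`. The function names and the counter are illustrative assumptions, not code from the slides, and the double-release check assumes Python 3, where releasing an unlocked lock raises `RuntimeError`.

```python
import threading


def locked_counter_example(num_threads=4, increments=10000):
    lock = threading.Lock()
    counter = {'value': 0}

    def _increment():
        for _ in range(increments):
            lock.acquire()           # Blocks until the lock is unlocked.
            try:
                counter['value'] += 1    # Read-modify-write on an integer.
            finally:
                lock.release()       # Always release, even on error.

    threads = [threading.Thread(target=_increment) for _ in range(num_threads)]
    for thread_inst in threads:
        thread_inst.start()
    for thread_inst in threads:
        thread_inst.join()
    return counter['value']


def non_blocking_acquire_example():
    lock = threading.Lock()
    first = lock.acquire()           # Unlocked, so this returns True.
    second = lock.acquire(False)     # Already locked: returns False at once.
    lock.release()
    try:
        lock.release()               # Releasing an unlocked lock is an error.
        raised = False
    except RuntimeError:             # Assumes Python 3.
        raised = True
    return first, second, raised


if __name__ == '__main__':
    print(locked_counter_example())
    print(non_blocking_acquire_example())
```

With the lock held around every increment, the final count should equal `num_threads * increments`.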

![In [11]: LOCK = threading.Lock()

def _worker():

logging.info('Waiting for lock ...')

LOCK.acquire()

logging.info('Acquired lock')

try:

logging.info('Doing work on shared resource ...')

time.sleep(random.randint(30, 50) / 10.)

finally:

LOCK.release()

logging.info('Released lock')

In [12]: def locking_example():

threads = list()

for index in range(1, 3):

thread_inst = threading.Thread(target=_worker, name='Worker %d' % index)

threads.append(thread_inst)

random.shuffle(threads)

[thread_inst.start() for thread_inst in threads] # pylint: disable-msg=expression-not-assigned

logging.info('Waiting for worker threads to finish ...')

main_thread = threading.currentThread()

for thread_inst in threading.enumerate():

if thread_inst is not main_thread:

thread_inst.join(10) # Needed for Jupyter since they overload the threading module!

if __name__ == '__main__':

locking_example()

13:28 (Worker 1 ) Waiting for lock ...

13:28 (Worker 2 ) Waiting for lock ...

13:28 (MainThread) Waiting for worker threads to finish ...

13:28 (Worker 1 ) Acquired lock

13:28 (Worker 1 ) Doing work on shared resource ...

13:28 (Non-Blocking Event) Event is set: True

13:28 (Non-Blocking Event) Processing event ...

13:31 (Worker 1 ) Released lock

13:31 (Worker 2 ) Acquired lock

13:31 (Worker 2 ) Doing work on shared resource ...

13:34 (Worker 2 ) Released lock](https://image.slidesharecdn.com/ef70f8bd-e9f9-412f-b9f9-5a0dd59c4dac-170105061522/75/concurrency-22-2048.jpg)

![Reentrant Locks

threading.RLock

Useful applications

Normal threading.Lock objects cannot be acquired more than once, even by the same thread. This can produce undesirable side-effects, especially if

a lock is accessed by more than one method in the call chain.

A reentrant lock is a synchronization primitive that may be acquired multiple times by the same thread. Internally, it uses the concepts of “owning

thread” and “recursion level” in addition to the locked/unlocked state used by primitive locks. In the locked state, some thread owns the lock; in the

unlocked state, no thread owns it.

To lock the lock, a thread calls its acquire() method; this returns once the thread owns the lock. To unlock the lock, a thread calls its release() method.

acquire()/release() call pairs may be nested; only the final release() (the release() of the outermost pair) resets the lock to unlocked and allows another

thread blocked in acquire() to proceed.

RLock.acquire([blocking=1]) Acquire a lock, blocking or non-blocking.

When invoked without arguments: if this thread already owns the lock, increment the recursion level by one, and return immediately. Otherwise, if

another thread owns the lock, block until the lock is unlocked. Once the lock is unlocked (not owned by any thread), then grab ownership, set the

recursion level to one, and return. If more than one thread is blocked waiting until the lock is unlocked, only one at a time will be able to grab

ownership of the lock. There is no return value in this case.

When invoked with the blocking argument set to true, do the same thing as when called without arguments, and return true.

When invoked with the blocking argument set to false, do not block. If a call without an argument would block, return false immediately; otherwise, do

the same thing as when called without arguments, and return true.

RLock.release() Release a lock, decrementing the recursion level. If after the decrement it is zero, reset the lock to unlocked (not owned by any thread),

and if any other threads are blocked waiting for the lock to become unlocked, allow exactly one of them to proceed. If after the decrement the

recursion level is still nonzero, the lock remains locked and owned by the calling thread.

Only call this method when the calling thread owns the lock. A RuntimeError is raised if this method is called when the lock is unlocked.

There is no return value.](https://image.slidesharecdn.com/ef70f8bd-e9f9-412f-b9f9-5a0dd59c4dac-170105061522/75/concurrency-23-2048.jpg)
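
The "more than one method in the call chain" case mentioned above might look like the sketch below. The `_Account` class and its methods are hypothetical, invented only to show nested `acquire()`/`release()` pairs on a `threading.RLock`.

```python
import threading


class _Account(object):
    '''Hypothetical shared resource whose methods all take the same lock.'''

    def __init__(self):
        self._lock = threading.RLock()
        self.balance = 0

    def deposit(self, amount):
        with self._lock:             # Recursion level rises by one here ...
            self.balance += amount   # ... and drops by one on exit.

    def deposit_twice(self, amount):
        with self._lock:             # Outermost acquire: this thread owns the lock.
            self.deposit(amount)     # Nested acquire by the owner does not block.
            self.deposit(amount)


def rlock_call_chain_example():
    account = _Account()
    account.deposit_twice(5)
    return account.balance


if __name__ == '__main__':
    print(rlock_call_chain_example())
```

With a plain `threading.Lock` in place of the `RLock`, `deposit_twice()` would block forever on the nested acquire.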

![In [13]: logging.basicConfig(level=logging.INFO, format='%(asctime)s (%(threadName)-10s) %(message)s')

def rlocking_example():

lock = threading.Lock()

logging.info('First try to acquire a threading.Lock object: %s' % bool(lock.acquire()))

logging.info('Second try to acquire a threading.Lock object: %s' % bool(lock.acquire(0)))

rlock = threading.RLock()

logging.info('First try to acquire a threading.RLock object: %s' % bool(rlock.acquire()))

logging.info('Second try to acquire a threading.RLock object: %s' % bool(rlock.acquire(0)))

if __name__ == '__main__':

rlocking_example()

Using threading.Lock as Context Managers

Eliminates try/finally block

Cleaner, more readable and safer code

13:34 (MainThread) First try to acquire a threading.Lock object: True

13:34 (MainThread) Second try to acquire a threading.Lock object: False

13:34 (MainThread) First try to acquire a threading.RLock object: True

13:34 (MainThread) Second try to acquire a threading.RLock object: True](https://image.slidesharecdn.com/ef70f8bd-e9f9-412f-b9f9-5a0dd59c4dac-170105061522/75/concurrency-24-2048.jpg)

![In [14]: LOCK = threading.Lock()

def _worker():

logging.info('Waiting for lock ...')

with LOCK:

logging.info('Acquired lock')

logging.info('Doing work on shared resource ...')

time.sleep(random.randint(30, 50) / 10.)

logging.info('Released lock')

def locking_example():

threads = list()

for index in range(1, 3):

thread_inst = threading.Thread(target=_worker, name='Worker %d' % index)

threads.append(thread_inst)

random.shuffle(threads)

[thread_inst.start() for thread_inst in threads] # pylint: disable-msg=expression-not-assigned

logging.info('Waiting for worker threads to finish ...')

main_thread = threading.currentThread()

for thread_inst in threading.enumerate():

if thread_inst is not main_thread:

thread_inst.join(10) # Needed for Jupyter since they overload the threading module!

if __name__ == '__main__':

locking_example()

13:34 (Worker 2 ) Waiting for lock ...

13:34 (Worker 1 ) Waiting for lock ...

13:34 (MainThread) Waiting for worker threads to finish ...

13:34 (Worker 2 ) Acquired lock

13:34 (Worker 2 ) Doing work on shared resource ...

13:39 (Worker 2 ) Released lock

13:39 (Worker 1 ) Acquired lock

13:39 (Worker 1 ) Doing work on shared resource ...

13:44 (Worker 1 ) Released lock](https://image.slidesharecdn.com/ef70f8bd-e9f9-412f-b9f9-5a0dd59c4dac-170105061522/75/concurrency-25-2048.jpg)

![In [15]: CONDITION = threading.Condition()

def _consumer():

logging.info('Starting consumer thread')

with CONDITION:

CONDITION.wait()

logging.info('Resource is available to consumer')

def _producer():

logging.info('Starting producer thread')

with CONDITION:

logging.info('Making resource available')

CONDITION.notifyAll()

def synchronization_example():

thread_inst_consumer_1 = threading.Thread(target=_consumer, name='Consumer 1')

thread_inst_consumer_2 = threading.Thread(target=_consumer, name='Consumer 2')

thread_inst_consumer_3 = threading.Thread(target=_consumer, name='Consumer 3')

thread_inst_producer = threading.Thread(target=_producer, name='Producer')

for thread_inst in (thread_inst_consumer_1, thread_inst_consumer_2, thread_inst_consumer_3):

thread_inst.start()

time.sleep(2)

thread_inst_producer.start()

if __name__ == '__main__':

synchronization_example()

13:44 (Consumer 1) Starting consumer thread

13:44 (Consumer 2) Starting consumer thread

13:44 (Consumer 3) Starting consumer thread

13:46 (Producer ) Starting producer thread

13:46 (Producer ) Making resource available

13:46 (Consumer 2) Resource is available to consumer

13:46 (Consumer 3) Resource is available to consumer

13:46 (Consumer 1) Resource is available to consumer](https://image.slidesharecdn.com/ef70f8bd-e9f9-412f-b9f9-5a0dd59c4dac-170105061522/75/concurrency-27-2048.jpg)
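
The consumer/producer example above relies on `time.sleep(2)` so that the consumers are already waiting when the producer notifies. A common variation, sketched here under the assumption that a shared flag (the hypothetical `state['ready']`) represents the resource, re-checks a predicate around `wait()` so the start order no longer matters. It uses the `notify_all()` spelling of `notifyAll()`.

```python
import threading


def condition_example(num_consumers=3):
    condition = threading.Condition()
    state = {'ready': False}     # Hypothetical flag standing in for the resource.
    seen = []

    def _consumer(name):
        with condition:
            # Loop on the predicate: skip wait() if the resource is already there.
            while not state['ready']:
                condition.wait()
            seen.append(name)

    def _producer():
        with condition:
            state['ready'] = True
            condition.notify_all()   # Wake every waiting consumer.

    consumers = [threading.Thread(target=_consumer, args=('Consumer %d' % index,))
                 for index in range(1, num_consumers + 1)]
    producer = threading.Thread(target=_producer, name='Producer')

    for thread_inst in consumers:
        thread_inst.start()
    producer.start()
    for thread_inst in consumers + [producer]:
        thread_inst.join()
    return sorted(seen)


if __name__ == '__main__':
    print(condition_example())
```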

![Resource Management

threading.Semaphore

Blocks when internal counter reaches 0

Useful applications

Sometimes it is useful to allow more than one worker access to a resource at a time, while still limiting the overall number. For example, a connection

pool might support a fixed number of simultaneous connections, or a network application might support a fixed number of concurrent downloads. A

threading.Semaphore is one way to manage those connections.

class threading.Semaphore([value]) The optional argument gives the initial value for the internal counter; it defaults to 1. If the value given is less than

0, ValueError is raised.

acquire([blocking]) Acquire a semaphore.

When invoked without arguments: if the internal counter is larger than zero on entry, decrement it by one and return immediately. If it is zero on entry,

block, waiting until some other thread has called release() to make it larger than zero. This is done with proper interlocking so that if multiple acquire()

calls are blocked, release() will wake exactly one of them up. The implementation may pick one at random, so the order in which blocked threads are

awakened should not be relied on. There is no return value in this case.

When invoked with blocking set to true, do the same thing as when called without arguments, and return true.

When invoked with blocking set to false, do not block. If a call without an argument would block, return false immediately; otherwise, do the same

thing as when called without arguments, and return true.

release() Release a semaphore, incrementing the internal counter by one. When it was zero on entry and another thread is waiting for it to become

larger than zero again, wake up that thread.](https://image.slidesharecdn.com/ef70f8bd-e9f9-412f-b9f9-5a0dd59c4dac-170105061522/75/concurrency-28-2048.jpg)
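
To make the counter behaviour above concrete, here is a minimal sketch that records how many workers are inside the semaphore at once. The `stats` bookkeeping and the function name are assumptions added for illustration; only `threading.Semaphore` itself comes from the slide.

```python
import threading
import time


def semaphore_example(limit=2, num_workers=5):
    semaphore = threading.Semaphore(limit)   # Internal counter starts at `limit`.
    stats_lock = threading.Lock()            # Protects the bookkeeping below.
    stats = {'active': 0, 'peak': 0}

    def _worker():
        with semaphore:                      # Blocks while the counter is 0.
            with stats_lock:
                stats['active'] += 1
                stats['peak'] = max(stats['peak'], stats['active'])
            time.sleep(0.05)                 # Pretend to use the resource.
            with stats_lock:
                stats['active'] -= 1

    threads = [threading.Thread(target=_worker) for _ in range(num_workers)]
    for thread_inst in threads:
        thread_inst.start()
    for thread_inst in threads:
        thread_inst.join()
    return stats['peak']


if __name__ == '__main__':
    print('Peak concurrent workers: %d' % semaphore_example())
```

The peak should never exceed `limit`, no matter how many workers are started.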

![In [16]: LOCK = threading.Lock()

SEMAPHORE = threading.Semaphore(2)

def _worker():

logging.info('Waiting for lock ...')

with LOCK:

logging.info('Acquired lock')

logging.info('Doing work on shared resource ...')

time.sleep(random.randint(30, 50) / 10.)

logging.info('Released lock')

def arbiter():

logging.info('Waiting to join the pool ...')

with SEMAPHORE:

logging.info('Now part of the pool party!')

_worker()](https://image.slidesharecdn.com/ef70f8bd-e9f9-412f-b9f9-5a0dd59c4dac-170105061522/75/concurrency-29-2048.jpg)

![In [17]: def resource_management_example():

for index in range(1, 4):

thread_inst = threading.Thread(target=arbiter, name='Worker %d' % index)

thread_inst.start()

if __name__ == '__main__':

resource_management_example()

Problems

Added complexity

Deadlock

Livelock

Starvation

13:46 (Worker 1 ) Waiting to join the pool ...

13:46 (Worker 2 ) Waiting to join the pool ...

13:46 (Worker 1 ) Now part of the pool party!

13:46 (Worker 3 ) Waiting to join the pool ...

13:46 (Worker 2 ) Now part of the pool party!

13:46 (Worker 1 ) Waiting for lock ...

13:46 (Worker 2 ) Waiting for lock ...

13:46 (Worker 1 ) Acquired lock

13:46 (Worker 1 ) Doing work on shared resource ...

13:50 (Worker 1 ) Released lock

13:50 (Worker 2 ) Acquired lock

13:50 (Worker 3 ) Now part of the pool party!

13:50 (Worker 2 ) Doing work on shared resource ...

13:50 (Worker 3 ) Waiting for lock ...

13:54 (Worker 2 ) Released lock

13:54 (Worker 3 ) Acquired lock

13:54 (Worker 3 ) Doing work on shared resource ...

13:58 (Worker 3 ) Released lock](https://image.slidesharecdn.com/ef70f8bd-e9f9-412f-b9f9-5a0dd59c4dac-170105061522/75/concurrency-30-2048.jpg)
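
For the "Deadlock" item in the problems list, one widely used mitigation is to acquire locks in a single agreed order. The sketch below is an illustration only: `LOCK_A`, `LOCK_B`, and `_use_both` are made-up names, and ordering by `id()` is just one possible convention.

```python
import threading

LOCK_A = threading.Lock()
LOCK_B = threading.Lock()


def _use_both(first, second, log, label):
    # Acquire in one global order (here: by id()), whatever order the caller
    # passed the locks in. Two threads can then never each hold one lock
    # while waiting for the other.
    ordered = sorted((first, second), key=id)
    with ordered[0]:
        with ordered[1]:
            log.append(label)


def deadlock_avoidance_example():
    log = []
    thread_1 = threading.Thread(target=_use_both,
                                args=(LOCK_A, LOCK_B, log, 'A then B'))
    thread_2 = threading.Thread(target=_use_both,
                                args=(LOCK_B, LOCK_A, log, 'B then A'))
    thread_1.start()
    thread_2.start()
    thread_1.join()
    thread_2.join()
    return sorted(log)


if __name__ == '__main__':
    print(deadlock_avoidance_example())
```

Without the sorting step, each thread could take its first lock and then wait indefinitely for the one held by the other.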