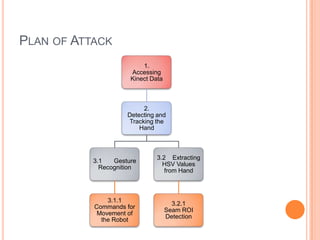

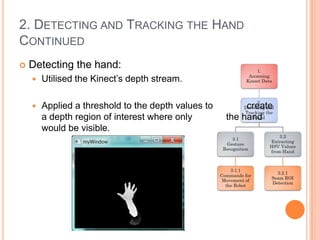

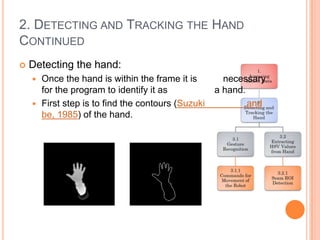

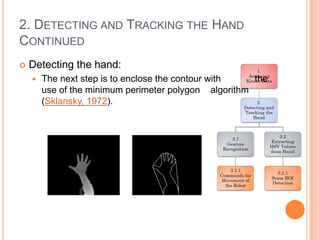

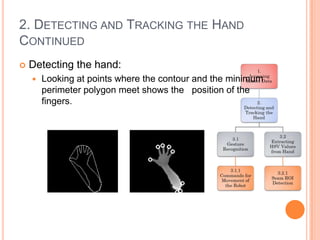

This document describes a project that uses computer vision and a Microsoft Kinect sensor to control a welding robot in real time with hand gestures. The project has two goals: detect and track the user's hand gestures to command robot movement, and define the weld seam region of interest so that the seam can be detected. The plan has five steps: access the Kinect data, detect and track the hand in 3D space, recognize gestures that map to robot movement commands, extract color values from the hand for skin detection, and use the hand position to define the seam region of interest. So far, the work has identified the hand and fingers, tracked hand motion, and extracted the seam region. The gesture commands still need to be finalized, and robot control still needs to be integrated.
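
Two of the steps named in the plan, sampling color values at the hand for skin detection and using the hand position to define a region of interest, can be illustrated with a minimal sketch. This is not the project's implementation: the names `Roi`, `roi_around_hand`, `mean_color`, and `skin_mask` are hypothetical, the frame is assumed to be a plain nested list of `(r, g, b)` tuples rather than a Kinect stream, the hand pixel is assumed to lie inside the frame, and the per-channel tolerance test is a simple stand-in for whatever skin model the project actually uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Roi:
    """Axis-aligned pixel window; right and bottom are exclusive (hypothetical type)."""
    left: int
    top: int
    right: int
    bottom: int


def roi_around_hand(hand_x, hand_y, half_size, width, height):
    """Square window centred on the tracked hand pixel, clamped to the frame.

    Assumes (hand_x, hand_y) is inside the frame, so the window is never empty.
    """
    return Roi(
        left=max(0, hand_x - half_size),
        top=max(0, hand_y - half_size),
        right=min(width, hand_x + half_size + 1),
        bottom=min(height, hand_y + half_size + 1),
    )


def mean_color(frame, roi):
    """Average (r, g, b) over the window; frame[y][x] is an (r, g, b) tuple."""
    pixels = [
        frame[y][x]
        for y in range(roi.top, roi.bottom)
        for x in range(roi.left, roi.right)
    ]
    count = len(pixels)
    return tuple(sum(p[c] for p in pixels) / count for c in range(3))


def skin_mask(frame, reference, tolerance):
    """True where every channel is within `tolerance` of the reference color.

    Assumption: a per-channel threshold stands in for a real skin model.
    """
    return [
        [all(abs(p[c] - reference[c]) <= tolerance for c in range(3)) for p in row]
        for row in frame
    ]
```

In use, the hand position reported by the tracker would be passed to `roi_around_hand`, the resulting window to `mean_color` to obtain a reference skin color, and that reference to `skin_mask` to label matching pixels across the frame. The same clamped-window idea could mark out the seam region once the hand has indicated where the seam lies.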