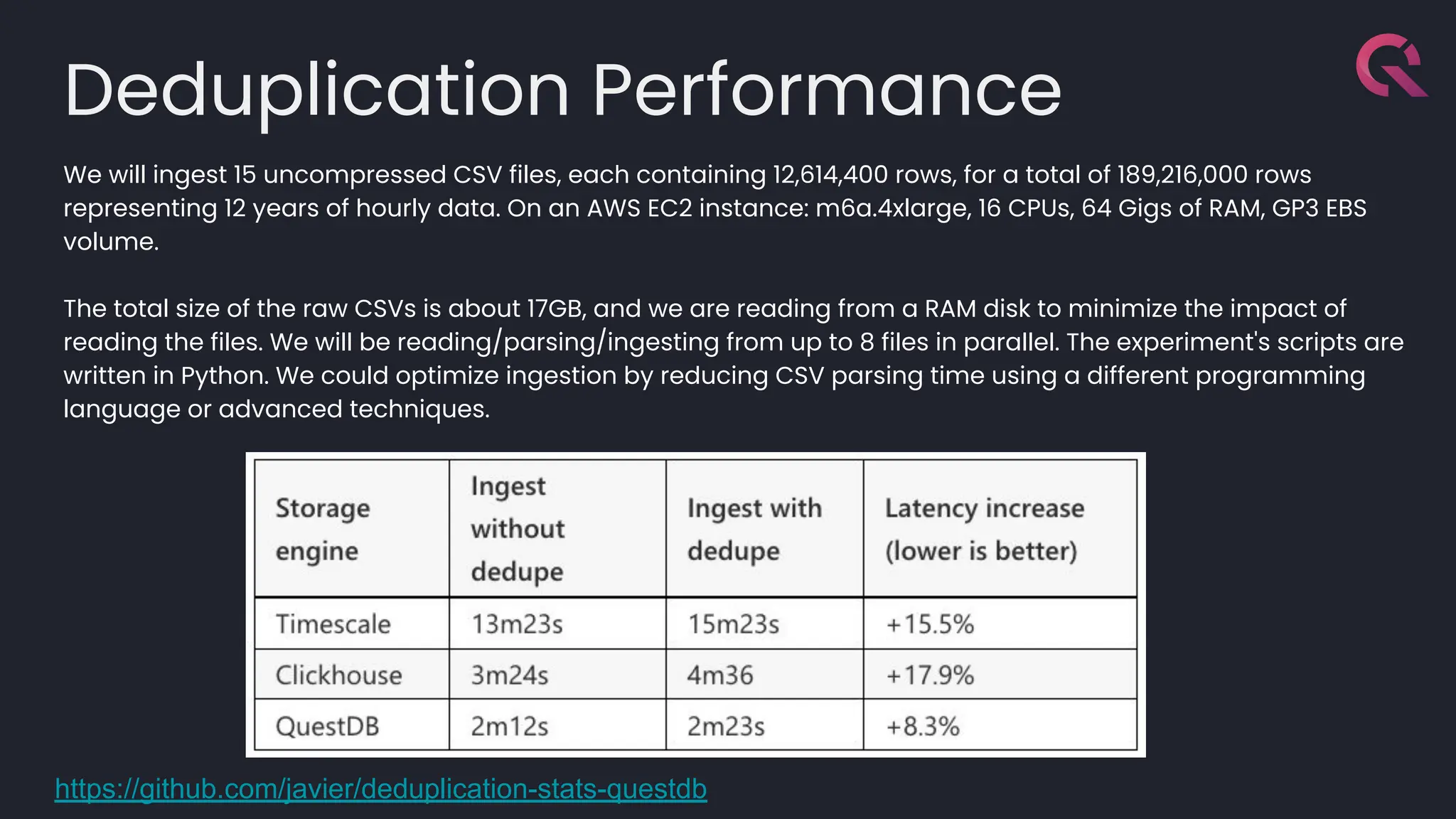

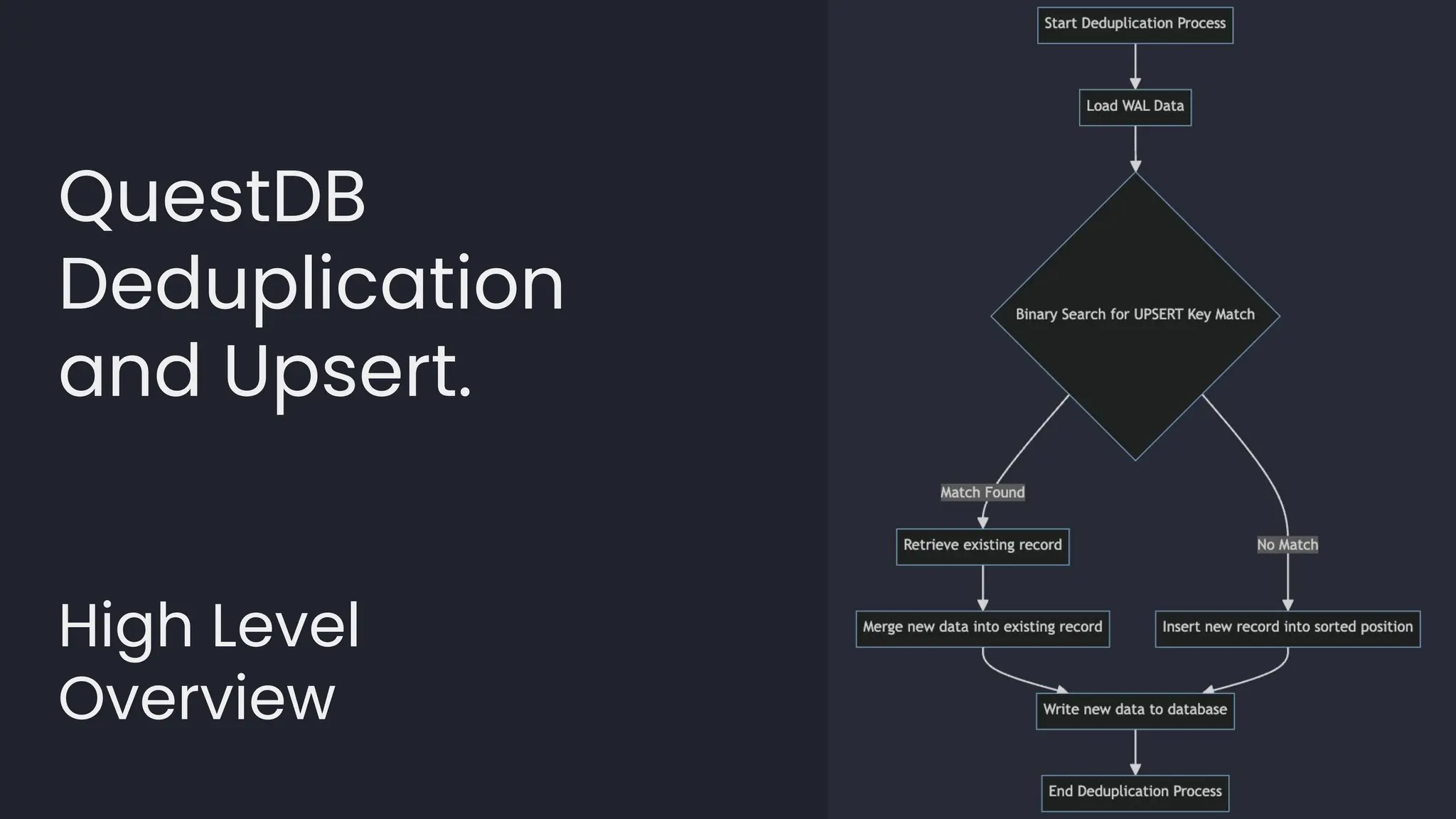

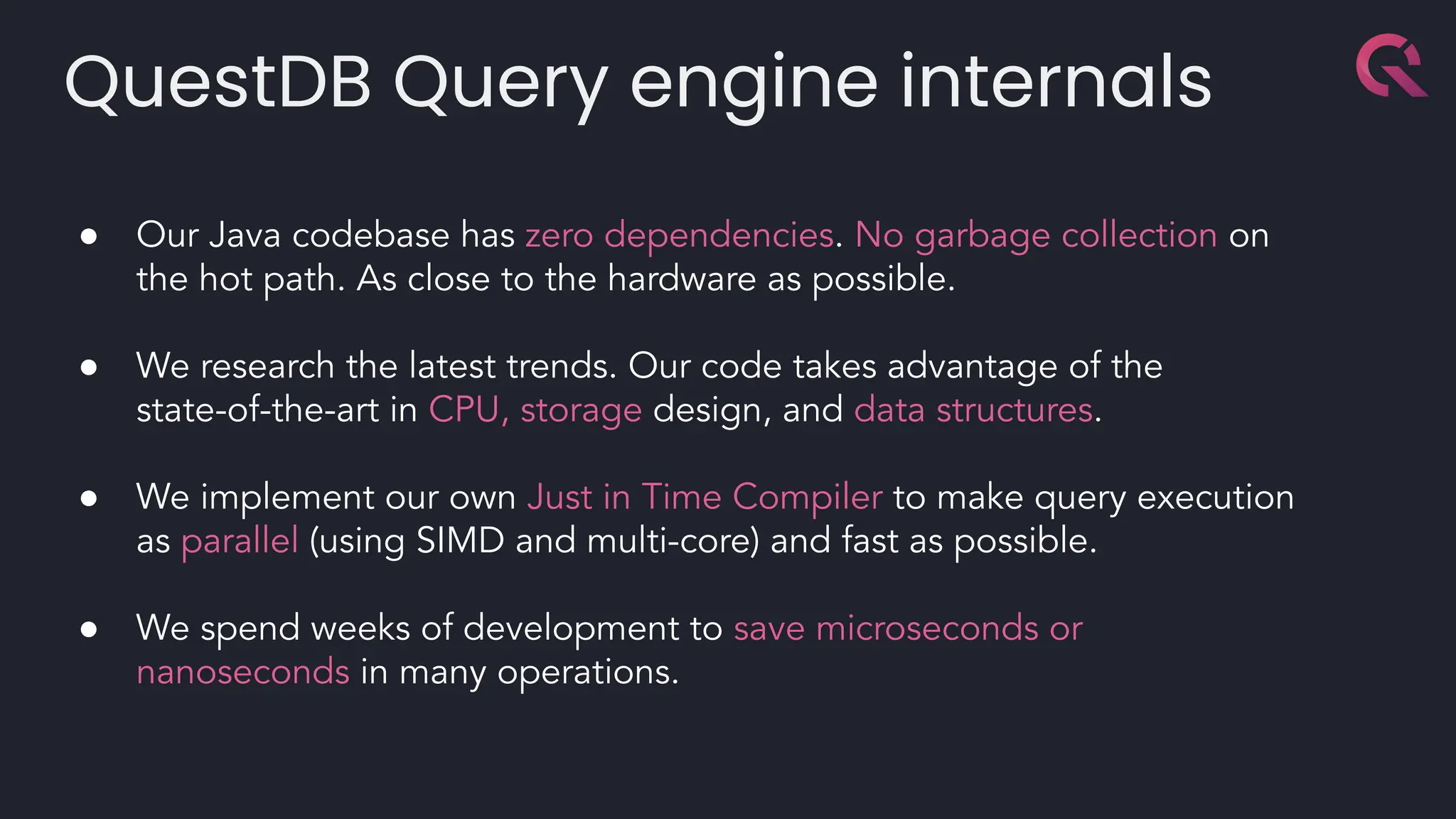

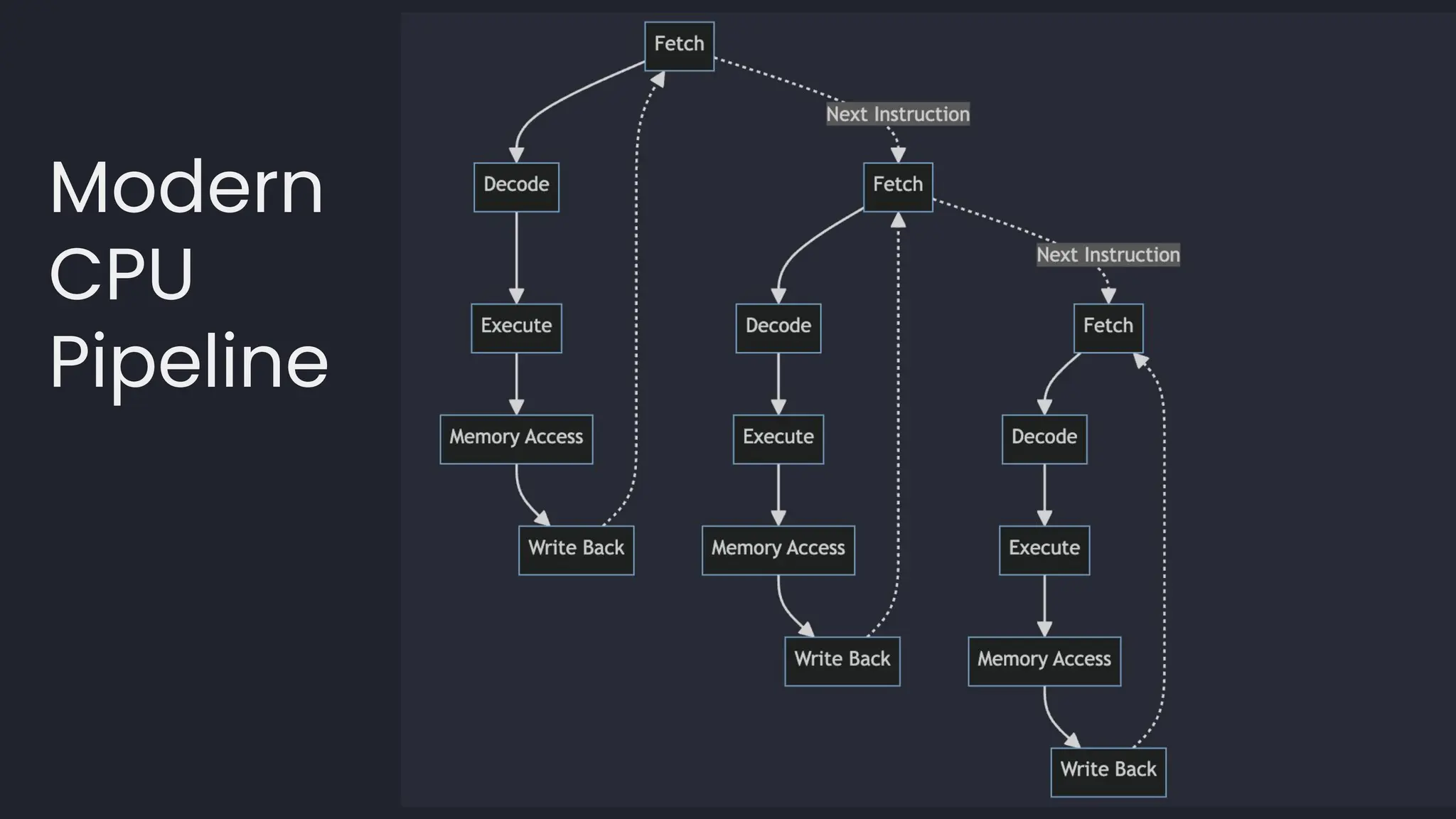

This document explains how QuestDB implements "exactly once" semantics in its high-performance database, addressing the challenges of streaming data ingestion. It covers QuestDB's architecture, including its optimized storage and deduplication, with technical details for developers and prospective users. The presentation also highlights the database's performance and its open-source nature, and walks through use cases and examples of data ingestion and processing.
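One common way to get exactly-once results from an at-least-once delivery pipeline is to make re-delivery idempotent through deduplication. The following is a minimal sketch, assuming rows are deduplicated on a hypothetical `(timestamp, symbol)` key with last-write-wins semantics. `Row` and `DedupTable` are illustrative names, not QuestDB internals, and a `std::map` stands in for real storage.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical row shape, loosely modelled on the trade tables in the slides.
struct Row {
    int64_t ts;          // designated timestamp
    std::string symbol;  // part of the assumed deduplication key
    double price;
    int64_t qty;
};

// Toy table that deduplicates on (ts, symbol): a row whose key already
// exists replaces the stored row instead of being appended a second time.
class DedupTable {
public:
    void insert_batch(const std::vector<Row>& batch) {
        for (const Row& r : batch) {
            rows_[{r.ts, r.symbol}] = r;  // upsert: last write for a key wins
        }
    }

    std::size_t size() const { return rows_.size(); }

    const Row& get(int64_t ts, const std::string& symbol) const {
        return rows_.at({ts, symbol});  // throws std::out_of_range if absent
    }

private:
    std::map<std::pair<int64_t, std::string>, Row> rows_;
};
```

Because the second `insert_batch` of the same rows overwrites rather than appends, a client can resend a batch after a timeout without creating duplicates.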

![Parallelism in the Write-Ahead Log: client connections C1, C2, C3; WAL writers W1, W2, W3 holding transactions tx01 to tx12; sequencer order W1[0], W1[1], W3[0], W2[0], …](https://image.slidesharecdn.com/questexactlyoncecommitconf-240704152526-ea886719/75/Como-hemos-implementado-semantica-de-Exactly-Once-en-nuestra-base-de-datos-de-alto-rendimiento-16-2048.jpg)
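The figure shows WAL writers committing independently while a sequencer records one global order (W1[0], W1[1], W3[0], W2[0], …). The following is a minimal sketch of that ordering step, assuming the sequencer simply serializes commit registrations under a lock. `Sequencer`, `SeqEntry` and `next_txn` are hypothetical names; the slides do not show QuestDB's actual sequencer code.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <mutex>
#include <vector>

// One entry in the hypothetical sequencer log: which WAL writer produced
// the transaction and that writer's local transaction number.
struct SeqEntry {
    int writer_id;    // e.g. 1 for W1
    int64_t seg_txn;  // local number within the writer: W1[0], W1[1], ...
};

// Toy sequencer: writers append to their own WAL in parallel, then register
// each commit here to obtain a single, gap-free global transaction number.
class Sequencer {
public:
    // Returns the global transaction number, starting at 1.
    int64_t next_txn(int writer_id, int64_t seg_txn) {
        std::lock_guard<std::mutex> lock(mu_);
        log_.push_back({writer_id, seg_txn});
        return static_cast<int64_t>(log_.size());
    }

    // Looks up which writer transaction was assigned global number `txn`.
    SeqEntry at(int64_t txn) const {
        std::lock_guard<std::mutex> lock(mu_);
        assert(txn >= 1 && txn <= static_cast<int64_t>(log_.size()));
        return log_[static_cast<std::size_t>(txn - 1)];
    }

private:
    mutable std::mutex mu_;
    std::vector<SeqEntry> log_;
};
```

Registering W1[0], W1[1], W3[0], W2[0] in that order yields global transactions 1 to 4, which a single consumer can then apply to the table in sequence.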

![Out-of-order Merge: WAL writers W1, W2, W3 with sequencer order W1[0], W1[1], W3[0], W2[0], …; the rows of tx01 and tx02 have interleaving timestamps](https://image.slidesharecdn.com/questexactlyoncecommitconf-240704152526-ea886719/75/Como-hemos-implementado-semantica-de-Exactly-Once-en-nuestra-base-de-datos-de-alto-rendimiento-17-2048.jpg)

tx01

| ts | price | symbol | qty |
|---|---|---|---|
| ts01 | 178.08 | AAPL | 1000 |
| ts02 | 148.66 | GOOGL | 400 |
| ts03 | 424.86 | MSFT | 5000 |
| ts10 | 178.09 | AMZN | 100 |
| ts11 | 505.08 | META | 2500 |
| ts12 | 394.14 | GS | 2000 |
| … | … | … | … |

tx02

| ts | price | symbol | qty |
|---|---|---|---|
| ts04 | 192.42 | JPM | 5000 |
| ts05 | 288.78 | V | 300 |
| ts06 | 156.40 | JNJ | 6500 |
| ts07 | 181.62 | AMD | 7800 |
| ts08 | 37.33 | BAC | 1500 |
| ts09 | 60.83 | KO | 4000 |
| … | … | … | … |
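The tables show why a merge is needed: the timestamps of tx02 (ts04 to ts09) fall between rows of tx01 (ts01 to ts03 and ts10 to ts12). The following is a minimal sketch of merging two such transactions. It assumes that each transaction is already sorted by timestamp and maps ts01 to ts12 onto the integers 1 to 12. `Trade` and `o3_merge` are hypothetical names. The row-oriented layout also ignores the fact that QuestDB stores data by column.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <iterator>
#include <string>
#include <vector>

// Hypothetical row type mirroring the slide's columns.
struct Trade {
    int64_t ts;  // timestamp; ts01..ts12 become 1..12 here
    double price;
    std::string symbol;
    int64_t qty;
};

// Merges two runs that are each sorted by timestamp but whose timestamp
// ranges overlap into one timestamp-ordered run. std::merge is stable:
// on equal timestamps, rows from `committed` precede rows from `incoming`.
std::vector<Trade> o3_merge(const std::vector<Trade>& committed,
                            const std::vector<Trade>& incoming) {
    std::vector<Trade> out;
    out.reserve(committed.size() + incoming.size());
    std::merge(committed.begin(), committed.end(),
               incoming.begin(), incoming.end(),
               std::back_inserter(out),
               [](const Trade& a, const Trade& b) { return a.ts < b.ts; });
    return out;
}
```

Applied to the slide's rows, the merge yields ts01 to ts12 in order, with JPM (ts04) directly after MSFT (ts03) and AMZN (ts10) directly after KO (ts09).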

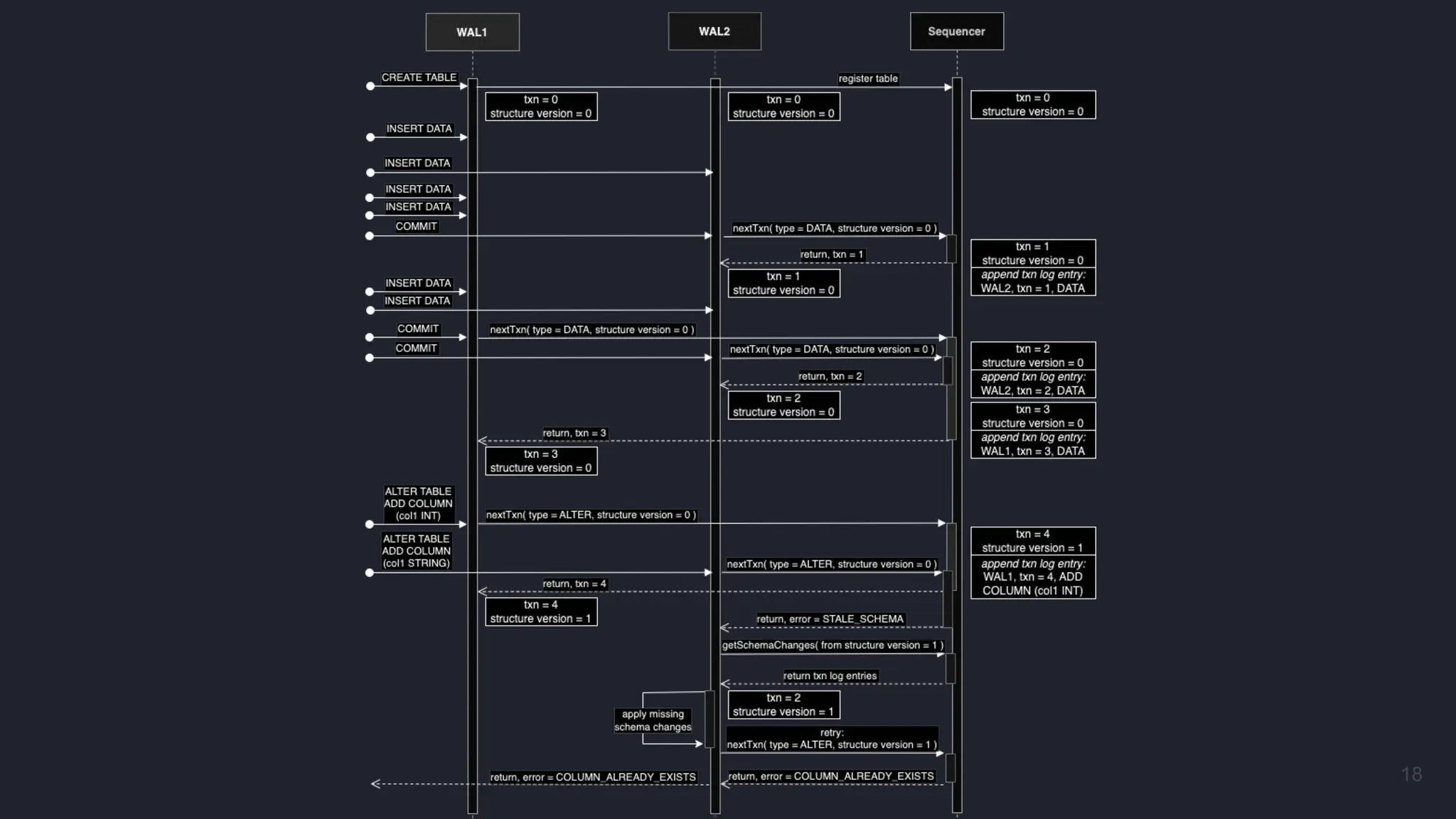

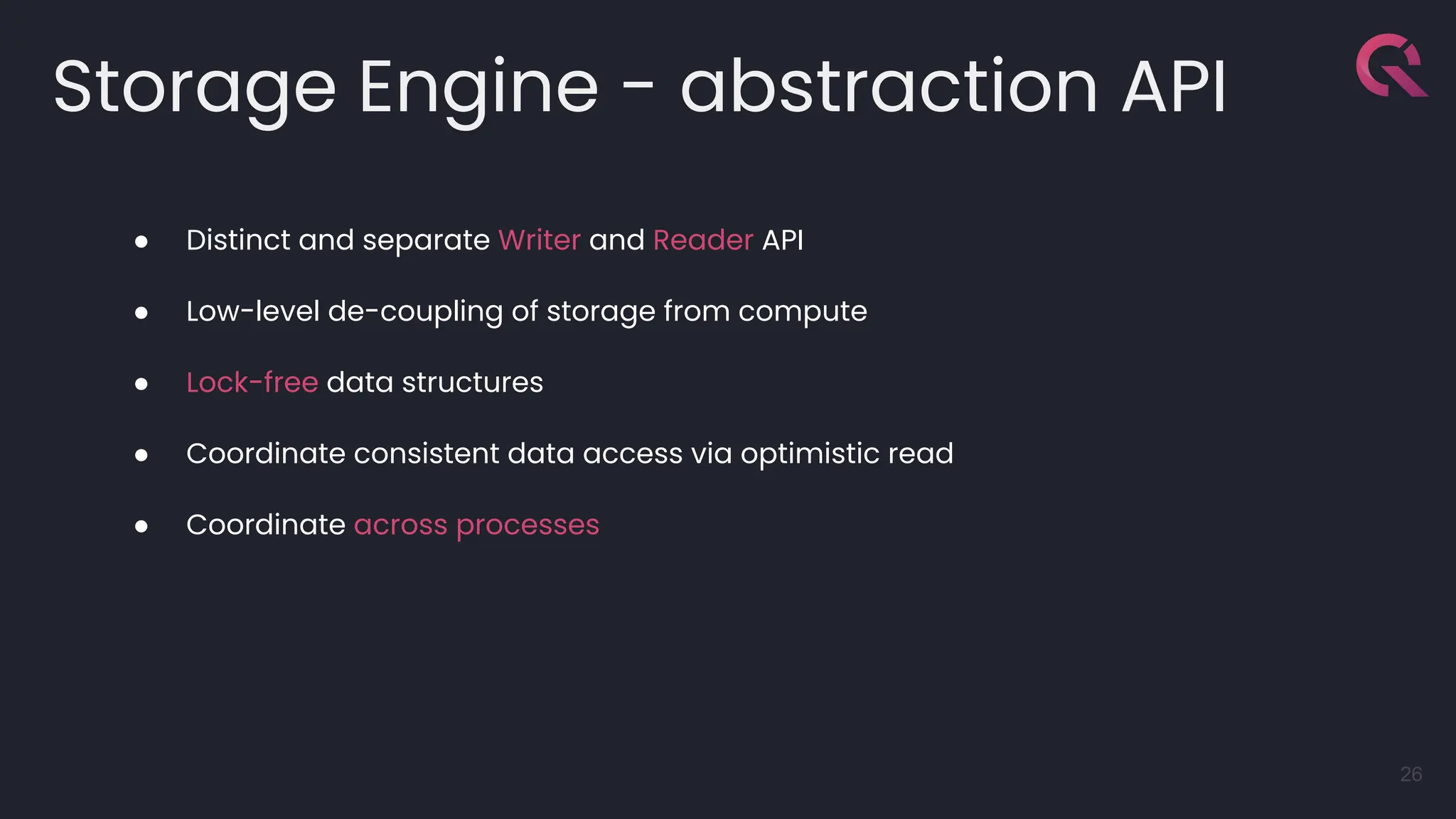

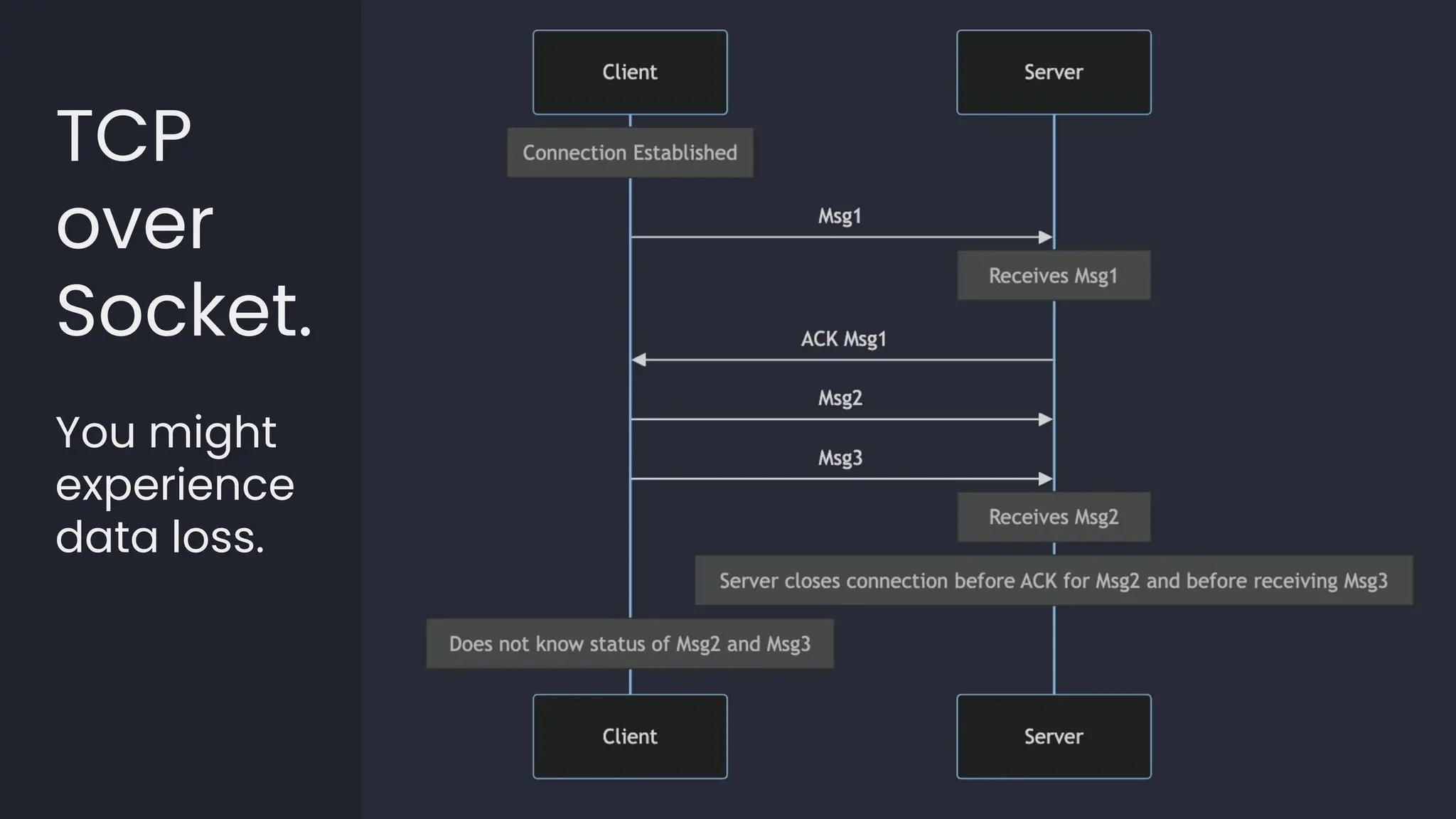

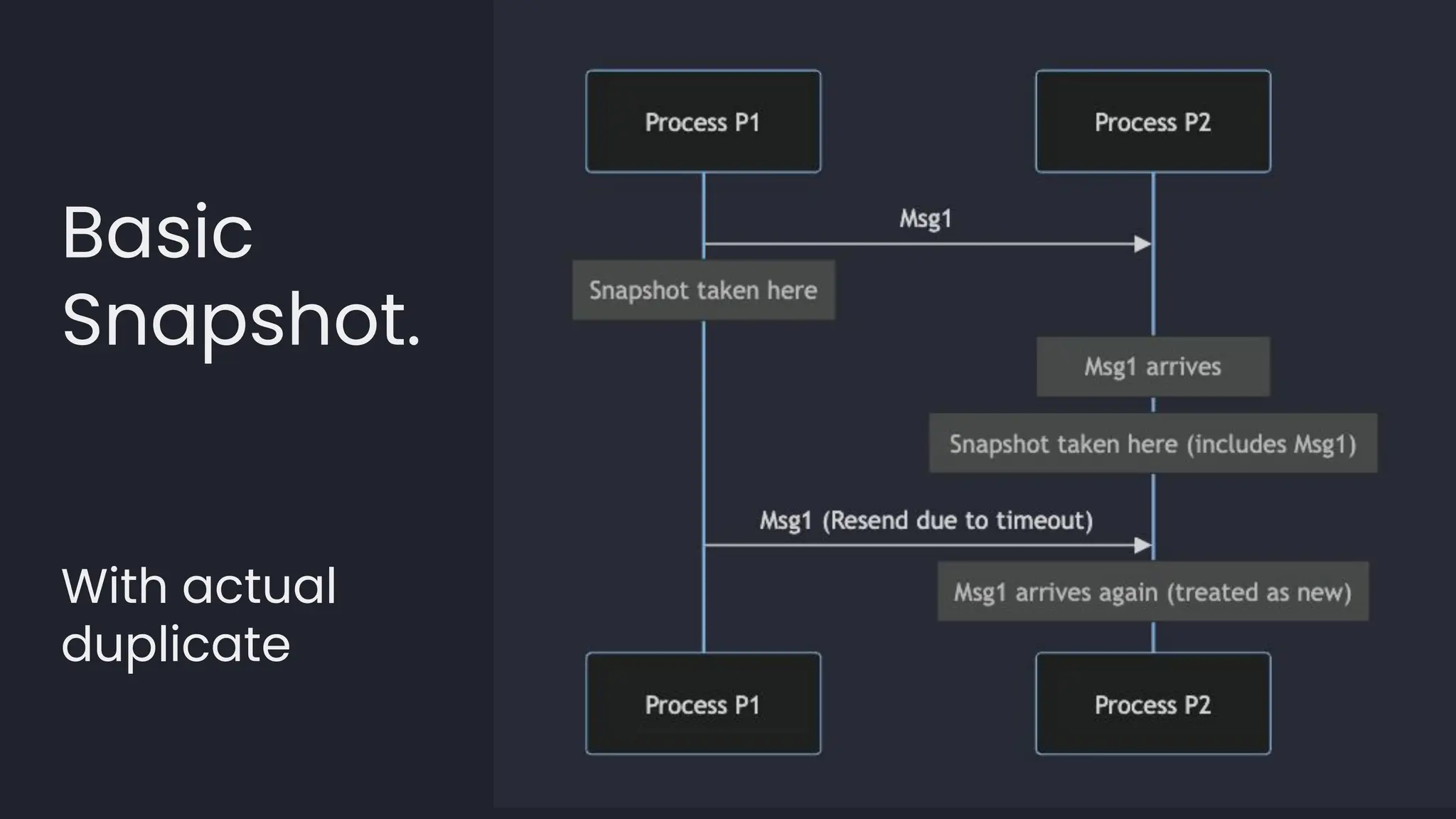

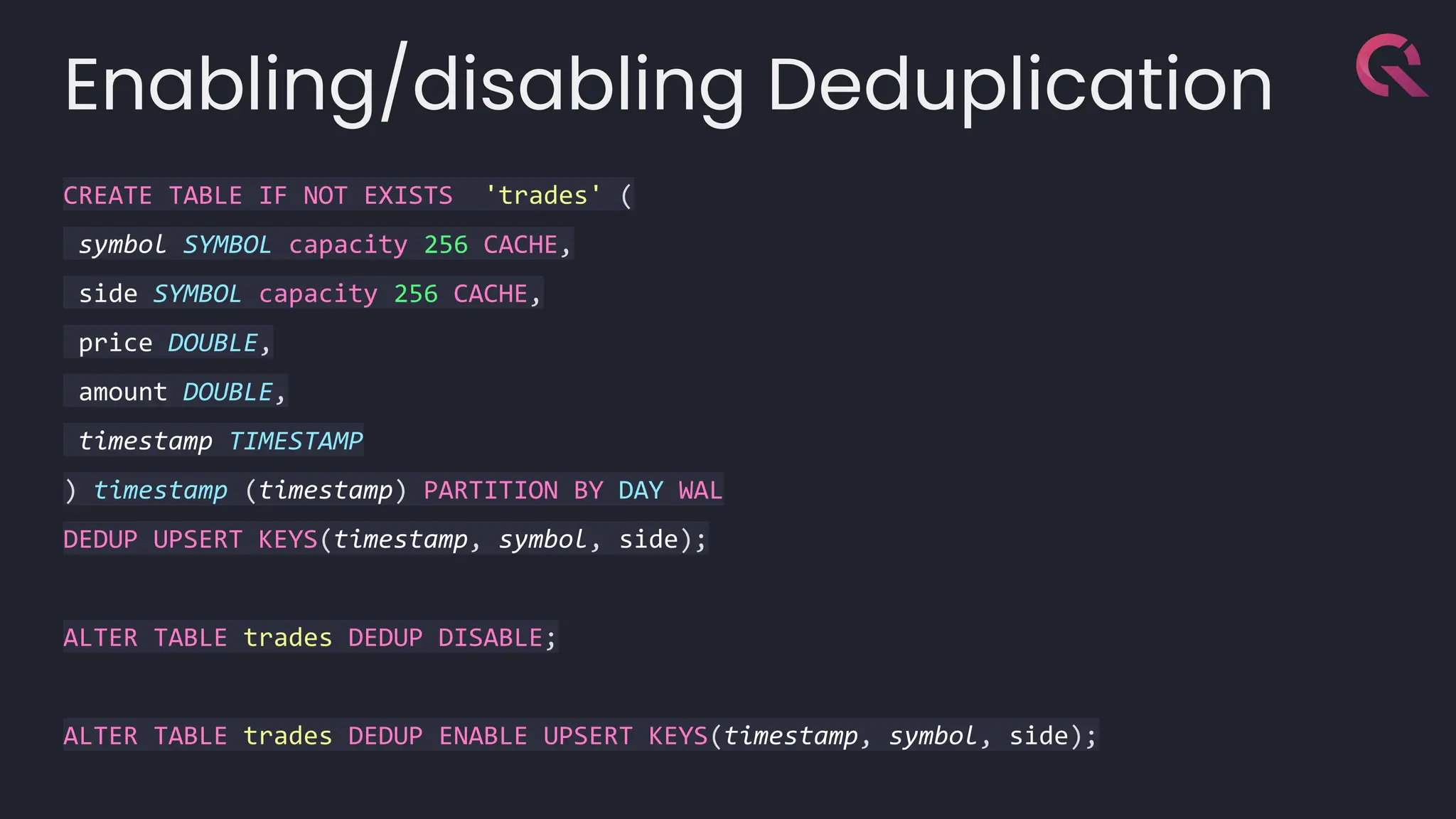

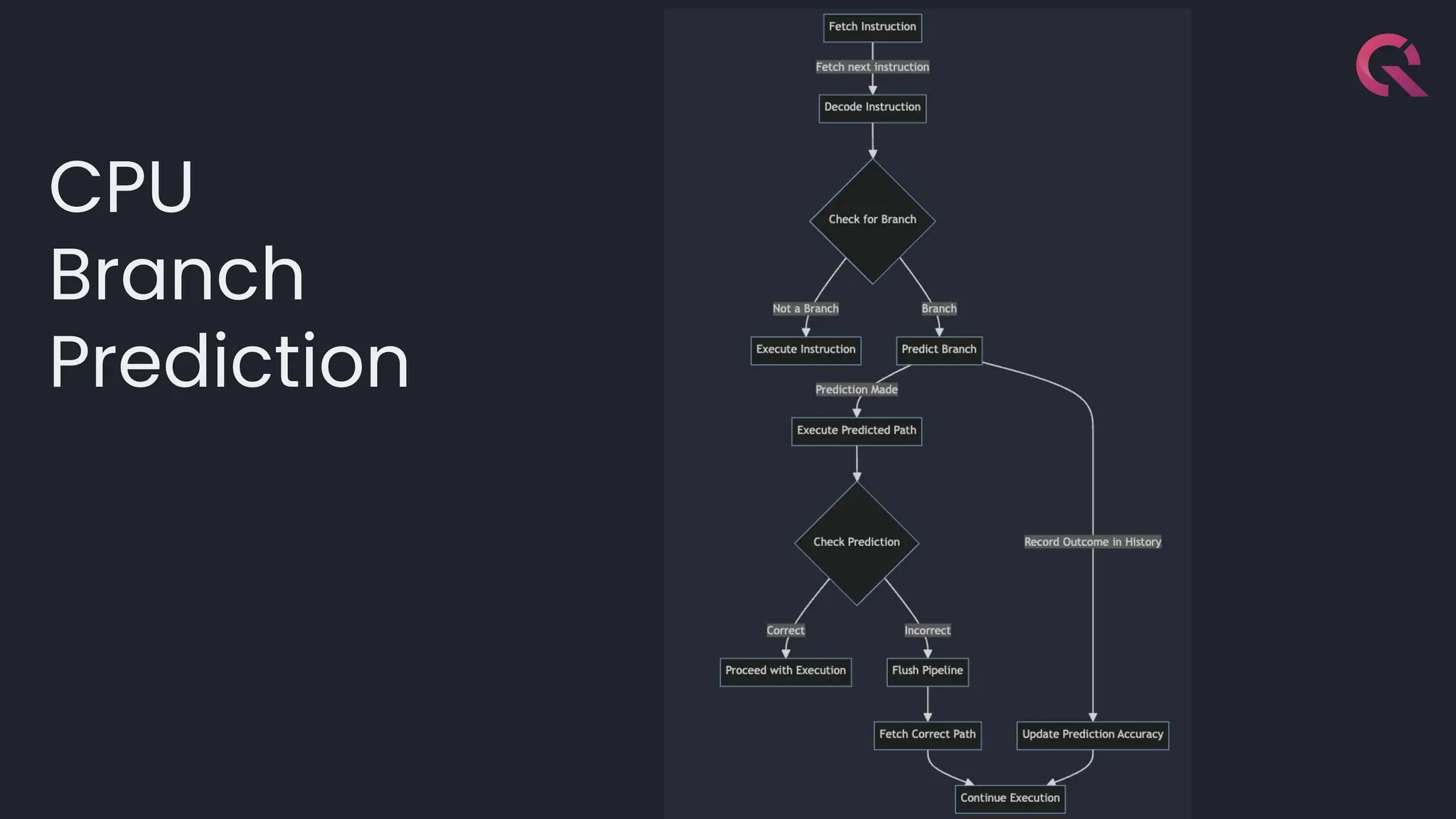

![Binary search by timestamp (not actual implementation)](https://image.slidesharecdn.com/questexactlyoncecommitconf-240704152526-ea886719/75/Como-hemos-implementado-semantica-de-Exactly-Once-en-nuestra-base-de-datos-de-alto-rendimiento-52-2048.jpg)

```cpp
#include <cstdint>

// index_t is not shown on the slide; only its member `i` is used here.
// compare returns a negative, zero or positive value, like a three-way comparison.
template<typename LambdaDiff>
inline int64_t conventional_branching_search(const index_t *array, int64_t count,
                                             int64_t value_index, LambdaDiff compare) {
    int64_t low = 0;
    int64_t high = count - 1;
    while (low <= high) {
        int64_t mid = (low + high) / 2;
        auto diff = compare(value_index, array[mid].i);
        if (diff == 0) {
            return mid;      // Found the element
        } else if (diff < 0) {
            high = mid - 1;  // Search in the left half
        } else {
            low = mid + 1;   // Search in the right half
        }
    }
    return -1;  // Element not found
}
```
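For comparison, the same lookup can be expressed with `std::lower_bound` from `<algorithm>`. This sketch assumes that `index_t` is a hypothetical struct exposing only the member `i` used on the slide, and that `compare` returns negative, zero or positive like the slide's `diff`. `lower_bound_search` is an illustrative name. Unlike the loop above, it returns the first match when several entries compare equal.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for the slide's index_t; only the row number `i`
// is modelled.
struct index_t {
    int64_t i;
};

// Returns the position of the first entry that compares equal to
// value_index, or -1 if there is none.
template <typename LambdaDiff>
int64_t lower_bound_search(const index_t* array, int64_t count,
                           int64_t value_index, LambdaDiff compare) {
    const index_t* end = array + count;
    // std::lower_bound needs "element < value", i.e. compare(value, element) > 0.
    const index_t* it = std::lower_bound(
        array, end, value_index,
        [&](const index_t& e, int64_t v) { return compare(v, e.i) > 0; });
    if (it != end && compare(value_index, it->i) == 0) {
        return it - array;
    }
    return -1;
}
```

Here `compare` usually dereferences both arguments into a timestamp column, so the search runs over row numbers while ordering by timestamp.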

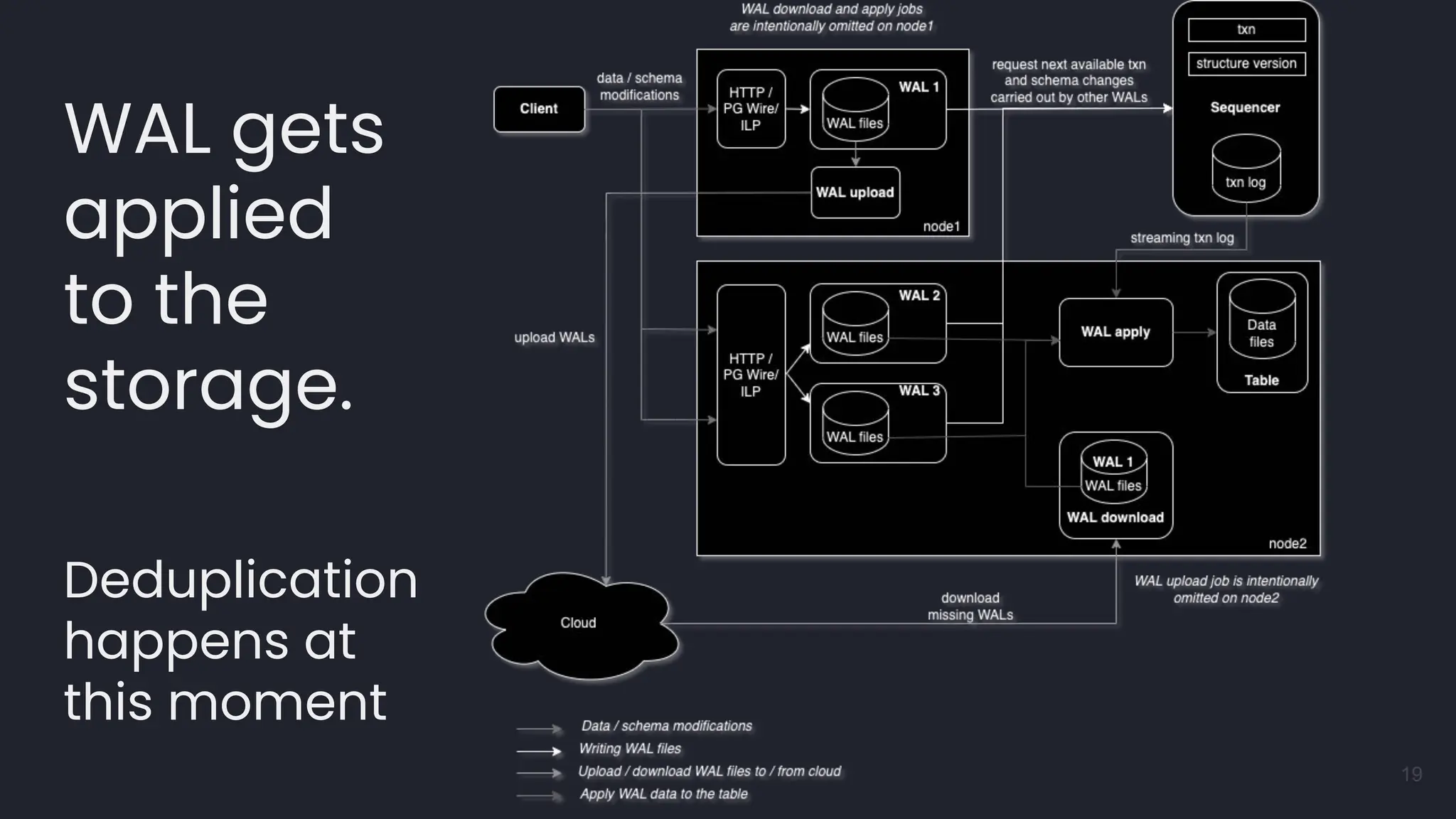

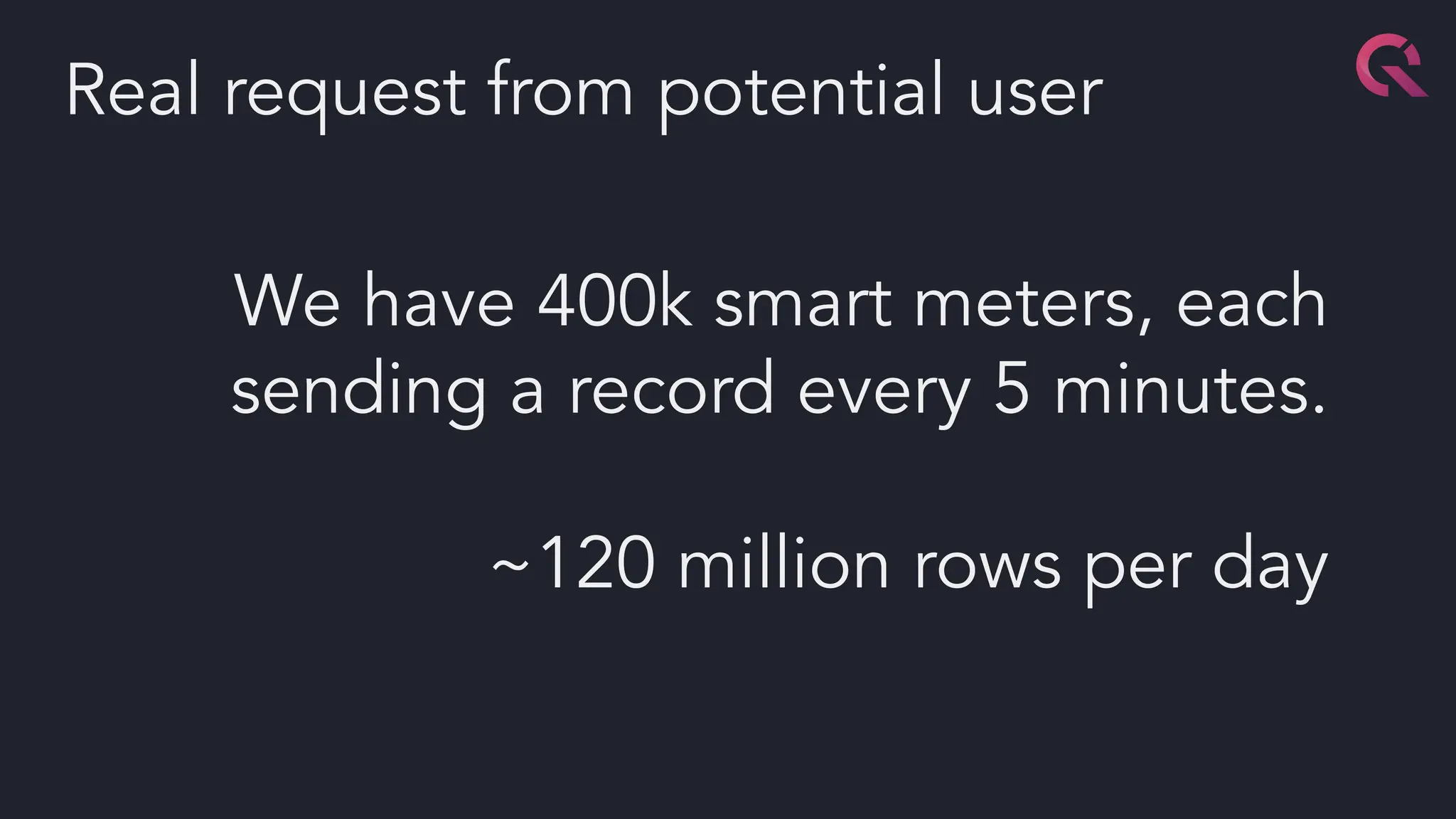

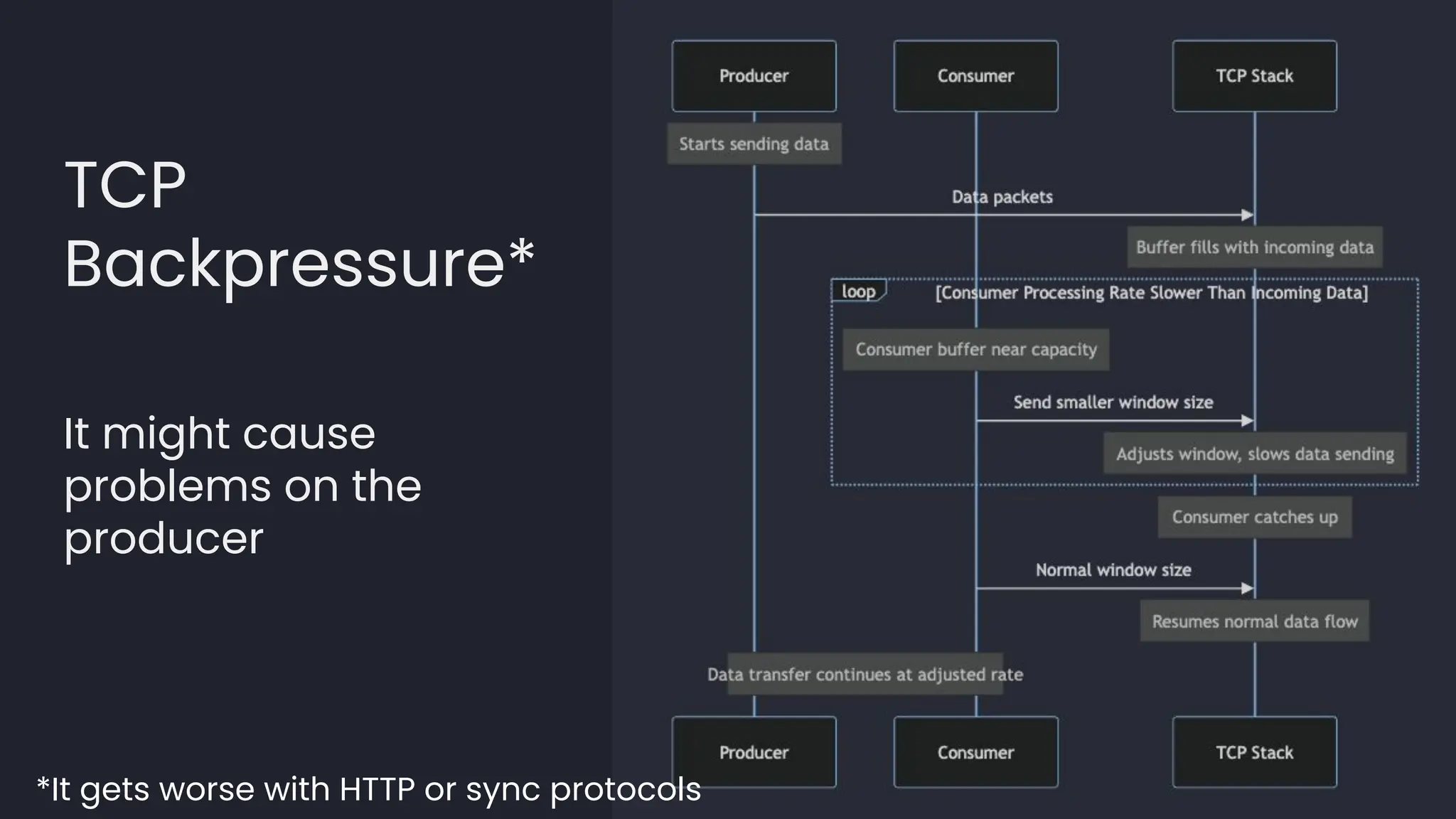

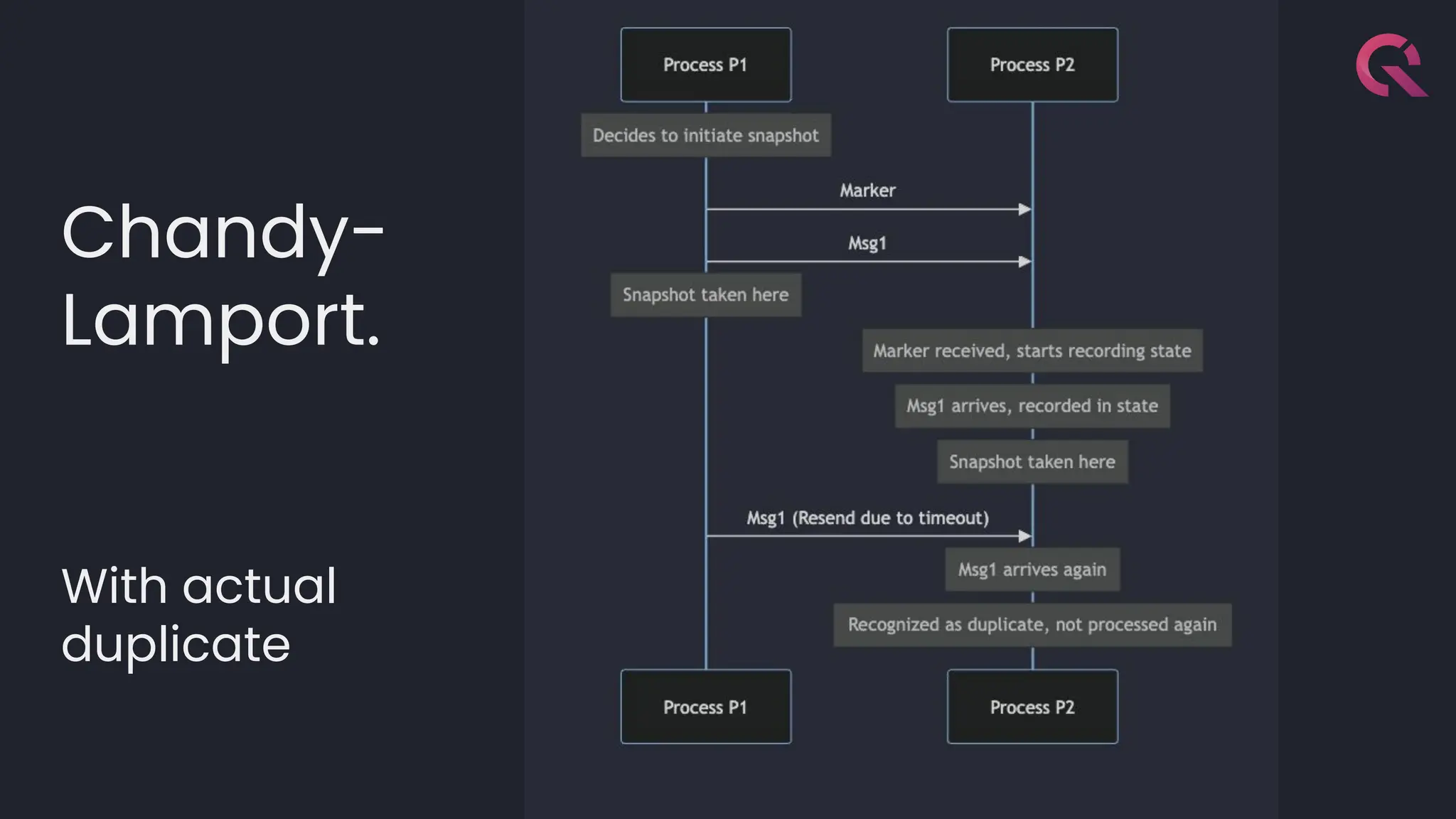

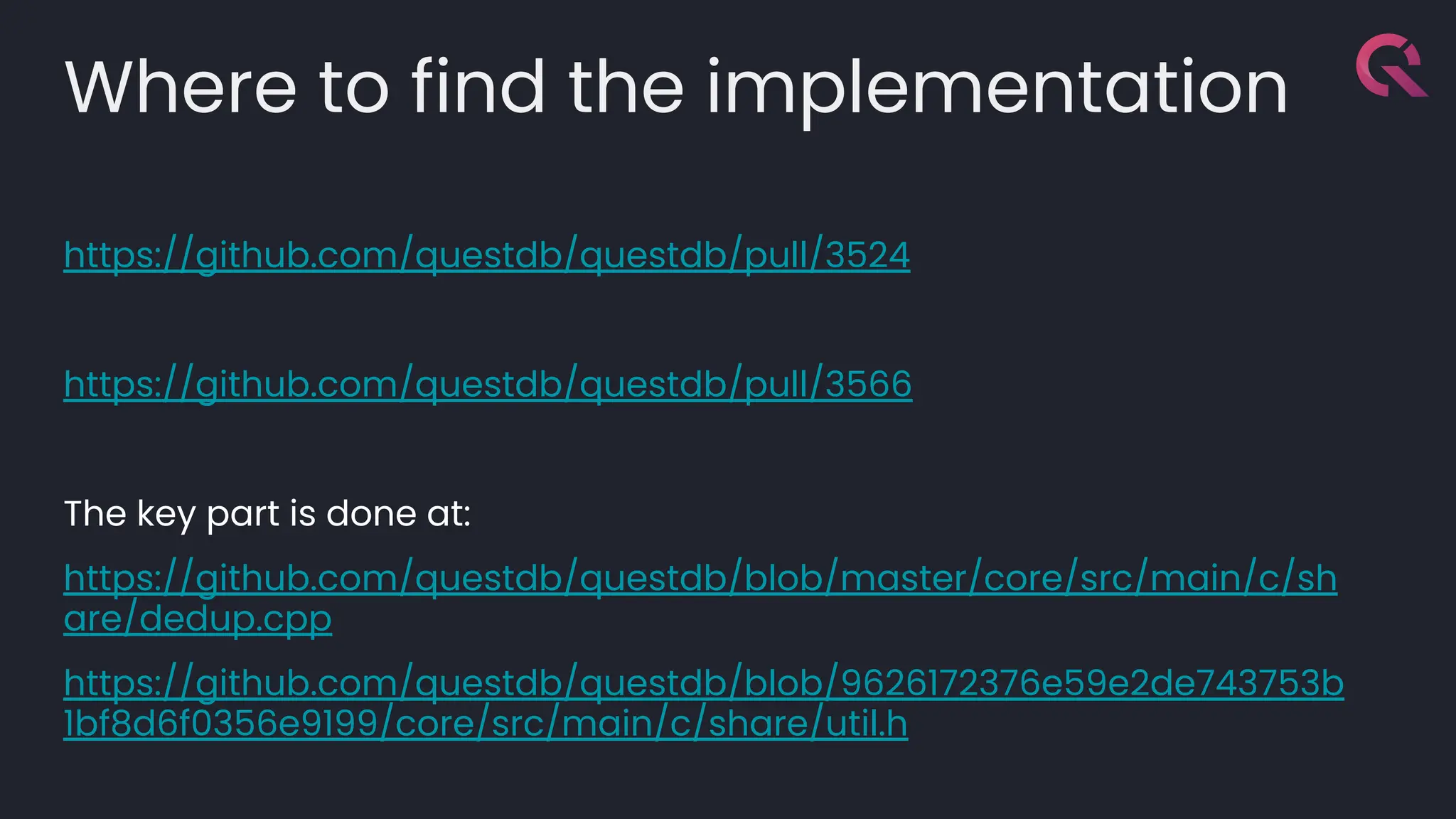

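![“Branch-free” binary search by timestamp](https://image.slidesharecdn.com/questexactlyoncecommitconf-240704152526-ea886719/75/Como-hemos-implementado-semantica-de-Exactly-Once-en-nuestra-base-de-datos-de-alto-rendimiento-56-2048.jpg)

```cpp
#include <cstdint>

// index_t and MM_PREFETCH_T0 are defined elsewhere and not shown on the slide.
template<typename LambdaDiff>
inline int64_t branch_free_search(const index_t *array, int64_t count,
                                  int64_t value_index, LambdaDiff compare) {
    if (count <= 0) {
        return -1;  // empty input: base[0] below would be out of bounds
    }
    const index_t *base = array;
    int64_t n = count;
    while (n > 1) {
        int64_t half = n / 2;
        // Prefetch around both candidate midpoints of the next iteration.
        MM_PREFETCH_T0(base + half / 2);
        MM_PREFETCH_T0(base + half + half / 2);
        auto diff = compare(value_index, base[half].i);
        base = (diff > 0) ? base + half : base;  // select instead of branch
        n -= half;
    }
    // base is the last entry smaller than the target, or the first entry,
    // so a match can only be at base[0] or base[1].
    if (compare(value_index, base[0].i) == 0) {
        return base - array;
    }
    if (base - array + 1 < count && compare(value_index, base[1].i) == 0) {
        return base - array + 1;
    }
    return -1;
}
```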
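The same idea can be stripped to its core as a sketch: a branch-free lower bound over a plain sorted timestamp column, without the `index_t` indirection or the comparison lambda. This is the widely used lower-bound form of the technique, not QuestDB's code. `branch_free_lower_bound` is a hypothetical name. `__builtin_prefetch` is the GCC/Clang builtin, substituted here for the slide's `MM_PREFETCH_T0` macro, whose definition is not shown.

```cpp
#include <cassert>
#include <cstdint>

// Branch-free lower bound: returns the first position whose timestamp is
// >= target, or count if every timestamp is smaller.
inline int64_t branch_free_lower_bound(const int64_t* ts, int64_t count,
                                       int64_t target) {
    if (count <= 0) {
        return 0;
    }
    const int64_t* base = ts;
    int64_t n = count;
    while (n > 1) {
        int64_t half = n / 2;
        // Warm the cache around both possible next probes.
        __builtin_prefetch(base + half / 2);
        __builtin_prefetch(base + half + half / 2);
        // The loop has a fixed trip count for a given n; this select is
        // typically compiled to a conditional move rather than a jump.
        base = (base[half] < target) ? base + half : base;
        n -= half;
    }
    // base is the last element < target, or ts[0]; step past it if needed.
    return (base - ts) + (*base < target);
}
```

Because the number of iterations depends only on `count`, not on the data, the CPU has no data-dependent branch to mispredict inside the loop. The cost is always doing the full number of halvings.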