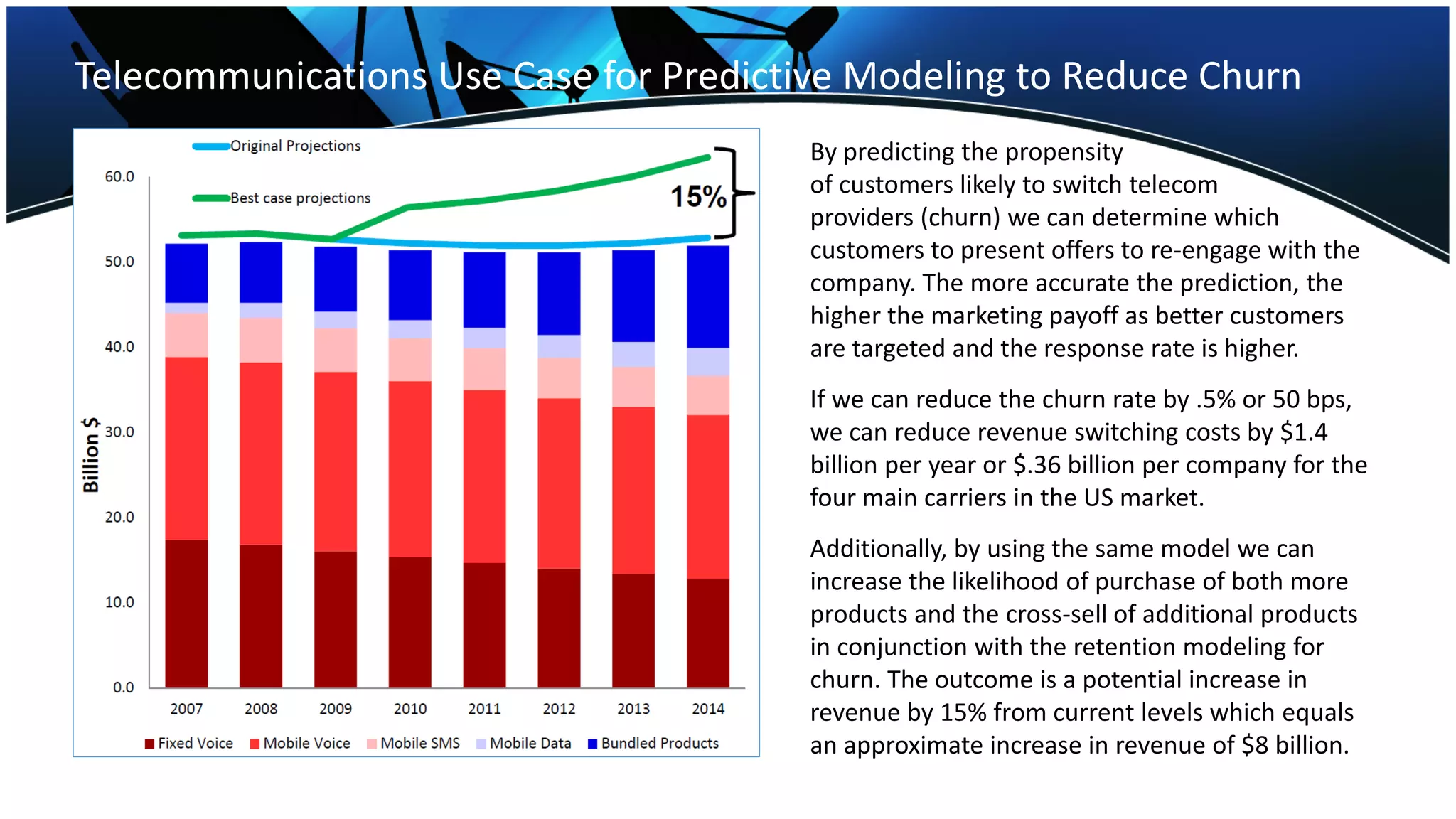

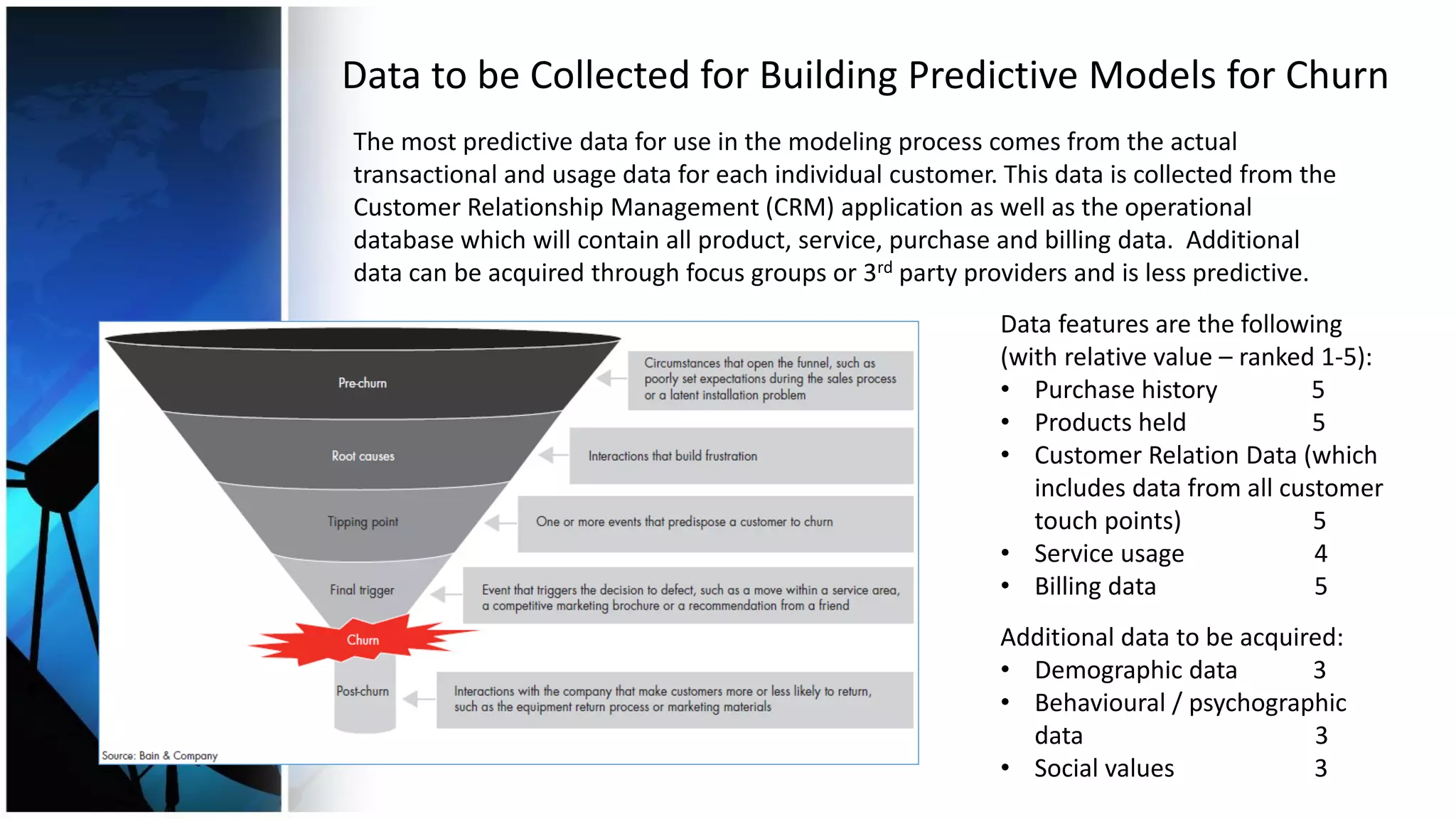

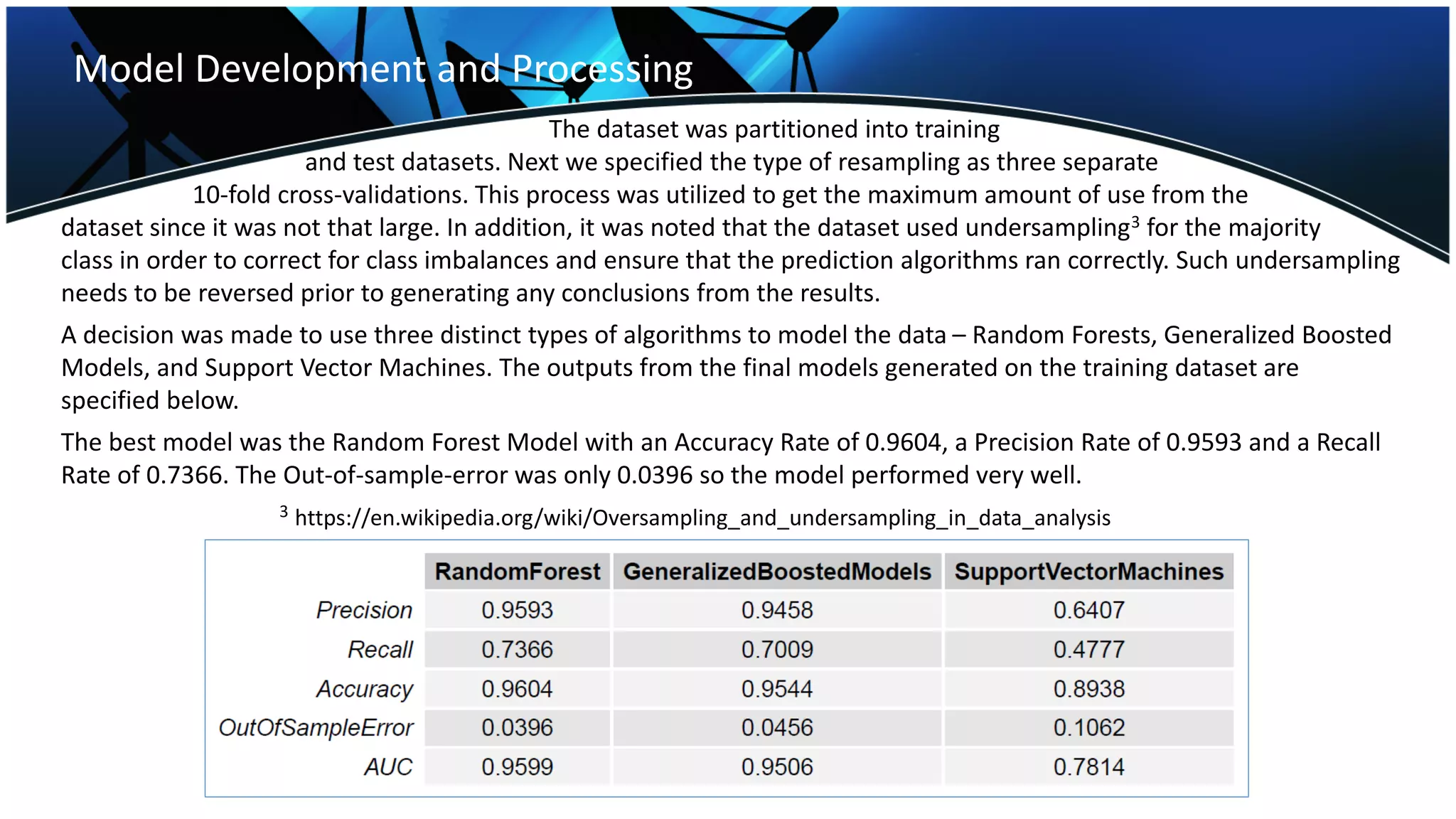

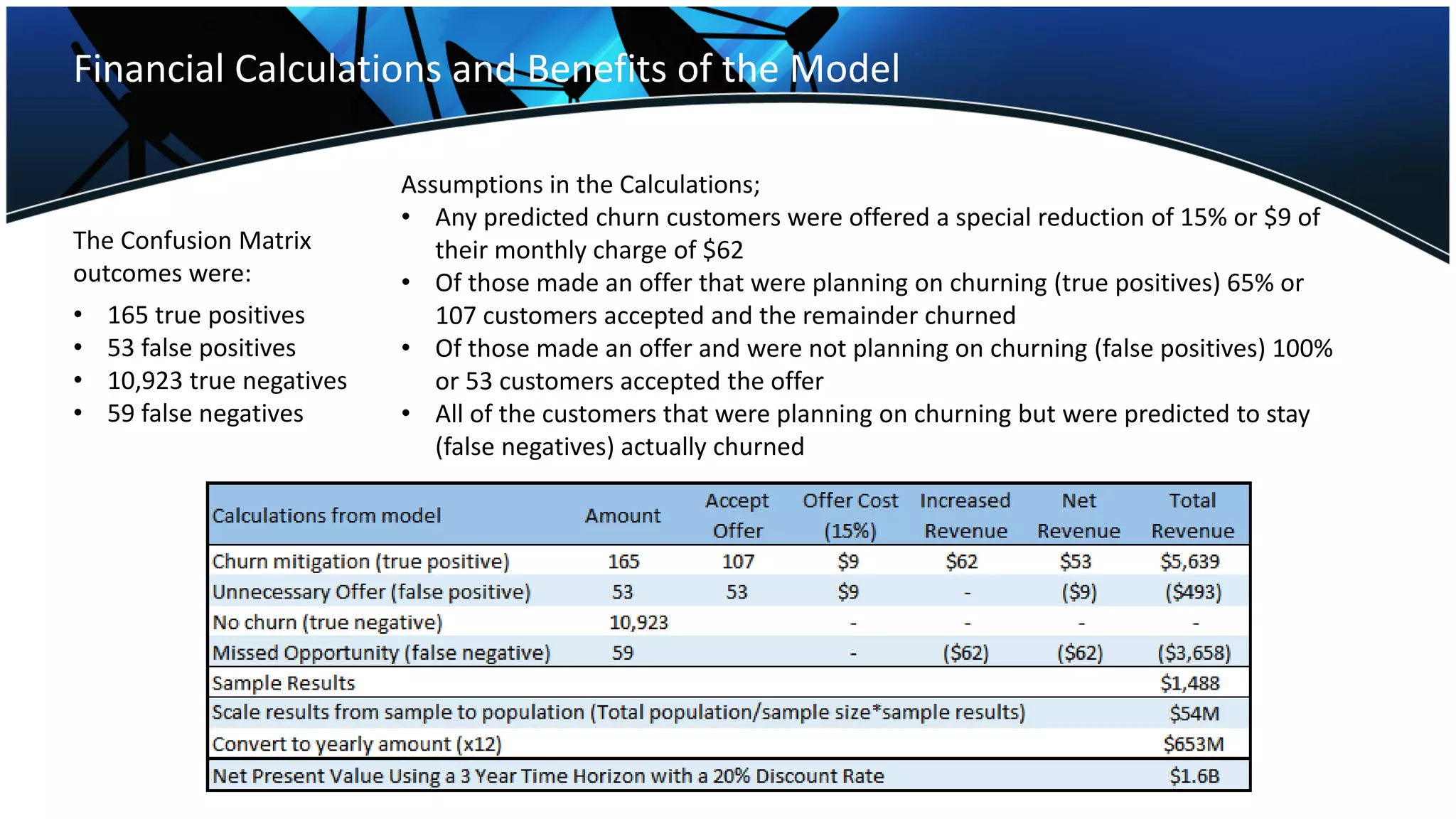

The telecommunications industry faces significant customer churn, which costs companies an estimated $5.7 billion annually in lost customers, and the problem intensifies as competition increases. Predictive modeling can reduce churn by identifying customers who are likely to switch providers and targeting them with retention offers, potentially increasing revenue by $8 billion. Deploying a model that flags at-risk customers and gives them incentives to stay could save the industry an estimated $1.6 billion over three years.