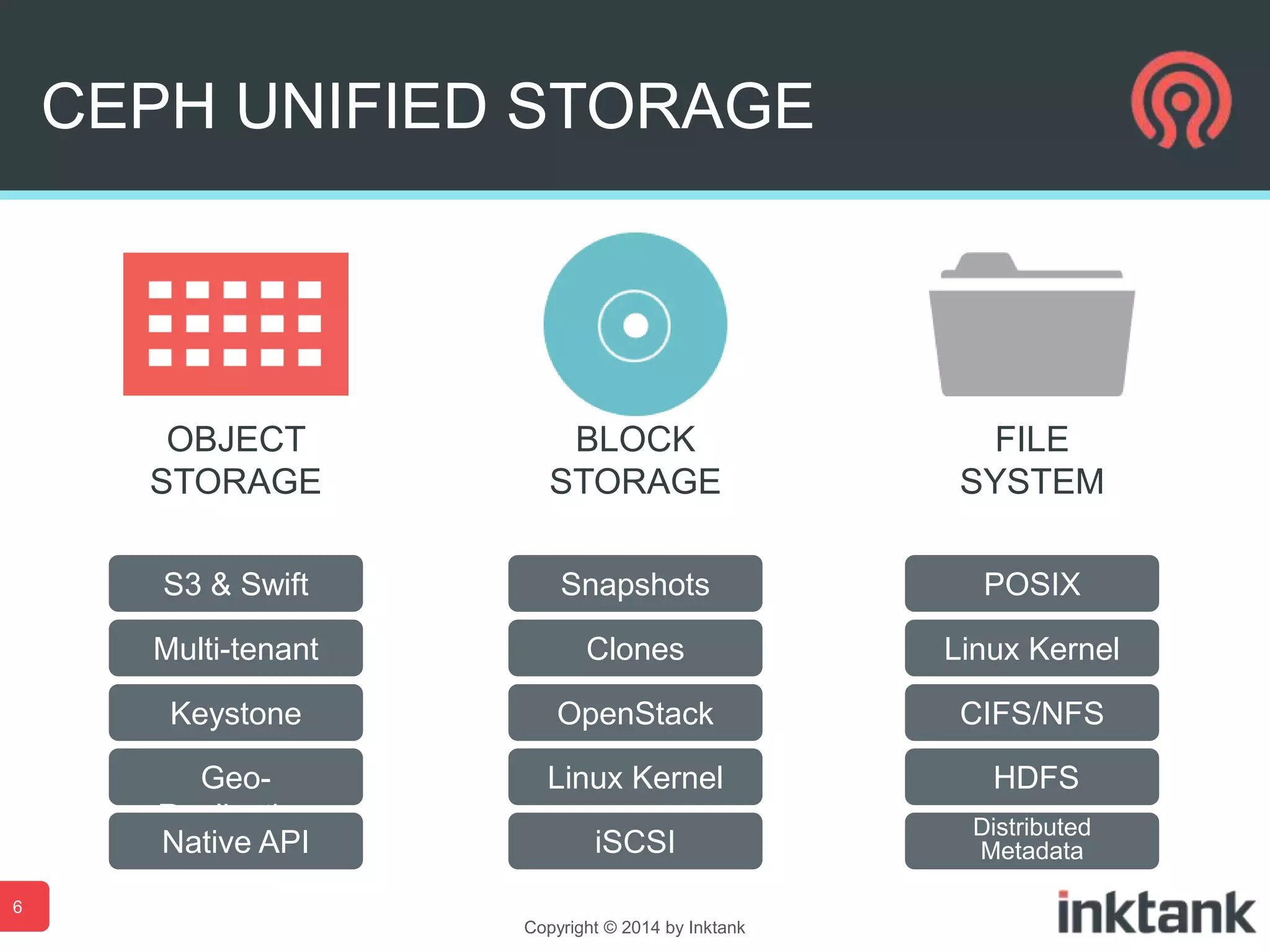

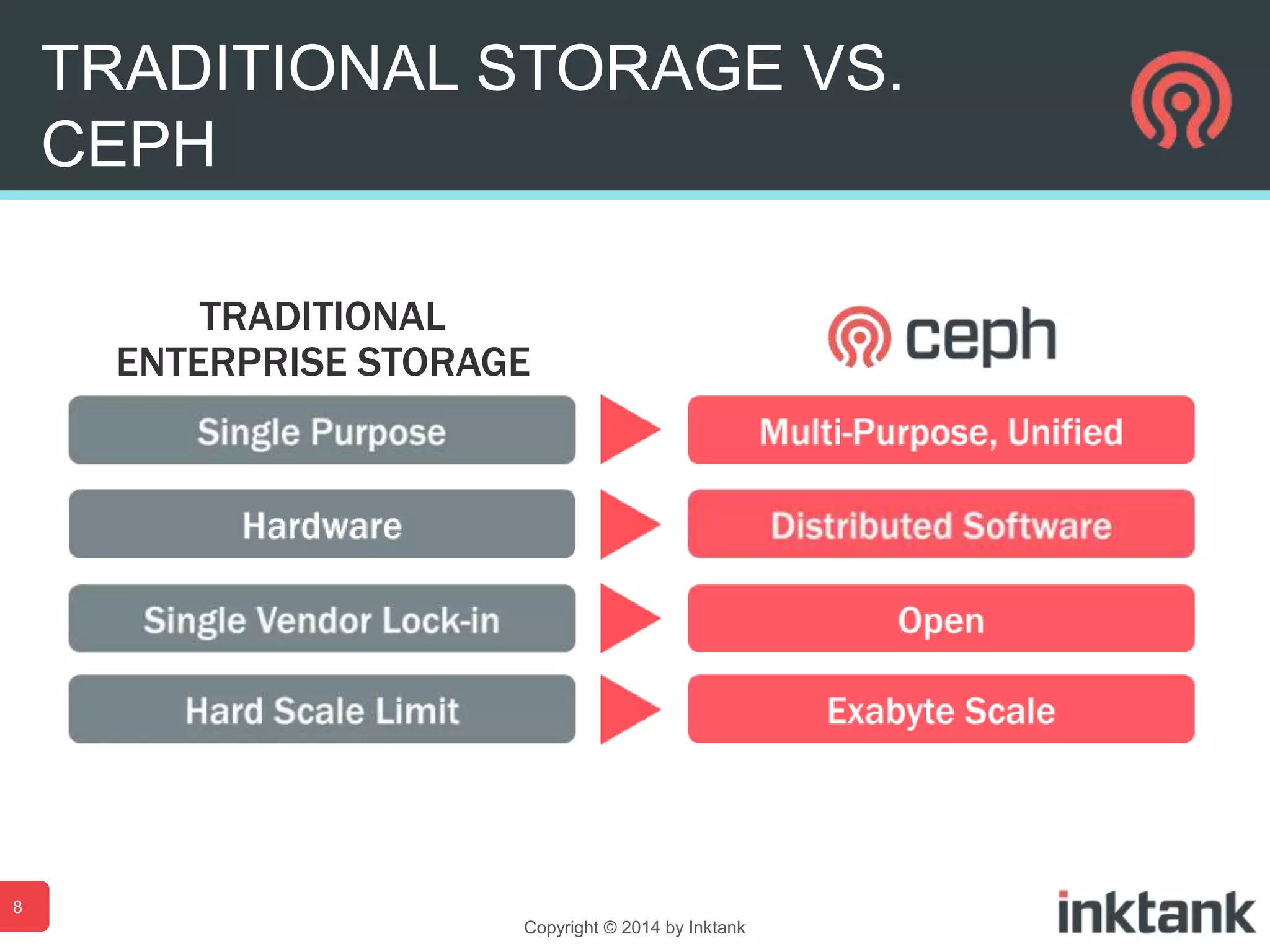

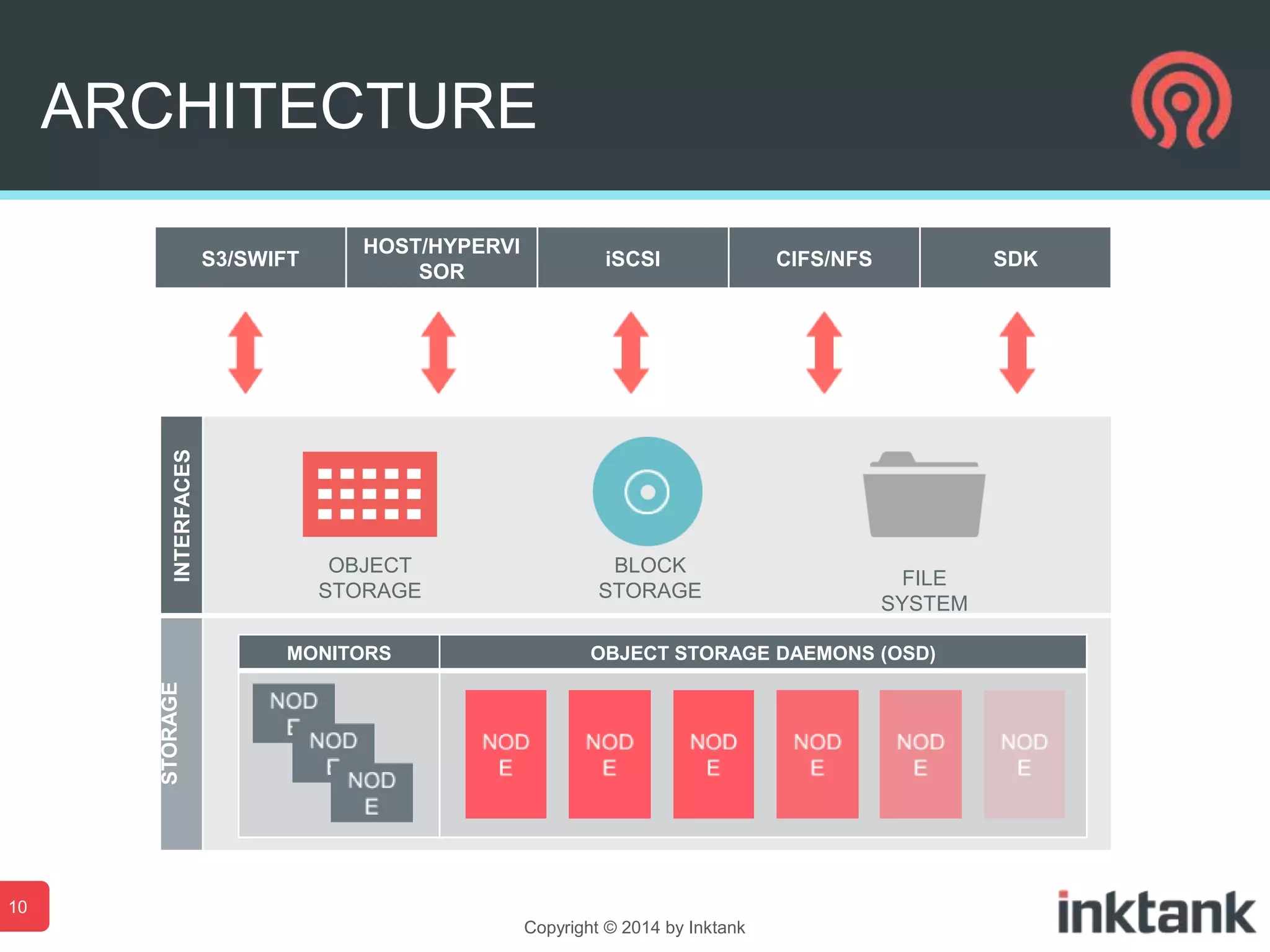

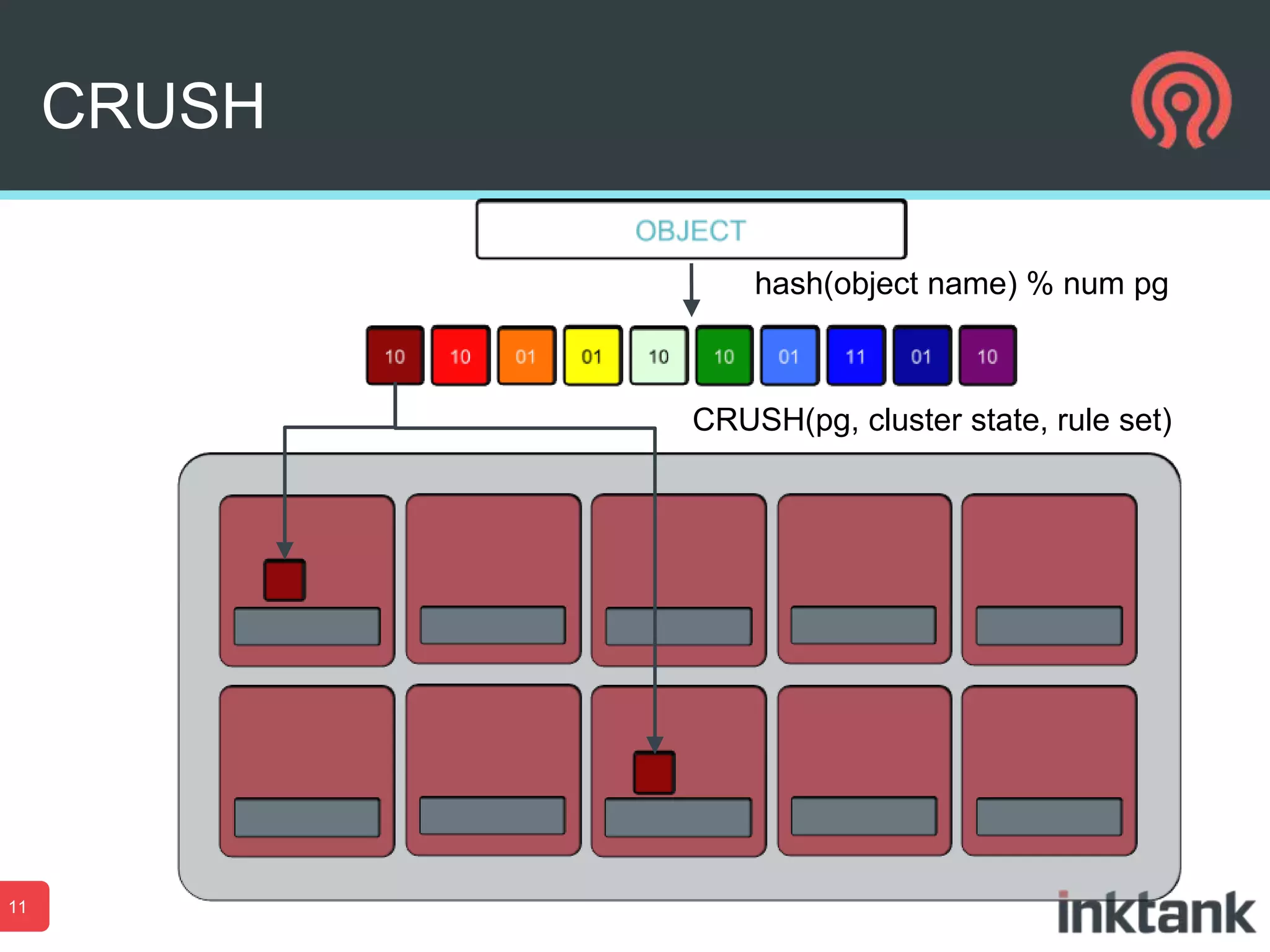

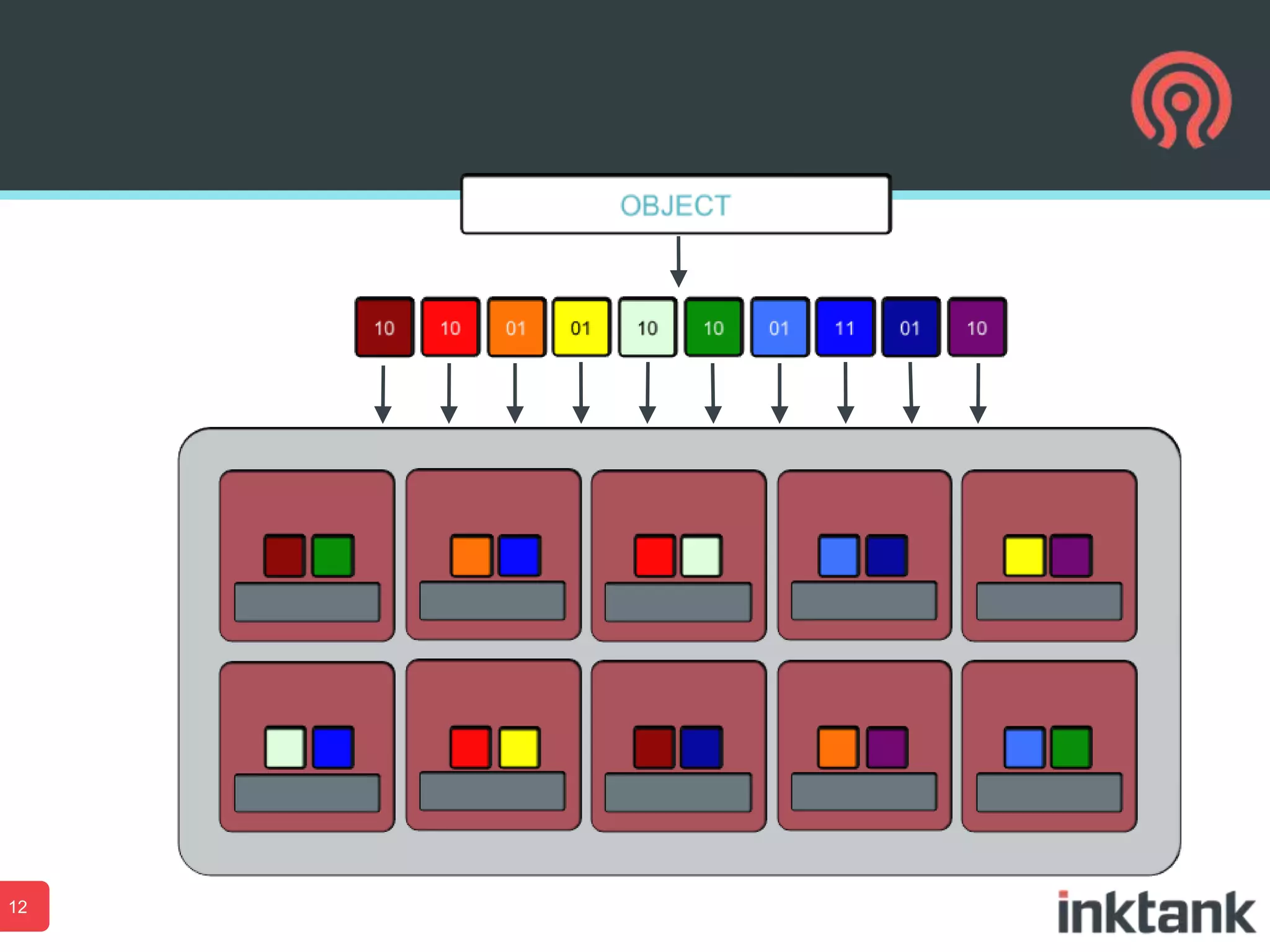

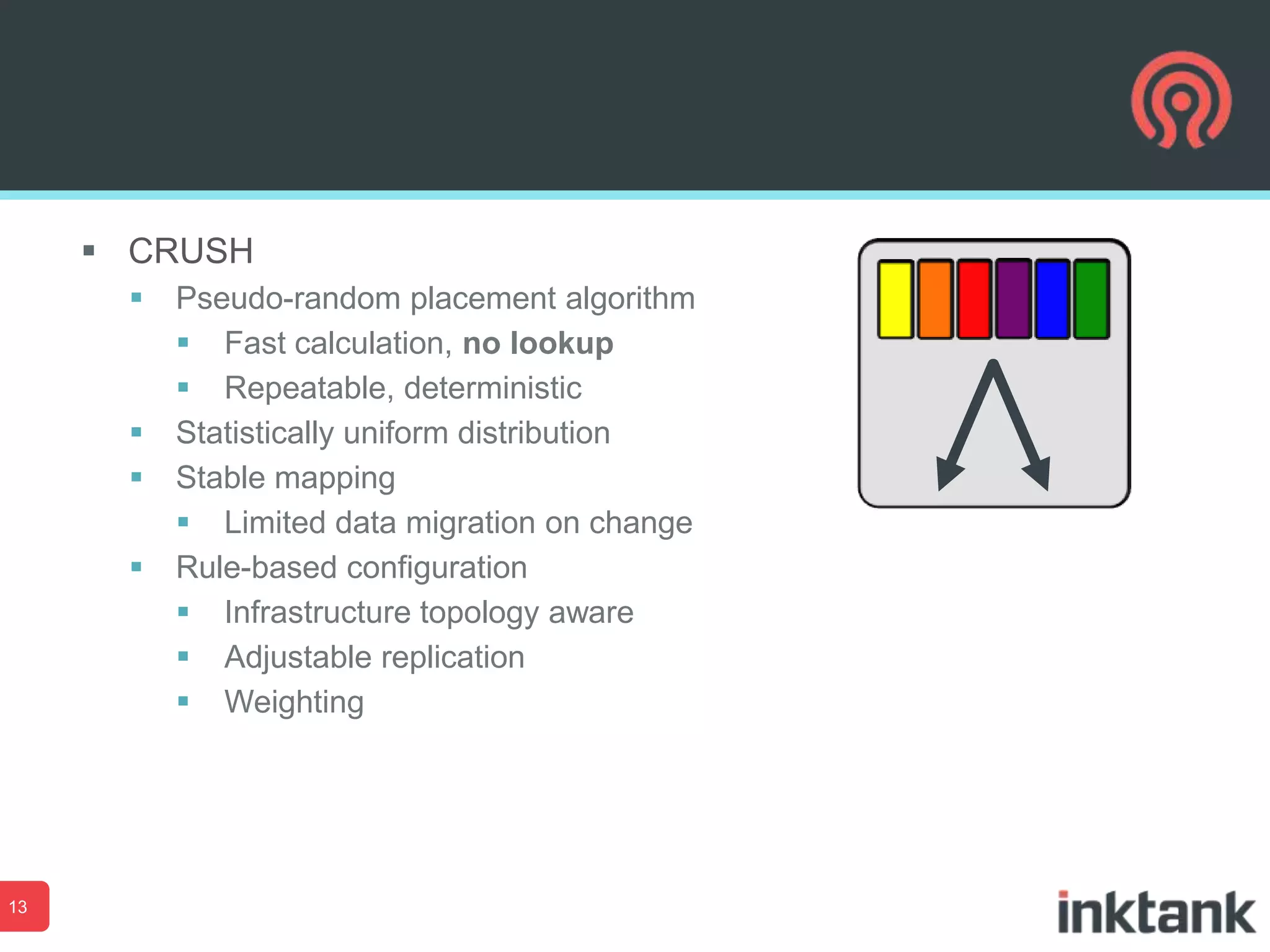

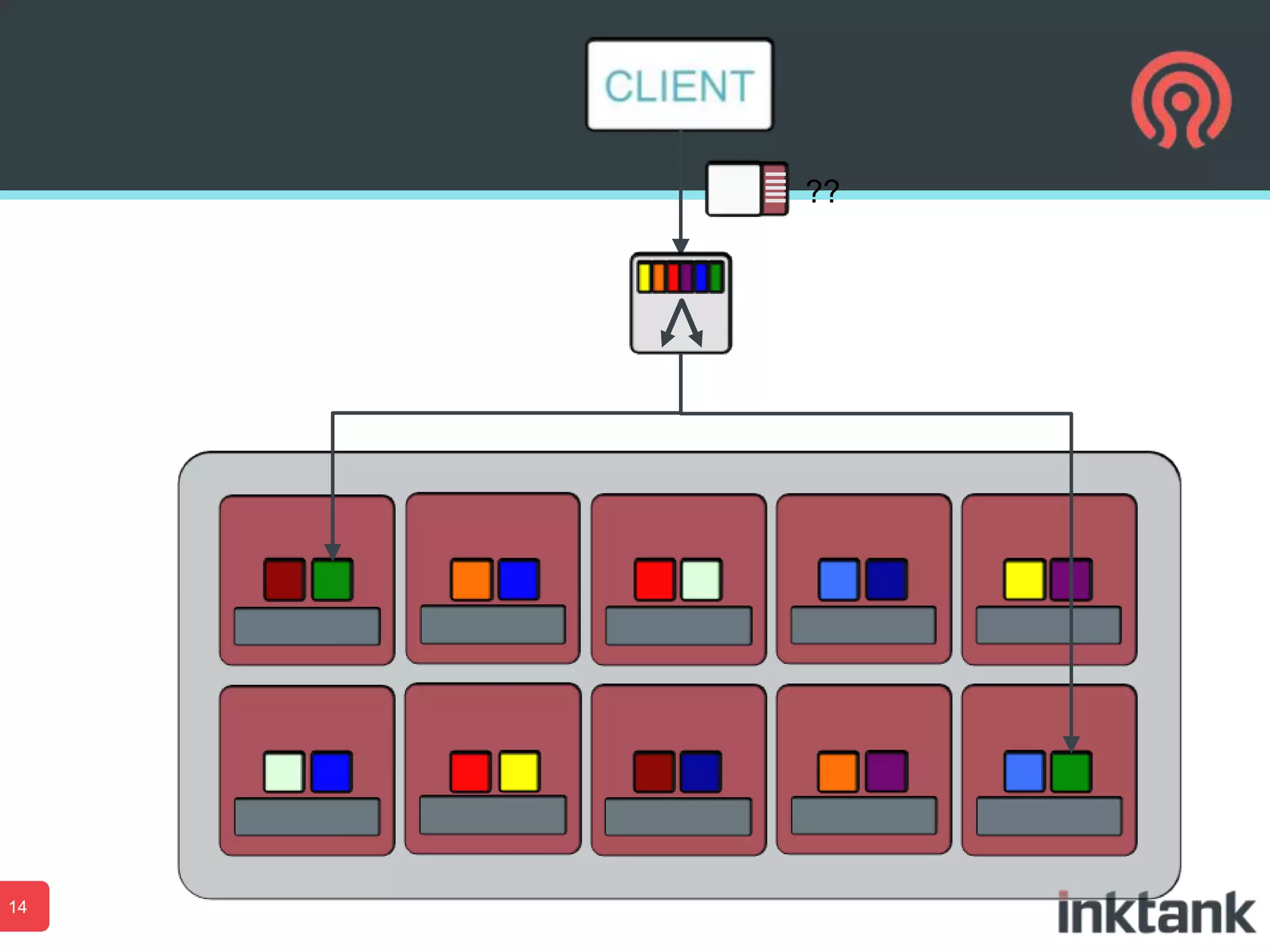

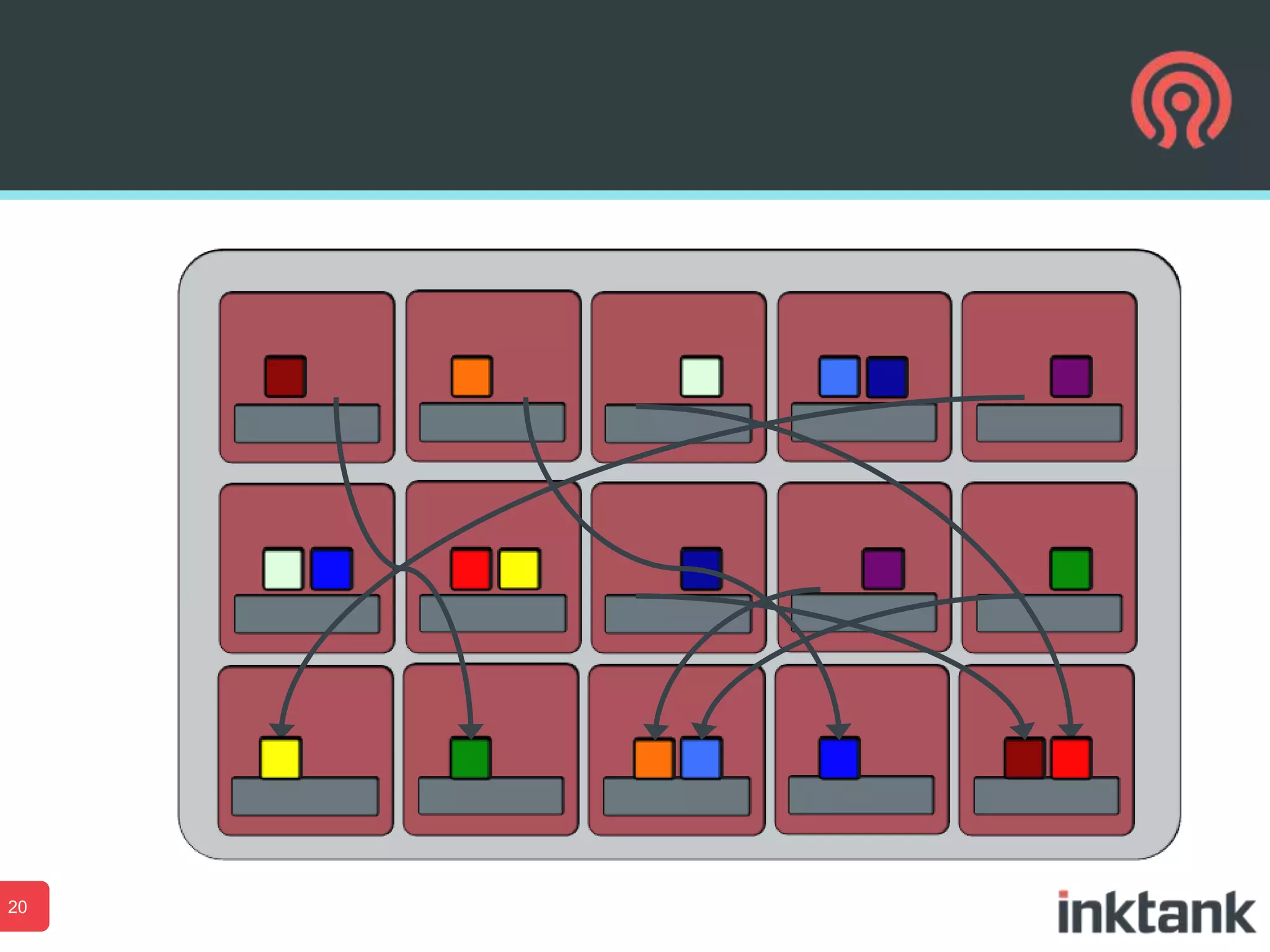

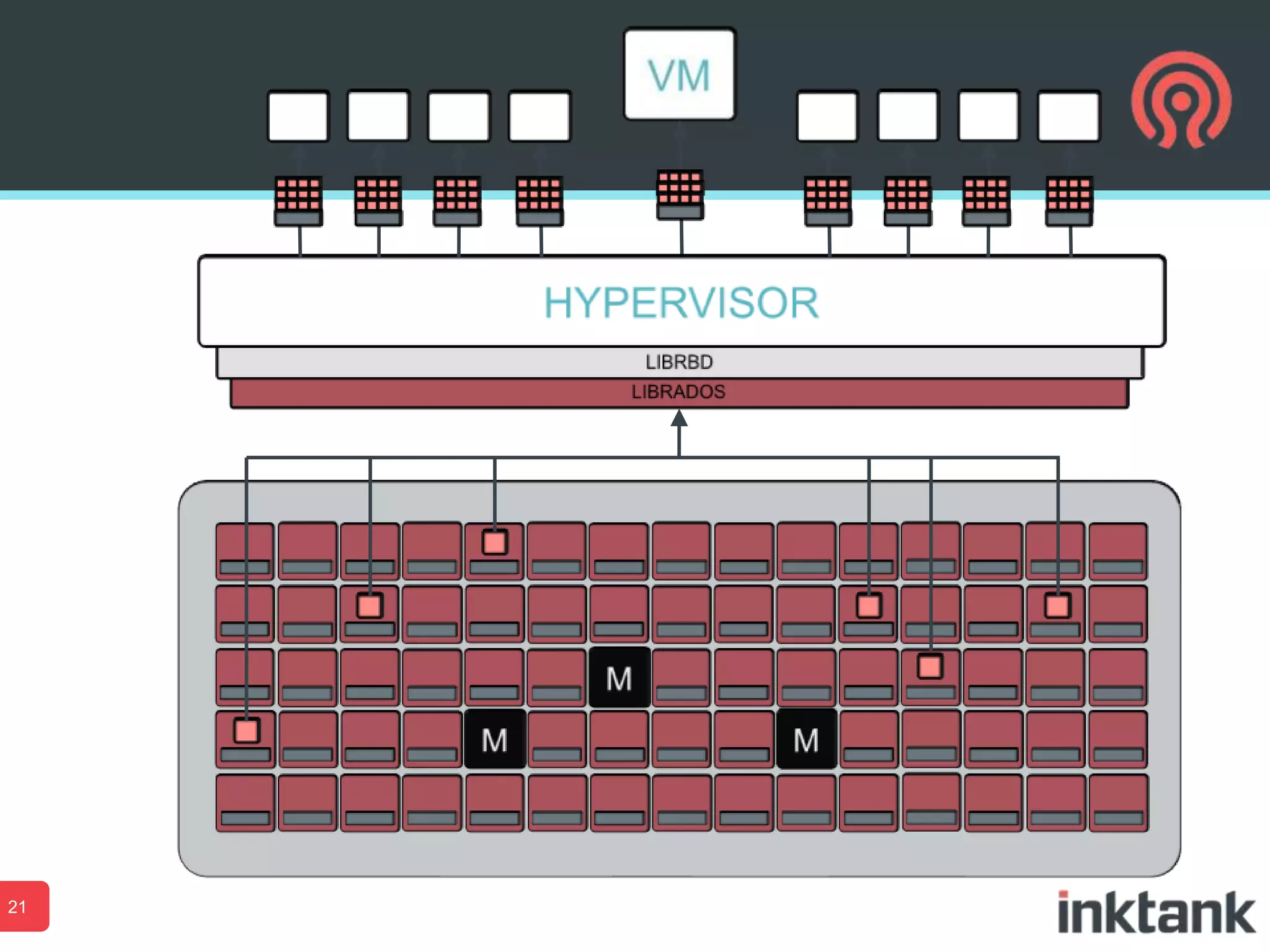

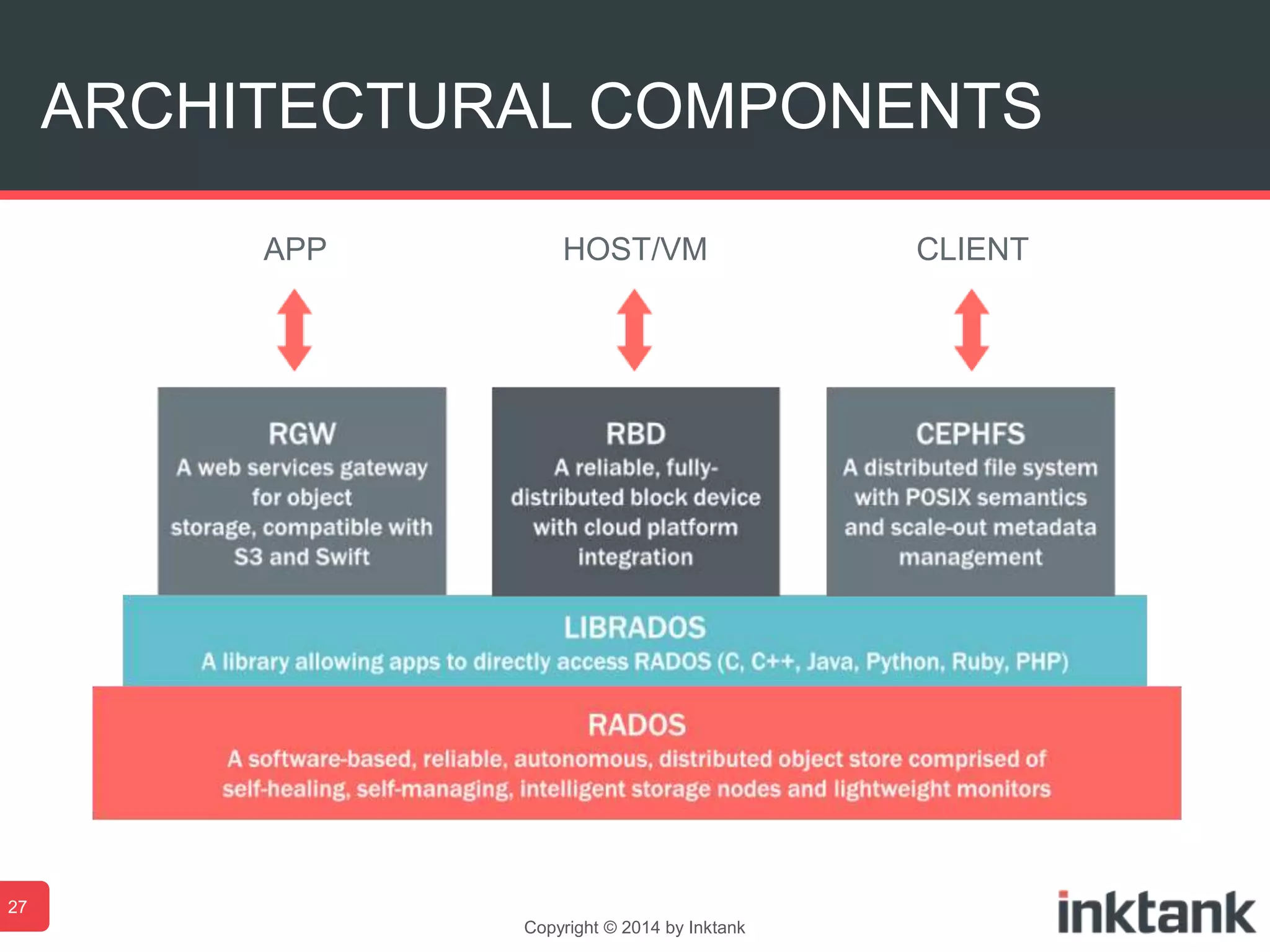

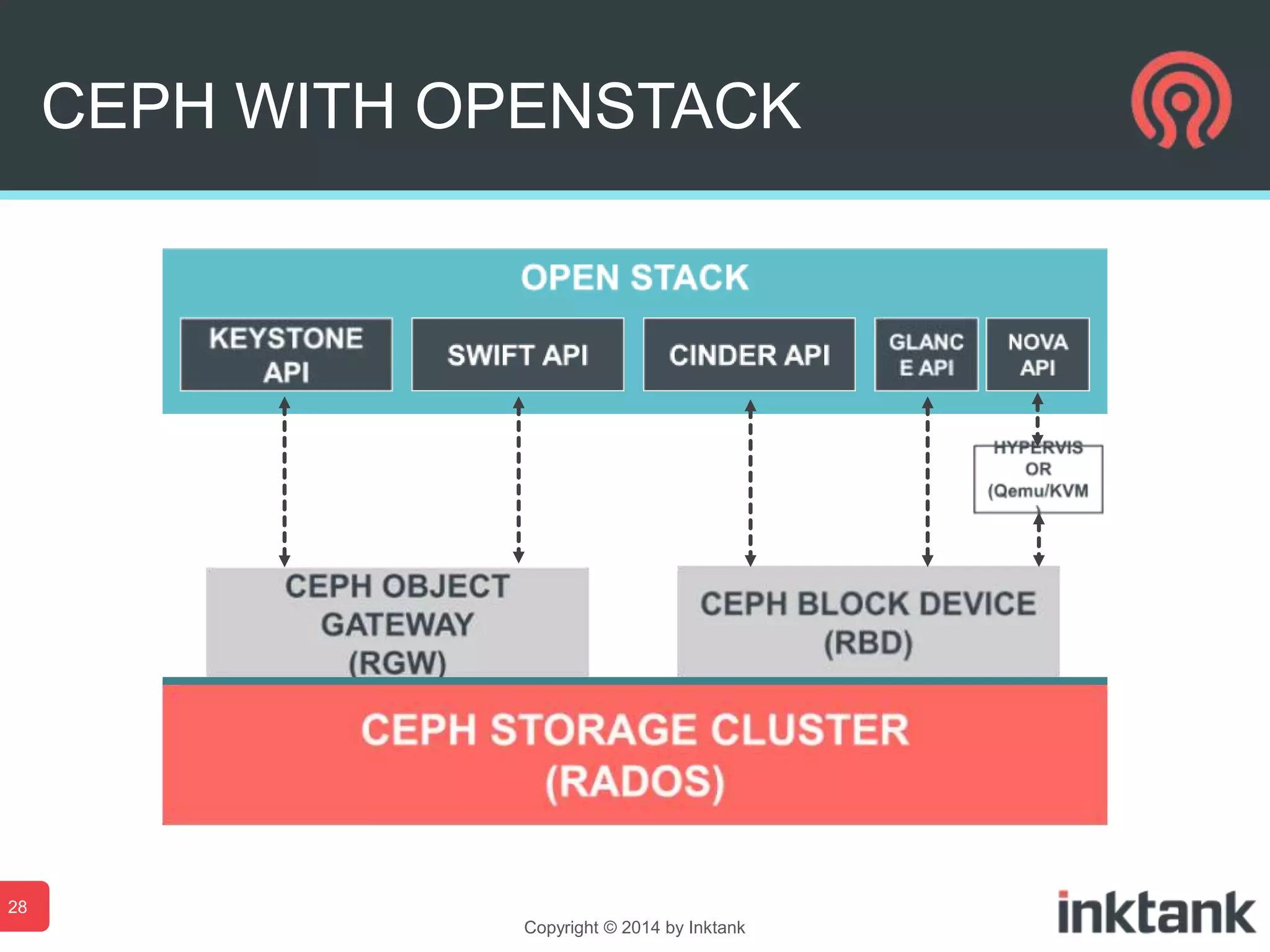

Ceph is an open-source distributed storage system that provides object, block, and file storage on a single unified platform. It has become popular in OpenStack deployments because it scales out on commodity hardware. Ceph places data with CRUSH (Controlled Replication Under Scalable Hashing), a pseudo-random placement algorithm that distributes objects and their replicas across the cluster for fault tolerance and load balancing.
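
To make the idea of deterministic pseudo-random placement concrete, here is a minimal Python sketch. It is not CRUSH itself: it uses rendezvous (highest-random-weight) hashing as a simplified stand-in, and it ignores the placement groups, device weights, and failure-domain hierarchy that the real algorithm takes into account. The function names `score` and `place`, the OSD labels, and the object name are all hypothetical and chosen only for illustration.

```python
import hashlib


def score(object_name: str, osd: str) -> int:
    """Deterministic pseudo-random score for an (object, OSD) pair."""
    digest = hashlib.sha256(f"{object_name}:{osd}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place(object_name: str, osds: list[str], replicas: int = 3) -> list[str]:
    """Pick `replicas` distinct OSDs for an object by highest score.

    Simplified stand-in for CRUSH (an assumption for illustration):
    every client computes the same answer from the object name and the
    OSD list alone, so no central lookup table is needed.
    """
    if replicas > len(osds):
        raise ValueError("not enough OSDs for the requested replica count")
    ranked = sorted(osds, key=lambda osd: score(object_name, osd), reverse=True)
    return ranked[:replicas]


# Hypothetical six-OSD cluster and object name.
osds = [f"osd.{i}" for i in range(6)]
placement = place("rbd_data.1234", osds)
```

Because each score depends only on the object name and the OSD identifier, removing one OSD from the list changes the placement only for objects that had a replica on it; the other replicas stay where they were. That property is what allows a cluster to rebalance with limited data movement when hardware fails or is added.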