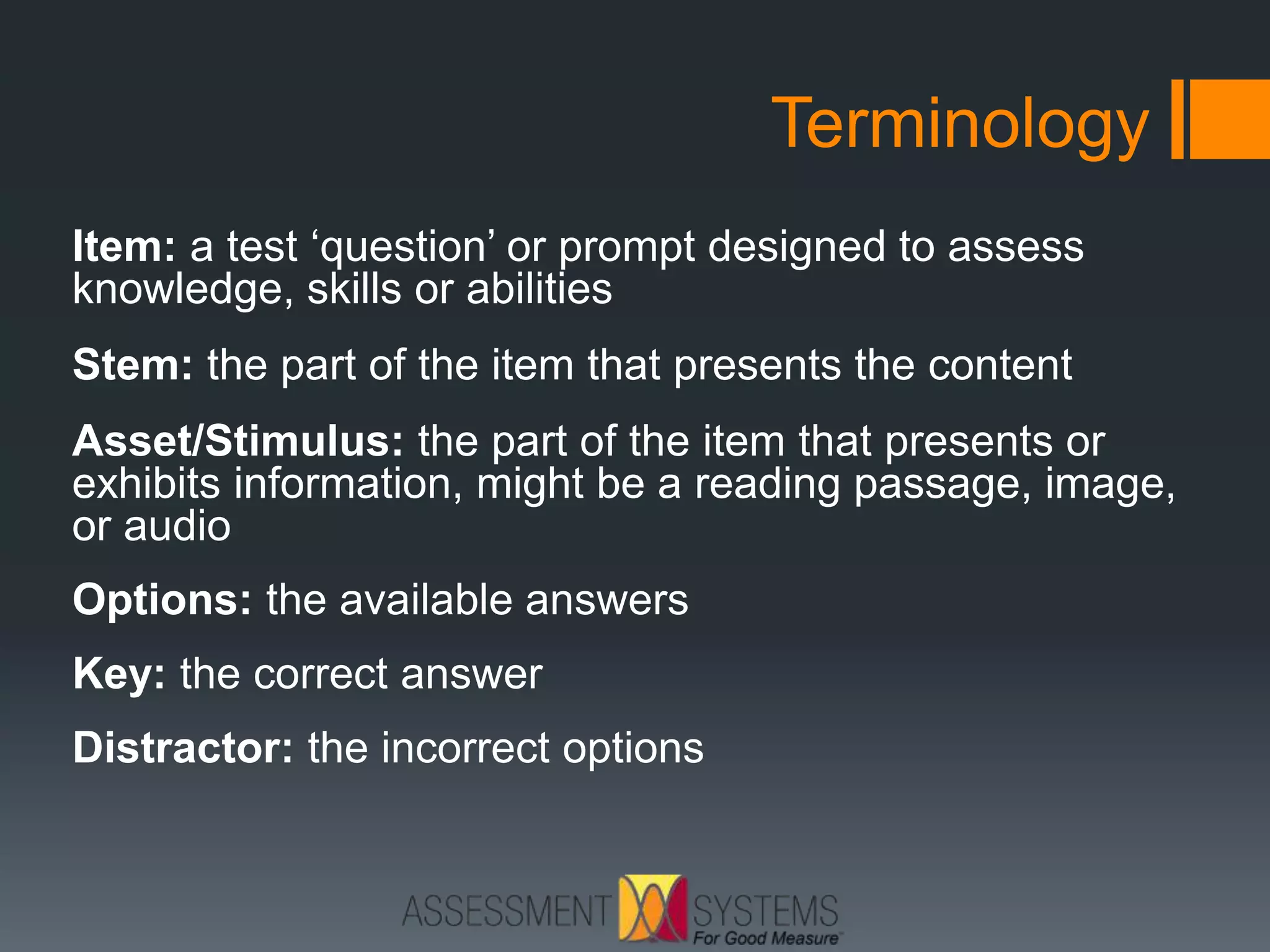

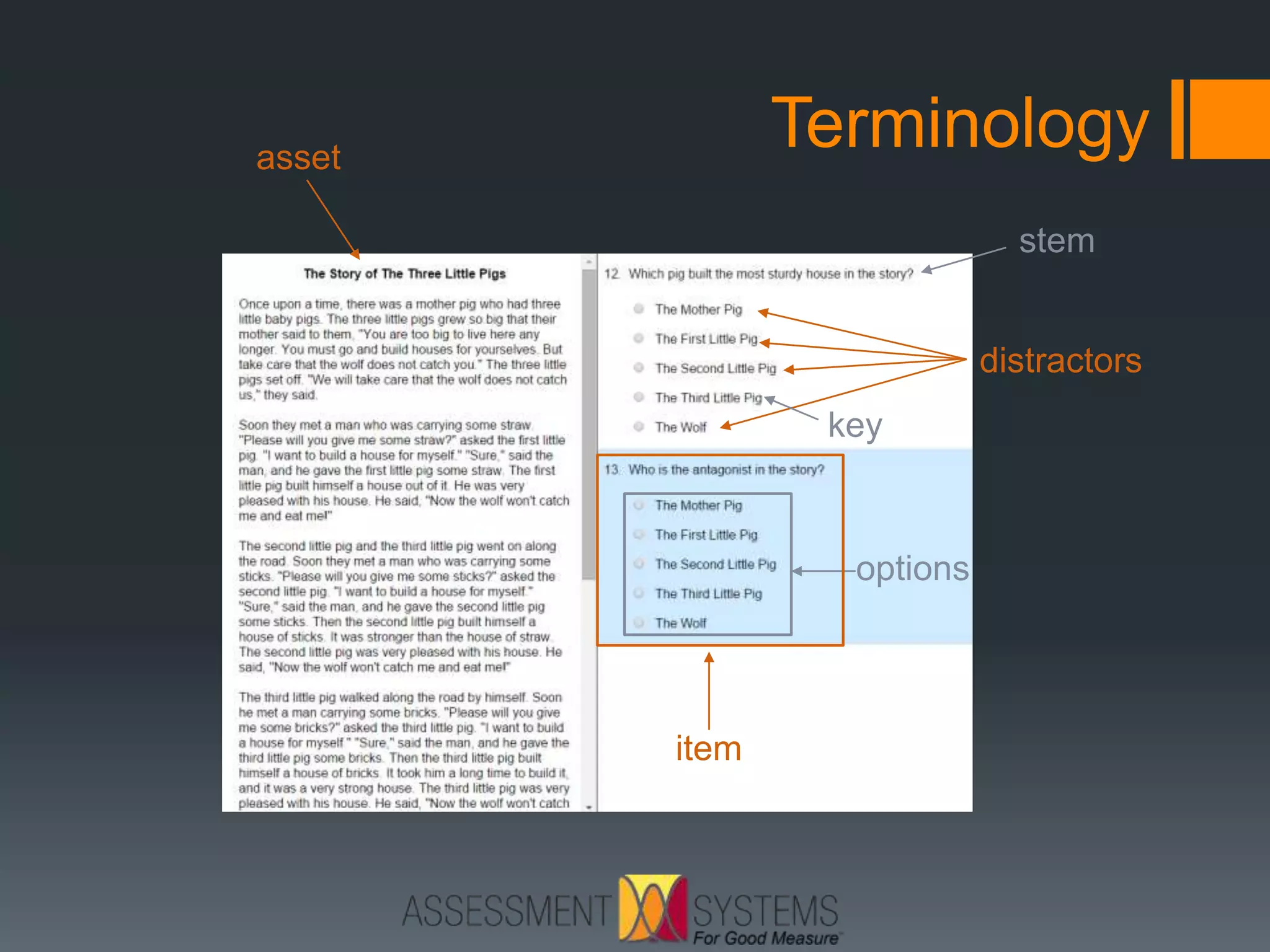

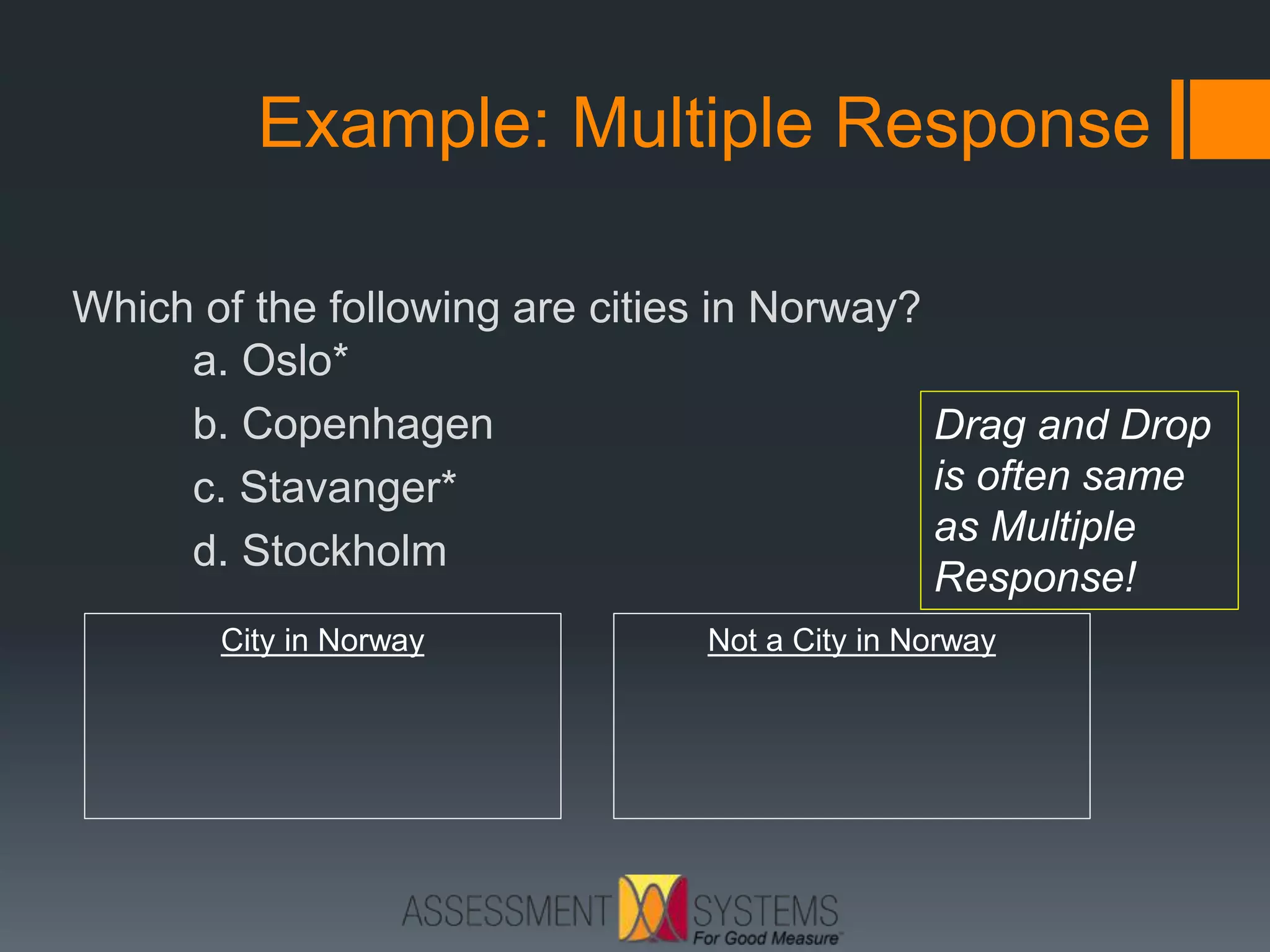

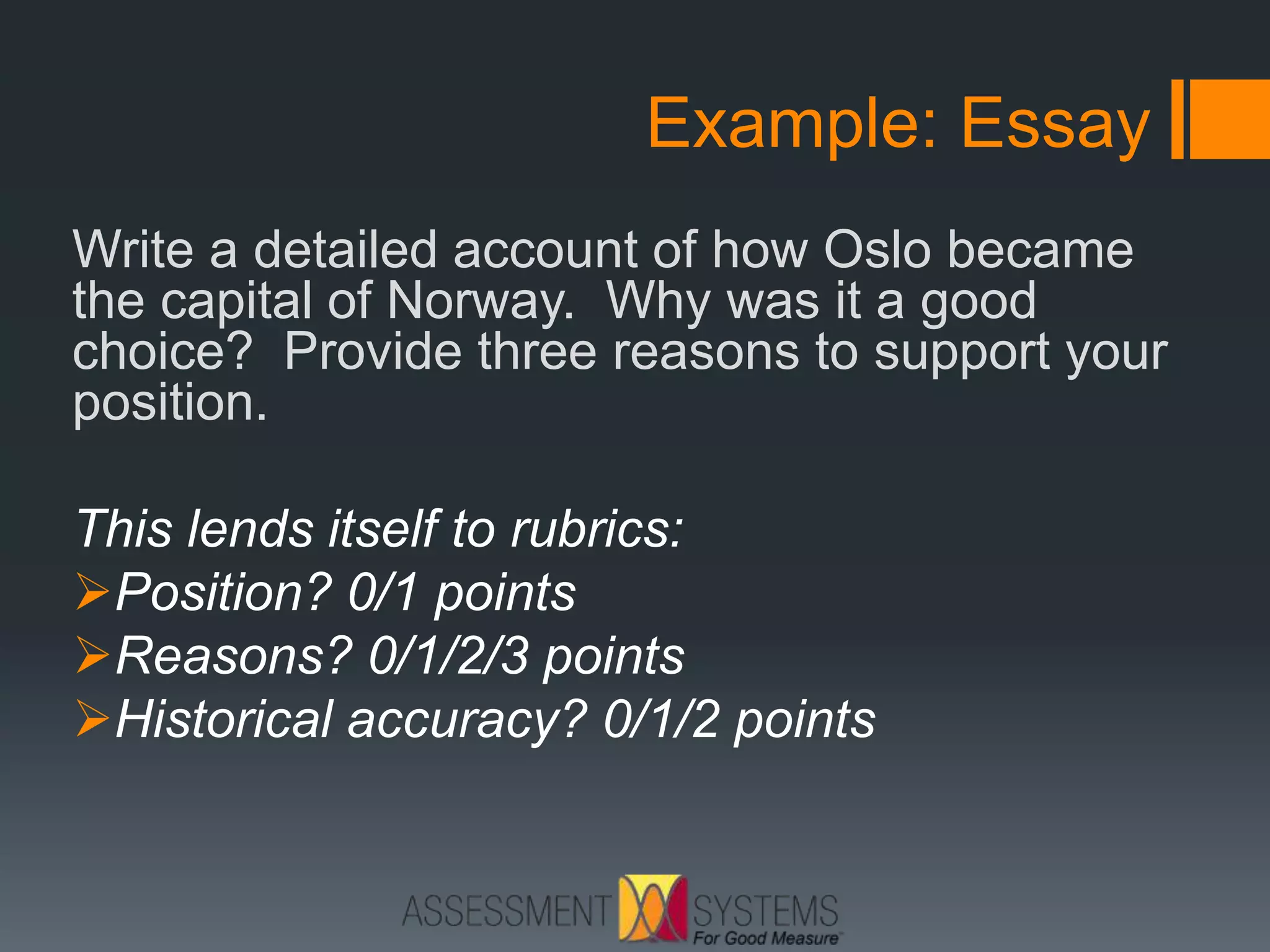

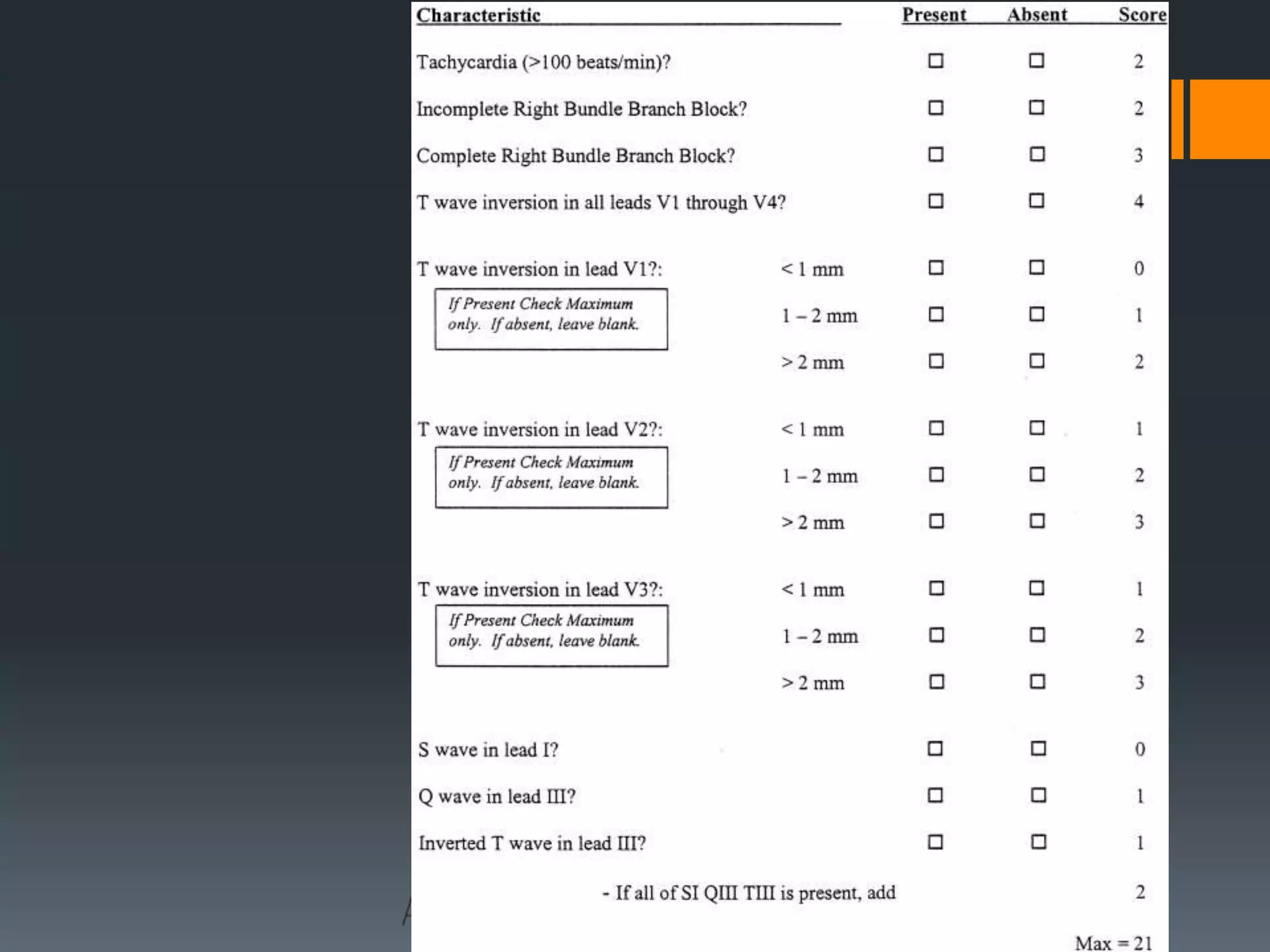

The document discusses item-writing objectives for developing high-quality exam items, emphasizing clarity, relevance, and validity in testing. It distinguishes between selected-response and constructed-response item types and provides guidelines for writing effective test questions and for scoring them, including the use of rubrics. It also highlights the importance of minimizing construct-irrelevant variance so that scores accurately reflect an examinee's knowledge and abilities.