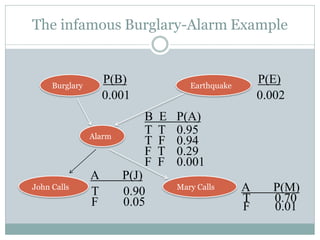

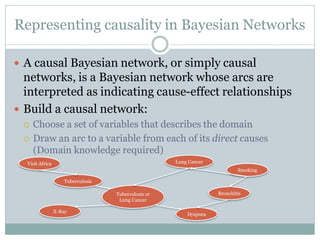

- A Bayesian network is a probabilistic graphical model that encodes the dependencies among a set of random variables. It represents their joint probability distribution compactly as a directed acyclic graph (DAG) in which each node carries a conditional probability table (CPT) given its parents.
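
The joint distribution mentioned above factorizes along the graph. As a sketch, assuming variables named $X_1, \dots, X_n$ and writing $\mathrm{Pa}(X_i)$ for the parents of $X_i$ in the graph (notation introduced here for illustration, not taken from the slides):

$$
P(X_1, \dots, X_n) = \prod_{i=1}^{n} P\bigl(X_i \mid \mathrm{Pa}(X_i)\bigr)
$$

Each factor on the right corresponds to one node's conditional probability table, so a node with no parents contributes just its prior $P(X_i)$.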

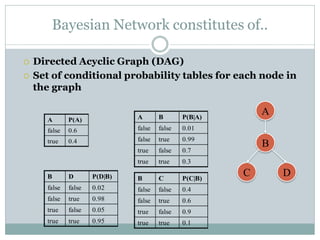

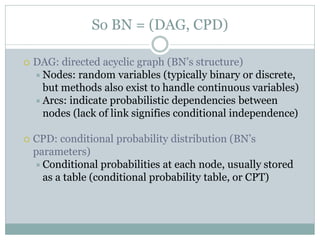

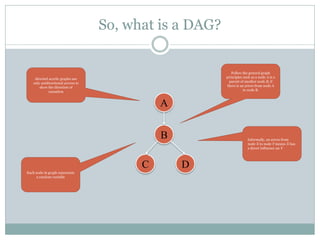

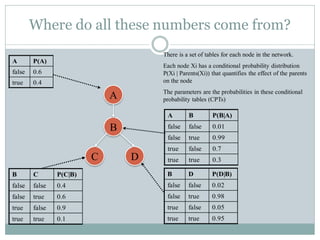

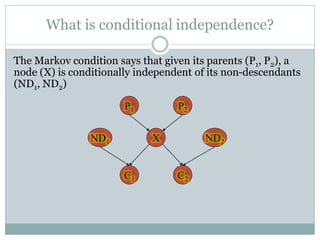

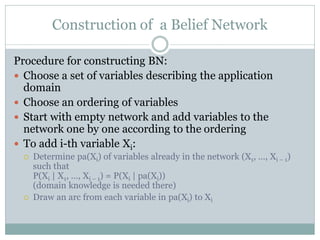

- A Bayesian network consists of a directed acyclic graph whose nodes represent variables and whose edges represent direct probabilistic dependencies, together with a conditional probability distribution for each node given its parents, which quantifies those dependencies.
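
The two components described above (graph plus per-node conditional distributions) can be sketched in plain Python. The network below (`Rain -> WetGrass <- Sprinkler`), its probabilities, and the names `parents`, `cpt`, and `joint_probability` are all hypothetical, chosen only for illustration; they do not come from the slides.

```python
from itertools import product

# Graph structure: each variable maps to the tuple of its parents.
# Hypothetical network: Rain -> WetGrass <- Sprinkler.
parents = {
    "Rain": (),
    "Sprinkler": (),
    "WetGrass": ("Rain", "Sprinkler"),
}

# Conditional probability tables with made-up values.
# cpt[var][parent_values] = P(var = True | parents = parent_values)
cpt = {
    "Rain": {(): 0.2},
    "Sprinkler": {(): 0.1},
    "WetGrass": {
        (True, True): 0.99,
        (True, False): 0.9,
        (False, True): 0.8,
        (False, False): 0.0,
    },
}


def joint_probability(assignment):
    """Probability of a full assignment: the product of one CPT entry per node."""
    prob = 1.0
    for var, pars in parents.items():
        p_true = cpt[var][tuple(assignment[p] for p in pars)]
        prob *= p_true if assignment[var] else 1.0 - p_true
    return prob


# Sanity check: summing the joint over every assignment should give 1.
variables = list(parents)
total = sum(
    joint_probability(dict(zip(variables, values)))
    for values in product([True, False], repeat=len(variables))
)
```

Storing only `P(var = True | parents)` works here because every variable is binary; variables with more states would need one entry per state.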

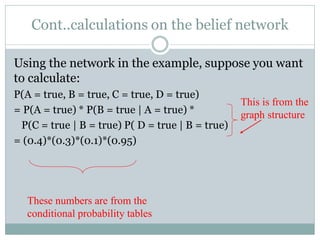

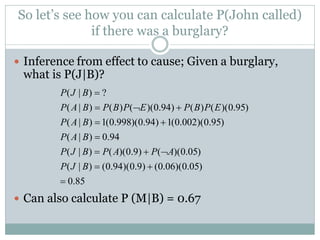

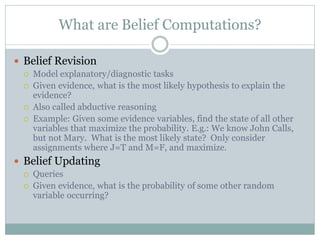

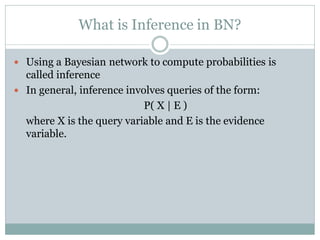

- Inference in a Bayesian network computes posterior probabilities such as P(X | evidence) by combining the graph structure with the conditional probability tables.
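
One simple way to compute such a posterior is inference by enumeration: sum the joint distribution over all unobserved variables, then normalize. The sketch below assumes a hypothetical three-variable network (`Rain -> WetGrass <- Sprinkler`) with made-up probabilities; the names `PARENTS`, `CPT`, `joint`, and `query` are illustrative, and enumeration is only one of several inference methods, practical for small networks.

```python
from itertools import product

# Hypothetical network: Rain -> WetGrass <- Sprinkler (illustrative values).
PARENTS = {
    "Rain": (),
    "Sprinkler": (),
    "WetGrass": ("Rain", "Sprinkler"),
}

# CPT[var][parent_values] = P(var = True | parents = parent_values)
CPT = {
    "Rain": {(): 0.2},
    "Sprinkler": {(): 0.1},
    "WetGrass": {
        (True, True): 0.99,
        (True, False): 0.9,
        (False, True): 0.8,
        (False, False): 0.0,
    },
}


def joint(assignment):
    """Probability of a full assignment via the graph factorization."""
    prob = 1.0
    for var, pars in PARENTS.items():
        p_true = CPT[var][tuple(assignment[p] for p in pars)]
        prob *= p_true if assignment[var] else 1.0 - p_true
    return prob


def query(target, evidence):
    """P(target = True | evidence), summing the joint over hidden variables.

    Assumes the evidence has nonzero probability; otherwise the
    normalization below divides by zero.
    """
    hidden = [v for v in PARENTS if v != target and v not in evidence]
    weights = {}
    for target_value in (True, False):
        total = 0.0
        for values in product([True, False], repeat=len(hidden)):
            assignment = {**evidence, target: target_value}
            assignment.update(zip(hidden, values))
            total += joint(assignment)
        weights[target_value] = total
    return weights[True] / (weights[True] + weights[False])


# Posterior probability of rain after observing wet grass (roughly 0.74
# with these made-up numbers, up from the prior of 0.2).
p_rain_given_wet = query("Rain", {"WetGrass": True})
```

Enumeration takes time exponential in the number of hidden variables; larger networks typically rely on methods such as variable elimination or sampling, which exploit the graph structure more aggressively.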