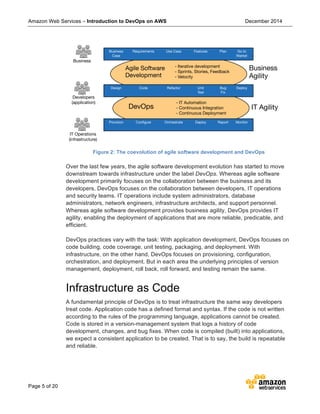

This document introduces DevOps principles and practices on AWS. It covers key DevOps concepts: infrastructure as code, continuous integration and delivery, continuous deployment, automation, monitoring, and security. It then describes the AWS services that support these concepts, such as AWS CloudFormation, AWS CodeDeploy, AWS CodePipeline, AWS CodeCommit, AWS Elastic Beanstalk, and AWS OpsWorks, and shows how they fit into a DevOps workflow.
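To make "infrastructure as code" concrete, here is a minimal sketch, not taken from the document itself, that builds a small AWS CloudFormation template as data and renders it to JSON. The function names `build_template` and `render`, the logical ID `ArtifactBucket`, and the choice of a single versioned S3 bucket are all illustrative assumptions. The script only generates the template text; it does not call AWS.

```python
import json


def build_template(bucket_logical_id: str = "ArtifactBucket") -> dict:
    """Return a minimal CloudFormation template as a plain dictionary.

    The logical ID and the single S3 bucket are hypothetical choices
    made for illustration only.
    """
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Illustrative sketch: one versioned S3 bucket",
        "Resources": {
            bucket_logical_id: {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    # Keep earlier object versions when objects are overwritten.
                    "VersioningConfiguration": {"Status": "Enabled"}
                },
            }
        },
    }


def render(template: dict) -> str:
    """Serialize the template to JSON text suitable for a file in version control."""
    return json.dumps(template, indent=2, sort_keys=True)


if __name__ == "__main__":
    print(render(build_template()))
```

Because the template is ordinary text, it can be reviewed, versioned, and passed through a pipeline like application code, which is the core idea behind infrastructure as code.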