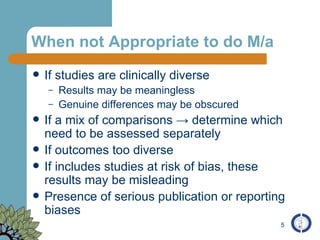

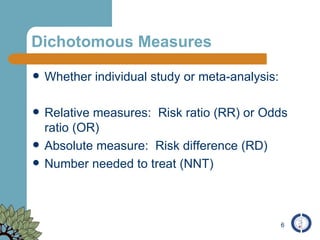

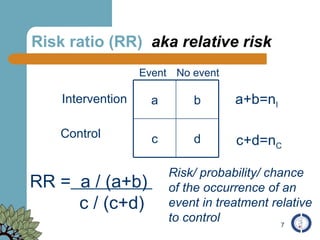

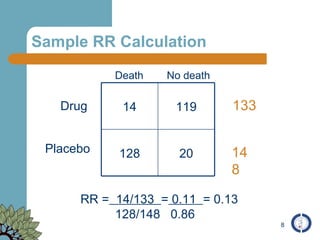

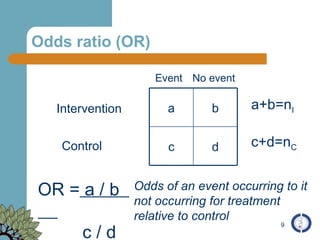

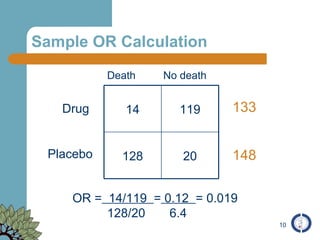

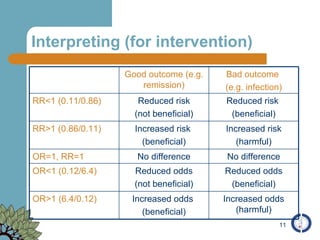

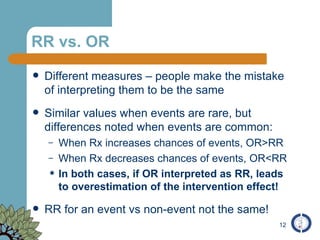

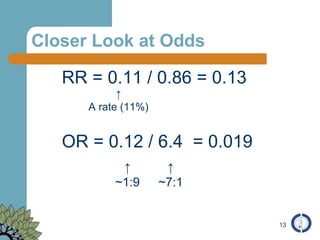

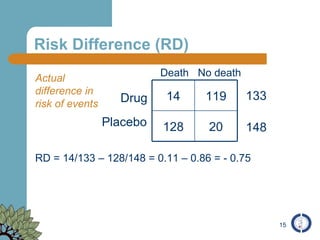

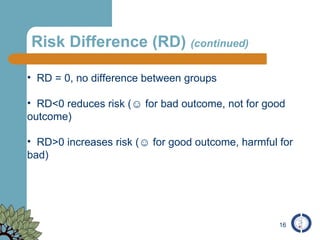

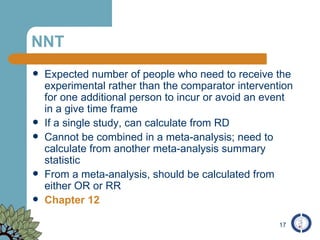

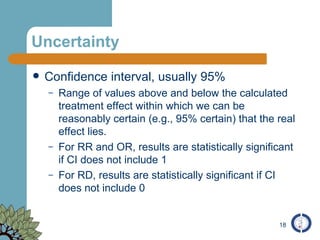

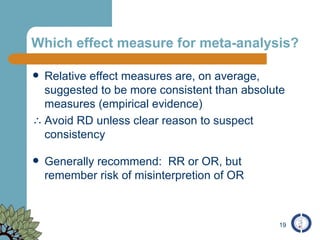

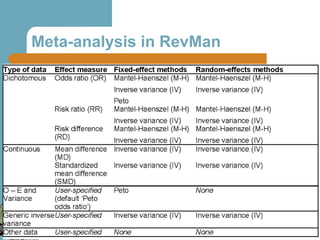

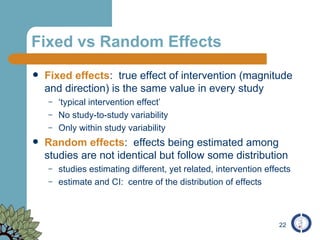

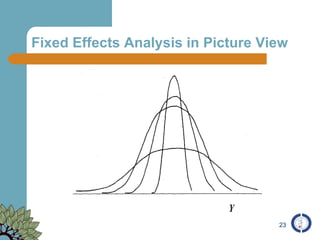

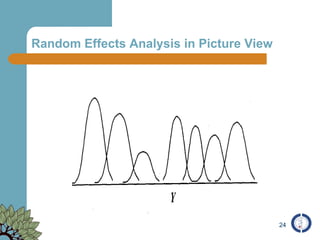

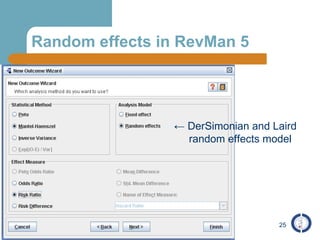

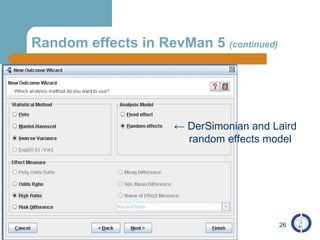

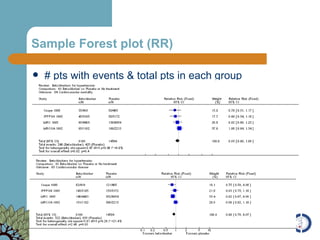

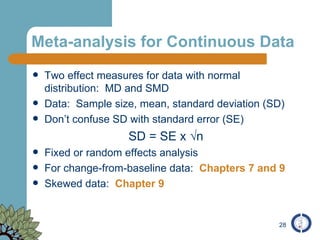

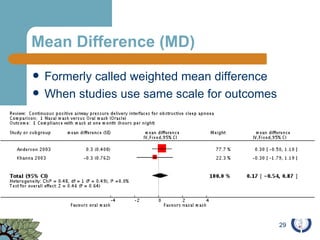

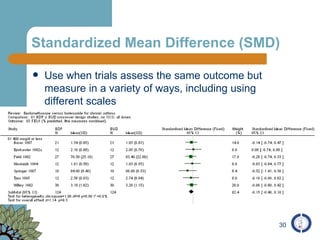

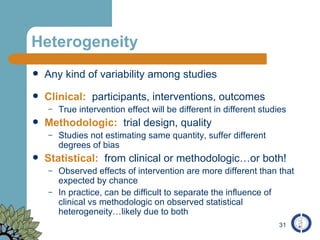

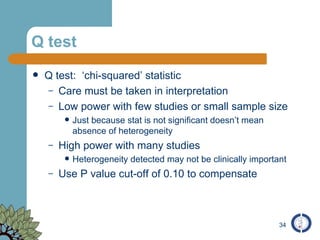

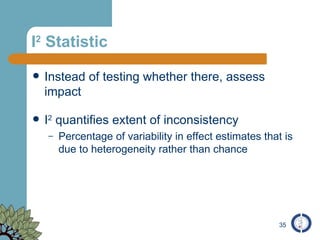

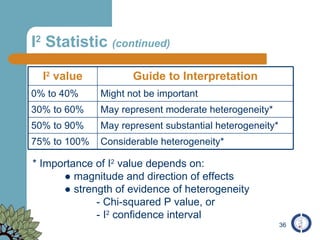

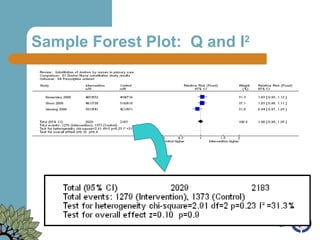

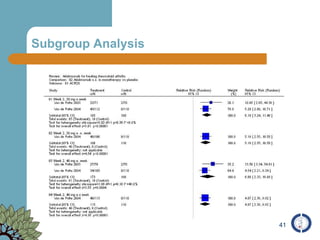

The document provides an overview of meta-analysis. It emphasizes when meta-analysis is appropriate for synthesizing quantitative data and how to handle diversity and bias among the included studies. It contrasts relative effect measures (risk ratio, odds ratio) with absolute measures (risk difference, number needed to treat) and discusses why statistical heterogeneity must be understood. It also describes fixed-effect and random-effects models for combining study results and stresses caution when interpreting the pooled results.
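To make the measures and models named above more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the method used in the slides. It assumes binary outcomes summarized as 2x2 counts with no zero cells, so no continuity correction is applied. It computes RR, OR, RD and NNT for a single study, pools log odds ratios by inverse-variance weighting for the fixed-effect estimate, quantifies heterogeneity with Cochran's Q and I², and uses the DerSimonian-Laird estimator of the between-study variance τ² for the random-effects estimate. DerSimonian-Laird is one common choice among several. The `Study` type, the function names and the counts in the usage section are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Study:
    """Hypothetical container for one trial's 2x2 counts."""
    events_trt: int  # events in the treatment arm
    n_trt: int       # participants in the treatment arm
    events_ctl: int  # events in the control arm
    n_ctl: int       # participants in the control arm


def effect_measures(s: Study) -> dict:
    """Relative (RR, OR) and absolute (RD, NNT) measures for a single study.

    Assumes no zero cells; real analyses usually add a continuity correction.
    """
    p_t = s.events_trt / s.n_trt
    p_c = s.events_ctl / s.n_ctl
    odds_t = s.events_trt / (s.n_trt - s.events_trt)
    odds_c = s.events_ctl / (s.n_ctl - s.events_ctl)
    rd = p_t - p_c
    return {
        "RR": p_t / p_c,        # risk ratio (relative)
        "OR": odds_t / odds_c,  # odds ratio (relative)
        "RD": rd,               # risk difference (absolute)
        # NNT is 1 / |RD|; it is undefined (infinite) when RD is zero
        "NNT": math.inf if rd == 0 else 1 / abs(rd),
    }


def log_odds_ratio(s: Study) -> tuple[float, float]:
    """Log odds ratio and its large-sample (Woolf) variance."""
    a, b = s.events_trt, s.n_trt - s.events_trt
    c, d = s.events_ctl, s.n_ctl - s.events_ctl
    return math.log((a * d) / (b * c)), 1 / a + 1 / b + 1 / c + 1 / d


def pool(estimates: list[float], variances: list[float]) -> dict:
    """Inverse-variance fixed-effect and DerSimonian-Laird random-effects pooling."""
    w = [1 / v for v in variances]
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, estimates)) / sw

    # Cochran's Q and I^2 quantify statistical heterogeneity
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, estimates))
    df = len(estimates) - 1
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0

    # DerSimonian-Laird moment estimate of the between-study variance tau^2
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - df) / c) if df > 0 and c > 0 else 0.0

    # Random-effects weights add tau^2 to each within-study variance
    w_re = [1 / (v + tau2) for v in variances]
    sw_re = sum(w_re)
    random = sum(wi * yi for wi, yi in zip(w_re, estimates)) / sw_re

    return {
        "fixed": fixed, "se_fixed": math.sqrt(1 / sw),
        "random": random, "se_random": math.sqrt(1 / sw_re),
        "Q": q, "I2": i2, "tau2": tau2,
    }


if __name__ == "__main__":
    # Hypothetical counts, for illustration only
    studies = [Study(5, 100, 20, 100), Study(30, 100, 25, 100), Study(10, 100, 30, 100)]
    for s in studies:
        print(effect_measures(s))
    ys, vs = zip(*(log_odds_ratio(s) for s in studies))
    result = pool(list(ys), list(vs))
    print("fixed OR:", math.exp(result["fixed"]), "random OR:", math.exp(result["random"]))
    print("I^2:", result["I2"], "tau^2:", result["tau2"])
```

Pooling is done on the log scale because ratio measures are asymmetric around 1; exponentiating the pooled value returns it to the OR scale. When τ² is greater than zero, the random-effects standard error is wider than the fixed-effect one. This is one concrete reason the two models can lead to different conclusions and why the pooled result should be interpreted with care.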