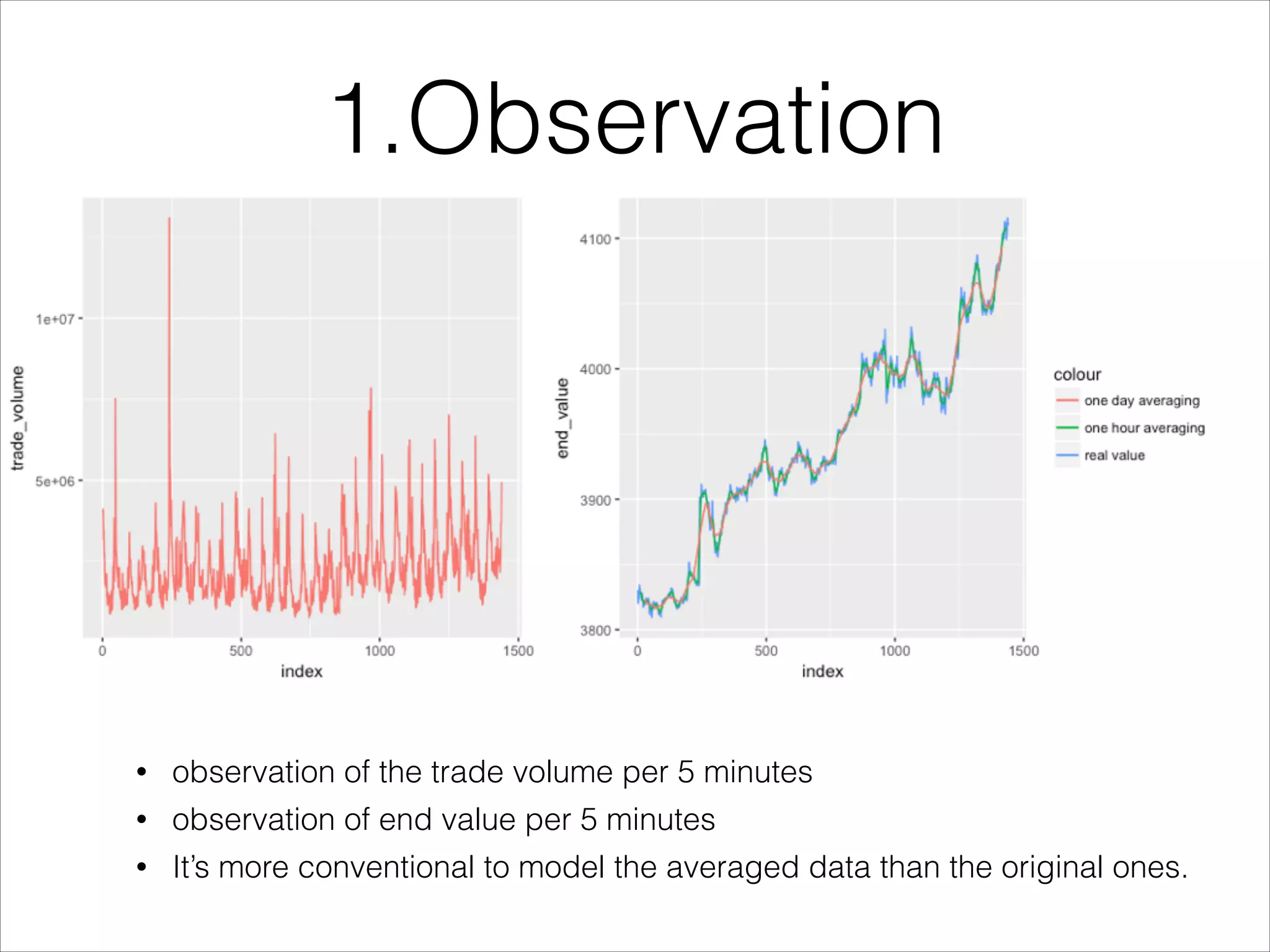

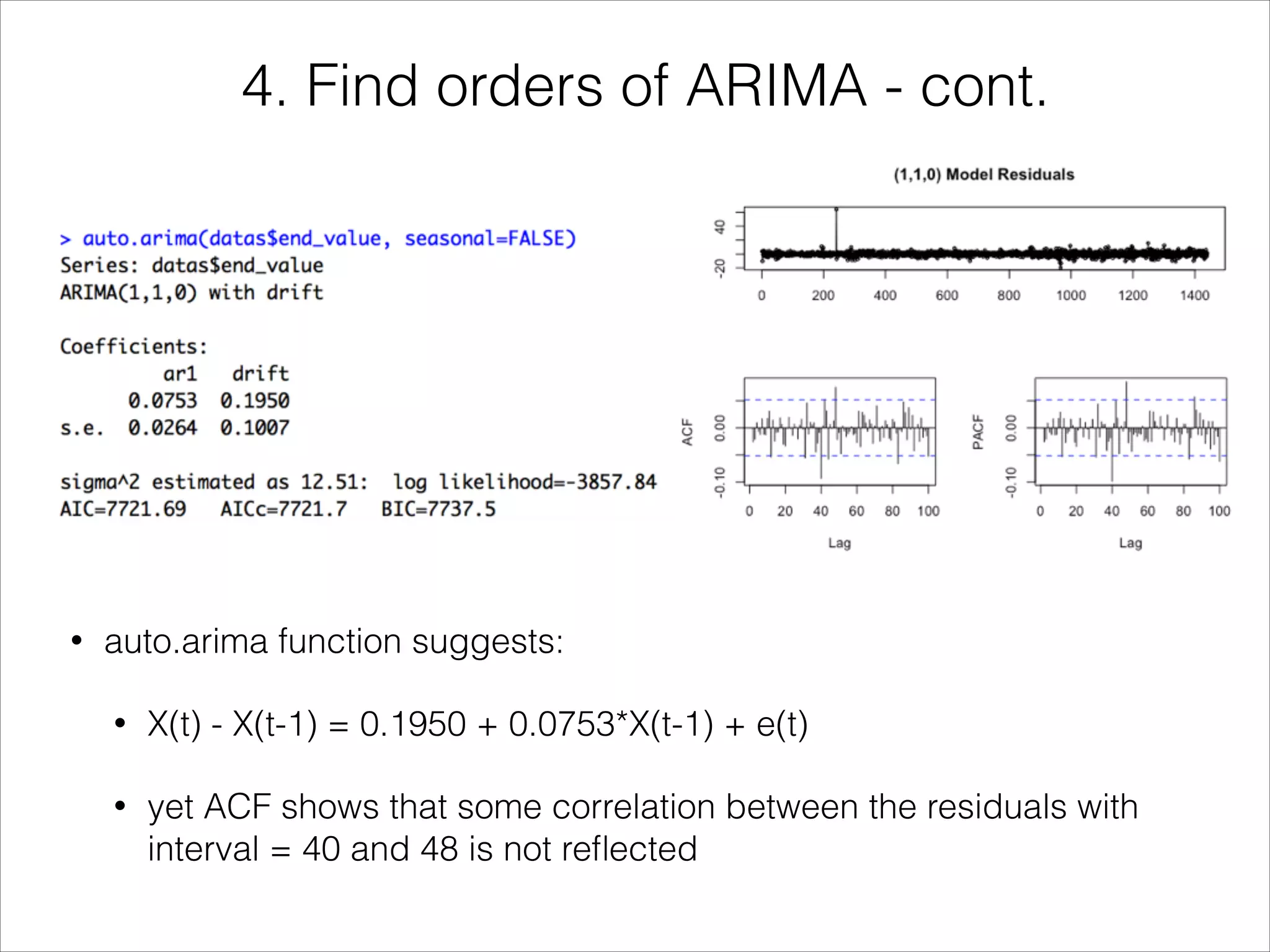

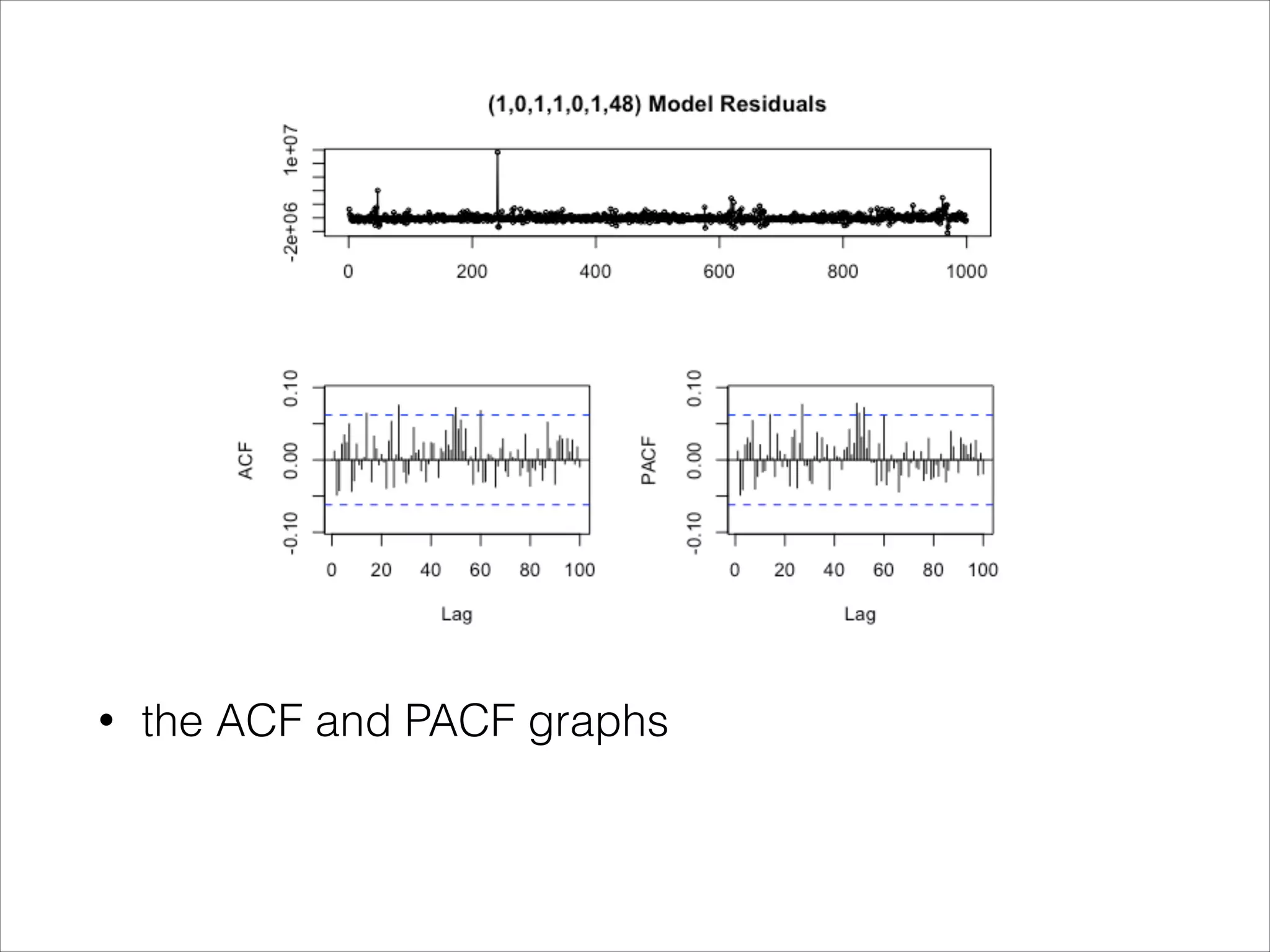

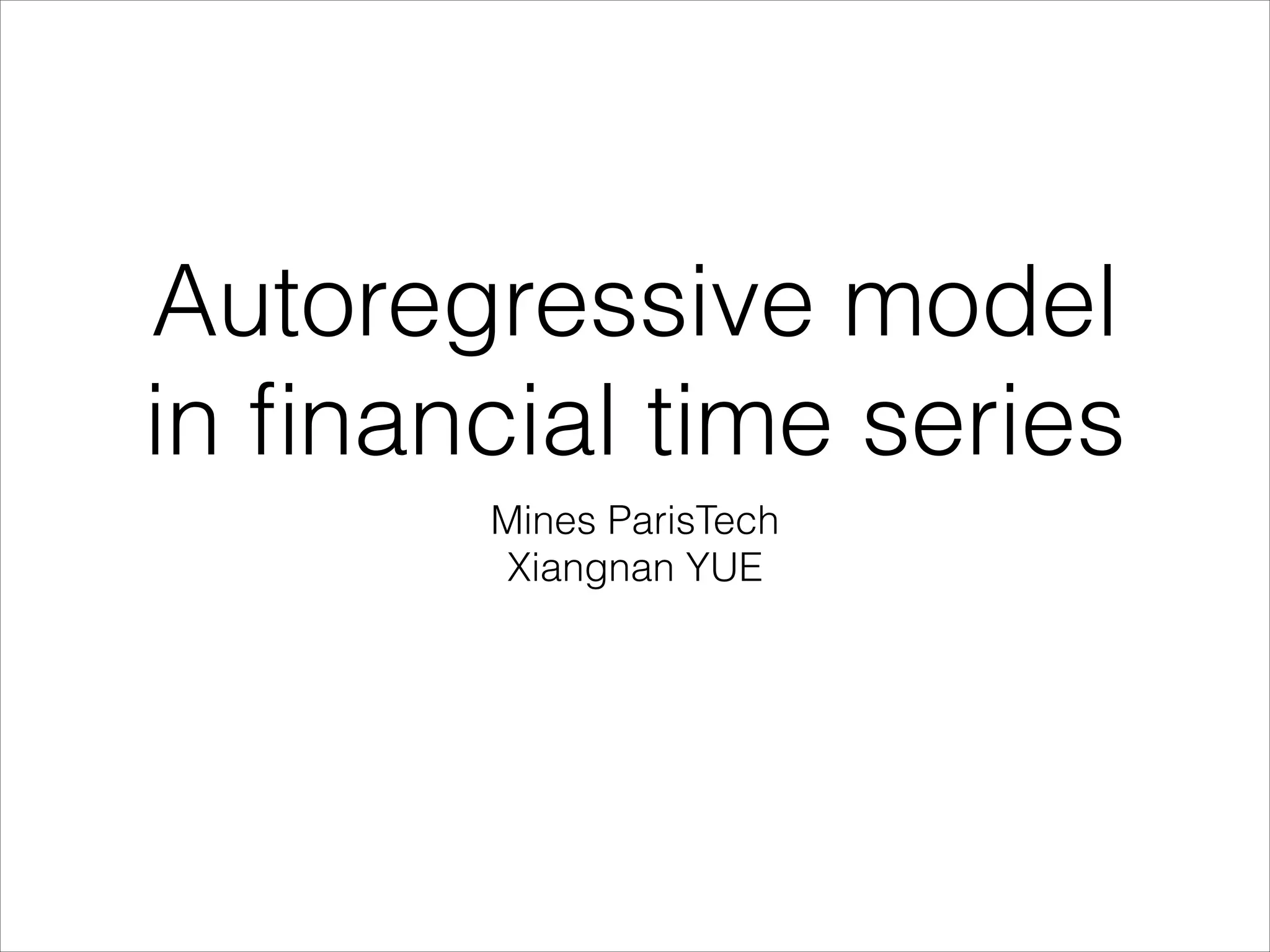

This document discusses autoregressive models for financial time series analysis. It introduces autoregressive (AR) and moving average (MA) processes. The autoregressive integrated moving average (ARIMA) model is presented as a way to fit time series data that accounts for correlation between observations. The document outlines the Box-Jenkins methodology for identifying and fitting an ARIMA model to time series data, including checking for stationarity, identifying orders using autocorrelation and partial autocorrelation functions, and selecting the best model. It applies this process to Shanghai Stock Exchange index data, finding that an ARIMA(48,1,0) model provided the best fit.

![Introduction

• Moving averages and autoregression are two ways to introduce correlation and smoothness

• For example, a moving average can put weight 1/20 on each of [w(t-9), ..., w(t), ..., w(t+10)]](https://image.slidesharecdn.com/arima-171117214436/75/a-brief-introduction-to-Arima-4-2048.jpg)
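As a rough illustration of this slide, the sketch below builds both kinds of series from the same white noise. Only the equal 1/20 weights over w(t-9), ..., w(t+10) come from the slide; the function names, the AR(1) coefficient of 0.9, and the series length are assumptions chosen for the example.

```python
import random


def moving_average(w, back=9, forward=10):
    """Average w[t-back .. t+forward] with equal weights 1/(back+forward+1).

    With the defaults this is the 20-point window from the slide, so each
    term gets weight 1/20. Points without a full window are dropped.
    """
    width = back + forward + 1
    return [sum(w[t - back:t + forward + 1]) / width
            for t in range(back, len(w) - forward)]


def autoregression(w, phi=0.9):
    """AR(1) recursion x[t] = phi * x[t-1] + w[t], started at x[0] = w[0].

    phi = 0.9 is an illustrative value, not taken from the slides.
    """
    x = [w[0]]
    for t in range(1, len(w)):
        x.append(phi * x[-1] + w[t])
    return x


random.seed(0)
white_noise = [random.gauss(0.0, 1.0) for _ in range(500)]

smoothed = moving_average(white_noise)  # neighbouring values share 19 inputs
ar1 = autoregression(white_noise)       # each value carries over 90% of the last

print(len(white_noise), len(smoothed), len(ar1))
```

In both outputs, neighbouring values depend on overlapping pieces of the noise, which is where the correlation and the smoother appearance come from.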

![Introduction

• Sometimes we also look at the partial autocorrelation function (PACF), which removes the influence of intermediate variables.

• for example phi(h) is defined as

• Recalling that E[X(h)|X(h-1)] can be seen as the projection of X(h) onto the X(h-1) plane, PACF(h) can be explained by the following graph](https://image.slidesharecdn.com/arima-171117214436/75/a-brief-introduction-to-Arima-7-2048.jpg)
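The slide's own formula for phi(h) is not reproduced in the transcript, so the following is only a sketch of one standard way to compute the PACF: the Durbin-Levinson recursion applied to the autocorrelations. The function names and the AR(1) test values are assumptions made for illustration.

```python
def sample_acf(x, max_lag):
    """Sample autocorrelations rho(0..max_lag) of a single series."""
    n = len(x)
    mean = sum(x) / n
    c0 = sum((v - mean) ** 2 for v in x) / n
    return [
        sum((x[t] - mean) * (x[t + k] - mean) for t in range(n - k)) / n / c0
        for k in range(max_lag + 1)
    ]


def pacf_durbin_levinson(rho):
    """Partial autocorrelations phi_11 .. phi_HH from rho[0..H].

    prev holds the coefficients phi_{h-1,1} .. phi_{h-1,h-1} of the best
    linear predictor built from the h-1 intermediate values.
    """
    pacf = []
    prev = []
    for h in range(1, len(rho)):
        num = rho[h] - sum(prev[j] * rho[h - 1 - j] for j in range(h - 1))
        den = 1.0 - sum(prev[j] * rho[j + 1] for j in range(h - 1))
        phi_hh = num / den
        # Update the predictor coefficients for the next lag.
        prev = [prev[j] - phi_hh * prev[h - 2 - j] for j in range(h - 1)] + [phi_hh]
        pacf.append(phi_hh)
    return pacf


# Theoretical ACF of an AR(1) process with coefficient 0.5: rho(h) = 0.5 ** h.
rho_ar1 = [0.5 ** h for h in range(4)]
print(pacf_durbin_levinson(rho_ar1))
```

For an AR(1) process the partial autocorrelation is nonzero at lag 1 only, because once X(t-1) is accounted for, earlier values add nothing. This cut-off is what makes the PACF useful for choosing the AR order.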

![Introduction

• Yet with only one realization at each time t, estimating cov(x(t), x(t-k)) becomes feasible if we assume weak stationarity.

• Weak stationarity requires that

• the mean function E[X(t)]

• the auto-covariance function Cov(X(t+k), X(t))

• stay the same for different t.](https://image.slidesharecdn.com/arima-171117214436/75/a-brief-introduction-to-Arima-9-2048.jpg)
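To make the role of the assumption concrete, here is a minimal sketch of the sample autocovariance, which replaces the unavailable average over realizations with an average over t. That substitution only makes sense if the mean and Cov(X(t+k), X(t)) do not depend on t. The helper names and the comparison of the two halves of a series are illustrative assumptions, not a formal stationarity test.

```python
import random


def sample_mean(x):
    return sum(x) / len(x)


def sample_autocov(x, k):
    """Estimate Cov(X(t+k), X(t)) by averaging over t within one realization."""
    n = len(x)
    mean = sample_mean(x)
    return sum((x[t + k] - mean) * (x[t] - mean) for t in range(n - k)) / n


def compare_halves(x, k=1):
    """Return (mean, lag-k autocovariance) for each half of the series.

    Under weak stationarity the two halves should give similar values.
    """
    half = len(x) // 2
    first, second = x[:half], x[half:]
    return ((sample_mean(first), sample_autocov(first, k)),
            (sample_mean(second), sample_autocov(second, k)))


random.seed(1)
noise = [random.gauss(0.0, 1.0) for _ in range(2000)]

# Random walk: cumulative sum of the noise, a standard non-stationary example.
walk = []
total = 0.0
for w in noise:
    total += w
    walk.append(total)

print("white noise halves:", compare_halves(noise))
print("random walk halves:", compare_halves(walk))
```

The white-noise halves should give similar values, while the random-walk halves typically differ noticeably. This is why a series is usually differenced before its autocovariances are interpreted.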