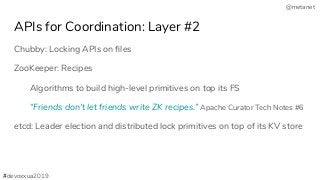

The evolution of distributed coordination tools shows that high-level APIs ease implementation of coordination tasks, such as leader election, locking, synchronized actions. For instance, the Chubby paper highlights familiarity of lock-based interfaces. Similarly, Apache Curator hides complexity of ZooKeeper recipes behind Java APIs, while etcd and Consul implement concurrency primitives on their own.

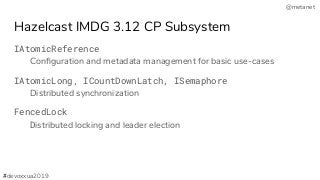

A different path in this journey would be extending the long-lasting java.util.concurrent APIs, such as Lock, Semaphore, etc. Simplicity of these APIs makes them very useful in distributed coordination use cases.

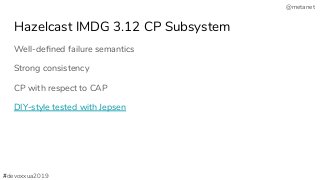

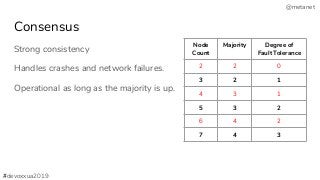

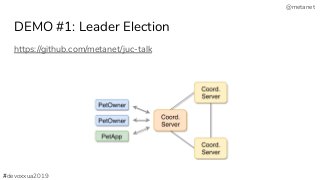

Join this talk to explore Hazelcast’s brand new implementation of java.util.concurrent APIs on top of the Raft consensus algorithm. I will walk through code samples to demonstrate how Java locks, semaphores, etc. can be used in distributed environments that involve partial failures. I will also share our experience of how we coped with several challenges we faced while developing and testing our Raft implementation.