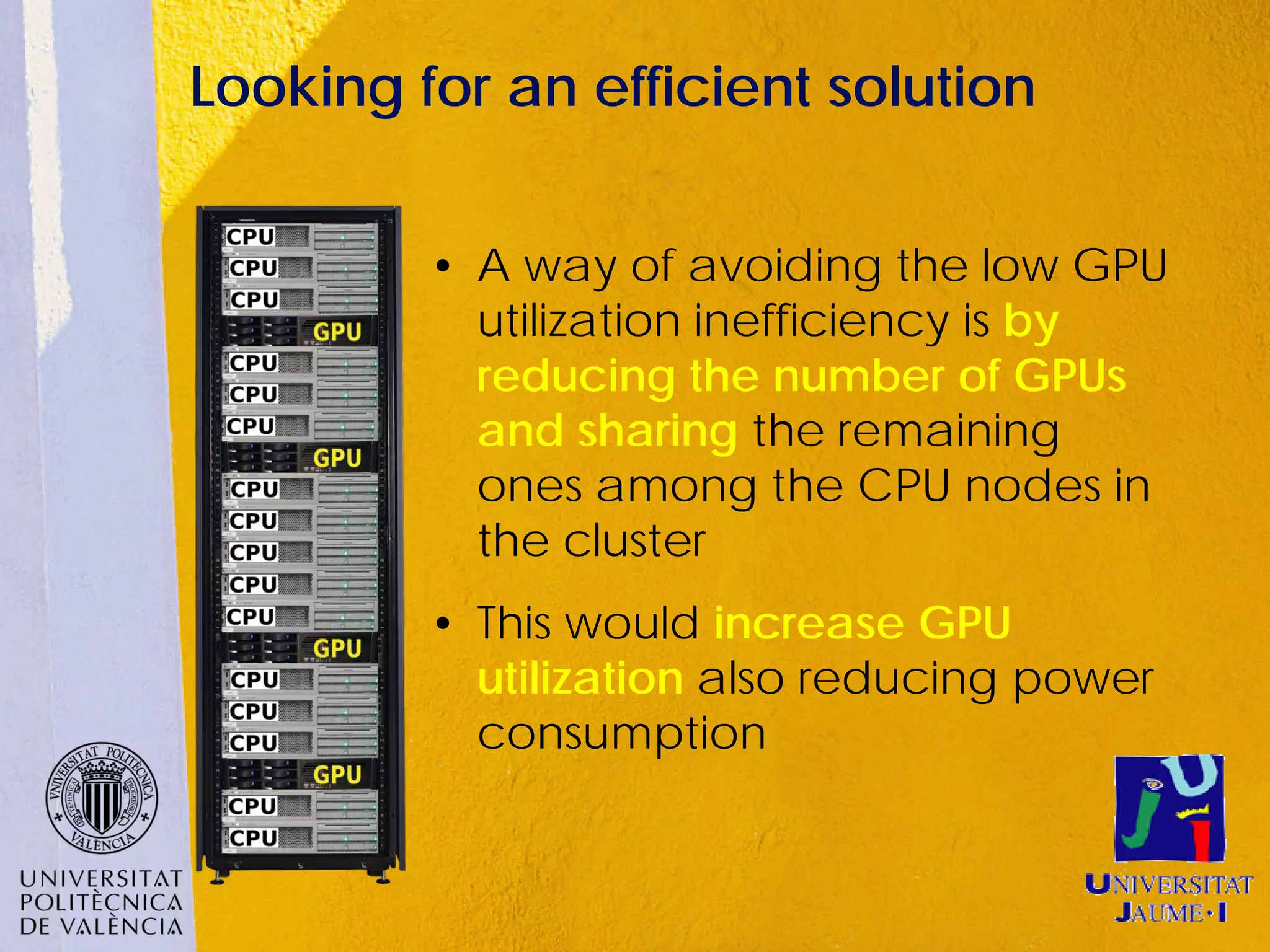

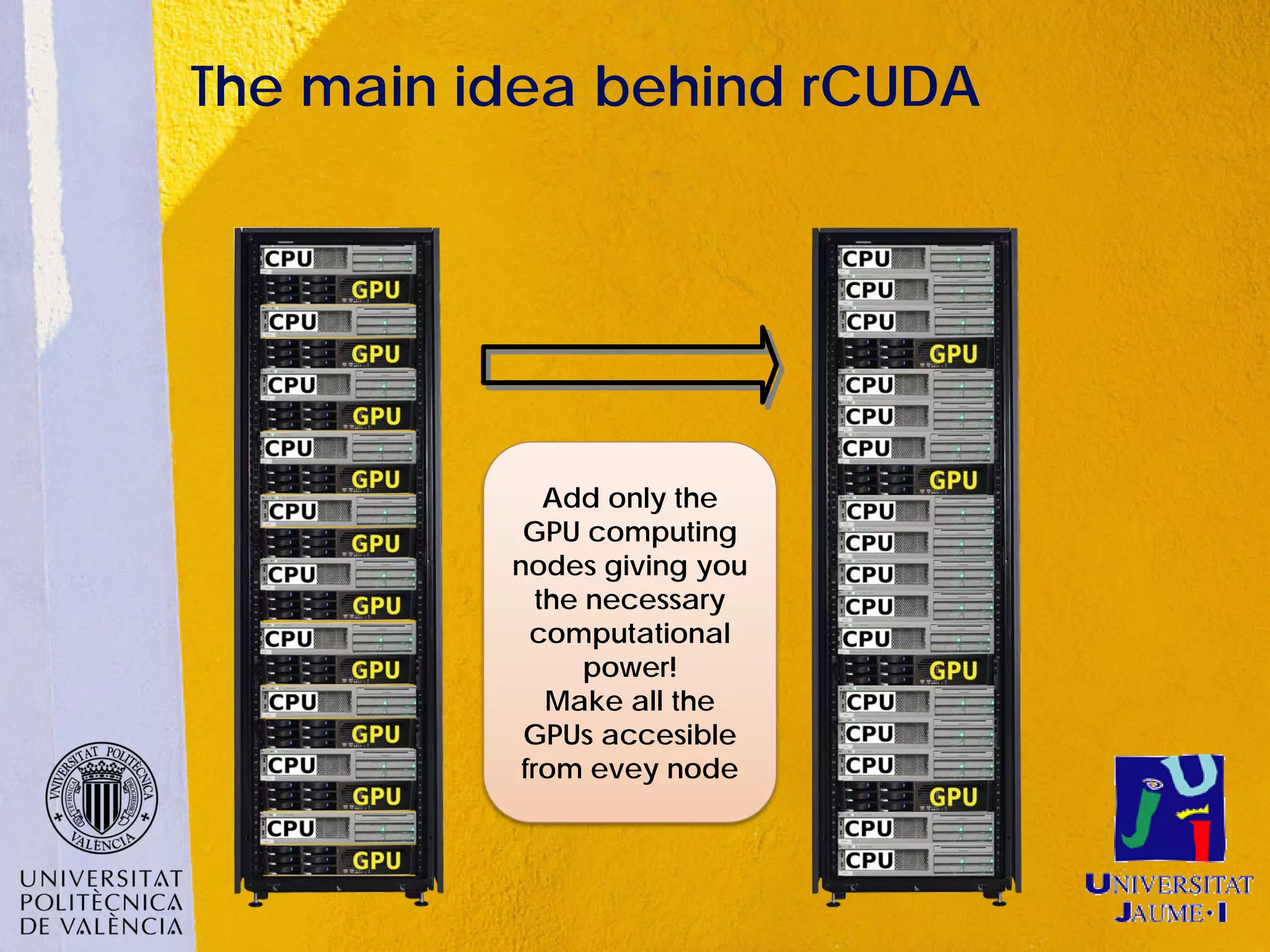

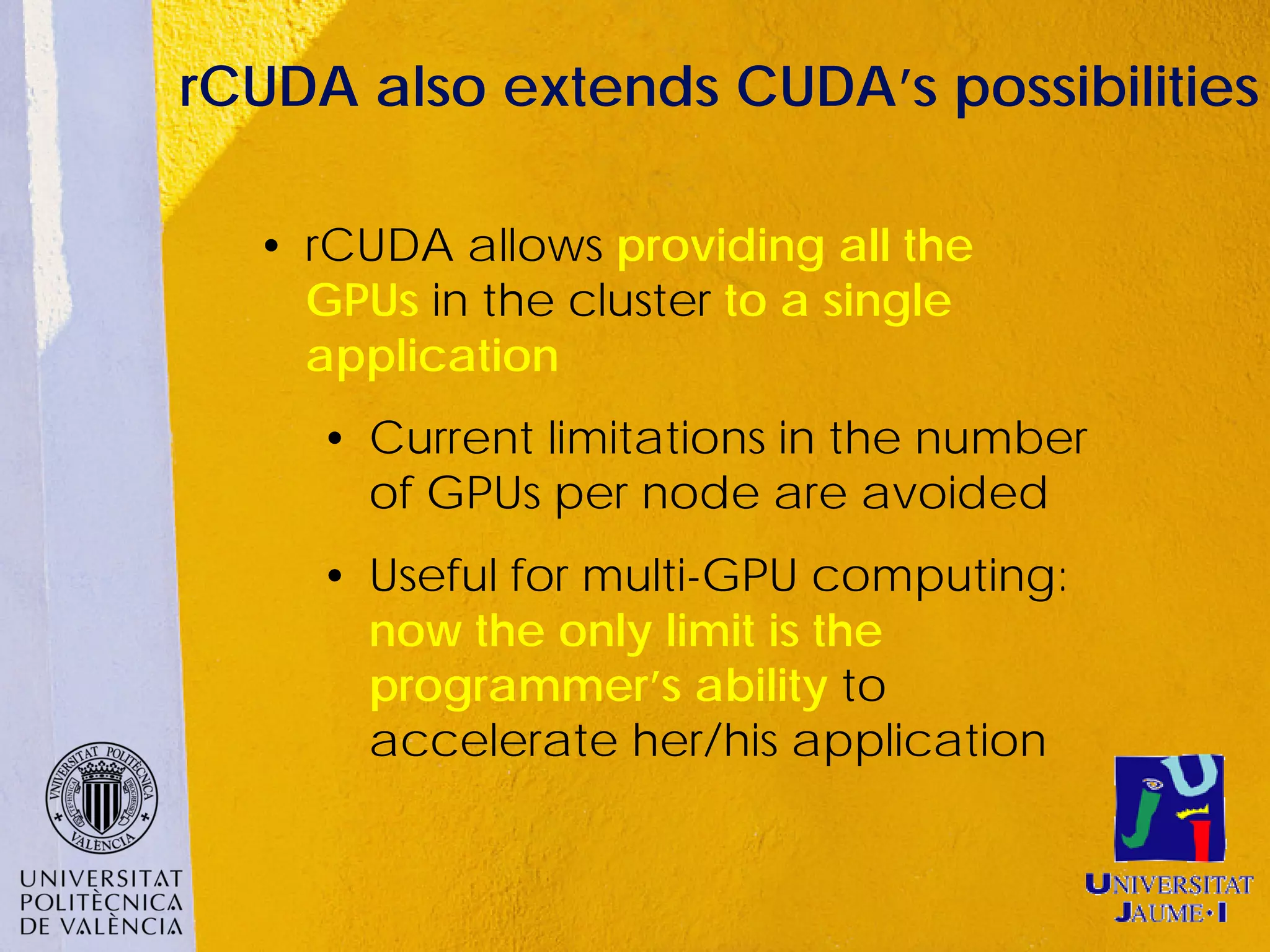

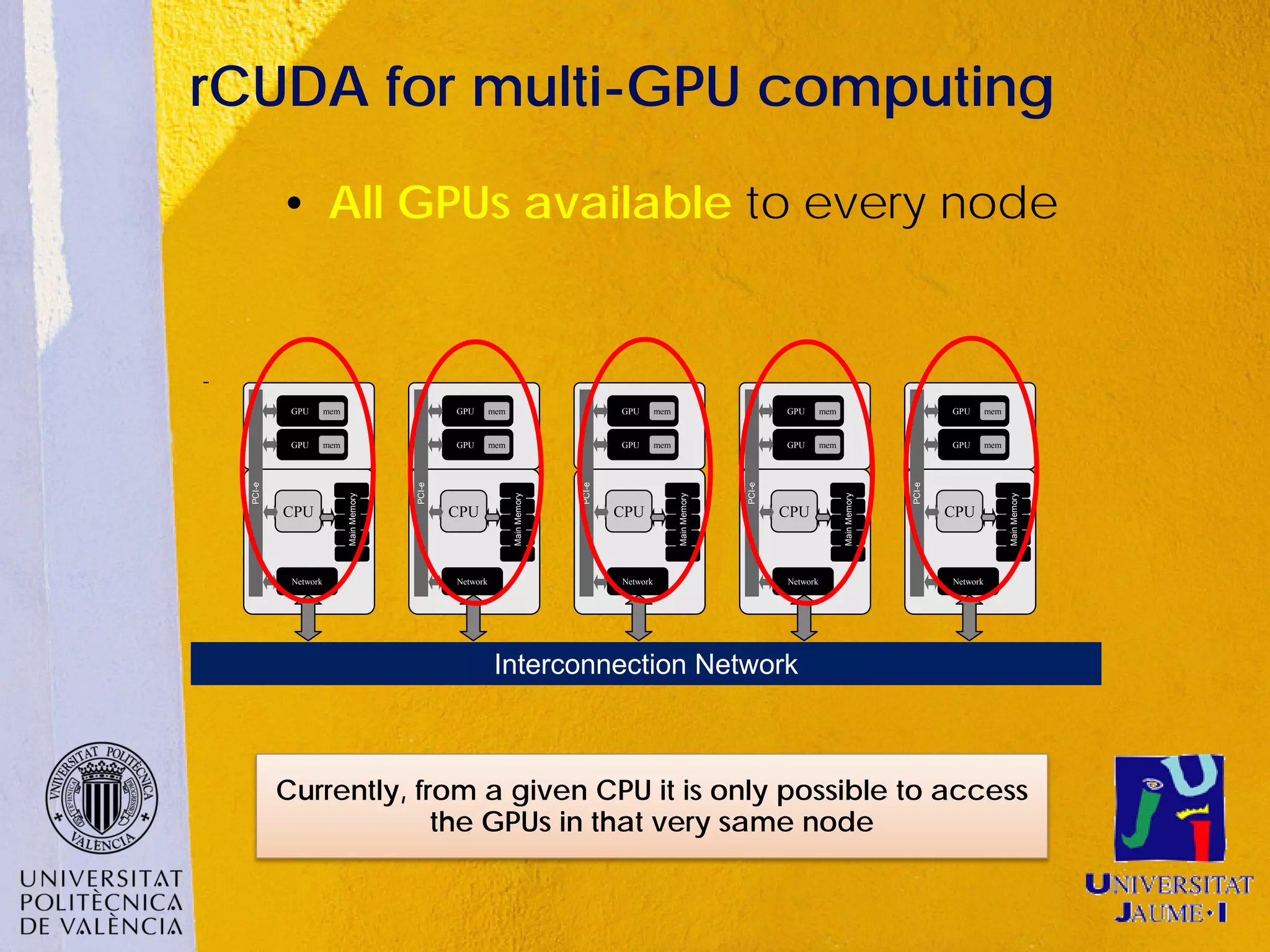

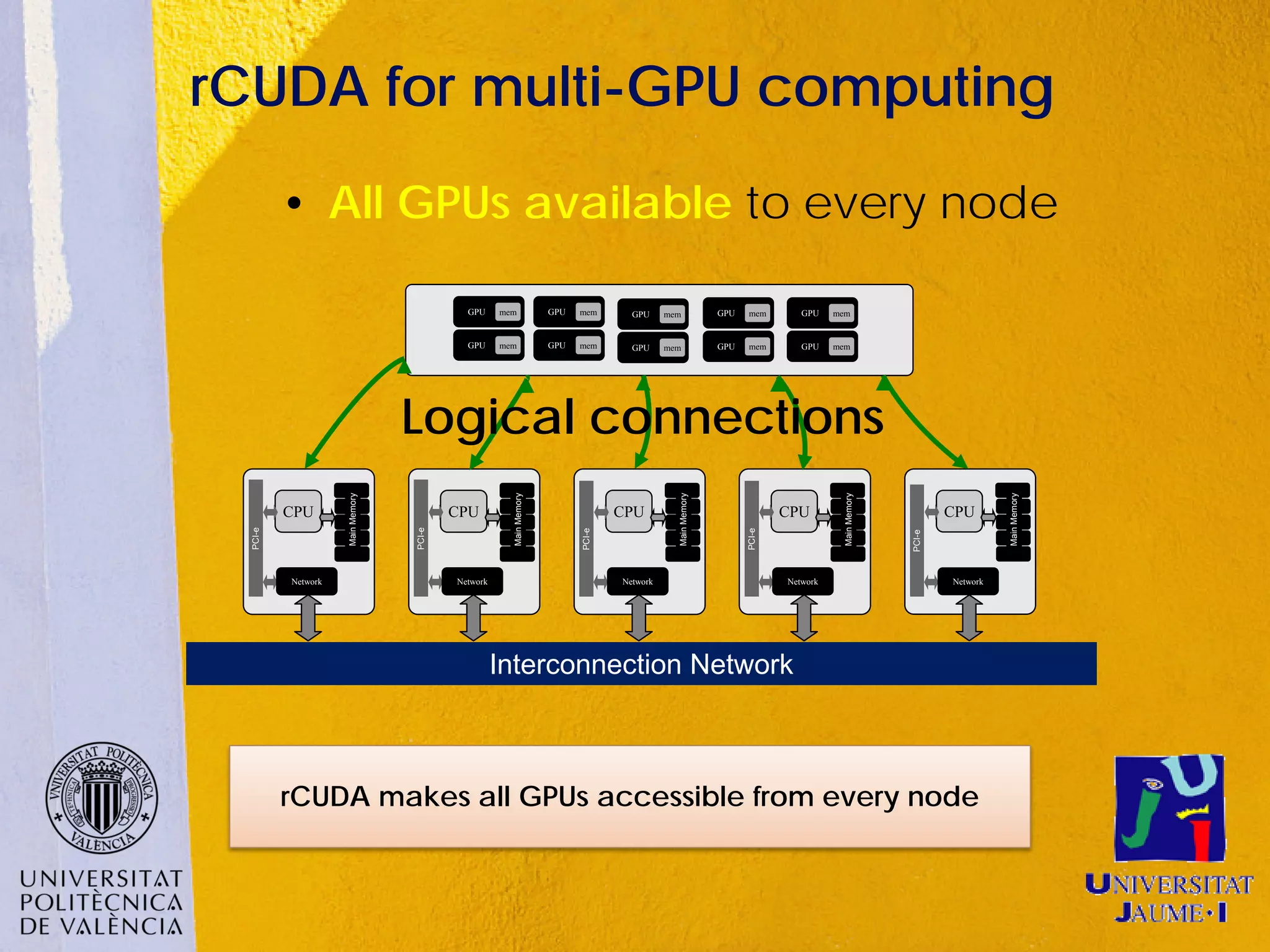

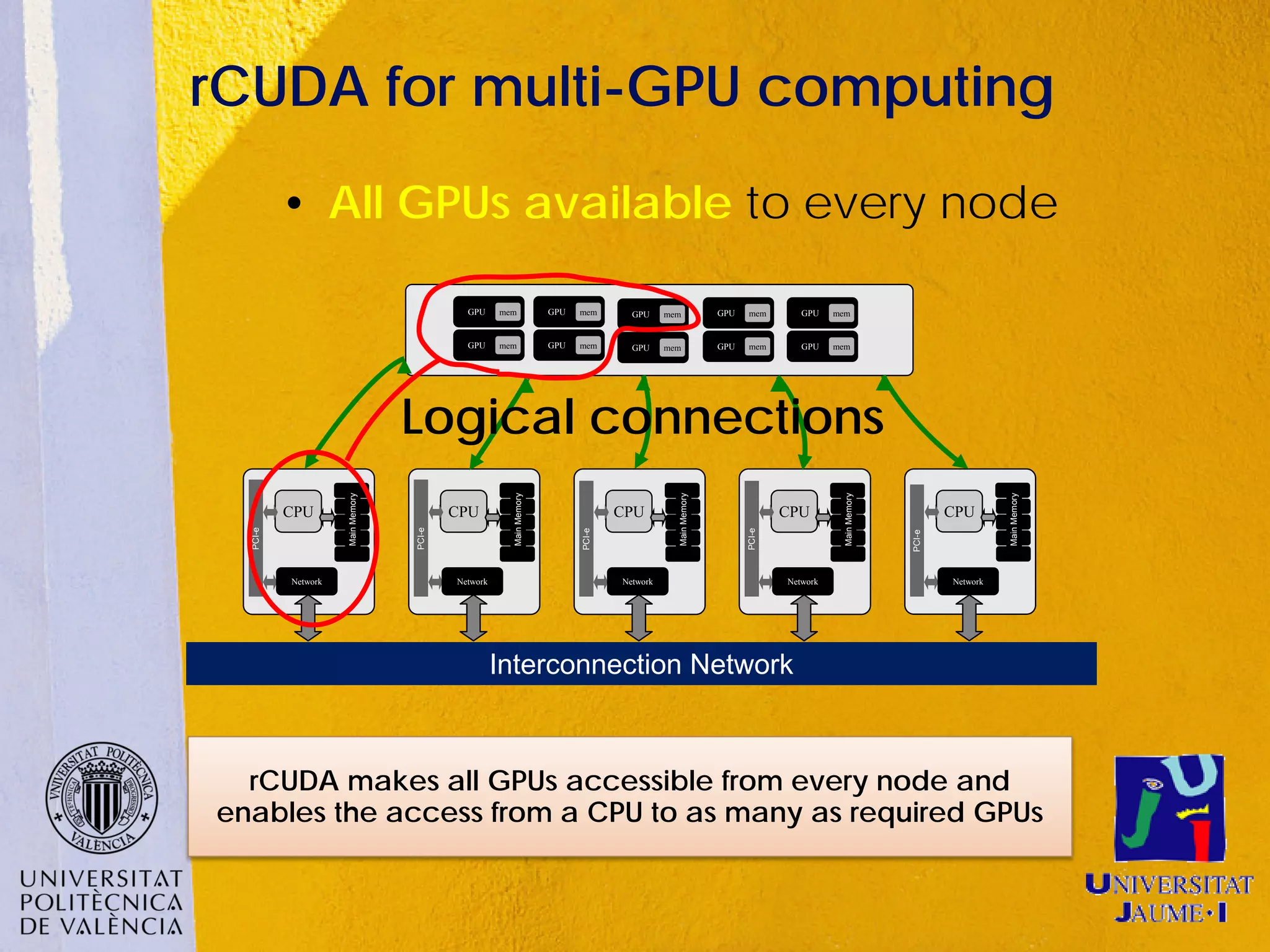

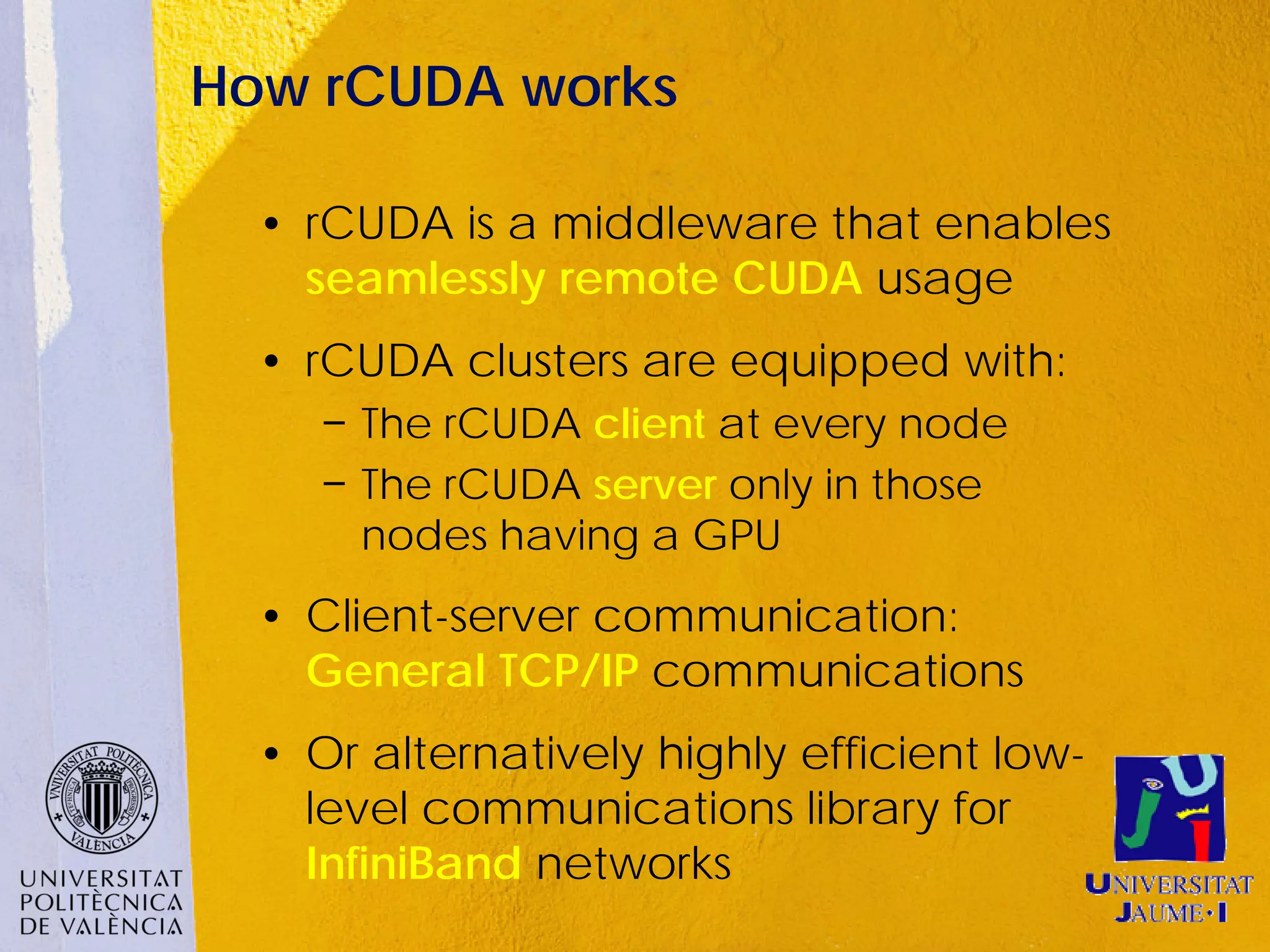

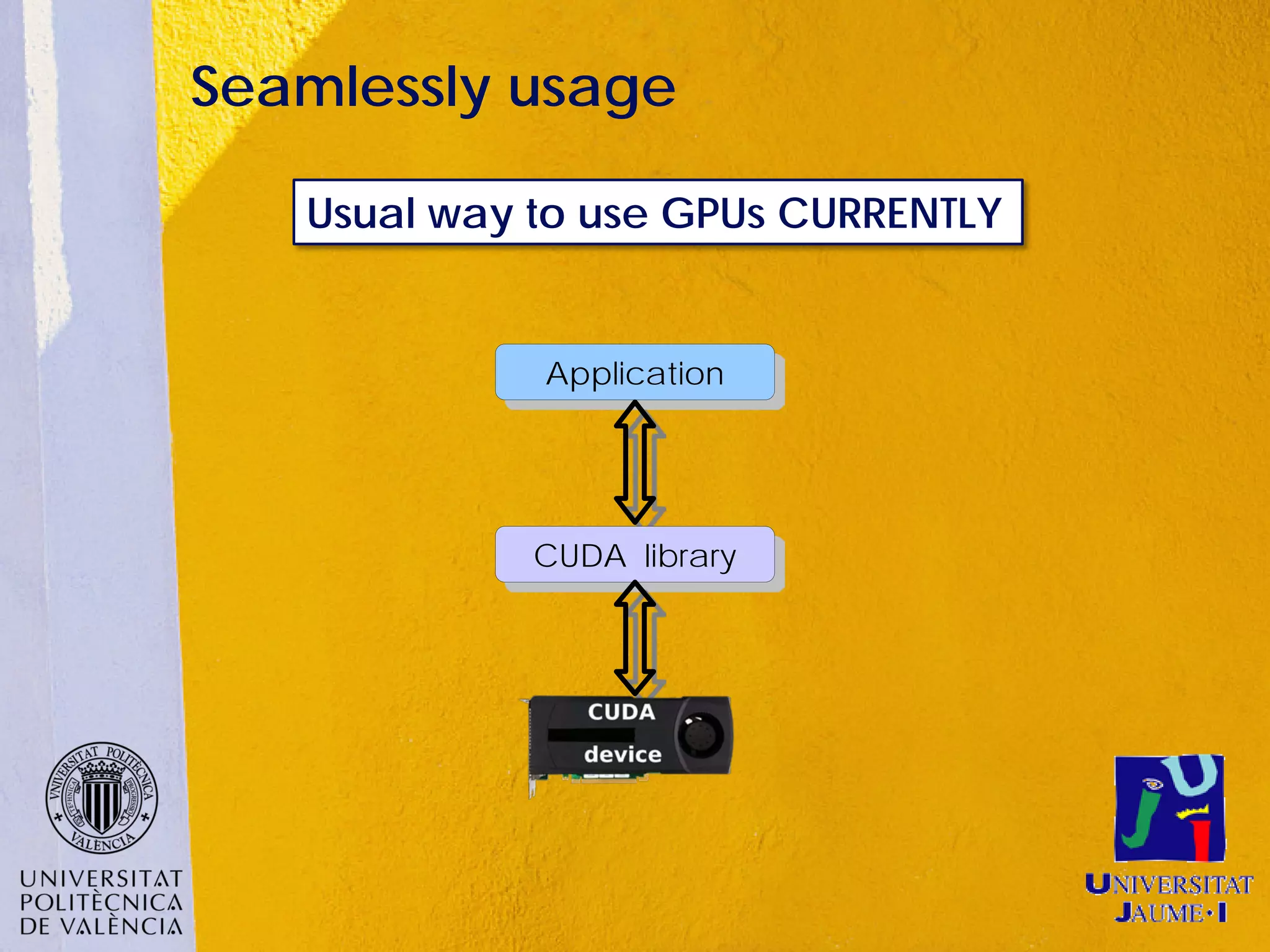

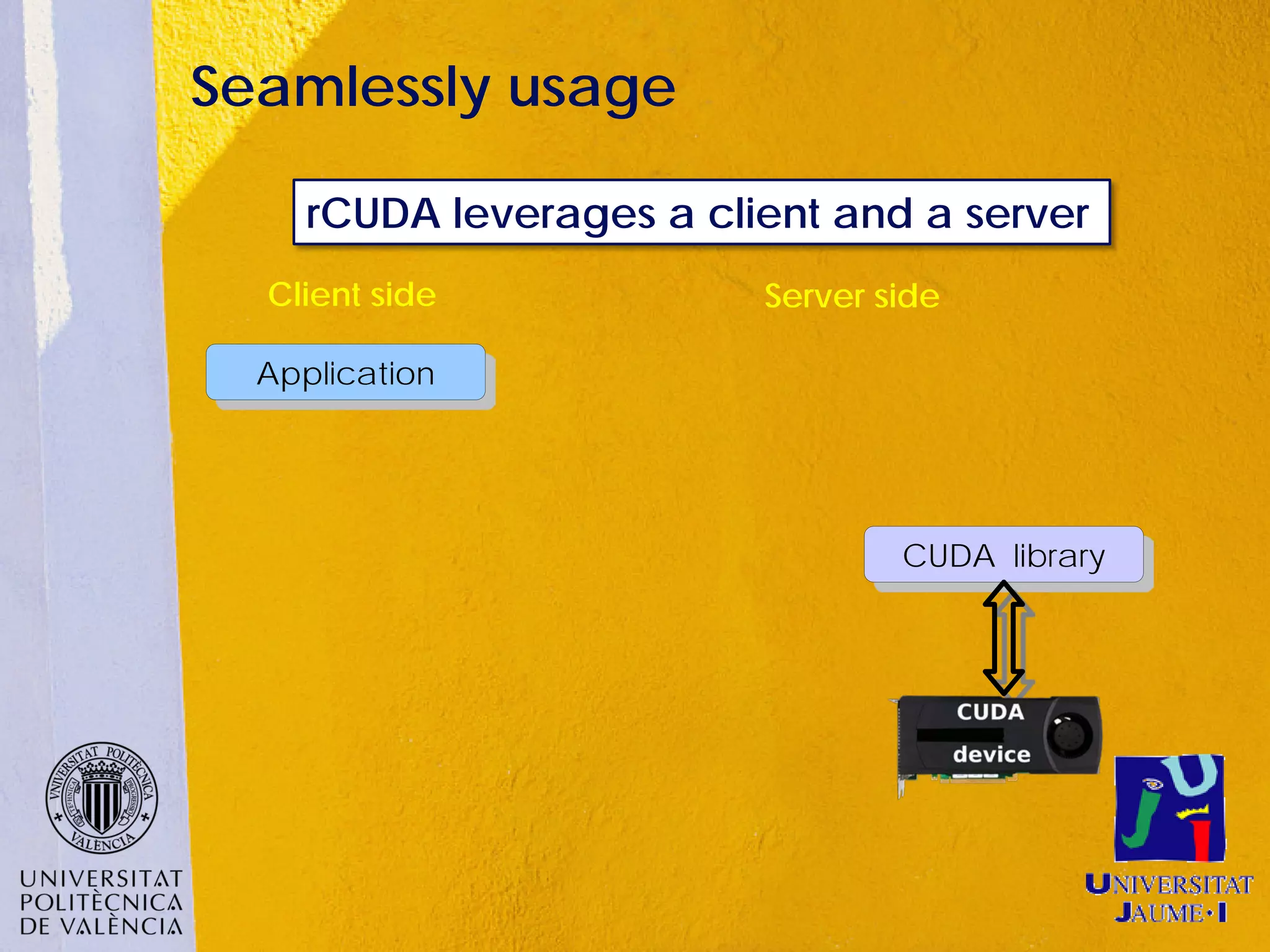

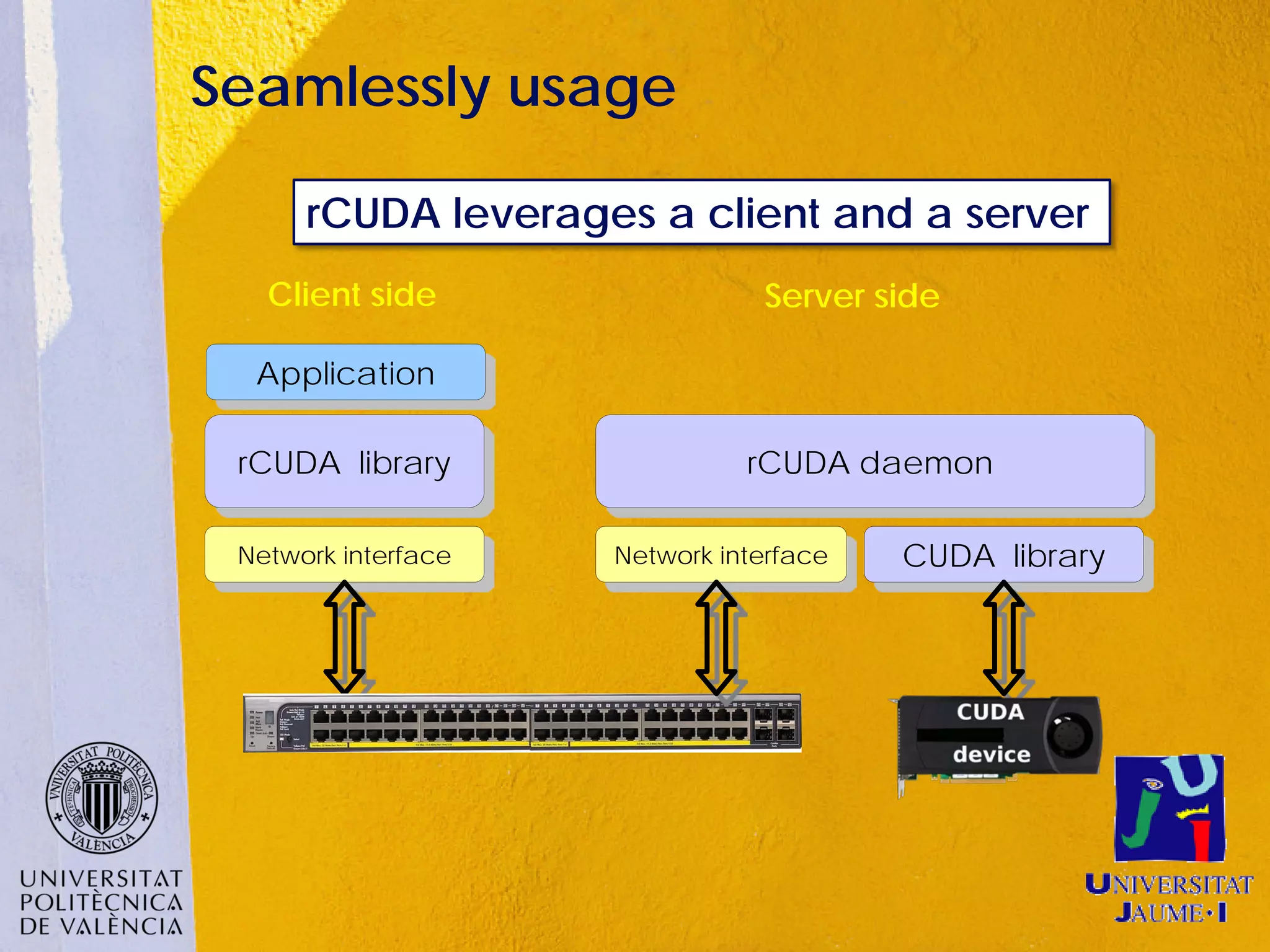

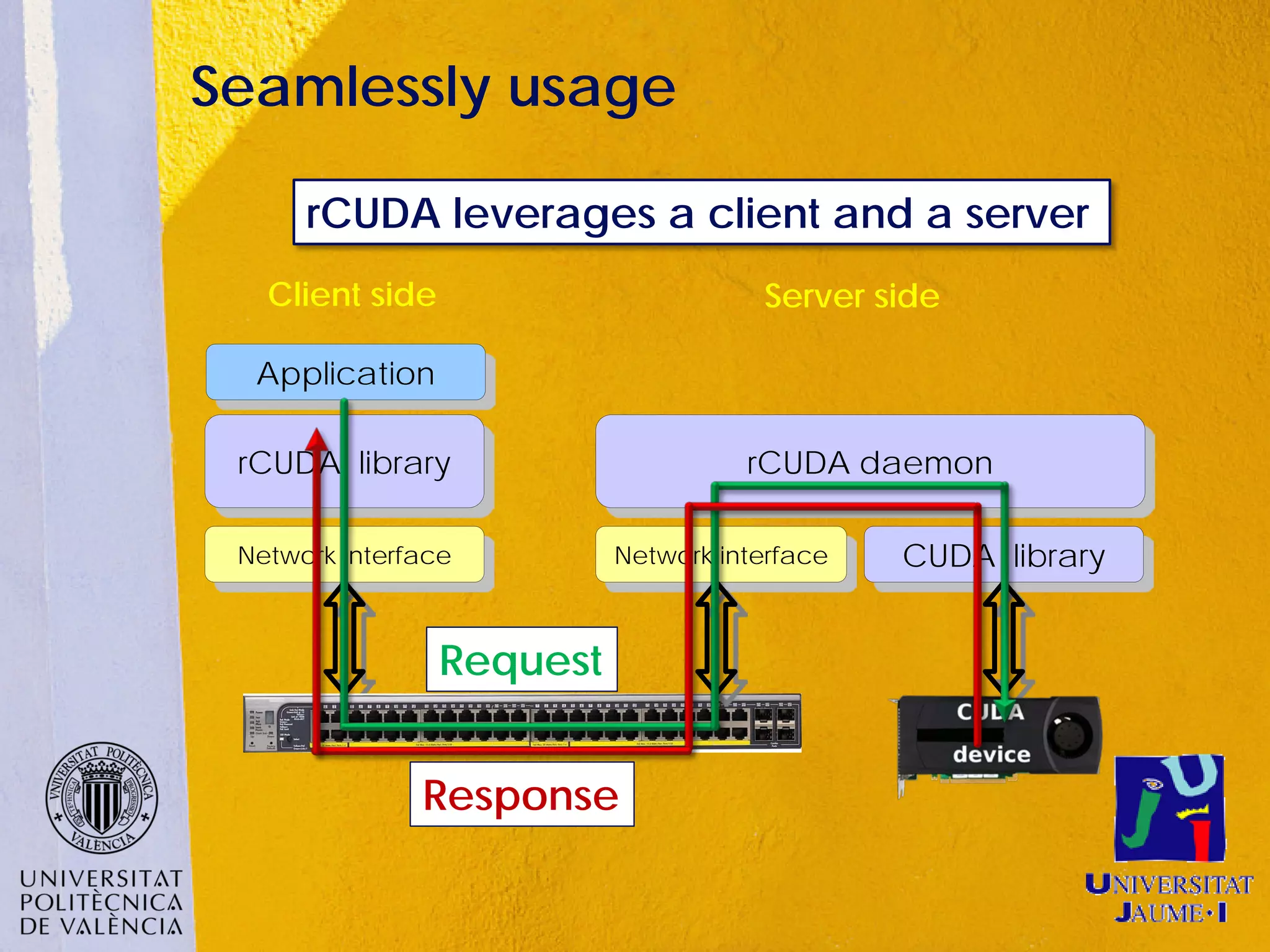

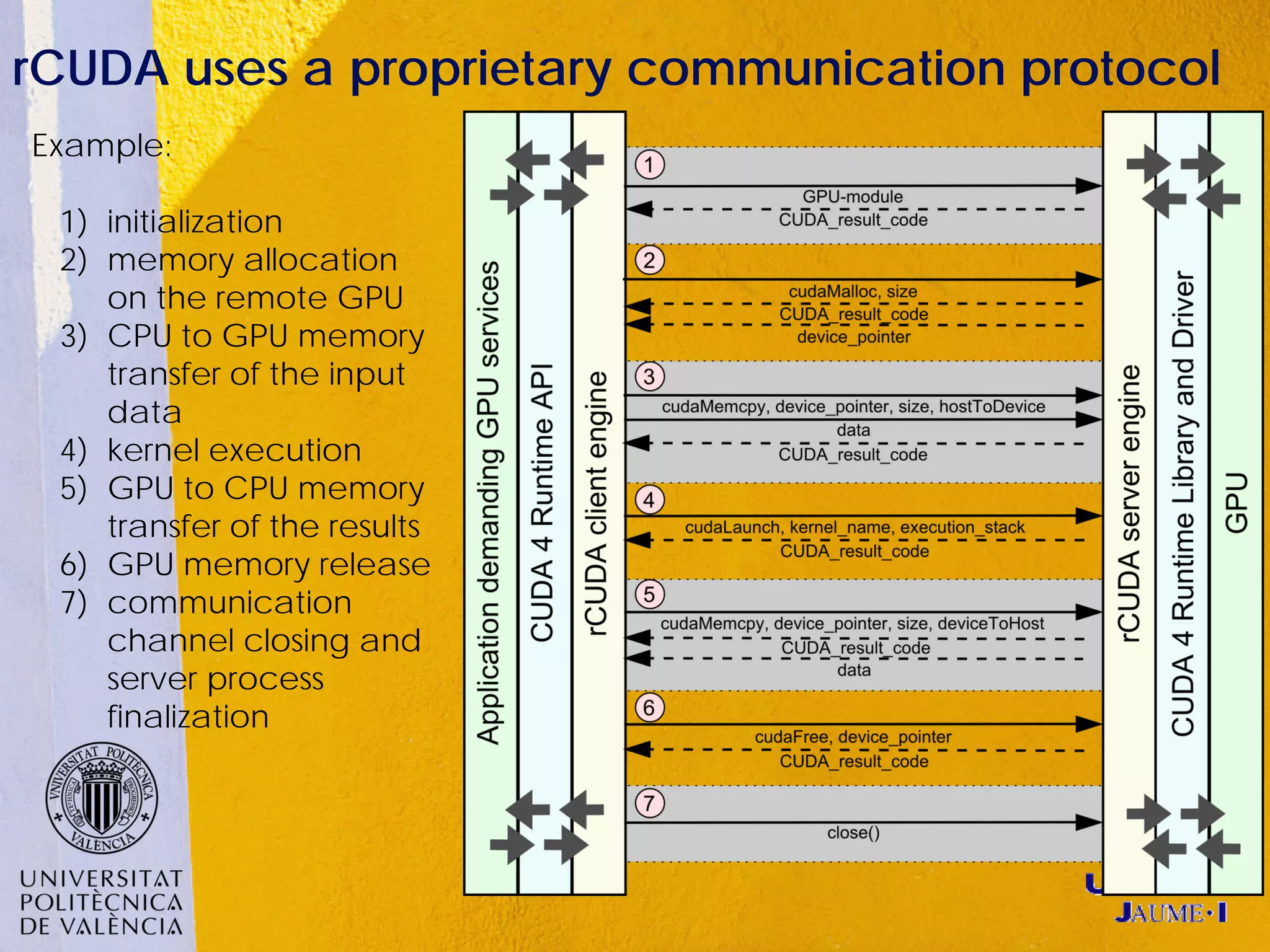

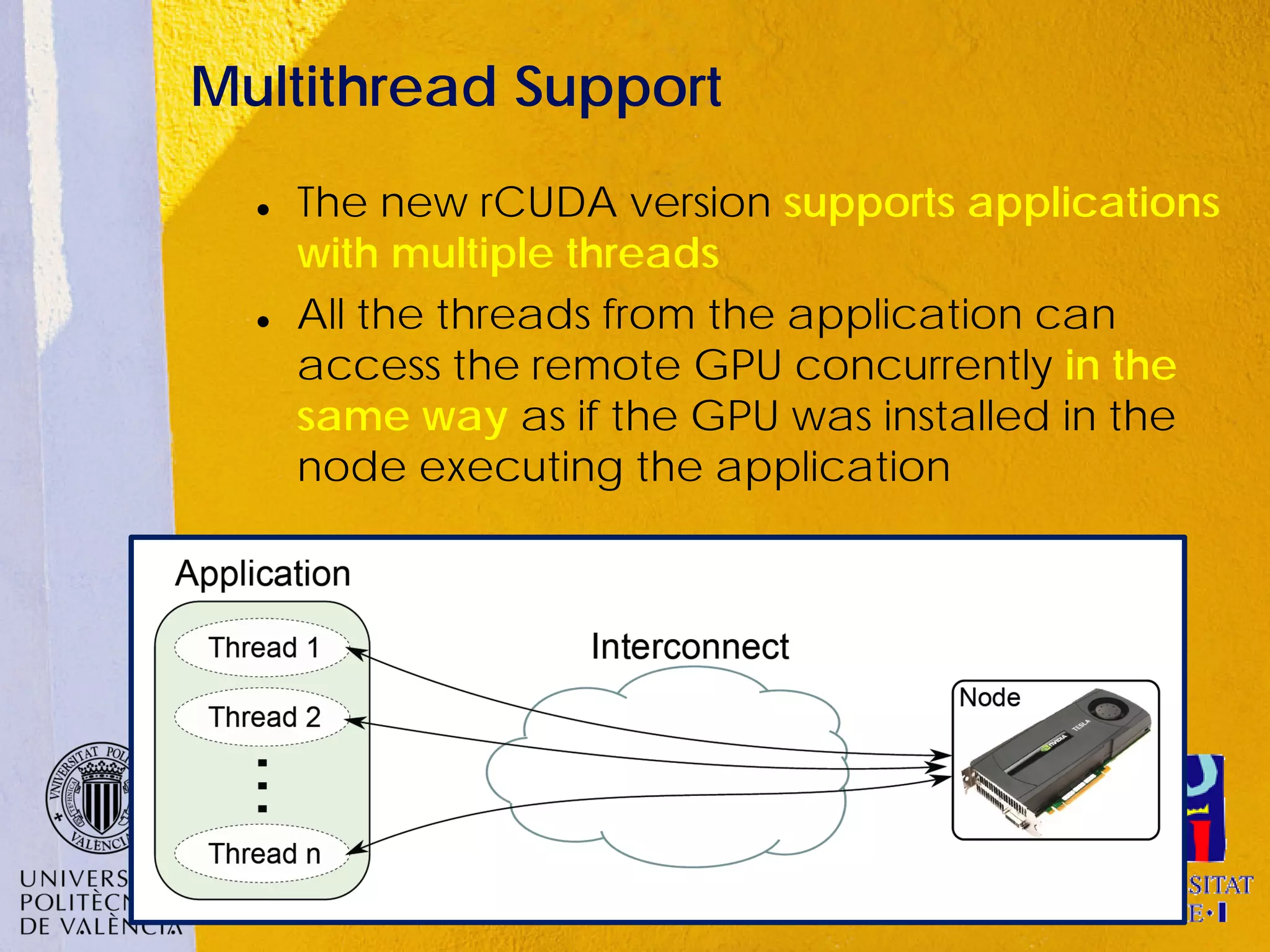

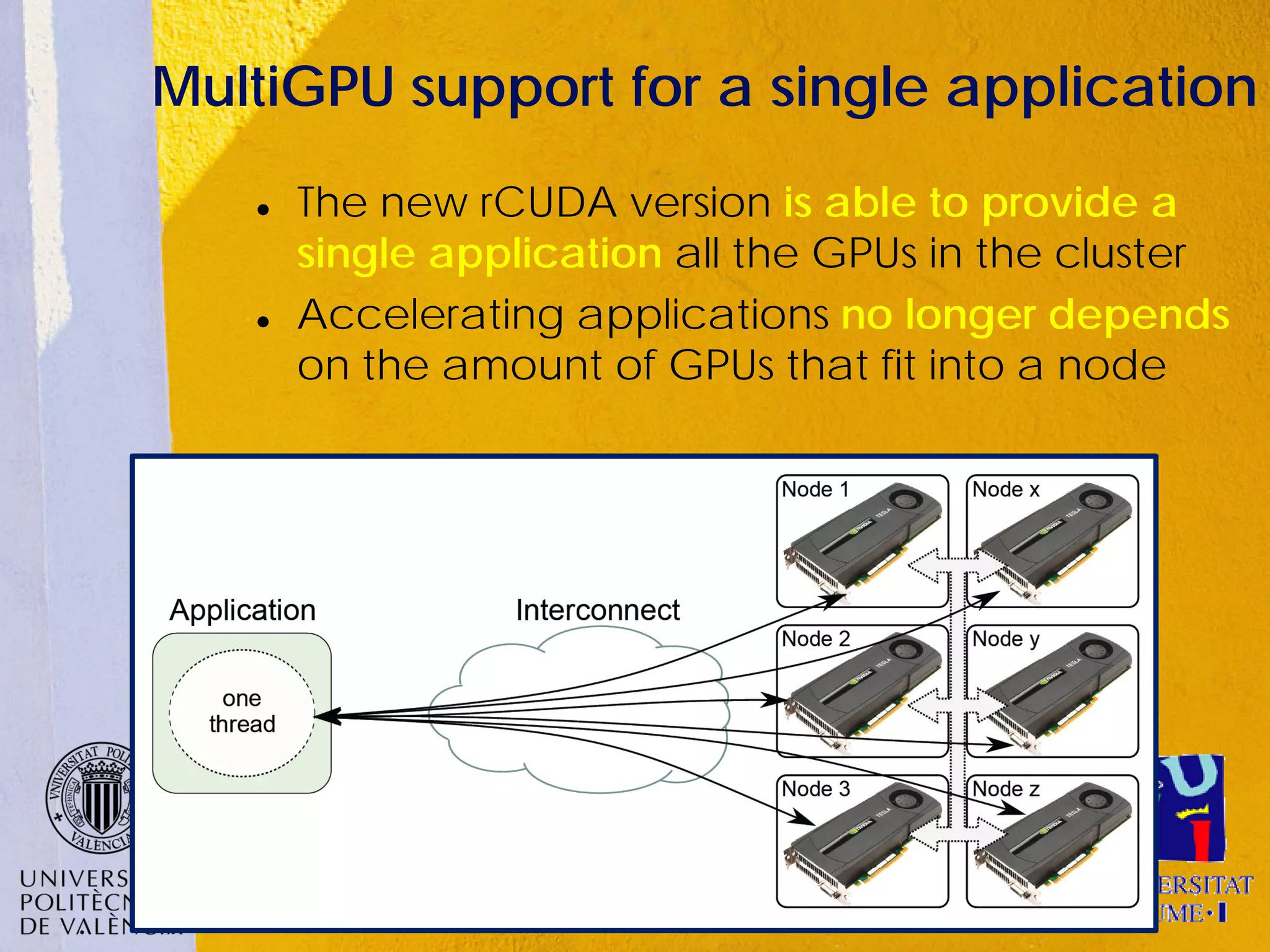

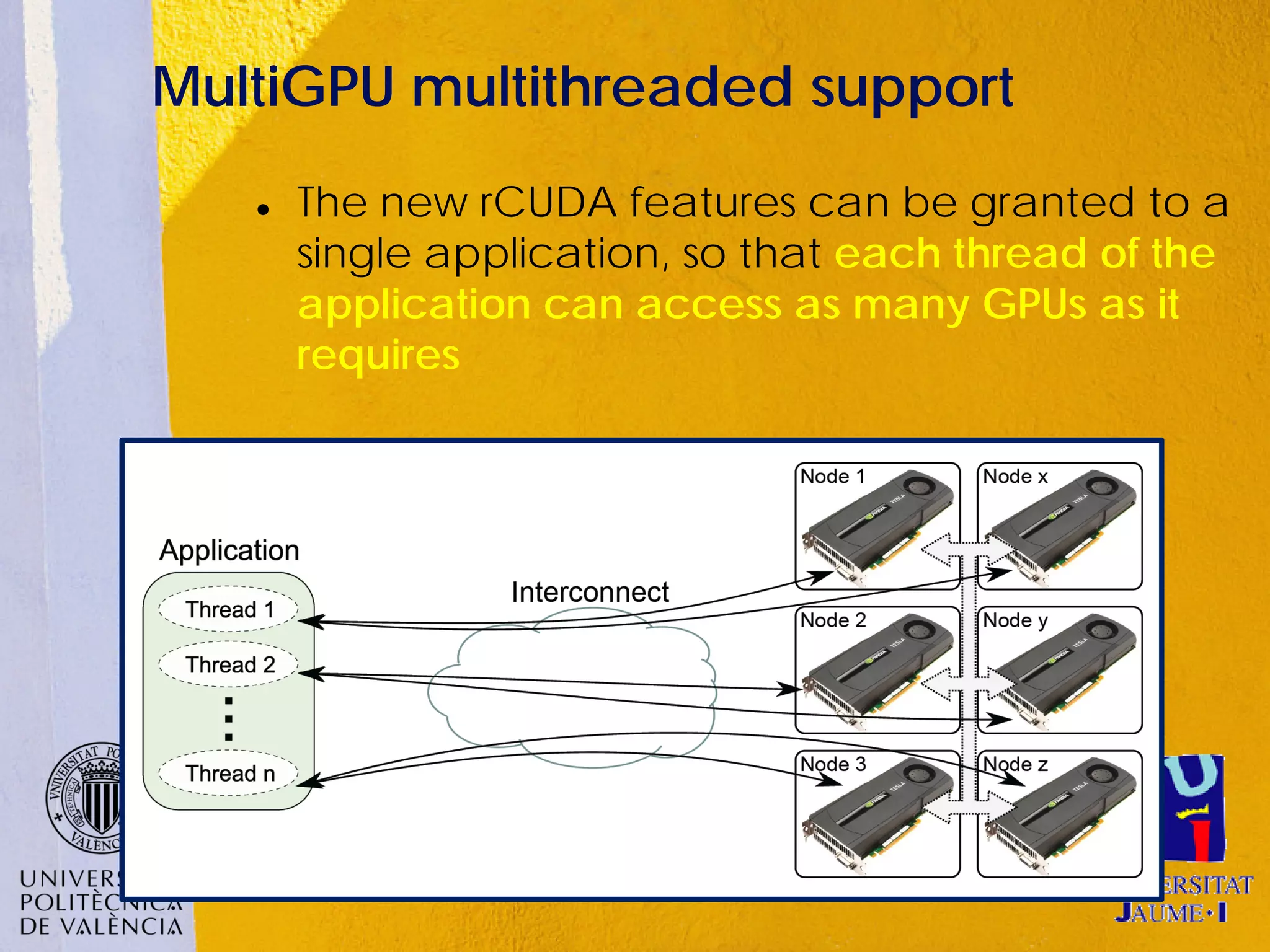

This document discusses sharing GPUs across a cluster using rCUDA. rCUDA lets an application running on any node of a cluster use GPUs installed in other nodes, so GPUs are accessed remotely instead of only locally. This raises GPU utilization and lowers costs, because the cluster needs fewer GPUs in total. rCUDA extends CUDA by making every GPU in the cluster available to applications running on any node. This "GPU as a service" approach can also improve performance for multi-GPU applications, which gain access to more GPUs than are physically present in their own node.
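The general idea of accessing a GPU that lives in another node can be illustrated with API remoting. A client-side stub exposes GPU-style calls, serializes each call, and forwards it to a server that owns the device. The sketch below is a toy under stated assumptions: it is not rCUDA's code, protocol, or API. `FakeGPUServer`, `RemoteGPUClient`, and the operation names are hypothetical, the "GPU" is simulated with Python lists, and JSON passed through an in-process function call stands in for the real network transport.

```python
"""Toy sketch of API remoting, the general idea behind remote GPU access.

Assumption: this is NOT rCUDA's implementation or protocol. All class and
operation names are hypothetical; device memory and the "kernel" are
simulated, and a plain function call replaces the network transport.
"""
import json


class FakeGPUServer:
    """Runs on the node that physically hosts the GPU (simulated here)."""

    def __init__(self, num_devices):
        self.num_devices = num_devices
        self.memory = {}       # handle -> list of floats (simulated device memory)
        self.next_handle = 0

    def handle(self, message):
        """Decode one serialized request, execute it, return a serialized reply."""
        request = json.loads(message)
        op, args = request["op"], request["args"]
        if op == "get_device_count":
            result = self.num_devices
        elif op == "malloc_and_copy":
            handle = self.next_handle
            self.next_handle += 1
            self.memory[handle] = list(args["data"])
            result = handle
        elif op == "scale":
            # Stand-in for a kernel launch: multiply every element of a buffer.
            buf = self.memory[args["handle"]]
            self.memory[args["handle"]] = [x * args["factor"] for x in buf]
            result = None
        elif op == "copy_back":
            result = self.memory[args["handle"]]
        else:
            raise ValueError(f"unknown op {op!r}")
        return json.dumps({"result": result})


class RemoteGPUClient:
    """Client-side stub: the calls an app would make locally, forwarded remotely."""

    def __init__(self, transport):
        self.transport = transport  # callable: request string -> reply string

    def _call(self, op, **args):
        reply = self.transport(json.dumps({"op": op, "args": args}))
        return json.loads(reply)["result"]

    def get_device_count(self):
        return self._call("get_device_count")

    def malloc_and_copy(self, data):
        return self._call("malloc_and_copy", data=data)

    def scale(self, handle, factor):
        self._call("scale", handle=handle, factor=factor)

    def copy_back(self, handle):
        return self._call("copy_back", handle=handle)


if __name__ == "__main__":
    server = FakeGPUServer(num_devices=2)
    client = RemoteGPUClient(transport=server.handle)
    print(client.get_device_count())
    buf = client.malloc_and_copy([1.0, 2.0, 3.0])
    client.scale(buf, 2.0)
    print(client.copy_back(buf))
```

The application only ever talks to the client stub, so whether the device is local or in another node is hidden behind the transport. In this toy model, an application node could hold one client per remote server to reach more GPUs than it has locally.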

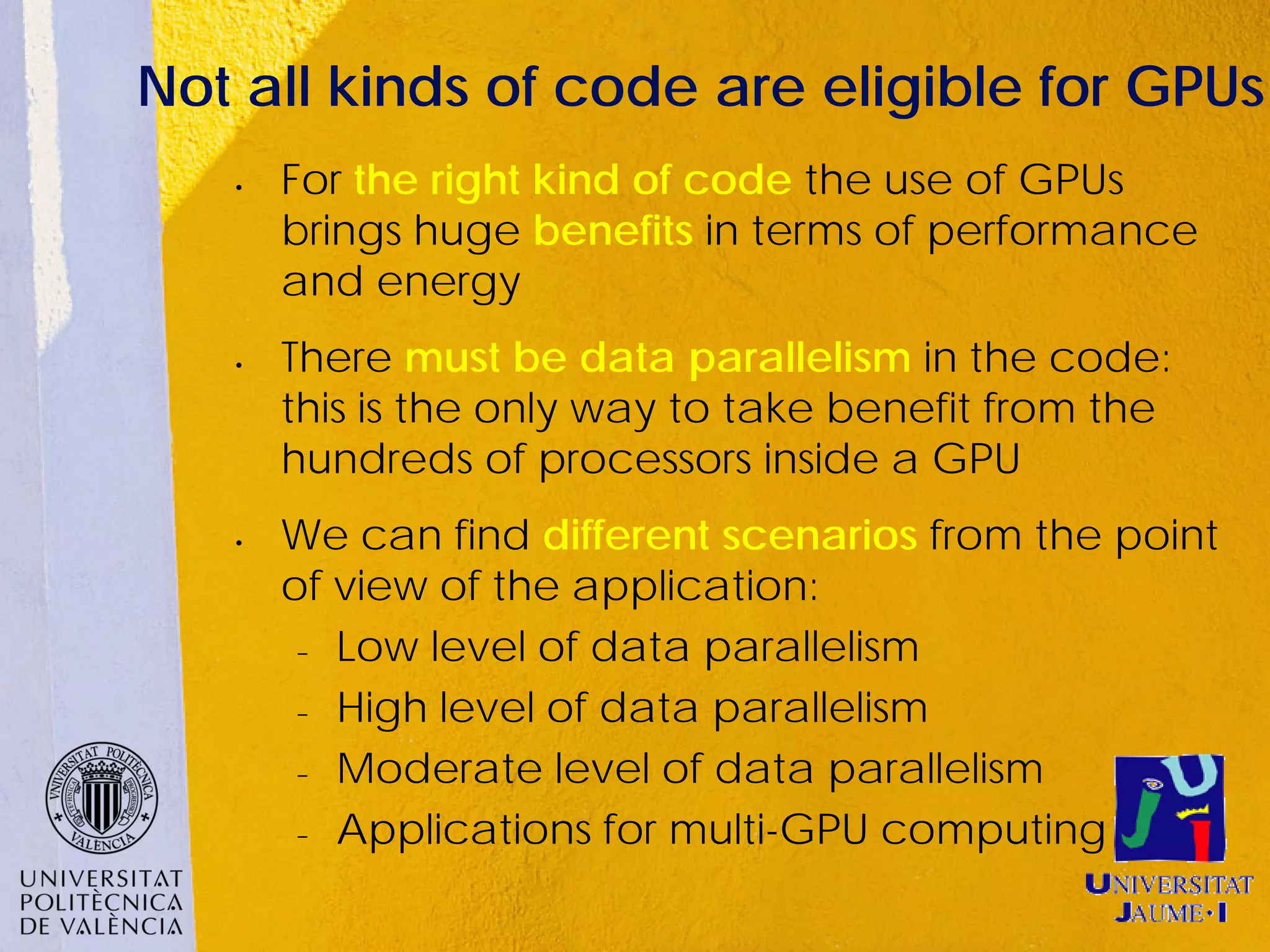

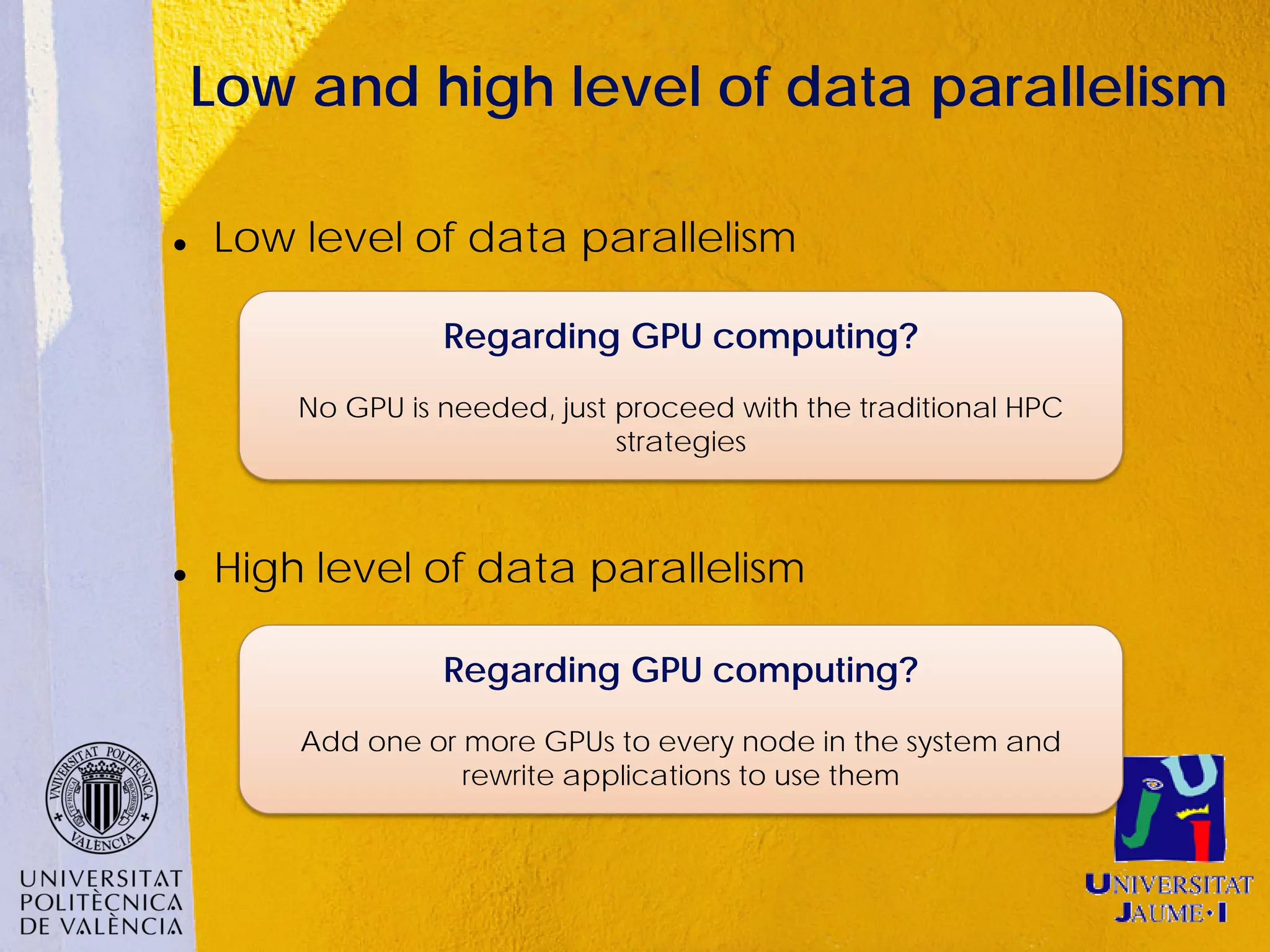

![Moderate level of data parallelism](https://image.slidesharecdn.com/rcudapresentationibfeatures120704-120705112335-phpapp01/75/R-cuda-presentation_ib_features_120704-8-2048.jpg)

Moderate level of data parallelism: the application presents data parallelism of around 40%-80%. This is the common case in GPU computing. The GPUs in the system are used only for some parts of the application and remain idle the rest of the time.
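Why a GPU sits idle in this case can be made concrete with a short calculation. Assume (illustratively; these are not figures from the slides) that a fraction p of an application's CPU-only run time is data-parallel and is offloaded to the GPU with speedup s, that the remaining 1 - p runs on the CPU while the GPU waits, and that CPU and GPU work do not overlap. The GPU is then busy for a fraction (p/s) / ((1 - p) + p/s) of the accelerated run time. The helper name `gpu_busy_fraction` is hypothetical.

```python
"""Back-of-the-envelope GPU busy fraction for a partly data-parallel app.

Assumptions (illustrative only, not measurements):
- fraction p of the CPU-only run time is data-parallel and offloaded
  to the GPU with speedup s;
- the remaining (1 - p) runs on the CPU while the GPU waits;
- CPU and GPU work do not overlap.
"""


def gpu_busy_fraction(p, s):
    """Share of the accelerated run time during which the GPU is busy."""
    if not 0.0 <= p <= 1.0 or s <= 0:
        raise ValueError("need 0 <= p <= 1 and s > 0")
    serial = 1.0 - p   # CPU-only part, unchanged by the GPU
    parallel = p / s   # offloaded part, shortened by the speedup s
    return parallel / (serial + parallel)


if __name__ == "__main__":
    # Sweep the 40%-80% data-parallelism range with an assumed 10x speedup.
    for p in (0.4, 0.6, 0.8):
        busy = gpu_busy_fraction(p, s=10.0)
        print(f"p={p:.0%}  GPU busy {busy:.0%}, idle {1 - busy:.0%}")
```

Under these assumptions, even at p = 0.8 with s = 10 the GPU is busy only about 29% of the time, which illustrates why sharing one GPU among several applications or nodes can raise utilization.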