Hadoop Streaming: Programming Hadoop without Java

- 1. Hadoop Streaming: Programming Hadoop without Java. Glenn K. Lockwood, Ph.D., User Services Group, San Diego Supercomputer Center, University of California San Diego. November 8, 2013. SAN DIEGO SUPERCOMPUTER CENTER UNIVERSITY OF CALIFORNIA, SAN DIEGO

- 2. Hadoop Streaming: HADOOP ARCHITECTURE RECAP

- 3. Map/Reduce Parallelism (figure: six tasks, task 0 through task 5, each working on its own block of data)

- 4. Magic of HDFS

- 5. Hadoop Workflow

- 6. Hadoop Processing Pipeline
    1. Map: convert raw input into key/value pairs on each node
    2. Shuffle/Sort: send all key/value pairs with the same key to the same reducer node
    3. Reduce: for each unique key, do something with all the corresponding values
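
To make the three stages concrete, here is a minimal single-process sketch of the pipeline in plain Python, using word counting as the example. It is not how Hadoop is implemented: there are no nodes, and the shuffle/sort stage is only an in-memory sort followed by grouping. The function names are illustrative.

```python
from itertools import groupby
from operator import itemgetter


def map_phase(lines):
    """Map: turn each raw input line into (key, value) pairs, here (word, 1)."""
    for line in lines:
        for word in line.split():
            yield word, 1


def shuffle_sort(pairs):
    """Shuffle/Sort: sort pairs by key so equal keys are adjacent, then group
    them so each key appears once with the list of all its values."""
    ordered = sorted(pairs, key=itemgetter(0))
    for key, group in groupby(ordered, key=itemgetter(0)):
        yield key, [value for _, value in group]


def reduce_phase(grouped):
    """Reduce: for each unique key, combine all of its values (here, sum them)."""
    for key, values in grouped:
        yield key, sum(values)


def run_pipeline(lines):
    """Chain the three stages and collect the results into a dict."""
    return dict(reduce_phase(shuffle_sort(map_phase(lines))))
```

For example, `run_pipeline(["the cat", "the hat"])` returns `{'cat': 1, 'hat': 1, 'the': 2}`. In Hadoop, the map and reduce stages run as many parallel tasks, and the shuffle moves data between nodes.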

- 7. Hadoop Streaming: WORDCOUNT EXAMPLES

- 8. Hadoop and Python
    - Hadoop streaming with Python mappers/reducers
        - portable
        - most difficult (or least difficult) to use
        - you are the glue between Python and Hadoop
    - mrjob (or others: hadoopy, dumbo, etc.)
        - comprehensive integration
        - Python interface to Hadoop streaming
        - analogous interface libraries exist in R, Perl
        - can interface directly with Amazon

- 9. Wordcount Example

- 10. Hadoop Streaming with Python
    - "Simplest" (most portable) method
    - Uses raw Python and Hadoop: you are the glue
    - `cat input.txt | mapper.py | sort | reducer.py > output.txt`: you provide these two scripts; Hadoop does the rest
    - Generalizable to any language you want (Perl, R, etc.)
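
Because Hadoop streaming only needs programs that read stdin and write stdout, the `cat | mapper | sort | reducer` pipeline can also be driven from Python. The sketch below is a hypothetical helper (`run_streaming_locally` is not part of Hadoop) that pipes text through any mapper and reducer command, with Python's `sorted()` standing in for `sort`. Note that `sorted()` compares by code point, so `You` sorts before `you`; the shell `sort` may order them differently depending on your locale.

```python
import subprocess
import sys


def run_streaming_locally(mapper_cmd, reducer_cmd, text):
    """Emulate `cat input | mapper | sort | reducer` on one machine.

    mapper_cmd and reducer_cmd are argument lists for subprocess.run();
    Python's sorted() stands in for the `sort` step.
    """
    mapped = subprocess.run(mapper_cmd, input=text, capture_output=True,
                            text=True, check=True).stdout
    # Sort whole lines so that equal keys end up adjacent, as `sort` would
    sorted_text = "".join(line + "\n" for line in sorted(mapped.splitlines()))
    reduced = subprocess.run(reducer_cmd, input=sorted_text,
                             capture_output=True, text=True, check=True).stdout
    return reduced
```

Assuming the two wordcount scripts sit in the current directory, a call such as `run_streaming_locally([sys.executable, "wordcount-streaming-mapper.py"], [sys.executable, "wordcount-streaming-reducer.py"], open("pg2701.txt").read())` would produce the word counts as one string.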

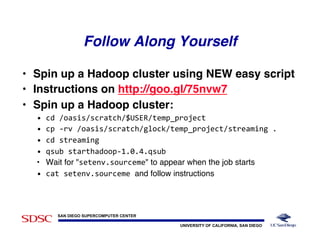

- 11. HANDS ON – Hadoop Streaming
    Located in streaming/streaming/:
    - wordcount-streaming-mapper.py: we'll look at this first
    - wordcount-streaming-reducer.py: we'll look at this second
    - run-wordcount.sh: all of the Hadoop commands needed to run this example. Run the script (`./run-wordcount.sh`) or paste each command line by line
    - pg2701.txt: the full text of Melville's Moby Dick

- 12. Wordcount: Hadoop streaming mapper

```python
#!/usr/bin/env python
import sys

# Read raw text from stdin and emit one "word<TAB>1" line per word
for line in sys.stdin:
    line = line.strip()
    keys = line.split()
    for key in keys:
        value = 1
        print('%s\t%d' % (key, value))
```

- 13. What One Mapper Does
    - line = `Call me Ishmael. Some years ago--never mind how long`
    - keys = `Call`, `me`, `Ishmael.`, `Some`, `years`, `ago--never`, `mind`, `how`, `long`
    - `emit.keyval(key, value)` for each key, sending (Call, 1), (me, 1), (Ishmael., 1), (Some, 1), (years, 1), (ago--never, 1), (mind, 1), (how, 1), (long, 1) to the reducers
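
The mapper's behavior on this one line can be checked without Hadoop. This sketch factors the streaming mapper's loop body into a function (`map_line` is an illustrative name) and prints the same tab-separated pairs. Splitting on whitespace leaves punctuation attached, so `Ishmael.` and `ago--never` are keys in their own right.

```python
def map_line(line):
    """Return the (key, value) pairs the wordcount mapper emits for one line."""
    return [(key, 1) for key in line.strip().split()]


pairs = map_line("Call me Ishmael. Some years ago--never mind how long")
for key, value in pairs:
    print("%s\t%d" % (key, value))  # same output format as the streaming mapper
```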

- 14. Reducer Loop
    - If this key is the same as the previous key,
        - add this key's value to our running total.
    - Otherwise,
        - print out the previous key's name and the running total,
        - reset our running total to 0,
        - add this key's value to the running total, and
        - "this key" is now considered the "previous key".

- 15. Wordcount: Streaming Reducer (1/2)

```python
#!/usr/bin/env python
import sys

last_key = None
running_total = 0

# Input arrives sorted by key, one "key<TAB>value" line at a time
for input_line in sys.stdin:
    input_line = input_line.strip()
    this_key, value = input_line.split("\t", 1)
    value = int(value)
    # (loop body continues on the next slide)
```

- 16. Wordcount: Streaming Reducer (2/2)

```python
    # (continuing inside the for loop)
    if last_key == this_key:
        # same key as the previous line: keep accumulating
        running_total += value
    else:
        # new key: flush the previous key's total, then start a new one
        if last_key is not None:
            print("%s\t%d" % (last_key, running_total))
        running_total = value
        last_key = this_key

# flush the final key (skipped when the input was empty)
if last_key is not None:
    print("%s\t%d" % (last_key, running_total))
```
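
Because this reducer only gives correct totals when equal keys arrive next to each other, it helps to test the running-total logic as a plain function. `reduce_sorted` below is a hypothetical refactoring of the slides' reducer that takes lines and returns output lines instead of reading stdin; the last test case shows that unsorted input splits one key's count across several output lines.

```python
def reduce_sorted(lines):
    """Running-total reducer over sorted 'key<TAB>value' lines.

    Same logic as the streaming reducer, but returns the output lines
    instead of printing them, so it can be tested without Hadoop.
    """
    output = []
    last_key = None
    running_total = 0
    for input_line in lines:
        this_key, value = input_line.strip().split("\t", 1)
        value = int(value)
        if last_key == this_key:
            running_total += value
        else:
            if last_key is not None:
                output.append("%s\t%d" % (last_key, running_total))
            running_total = value
            last_key = this_key
    if last_key is not None:
        output.append("%s\t%d" % (last_key, running_total))
    return output
```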

- 17. Testing Mappers/Reducers
    - Debugging Hadoop is not fun: test the mapper and reducer locally with a shell pipeline first

```
$ head -n100 pg2701.txt | ./wordcount-streaming-mapper.py | sort | ./wordcount-streaming-reducer.py
...
with 5
word, 1
world. 1
www.gutenberg.org 1
you 3
You 1
```

- 18. Launching Hadoop Streaming

```
$ hadoop dfs -copyFromLocal ./pg2701.txt mobydick.txt
$ hadoop jar /opt/hadoop/contrib/streaming/hadoop-streaming-1.1.1.jar \
    -D mapred.reduce.tasks=2 \
    -mapper "$(which python) $PWD/wordcount-streaming-mapper.py" \
    -reducer "$(which python) $PWD/wordcount-streaming-reducer.py" \
    -input mobydick.txt \
    -output output
$ hadoop dfs -cat output/part-* > ./output.txt
```
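
The same launch can be scripted from Python. The sketch below only builds the argument list for `subprocess.run()`; the helper name `streaming_command` is hypothetical, the jar path is copied from the slide and will differ between installations, and `sys.executable` plus `os.path.abspath()` play the roles of `$(which python)` and `$PWD`. The generic `-D` option stays ahead of the streaming-specific options, as on the slide.

```python
import os
import sys

# Path taken from the slide; it is site-specific.
STREAMING_JAR = "/opt/hadoop/contrib/streaming/hadoop-streaming-1.1.1.jar"


def streaming_command(mapper, reducer, input_path, output_path,
                      reduce_tasks=2, python=sys.executable, jar=STREAMING_JAR):
    """Build the `hadoop jar ...` argument list for a streaming job.

    Mapper and reducer scripts are made absolute (like $PWD/... on the slide)
    and run through an explicit Python interpreter (like $(which python)).
    """
    return [
        "hadoop", "jar", jar,
        "-D", "mapred.reduce.tasks=%d" % reduce_tasks,  # generic option first
        "-mapper", "%s %s" % (python, os.path.abspath(mapper)),
        "-reducer", "%s %s" % (python, os.path.abspath(reducer)),
        "-input", input_path,
        "-output", output_path,
    ]
```

On a machine with `hadoop` on the `PATH`, passing the result to `subprocess.run(..., check=True)` would start the job and raise an error if it fails.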

- 19. Hadoop with Python - mrjob
    - Mapper and reducer written as functions
    - Can serialize (pickle) objects to use as values
    - Presents a single key + all values at once
    - Extracts map/reduce errors from Hadoop for you
    - Hadoop runs entirely through Python:

```
$ ./wordcount-mrjob.py --jobconf mapred.reduce.tasks=2 -r hadoop hdfs:///user/glock/mobydick.txt --output-dir hdfs:///user/glock/output
```

- 20. HANDS ON - mrjob
    Located in streaming/mrjob:
    - wordcount-mrjob.py: contains both mapper and reducer code
    - run-wordcount-mrjob.sh: all of the Hadoop commands needed to run this example. Run the script (`./run-wordcount-mrjob.sh`) or paste each command line by line
    - pg2701.txt: the full text of Melville's Moby Dick

- 21. mrjob - Mapper
    The streaming mapper from slide 12 next to its mrjob equivalent: instead of looping over `sys.stdin` and printing, mrjob calls `mapper()` once per input line and the method `yield`s each key/value pair.

Streaming mapper:

```python
for line in sys.stdin:
    line = line.strip()
    keys = line.split()
    for key in keys:
        value = 1
        print('%s\t%d' % (key, value))
```

mrjob:

```python
#!/usr/bin/env python
from mrjob.job import MRJob

class MRwordcount(MRJob):
    def mapper(self, _, line):
        line = line.strip()
        keys = line.split()
        for key in keys:
            value = 1
            yield key, value

    def reducer(self, key, values):
        yield key, sum(values)

if __name__ == '__main__':
    MRwordcount.run()
```

- 22. mrjob - Reducer
    - Reducer gets one key and ALL of its values
    - No need to loop through key/value pairs or track the previous key
    - `values` is an iterator: use iterator-friendly functions such as `sum()`, or wrap it in `list()` if you need list methods

```python
class MRwordcount(MRJob):
    # mapper() as on the previous slide

    def reducer(self, key, values):
        # called once per key; values iterates over every value for that key
        yield key, sum(values)
```
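
To see what "one key and ALL values" means without installing mrjob, the sketch below drives a job-shaped class from plain Python. `WordCount` mirrors the method signatures of `MRwordcount`, and `run_local` is a hypothetical stand-in for a runner (it is not mrjob's API): it calls `mapper(None, line)` for each line, sorts the pairs by key, and calls `reducer` once per key with an iterator over that key's values.

```python
from itertools import groupby
from operator import itemgetter


class WordCount:
    """Plain-Python class with the same method shapes as MRwordcount."""

    def mapper(self, _, line):
        for key in line.strip().split():
            yield key, 1

    def reducer(self, key, values):
        # values is an iterator over every value emitted for this key
        yield key, sum(values)


def run_local(job, lines):
    """Hypothetical local runner: map every line, sort by key, then call the
    reducer once per key with an iterator over all of that key's values."""
    pairs = [kv for line in lines for kv in job.mapper(None, line)]
    pairs.sort(key=itemgetter(0))
    results = []
    for key, group in groupby(pairs, key=itemgetter(0)):
        values = (value for _, value in group)
        results.extend(job.reducer(key, values))
    return results
```

The reducer never compares keys or keeps a running total itself; grouping happens before it is called, which is exactly the bookkeeping the streaming reducer had to do by hand.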

- 23. mrjob – Job Launch
    - Run as a Python script like any other
    - Can pass Hadoop parameters (and many more!) in through Python

```
$ ./wordcount-mrjob.py --jobconf mapred.reduce.tasks=2 -r hadoop hdfs:///user/glock/mobydick.txt --output-dir hdfs:///user/glock/output
```

Default file locations are NOT on HDFS: copying input to and output from HDFS is done automatically. Without `--output-dir`, the default output action is to print the results to your screen.

- 24. Hadoop Streaming: VCF PARSING, A REAL EXAMPLE

- 25. VCF Parsing Problem
    - Variant Call Format (VCF) files are a standard in bioinformatics
    - Large files (> 10 GB), semi-structured
    - Format is a moving target, BUT parsing libraries exist (PyVCF, VCFtools)
    - Large VCFs still take too long to process serially

- 26. VCF File Format
    The structure of the entire header must remain intact to describe each variant record. (`^I` marks a tab character; long lines are cut off as on the slide.)

```
##fileformat=VCFv4.1
##FILTER=<ID=LowQual,Description="Low quality">
##FORMAT=<ID=AD,Number=.,Type=Integer,Description="Allelic depths for the
##FORMAT=<ID=DP,Number=1,Type=Integer,Description="Approximate read depth
...
#CHROM^IPOS^IID^IREF^IALT^IQUAL^IFILTER^IINFO^IFORMAT^IHT020en^IHT0
1^I10186^I.^IT^IG^I45.44^I.^IAC=2;AF=0.500;AN=4;BaseQRankSum=-0.584;DP=43
1^I10198^I.^IT^IG^I33.46^I.^IAC=2;AF=0.500;AN=4;BaseQRankSum=0.277;DP=51
1^I10279^I.^IT^IG^I48.49^I.^IAC=2;AF=0.500;AN=4;BaseQRankSum=1.855;DP=28
1^I10389^I.^IAC^IA^I288.40^I.^IAC=2;AF=0.500;AN=4;BaseQRankSum=2.540;DP=
...
```
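
Each record line is tab-separated text, so its fixed columns can be pulled apart with ordinary string methods. The sketch below is a minimal hand-rolled parser, not PyVCF: it names the eight fixed columns, ignores FORMAT and the sample columns, and leaves INFO values as strings. Turning them into typed values (integers, per-allele lists) requires the `Type=` and `Number=` declarations in the header's `##INFO` lines, which is one reason the header has to travel with the records. The sample line in the test is the first record above, truncated as on the slide.

```python
VCF_COLUMNS = ["CHROM", "POS", "ID", "REF", "ALT", "QUAL", "FILTER", "INFO"]


def parse_info(info):
    """Split an INFO string like 'AC=2;AF=0.500' into a dict of strings.

    Flag entries that have no '=' map to True.
    """
    fields = {}
    for entry in info.split(";"):
        if "=" in entry:
            name, value = entry.split("=", 1)
            fields[name] = value
        elif entry:
            fields[entry] = True
    return fields


def parse_record(line):
    """Name the eight fixed, tab-separated VCF columns and parse INFO."""
    values = line.rstrip("\n").split("\t")
    record = dict(zip(VCF_COLUMNS, values))
    record["POS"] = int(record["POS"])
    record["ALT"] = record["ALT"].split(",")  # multiple ALT alleles are comma-separated
    record["INFO"] = parse_info(record["INFO"])
    return record
```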

- 27. Strategy: Expand the Pipeline
    1. Preprocess VCF to separate header
    2. Map
        1. read in header to make sense of records
        2. filter out useless records
        3. generate key/value pairs for interesting variants
    3. Sort/Shuffle
    4. Reduce (if necessary)
    5. Postprocess (upload to PostgreSQL)
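
Step 1 can be done in a streaming fashion, because VCF header lines all start with `#` and come before the first record. This sketch (the function name is illustrative, and it is not the workshop's `preprocess.py`) stops reading at the first record, so a multi-gigabyte VCF is not scanned in full just to get its header.

```python
from itertools import takewhile


def extract_header(vcf_lines):
    """Return the leading '#' lines ('##' meta lines plus the '#CHROM' line).

    takewhile stops at the first variant record, so the rest of a large
    file is never read.
    """
    return list(takewhile(lambda line: line.startswith("#"), vcf_lines))
```

For example, opening `sample.vcf` as `src` and `header.txt` as `dst`, then calling `dst.writelines(extract_header(src))`, would write the kind of header file the mapper reads.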

- 28. HANDS ON - VCF
    Located in streaming/vcf/:
    - preprocess.py: extracts the header from the VCF file
    - mapper.py: simple PyVCF-based mapper
    - run-parsevcf.sh: commands to launch the simple VCF parser example
    - sample.vcf: sample VCF (cancer)
    - parsevcf.py: full preprocess+map+reduce+postprocess application
    - run-parsevcf-full.sh: commands to run the full pre+map+red+post pipeline

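- 29. Our Hands-On Version: Mapper

```python
#!/usr/bin/env python
import sys

import vcf  # PyVCF

# vcfHeader (path to the extracted header file) and target_af (allele-frequency
# threshold) are set elsewhere in the script; that code is not shown on the slide.

# Let PyVCF parse the metadata from the header file...
vcf_reader = vcf.Reader(open(vcfHeader, 'r'))
# ...then switch its input to stdin, skipping blank lines and header lines
vcf_reader._reader = sys.stdin
vcf_reader.reader = (line.rstrip() for line in vcf_reader._reader
                     if line.rstrip() and line[0] != '#')
# (continued on the next slide)
```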

- 30. Our Hands-On Version: Mapper (continued)

```python
for record in vcf_reader:
    try:
        # Emit one line per ALT allele whose allele frequency exceeds
        # target_af; POS comes first so it acts as the streaming key
        for idx, af in enumerate(record.INFO['AF']):
            if af > target_af:
                print("%d\t%s\t%d\t%s\t%s\t%.2f\t%d\t%d" % (
                    record.POS, record.CHROM, record.POS, record.REF,
                    record.ALT[idx], af, record.INFO['AC'][idx],
                    record.INFO['AN']))
    except KeyError:
        # records without AF, AC, or AN in their INFO field are skipped
        pass
```
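
The per-allele logic in this loop can be exercised without PyVCF or Hadoop. In the sketch below, `format_variants` is a hypothetical helper that takes the fields PyVCF would provide (with `AF` and `AC` as per-allele lists and `AN` as an integer, as the slide's indexing implies) and returns the lines the mapper would print. It pairs each ALT allele with its AF using `zip()`, which quietly stops at the shorter list where the original would raise `IndexError`.

```python
def format_variants(chrom, pos, ref, alts, info, target_af):
    """Return the tab-separated lines the mapper would print for one record.

    One line per ALT allele whose AF exceeds target_af; POS leads each line
    so it acts as the streaming key. Records missing AF/AC/AN yield nothing.
    """
    try:
        return [
            "%d\t%s\t%d\t%s\t%s\t%.2f\t%d\t%d"
            % (pos, chrom, pos, ref, alt, af, info["AC"][idx], info["AN"])
            for idx, (alt, af) in enumerate(zip(alts, info["AF"]))
            if af > target_af
        ]
    except KeyError:
        return []
```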

- 31. Our Hands-On Version: Reducer
    - No reduction step: the reducer can be turned off entirely by setting `mapred.reduce.tasks=0`. With zero reduce tasks the job is map-only, the `-reducer` script is not run, and mapper output is written straight to the output directory.

```
hadoop jar $HADOOP_HOME/contrib/streaming/hadoop-streaming-*.jar \
    -D mapred.reduce.tasks=0 \
    -mapper "$(which python) $PWD/parsevcf.py -m $PWD/header.txt,0.30" \
    -reducer "$(which python) $PWD/parsevcf.py -r" \
    -input vcfparse-input/sample.vcf \
    -output vcfparse-output
```
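
The command runs one script, `parsevcf.py`, in both roles: `-m` with a header path and an AF threshold packed into one comma-separated argument, or `-r`. The slide does not show how the script reads these flags; the sketch below is one hypothetical way to parse them with `argparse`, splitting on the last comma so only the threshold is separated from the path.

```python
import argparse


def parse_args(argv):
    """Hypothetical CLI for a script that acts as mapper (-m HEADER,AF) or
    reducer (-r), modeled on the flags shown on the slide."""
    parser = argparse.ArgumentParser(prog="parsevcf.py")
    mode = parser.add_mutually_exclusive_group(required=True)
    mode.add_argument("-m", dest="map_args", metavar="HEADER,AF",
                      help="run as mapper: header file path and AF threshold")
    mode.add_argument("-r", dest="reducer", action="store_true",
                      help="run as reducer")
    args = parser.parse_args(argv)
    if args.map_args is not None:
        # rsplit on the last comma so only the threshold is split off the path
        header_path, af = args.map_args.rsplit(",", 1)
        return "map", header_path, float(af)
    return "reduce", None, None
```

Packing both mapper parameters into a single argument keeps the quoted `-mapper` string on the command line short, at the cost of forbidding commas in the threshold.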

- 32. No Reducer – What's the Point?
    - 8-node test with two mappers per node (16 mappers in total): 9x speedup
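
As a rough reading of this number, assuming the 9x is measured against a single serial run and counting each of the 16 mappers as one worker, the parallel efficiency works out to:

$$
p = 8 \times 2 = 16, \qquad E = \frac{S}{p} = \frac{9}{16} \approx 0.56
$$

Under those assumptions, the map-only job achieved a little over half of ideal linear scaling.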