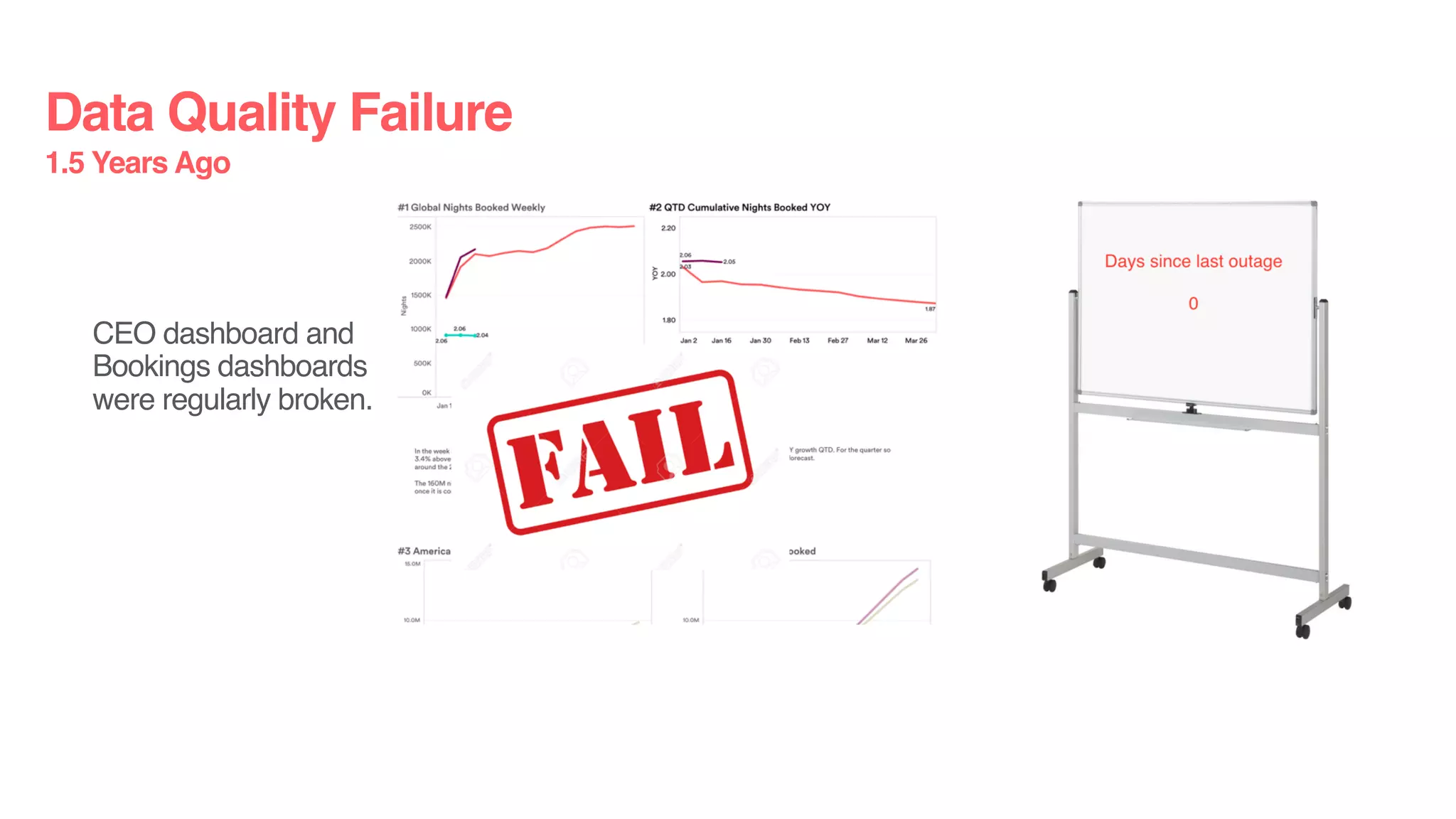

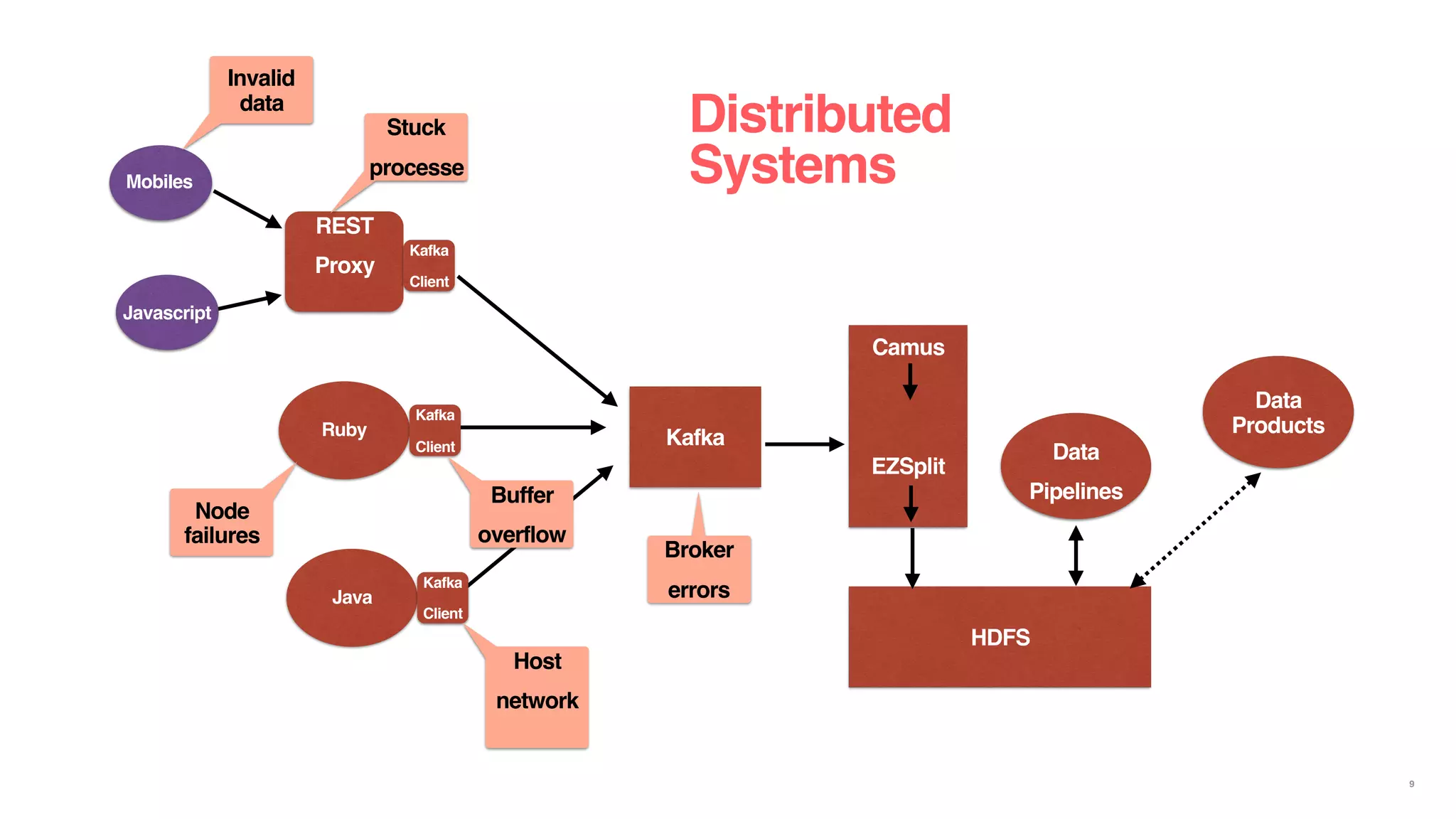

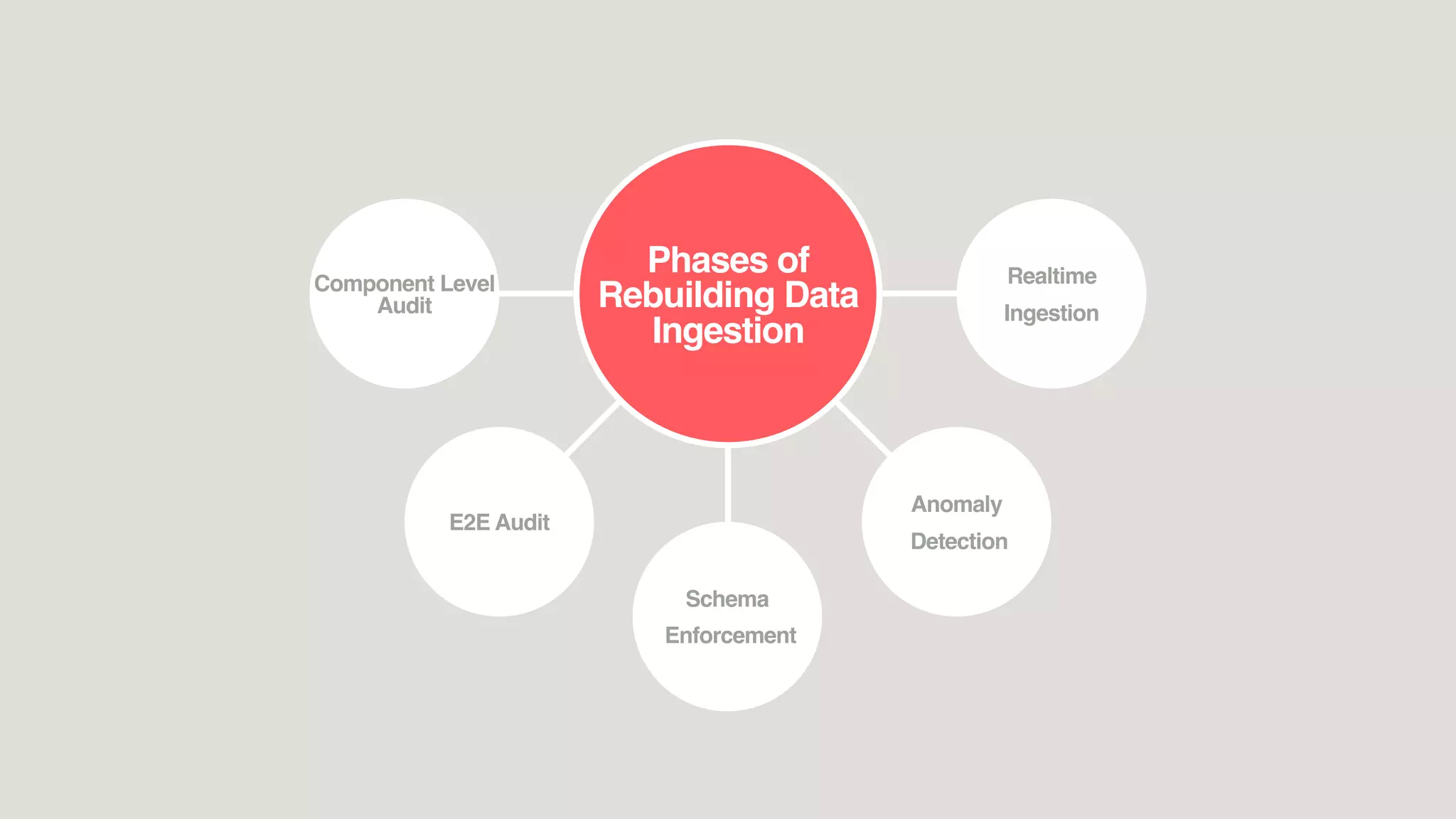

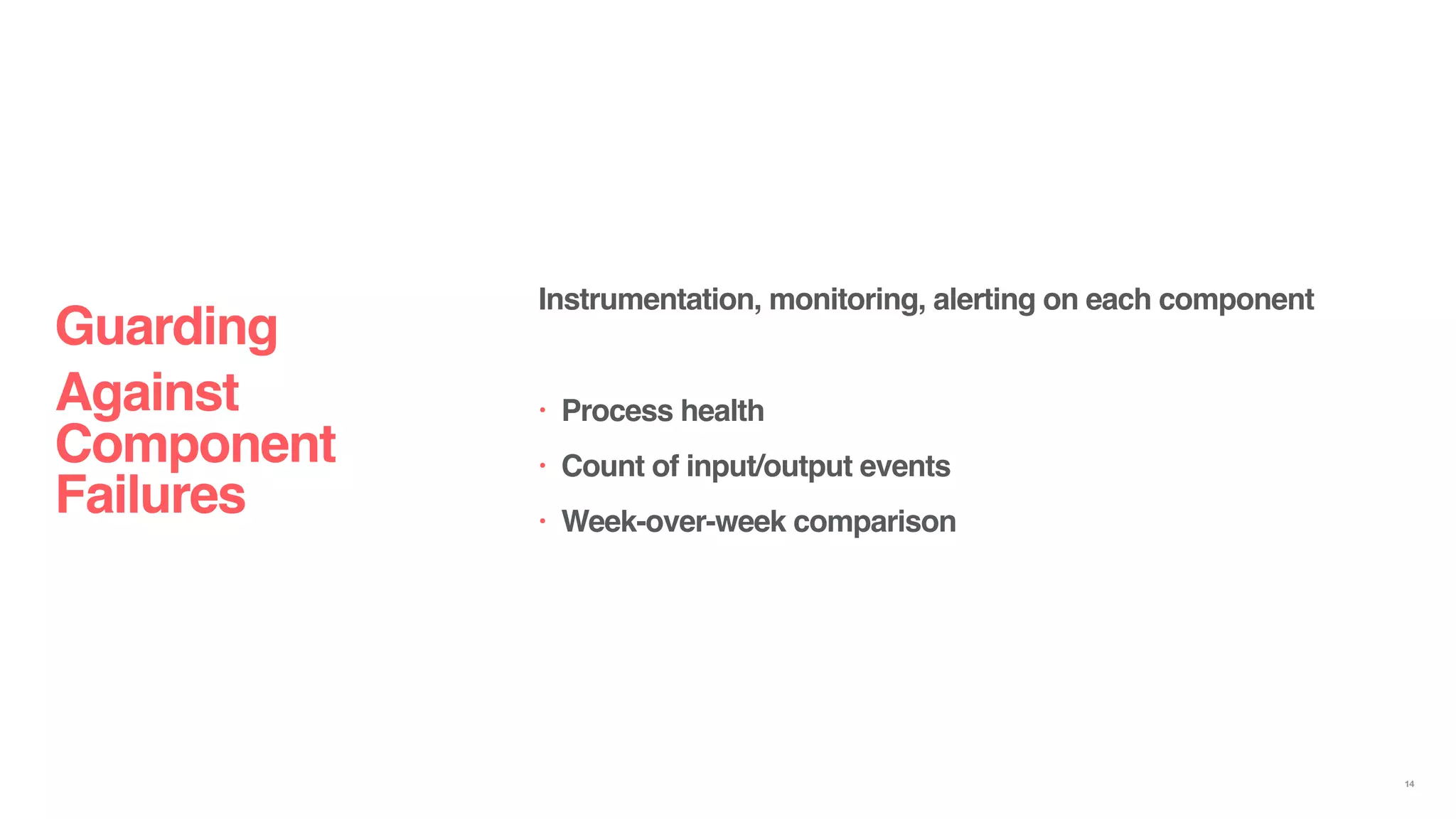

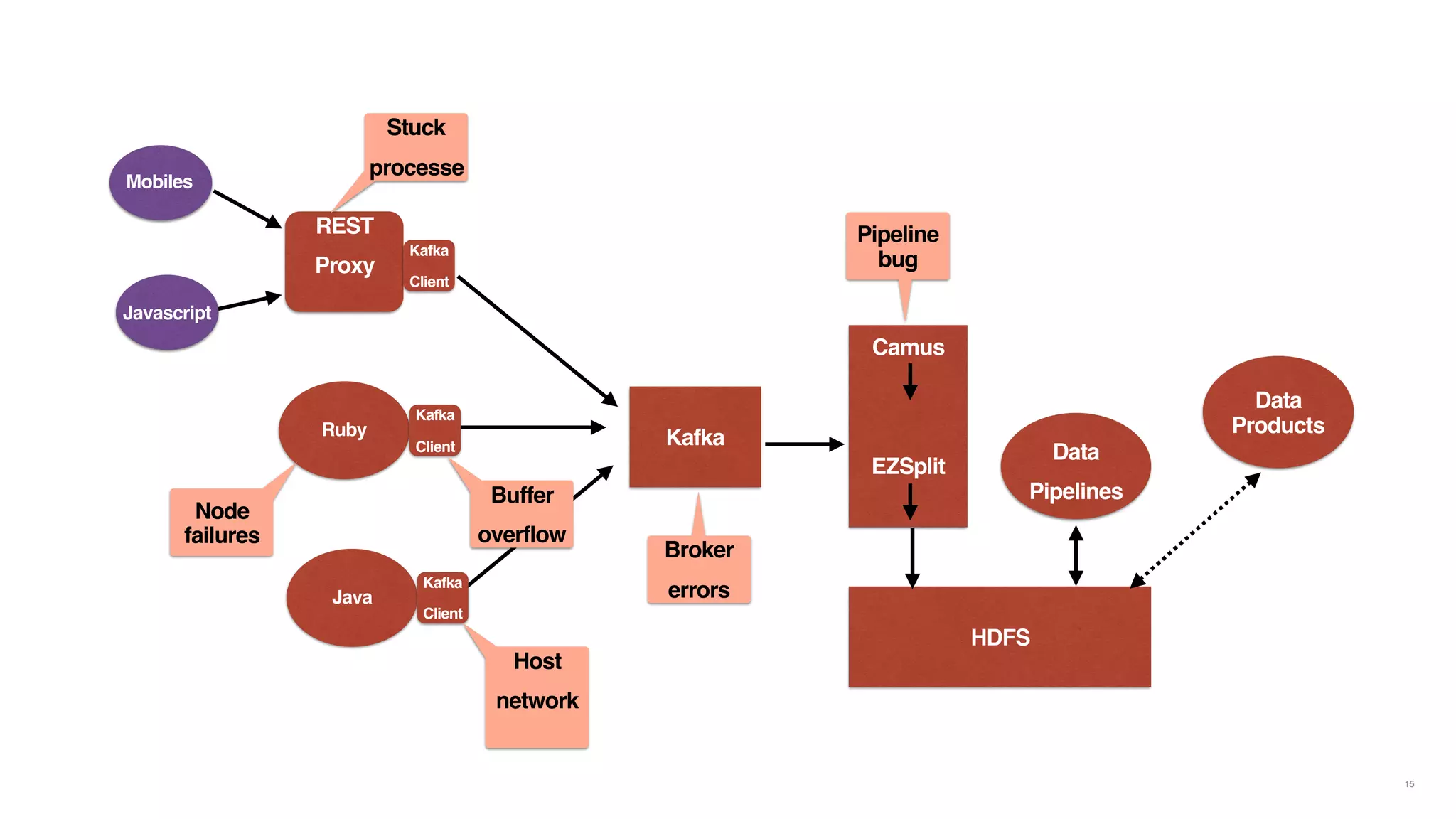

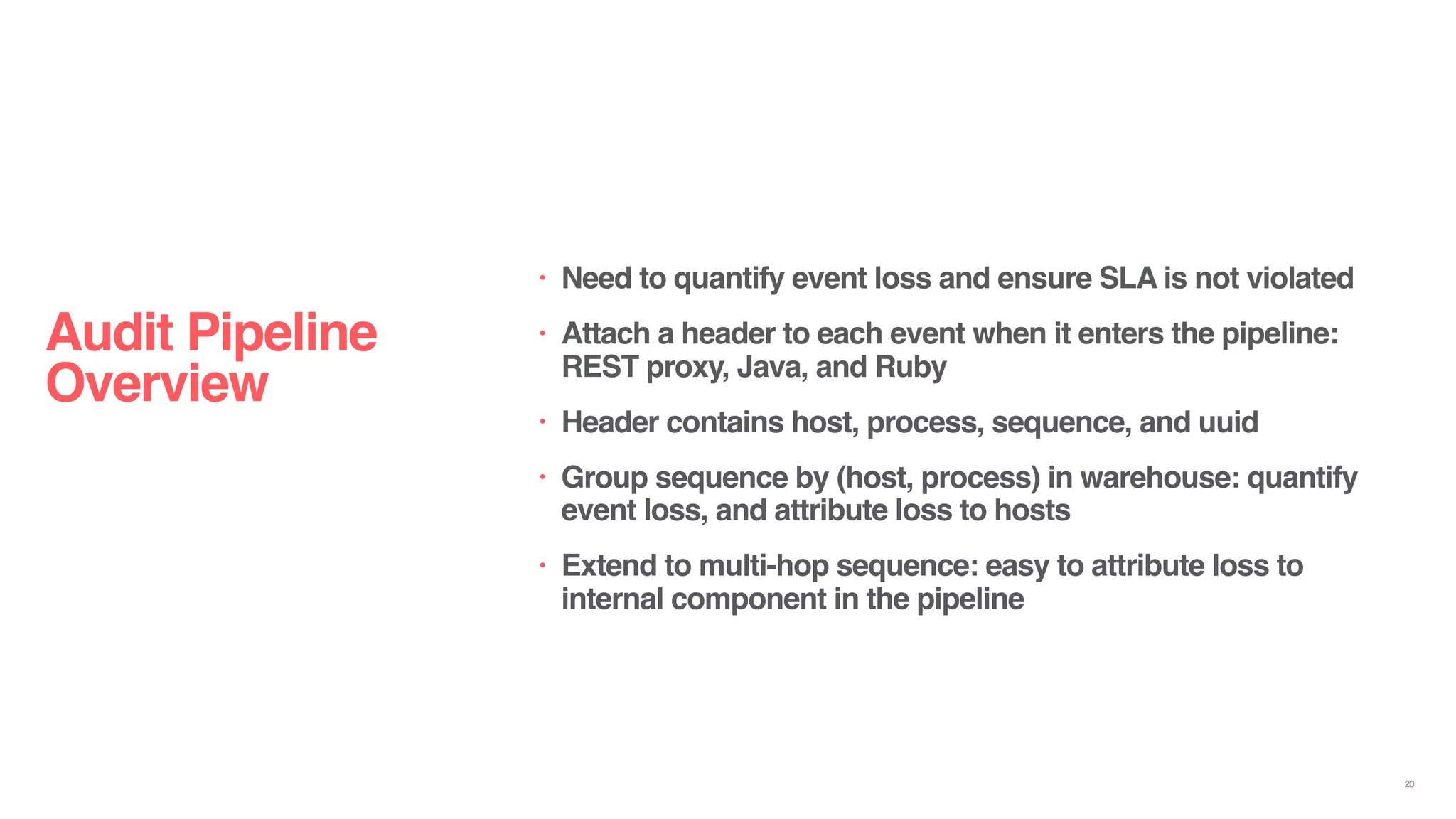

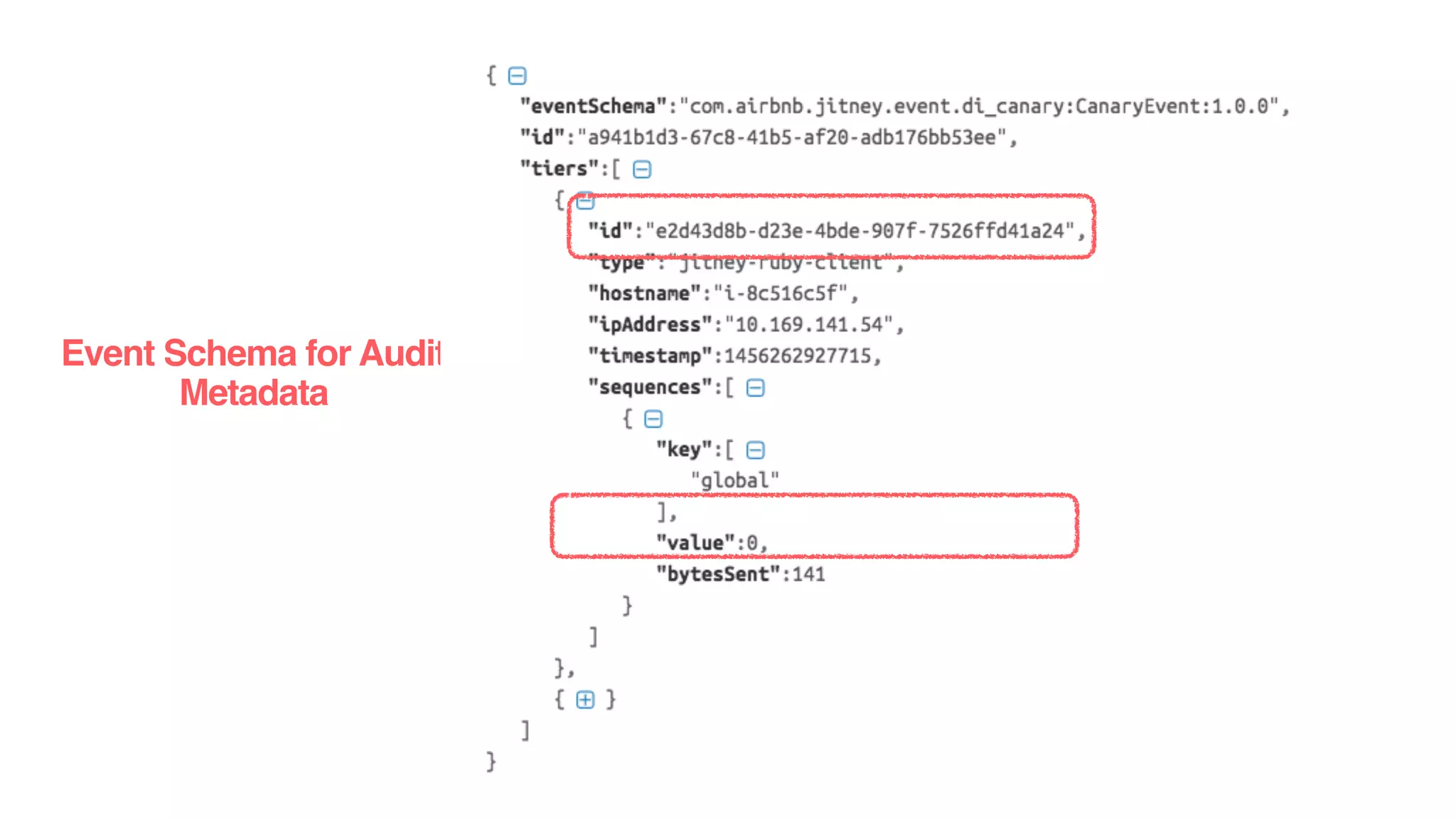

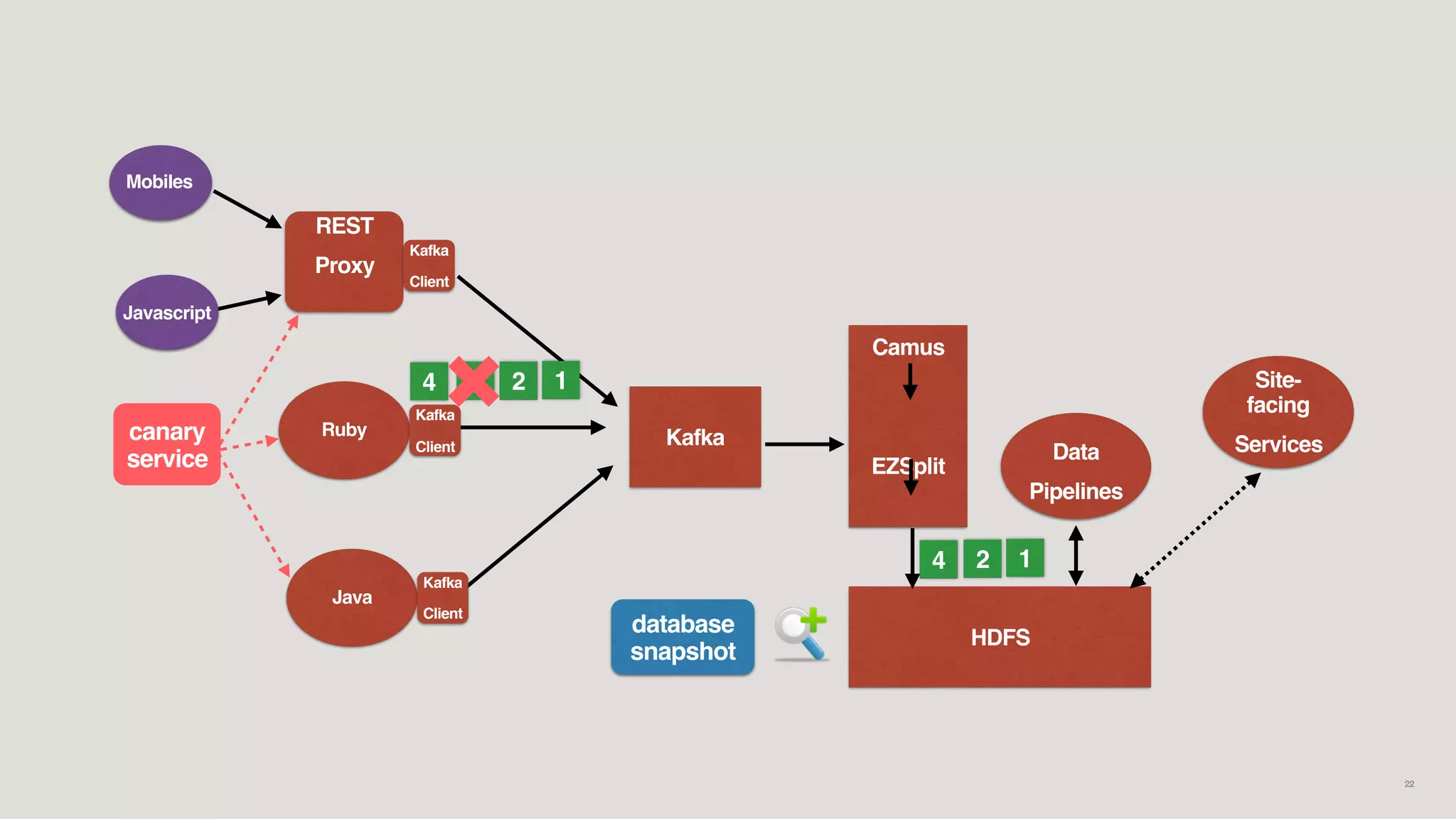

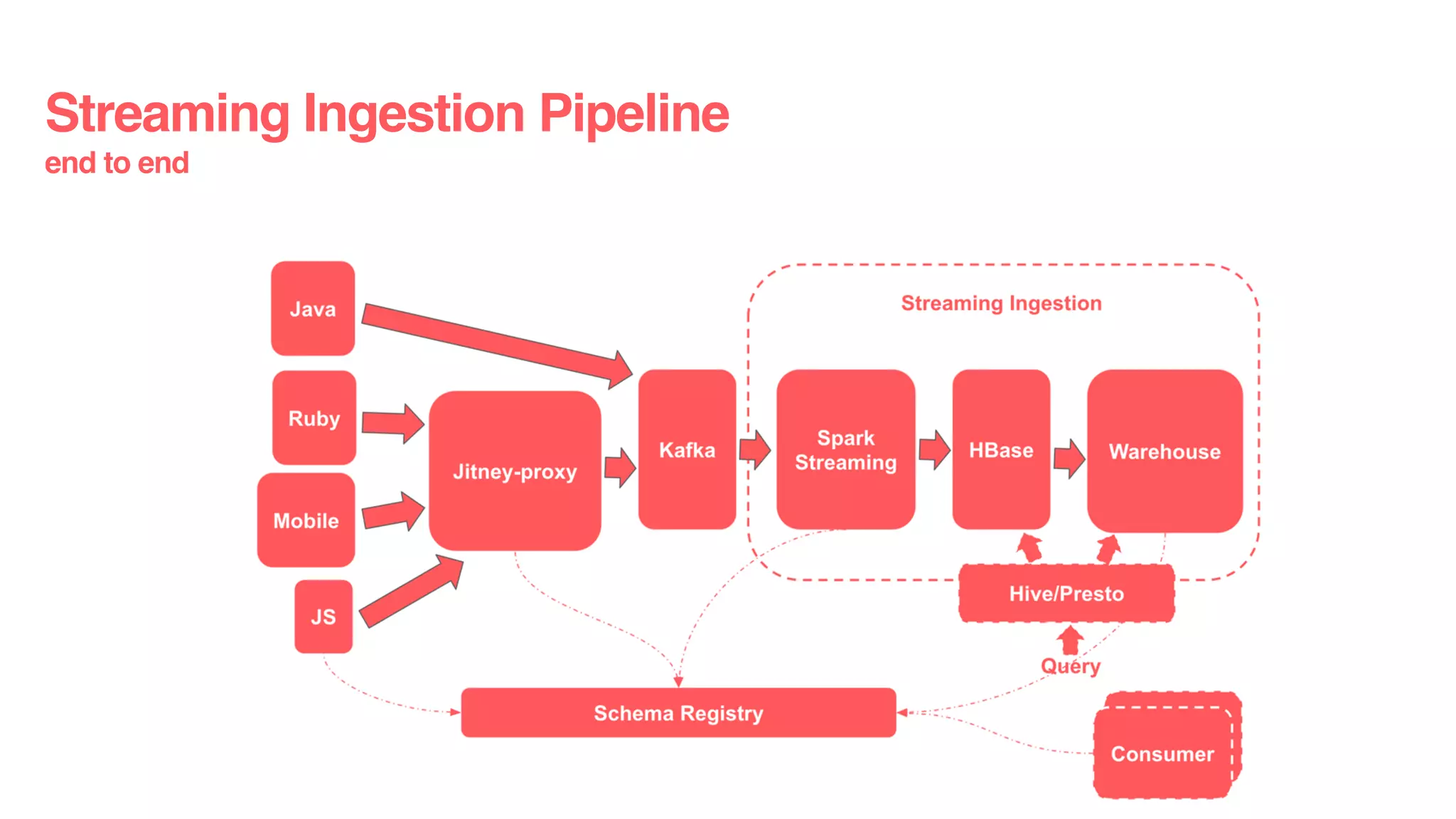

The document discusses reliable and scalable data ingestion at Airbnb. It describes the challenges the team previously faced with unreliable, low-quality data, then outlines the five phases taken to rebuild the data ingestion system for reliability: 1) auditing each component, 2) auditing the end-to-end system, 3) enforcing schemas, 4) implementing anomaly detection, and 5) building a real-time ingestion pipeline. The new system ingests over 5 billion events per day with fewer than 100 events lost.

![HBase Row Key](https://image.slidesharecdn.com/june29500airbnbzhangputtaswamy-160711181834/75/Reliable-and-Scalable-Data-Ingestion-at-Airbnb-40-2048.jpg)

HBase Row Key

• Event key = `event_type.event_name.event_uuid`

Ex: `air_event.canaryevent.016230ae-a3d8-434e`

• Shard id = `Hash(Event key) % Shard_num`

• Shard key = `Region_start_keys[Shard_id]`

Ex: `0000000`

• Row key = `Shard_key.Event_key`

Ex: `0000000.air_events.canaryevent.016230-a3db-434e`
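The row-key construction on the HBase Row Key slide can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the slide does not name the hash function, so MD5 stands in as a stable hash; the `REGION_START_KEYS` values beyond `0000000` are made up; and `Shard_num` is assumed to equal the number of region start keys. The function names are hypothetical, not taken from Airbnb's code.

```python
import hashlib

# Hypothetical region start keys. Only "0000000" appears on the slide;
# the other values are placeholders for illustration.
REGION_START_KEYS = ["0000000", "1000000", "2000000", "3000000"]


def event_key(event_type: str, event_name: str, event_uuid: str) -> str:
    """Event key = event_type.event_name.event_uuid."""
    return f"{event_type}.{event_name}.{event_uuid}"


def shard_id(key: str, shard_num: int) -> int:
    """Shard id = Hash(Event key) % Shard_num.

    Assumption: MD5 is used as the hash because it gives the same result
    in every process (Python's built-in hash() is salted for strings).
    """
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_num


def row_key(event_type: str, event_name: str, event_uuid: str,
            region_start_keys=REGION_START_KEYS) -> str:
    """Row key = Shard_key.Event_key, with Shard_key = Region_start_keys[Shard_id]."""
    ek = event_key(event_type, event_name, event_uuid)
    # Assumption: Shard_num equals the number of region start keys.
    sid = shard_id(ek, len(region_start_keys))
    return f"{region_start_keys[sid]}.{ek}"


if __name__ == "__main__":
    print(row_key("air_events", "canaryevent", "016230ae-a3d8-434e"))
```

Because the shard prefix is derived deterministically from the event key, the same event always maps to the same row, while different events spread across regions instead of piling onto one. That matches the general HBase practice of prefixing keys to avoid write hotspots; whether the original system relies on this for deduplication is not stated on the slide.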